Ke Zhuang

LPUWF-LDM: Enhanced Latent Diffusion Model for Precise Late-phase UWF-FA Generation on Limited Dataset

Sep 01, 2024

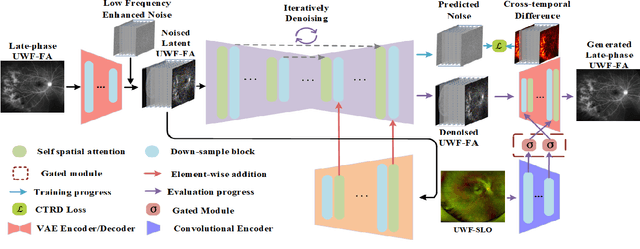

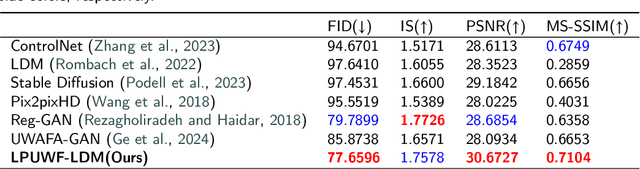

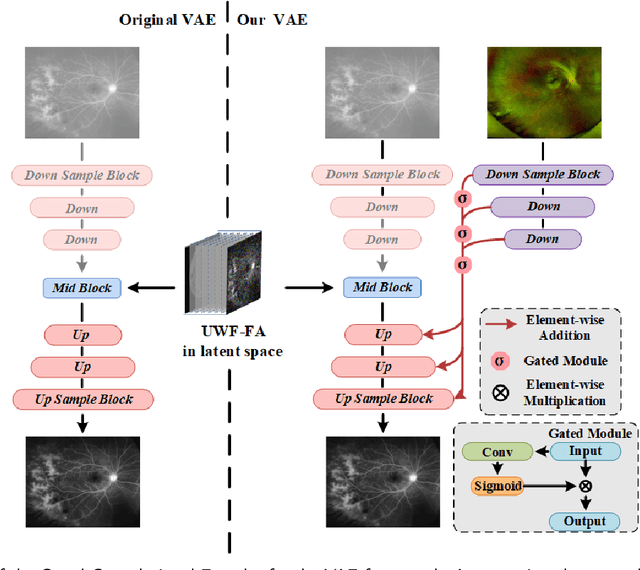

Abstract: Ultra-Wide-Field Fluorescein Angiography (UWF-FA) enables precise identification of ocular diseases but relies on injected sodium fluorescein, which is potentially harmful. Existing research has developed methods to generate UWF-FA from Ultra-Wide-Field Scanning Laser Ophthalmoscopy (UWF-SLO) to reduce the adverse reactions associated with injections. However, these methods have been less effective in producing high-quality late-phase UWF-FA, particularly in lesion areas and fine details. Two primary challenges hinder the generation of high-quality late-phase UWF-FA: the scarcity of paired UWF-SLO and early/late-phase UWF-FA datasets, and the need for realistic generation at lesion sites and potential blood leakage regions. This study introduces an improved latent diffusion model framework to generate high-quality late-phase UWF-FA from limited paired UWF images. To address these challenges, our approach employs a module utilizing Cross-temporal Regional Difference Loss, which encourages the model to focus on the differences between early and late phases. Additionally, we introduce a low-frequency enhanced noise strategy in the diffusion forward process to improve the realism of medical images. To further enhance the mapping capability of the variational autoencoder module, especially with limited datasets, we implement a Gated Convolutional Encoder to extract additional information from conditional images. Our Latent Diffusion Model for Ultra-Wide-Field Late-Phase Fluorescein Angiography (LPUWF-LDM) effectively reconstructs fine details in late-phase UWF-FA and achieves state-of-the-art results compared to other existing methods when working with limited datasets. Our source code is available at: https://github.com/Tinysqua/****.
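The abstract does not say how the low-frequency enhanced noise is constructed, so the following is only a minimal sketch of one common way to bias diffusion noise toward low frequencies. It is not the authors' implementation. The function name `low_freq_enhanced_noise` and the parameters `block` and `weight` are hypothetical. The sketch blends per-pixel Gaussian noise with noise that is constant over coarse blocks, choosing the coefficients so that each pixel keeps unit variance.

```python
import math
import random


def low_freq_enhanced_noise(height, width, block=4, weight=0.3, seed=None):
    """Return a height x width grid of noise with extra low-frequency energy.

    Assumption (not from the paper): the low-frequency part is modelled as
    Gaussian noise sampled once per block x block patch and repeated over
    the patch. `weight` in [0, 1] is the share given to that coarse noise.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    rng = random.Random(seed)

    # Coarse grid: one standard normal sample per block x block patch.
    coarse_h = math.ceil(height / block)
    coarse_w = math.ceil(width / block)
    coarse = [[rng.gauss(0.0, 1.0) for _ in range(coarse_w)]
              for _ in range(coarse_h)]

    # Both parts are independent with unit variance, so coefficients
    # satisfying a^2 + weight^2 = 1 keep the per-pixel variance at 1.
    a = math.sqrt(1.0 - weight ** 2)

    noise = []
    for y in range(height):
        row = []
        for x in range(width):
            fine = rng.gauss(0.0, 1.0)           # per-pixel (high-frequency) part
            low = coarse[y // block][x // block]  # shared (low-frequency) part
            row.append(a * fine + weight * low)
        noise.append(row)
    return noise
```

In a diffusion forward process, a grid like this would stand in for the standard Gaussian sample added at each step. Setting `weight=0.0` recovers ordinary per-pixel noise.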

Generic Objects as Pose Probes for Few-Shot View Synthesis

Sep 01, 2024

Abstract: Radiance fields, including NeRFs and 3D Gaussians, demonstrate great potential in high-fidelity rendering and scene reconstruction, but they require a substantial number of posed images as inputs. COLMAP is frequently employed as a preprocessing step to estimate poses, but it needs a large number of feature matches to operate effectively and struggles with scenes characterized by sparse features, large baselines between images, or a limited number of input images. We aim to tackle few-view NeRF reconstruction using only 3 to 6 unposed scene images. Traditional methods often rely on calibration boards, which rarely appear in everyday images. We propose a novel idea of utilizing everyday objects, commonly found in both images and real life, as "pose probes". The probe object is automatically segmented by SAM, and its shape is initialized from a cube. We apply a dual-branch volume rendering optimization (object NeRF and scene NeRF) to constrain the pose optimization and jointly refine the geometry. Specifically, object poses of two views are first estimated by PnP matching in an SDF representation, and these serve as initial poses. PnP matching, requiring only a few features, is suitable for feature-sparse scenes. Additional views are incrementally incorporated to refine poses from preceding views. In experiments, PoseProbe achieves state-of-the-art performance in both pose estimation and novel view synthesis across multiple datasets. We demonstrate its effectiveness, particularly in few-view and large-baseline scenes where COLMAP struggles. In ablations, using different objects in a scene yields comparable performance. Our project page is available at: https://zhirui-gao.github.io/PoseProbe.github.io/
