Kang Liao

From Editor to Dense Geometry Estimator

Sep 04, 2025

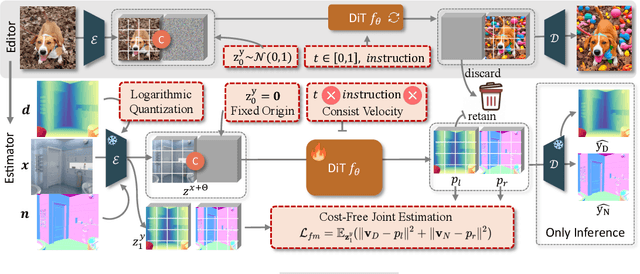

Abstract:Leveraging visual priors from pre-trained text-to-image (T2I) generative models has shown success in dense prediction. However, dense prediction is inherently an image-to-image task, suggesting that image editing models, rather than T2I generative models, may be a more suitable foundation for fine-tuning. Motivated by this, we conduct a systematic analysis of the fine-tuning behaviors of both editors and generators for dense geometry estimation. Our findings show that editing models possess inherent structural priors, which enable them to converge more stably by "refining" their innate features, and ultimately achieve higher performance than their generative counterparts. Based on these findings, we introduce FE2E, a framework that pioneers the adaptation of an advanced editing model based on the Diffusion Transformer (DiT) architecture for dense geometry prediction. Specifically, to tailor the editor for this deterministic task, we reformulate the editor's original flow matching loss into the "consistent velocity" training objective. We also use logarithmic quantization to resolve the precision conflict between the editor's native BFloat16 format and the high precision demand of our tasks. Additionally, we leverage the DiT's global attention for a cost-free joint estimation of depth and normals in a single forward pass, enabling their supervisory signals to mutually enhance each other. Without scaling up the training data, FE2E achieves impressive performance improvements in zero-shot monocular depth and normal estimation across multiple datasets. Notably, it achieves over 35% performance gains on the ETH3D dataset and outperforms the DepthAnything series, which is trained on 100× the data. The project page is available at https://amap-ml.github.io/FE2E/.
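To make the BFloat16 precision issue concrete, here is a minimal sketch of why a logarithmic encoding can help. It is not the FE2E implementation: the depth range (`d_min`, `d_max`), the mapping to [-1, 1], and all function names are assumptions chosen for illustration, and BFloat16 is simulated by rounding a float32 bit pattern.

```python
import math
import struct


def to_bfloat16(x: float) -> float:
    """Round a value to the nearest bfloat16 (round-to-nearest-even), returned as a float."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # rounding bias for the 16 dropped bits
    bits &= 0xFFFF0000                   # keep sign, exponent, 7 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# Hypothetical linear encoding of depth into [-1, 1].
def encode_linear(d: float, d_min: float, d_max: float) -> float:
    return 2.0 * (d - d_min) / (d_max - d_min) - 1.0


def decode_linear(v: float, d_min: float, d_max: float) -> float:
    return (v + 1.0) / 2.0 * (d_max - d_min) + d_min


# Hypothetical logarithmic encoding of depth into [-1, 1].
def encode_log(d: float, d_min: float, d_max: float) -> float:
    return 2.0 * math.log(d / d_min) / math.log(d_max / d_min) - 1.0


def decode_log(v: float, d_min: float, d_max: float) -> float:
    return d_min * math.exp((v + 1.0) / 2.0 * math.log(d_max / d_min))


def relative_error(d: float, encode, decode, d_min: float = 0.1, d_max: float = 100.0) -> float:
    """Relative depth error after a round trip through simulated bfloat16."""
    recovered = decode(to_bfloat16(encode(d, d_min, d_max)), d_min, d_max)
    return abs(recovered - d) / d


if __name__ == "__main__":
    for depth in (0.2, 1.0, 10.0, 80.0):
        print(depth,
              relative_error(depth, encode_linear, decode_linear),
              relative_error(depth, encode_log, decode_log))
```

Under these assumptions, the linear encoding loses most of its precision for near-range depths, because many small depths collapse onto the few BFloat16 values close to -1, while the logarithmic encoding keeps the relative error roughly uniform across the range.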

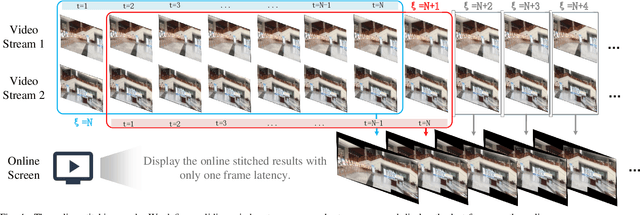

StabStitch++: Unsupervised Online Video Stitching with Spatiotemporal Bidirectional Warps

May 08, 2025

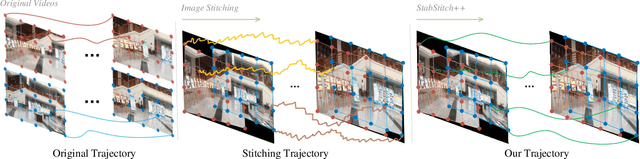

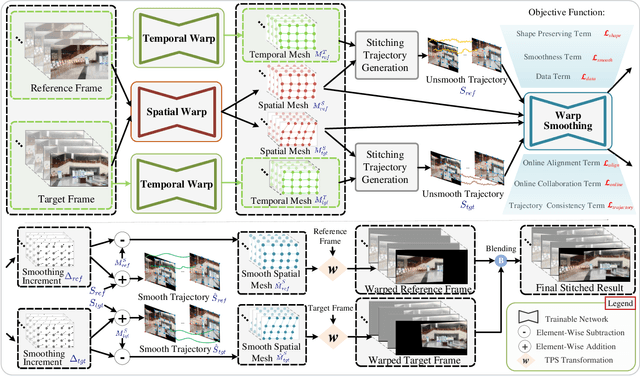

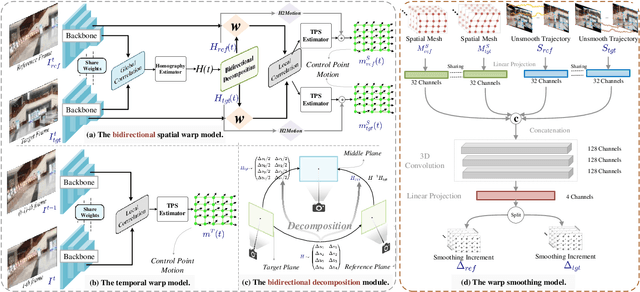

Abstract:We retarget video stitching to an emerging issue, named warping shake, which unveils the temporal content shakes induced by sequentially unsmooth warps when extending image stitching to video stitching. Even if the input videos are stable, the stitched video can still suffer from undesired warping shakes that affect the visual experience. To address this issue, we propose StabStitch++, a novel video stitching framework to realize spatial stitching and temporal stabilization with unsupervised learning simultaneously. First, different from existing learning-based image stitching solutions that typically warp one image to align with another, we suppose a virtual midplane between original image planes and project them onto it. Concretely, we design a differentiable bidirectional decomposition module to disentangle the homography transformation and incorporate it into our spatial warp, evenly spreading alignment burdens and projective distortions across two views. Then, inspired by camera paths in video stabilization, we derive the mathematical expression of stitching trajectories in video stitching by elaborately integrating spatial and temporal warps. Finally, a warp smoothing model is presented to produce stable stitched videos with a hybrid loss to simultaneously encourage content alignment, trajectory smoothness, and online collaboration. Compared with StabStitch, which sacrifices alignment for stabilization, StabStitch++ makes no compromise and optimizes both of them simultaneously, especially in the online mode. To establish an evaluation benchmark and train the learning framework, we build a video stitching dataset with a rich diversity in camera motions and scenes. Experiments show that StabStitch++ surpasses current solutions in stitching performance, robustness, and efficiency, offering compelling advancements in this field by building a real-time online video stitching system.

You Need a Transition Plane: Bridging Continuous Panoramic 3D Reconstruction with Perspective Gaussian Splatting

Apr 12, 2025

Abstract:Recently, reconstructing scenes from a single panoramic image using advanced 3D Gaussian Splatting (3DGS) techniques has attracted growing interest. Panoramic images offer a 360° × 180° field of view (FoV), capturing the entire scene in a single shot. However, panoramic images introduce severe distortion, making it challenging to render 3D Gaussians into 2D distorted equirectangular space directly. Converting equirectangular images to cubemap projections partially alleviates this problem but introduces new challenges, such as projection distortion and discontinuities across cube-face boundaries. To address these limitations, we present a novel framework, named TPGS, to bridge continuous panoramic 3D scene reconstruction with perspective Gaussian splatting. Firstly, we introduce a Transition Plane between adjacent cube faces to enable smoother transitions in splatting directions and mitigate optimization ambiguity in the boundary region. Moreover, an intra-to-inter face optimization strategy is proposed to enhance local details and restore visual consistency across cube-face boundaries. Specifically, we optimize 3D Gaussians within individual cube faces and then fine-tune them in the stitched panoramic space. Additionally, we introduce a spherical sampling technique to eliminate visible stitching seams. Extensive experiments on indoor and outdoor, egocentric, and roaming benchmark datasets demonstrate that our approach outperforms existing state-of-the-art methods. Code and models will be available at https://github.com/zhijieshen-bjtu/TPGS.
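As background for the equirectangular-to-cubemap conversion mentioned above, the following sketch maps an equirectangular pixel to a unit viewing direction and then to the cube face that direction falls on. It is not part of TPGS; the axis convention (+z forward, +y up, +x right) and the function names are assumptions made for this example.

```python
import math


def erp_pixel_to_direction(u: int, v: int, width: int, height: int):
    """Map the centre of equirectangular pixel (u, v) to a unit direction (x, y, z)."""
    lon = ((u + 0.5) / width - 0.5) * 2.0 * math.pi  # longitude, -pi at the left edge
    lat = (0.5 - (v + 0.5) / height) * math.pi       # latitude, +pi/2 at the top edge
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return x, y, z


def cube_face(direction) -> str:
    """Return the cube face hit by a direction: the axis with the largest magnitude."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "+x" if x > 0 else "-x"
    if ay >= az:
        return "+y" if y > 0 else "-y"
    return "+z" if z > 0 else "-z"


if __name__ == "__main__":
    width, height = 8, 4
    for v in range(height):
        print([cube_face(erp_pixel_to_direction(u, v, width, height)) for u in range(width)])
```

Neighbouring pixels that land on different faces are rendered through different perspective cameras, which is where the boundary discontinuities described in the abstract come from.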

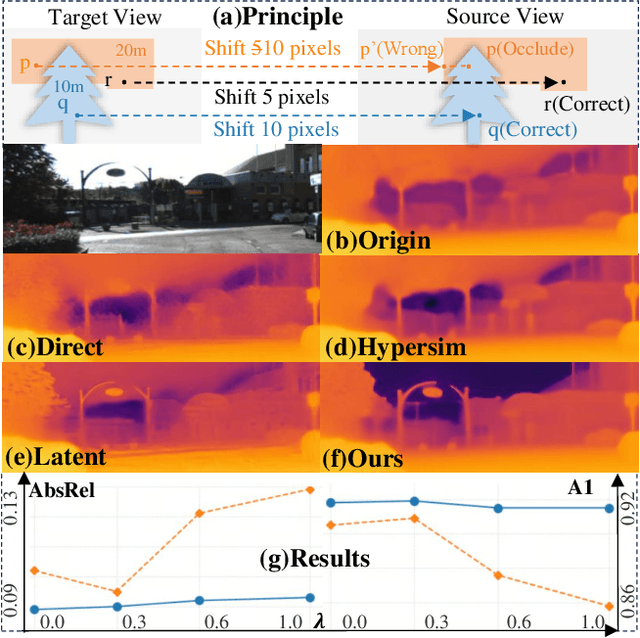

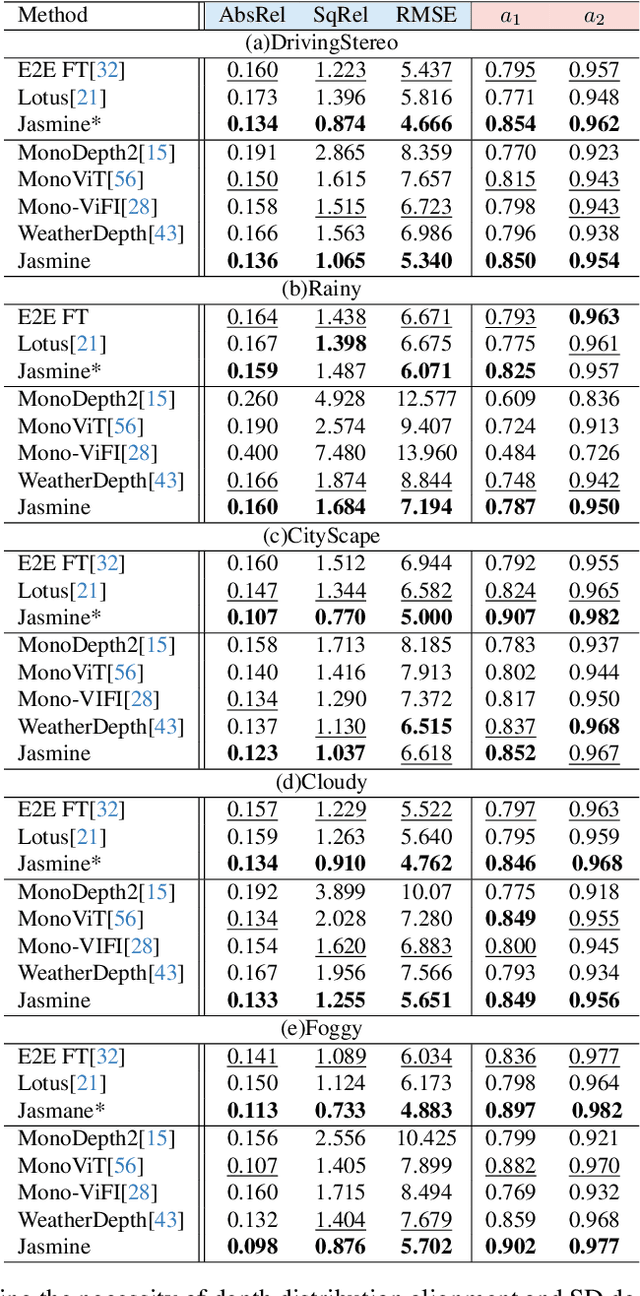

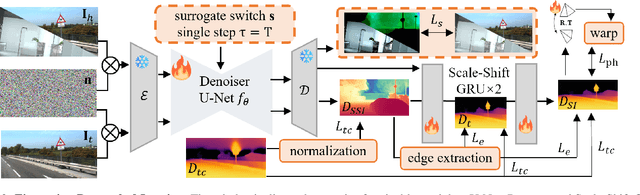

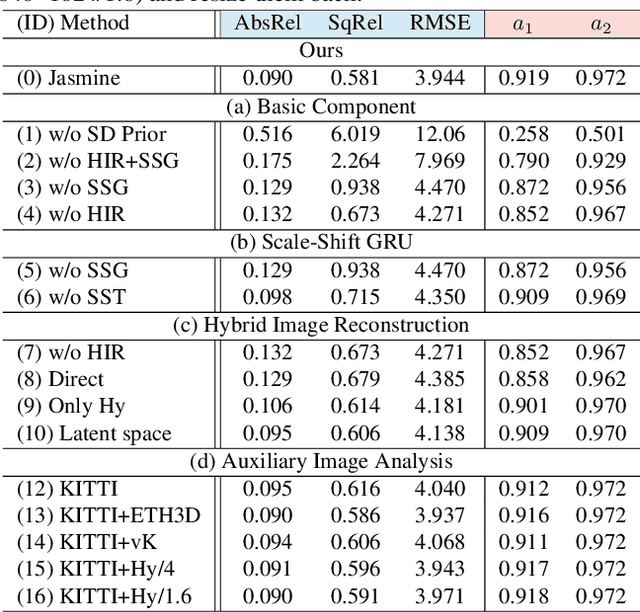

Jasmine: Harnessing Diffusion Prior for Self-supervised Depth Estimation

Mar 20, 2025

Abstract:In this paper, we propose Jasmine, the first Stable Diffusion (SD)-based self-supervised framework for monocular depth estimation, which effectively harnesses SD's visual priors to enhance the sharpness and generalization of unsupervised prediction. Previous SD-based methods are all supervised since adapting diffusion models for dense prediction requires high-precision supervision. In contrast, self-supervised reprojection suffers from inherent challenges (e.g., occlusions, texture-less regions, illumination variance), and the predictions exhibit blurs and artifacts that severely compromise SD's latent priors. To resolve this, we construct a novel surrogate task of hybrid image reconstruction. Without any additional supervision, it preserves the detail priors of SD models by reconstructing the images themselves while preventing depth estimation from degradation. Furthermore, to address the inherent misalignment between SD's scale and shift invariant estimation and self-supervised scale-invariant depth estimation, we build the Scale-Shift GRU. It not only bridges this distribution gap but also isolates the fine-grained texture of SD output against the interference of reprojection loss. Extensive experiments demonstrate that Jasmine achieves SoTA performance on the KITTI benchmark and exhibits superior zero-shot generalization across multiple datasets.
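The difference between scale-and-shift-invariant and scale-invariant depth can be illustrated with two closed-form least-squares fits. This is only a sketch of the two ambiguities, not the Scale-Shift GRU; the function names and the toy values are assumptions.

```python
def align_scale_shift(pred, target):
    """Least-squares scale s and shift b minimising sum((s * p + b - t)^2)."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    cov_pt = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    s = cov_pt / var_p
    b = mean_t - s * mean_p
    return s, b


def align_scale_only(pred, target):
    """Least-squares scale s minimising sum((s * p - t)^2), with no shift term."""
    return sum(p * t for p, t in zip(pred, target)) / sum(p * p for p in pred)


def squared_error(aligned, target):
    return sum((a - t) ** 2 for a, t in zip(aligned, target))


if __name__ == "__main__":
    pred = [0.0, 0.5, 1.0]                  # toy affine-invariant prediction
    target = [2.0 * p + 3.0 for p in pred]  # toy reference with scale 2 and shift 3
    s, b = align_scale_shift(pred, target)
    s_only = align_scale_only(pred, target)
    print(squared_error([s * p + b for p in pred], target))
    print(squared_error([s_only * p for p in pred], target))
```

A prediction that is only defined up to scale and shift cannot be matched to a scale-only target by a single multiplicative factor: the second fit leaves a residual whenever the shift is non-zero.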

Semi-Supervised 360 Layout Estimation with Panoramic Collaborative Perturbations

Mar 03, 2025

Abstract:The performance of existing supervised layout estimation methods heavily relies on the quality of data annotations. However, obtaining large-scale and high-quality datasets remains a laborious and time-consuming challenge. To solve this problem, semi-supervised approaches are introduced to relieve the demand for expensive data annotations by encouraging consistent results on unlabeled data under different perturbations. However, existing solutions merely employ vanilla perturbations, ignoring the characteristics of panoramic layout estimation. In contrast, we propose a novel semi-supervised method named SemiLayout360, which incorporates the priors of the panoramic layout and distortion through collaborative perturbations. Specifically, we leverage the panoramic layout prior to enhance the model's focus on potential layout boundaries. Meanwhile, we introduce the panoramic distortion prior to strengthen distortion awareness. Furthermore, to prevent intense perturbations from hindering model convergence and ensure the effectiveness of prior-based perturbations, we divide and reorganize them as panoramic collaborative perturbations. Our experimental results on three mainstream benchmarks demonstrate that the proposed method offers significant advantages over existing state-of-the-art (SoTA) solutions.

Arbitrary-steps Image Super-resolution via Diffusion Inversion

Dec 12, 2024

Abstract:This study presents a new image super-resolution (SR) technique based on diffusion inversion, aiming at harnessing the rich image priors encapsulated in large pre-trained diffusion models to improve SR performance. We design a Partial noise Prediction strategy to construct an intermediate state of the diffusion model, which serves as the starting sampling point. Central to our approach is a deep noise predictor to estimate the optimal noise maps for the forward diffusion process. Once trained, this noise predictor can be used to initialize the sampling process partially along the diffusion trajectory, generating the desirable high-resolution result. Compared to existing approaches, our method offers a flexible and efficient sampling mechanism that supports an arbitrary number of sampling steps, ranging from one to five. Even with a single sampling step, our method demonstrates superior or comparable performance to recent state-of-the-art approaches. The code and model are publicly available at https://github.com/zsyOAOA/InvSR.
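The intermediate state mentioned above can be pictured with the standard forward-diffusion formula x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The sketch below is not the InvSR code: the linear beta schedule, its default values, and the function names are assumptions, and plain Gaussian samples stand in for the output of the learned noise predictor.

```python
import math
import random


def linear_alpha_bar(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bar, out = 1.0, []
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        alpha_bar *= 1.0 - beta
        out.append(alpha_bar)
    return out


def intermediate_state(x0, noise, alpha_bar_t: float):
    """Forward diffusion: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = math.sqrt(alpha_bar_t)
    s = math.sqrt(1.0 - alpha_bar_t)
    return [a * x + s * n for x, n in zip(x0, noise)]


if __name__ == "__main__":
    random.seed(0)
    x0 = [0.1, 0.4, 0.9]                     # toy stand-in for an image
    noise = [random.gauss(0.0, 1.0) for _ in x0]  # stand-in for a predicted noise map
    schedule = linear_alpha_bar(1000)
    start_step = 250                         # hypothetical starting timestep
    print(intermediate_state(x0, noise, schedule[start_step]))
```

Starting the reverse process from an earlier or later timestep changes how much of the trajectory remains to be sampled, which is the intuition behind choosing the number of sampling steps freely.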

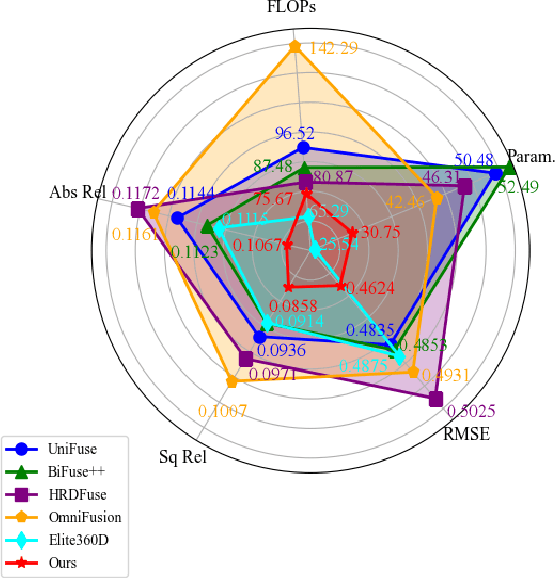

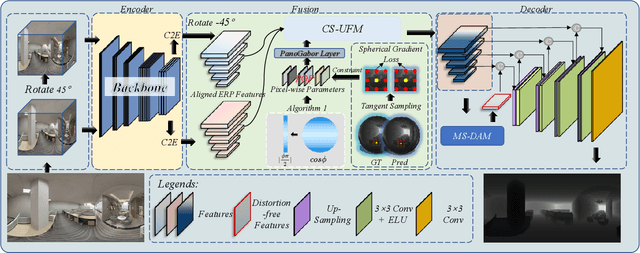

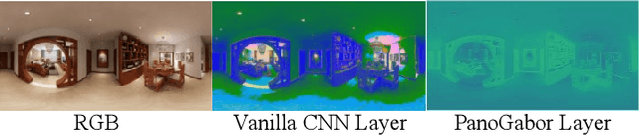

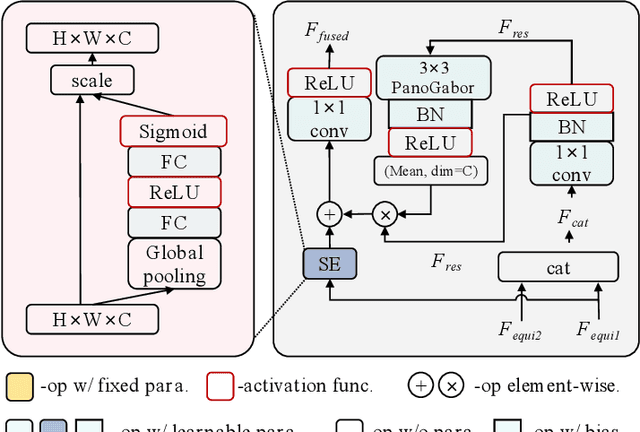

Revisiting 360 Depth Estimation with PanoGabor: A New Fusion Perspective

Aug 30, 2024

Abstract:Depth estimation from a monocular 360 image is important to the perception of the entire 3D environment. However, the inherent distortion and large field of view (FoV) in 360 images pose great challenges for this task. To this end, existing mainstream solutions typically introduce additional perspective-based 360 representations (e.g., Cubemap) to achieve effective feature extraction. Nevertheless, regardless of the introduced representations, they eventually need to be unified into the equirectangular projection (ERP) format for the subsequent depth estimation, which inevitably reintroduces the troublesome distortions. In this work, we propose an oriented distortion-aware Gabor Fusion framework (PGFuse) to address the above challenges. First, we introduce Gabor filters that analyze texture in the frequency domain, thereby extending the receptive fields and enhancing depth cues. To address the reintroduced distortions, we design a linear latitude-aware distortion representation method to generate customized, distortion-aware Gabor filters (PanoGabor filters). Furthermore, we design a channel-wise and spatial-wise unidirectional fusion module (CS-UFM) that integrates the proposed PanoGabor filters to unify other representations into the ERP format, delivering effective and distortion-free features. Considering the orientation sensitivity of the Gabor transform, we introduce a spherical gradient constraint to stabilize this sensitivity. Experimental results on three popular indoor 360 benchmarks demonstrate the superiority of the proposed PGFuse over existing state-of-the-art solutions. Code will be available upon acceptance.
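For readers unfamiliar with Gabor filters, the sketch below builds the real part of a standard 2D Gabor kernel: a Gaussian envelope multiplied by an oriented cosine carrier. It is not the PanoGabor filter from the paper. The `x_stretch` parameter is a hypothetical stand-in for a latitude-dependent horizontal stretch and does not reproduce the paper's linear latitude-aware representation.

```python
import math


def gabor_kernel(size: int, sigma: float, theta: float, wavelength: float,
                 gamma: float = 0.5, psi: float = 0.0, x_stretch: float = 1.0):
    """Real part of a 2D Gabor kernel as a size x size nested list (size must be odd).

    x_stretch > 1 widens the kernel horizontally (hypothetical distortion handling).
    """
    half = size // 2
    kernel = []
    for j in range(-half, half + 1):
        row = []
        for i in range(-half, half + 1):
            xs = i / x_stretch                               # undo the assumed stretch
            xr = xs * math.cos(theta) + j * math.sin(theta)  # rotate by theta
            yr = -xs * math.sin(theta) + j * math.cos(theta)
            envelope = math.exp(-(xr * xr + gamma * gamma * yr * yr) / (2.0 * sigma * sigma))
            carrier = math.cos(2.0 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


if __name__ == "__main__":
    for row in gabor_kernel(size=5, sigma=2.0, theta=math.pi / 4, wavelength=4.0):
        print(" ".join(f"{value:+.3f}" for value in row))
```

In an equirectangular image, content at latitude φ is stretched horizontally by a factor of 1/cos(φ), so one simple (assumed) use of `x_stretch` would be to widen the kernel by that factor for rows far from the equator.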

Denoising as Adaptation: Noise-Space Domain Adaptation for Image Restoration

Jun 26, 2024

Abstract:Although deep learning-based image restoration methods have made significant progress, they still struggle with limited generalization to real-world scenarios due to the substantial domain gap caused by training on synthetic data. Existing methods address this issue by improving data synthesis pipelines, estimating degradation kernels, employing deep internal learning, and performing domain adaptation and regularization. Previous domain adaptation methods have sought to bridge the domain gap by learning domain-invariant knowledge in either feature or pixel space. However, these techniques often struggle to extend to low-level vision tasks within a stable and compact framework. In this paper, we show that it is possible to perform domain adaptation via the noise-space using diffusion models. In particular, by leveraging the unique property of how the multi-step denoising process is influenced by auxiliary conditional inputs, we obtain meaningful gradients from noise prediction to gradually align the restored results of both synthetic and real-world data to a common clean distribution. We refer to this method as denoising as adaptation. To prevent shortcuts during training, we present useful techniques such as channel shuffling and residual-swapping contrastive learning. Experimental results on three classical image restoration tasks, namely denoising, deblurring, and deraining, demonstrate the effectiveness of the proposed method. Code will be released at: https://github.com/KangLiao929/Noise-DA/.

Digging into contrastive learning for robust depth estimation with diffusion models

Apr 17, 2024

Abstract:Recently, diffusion-based depth estimation methods have drawn widespread attention due to their elegant denoising patterns and promising performance. However, they are typically unreliable under adverse conditions prevalent in real-world scenarios, such as rain and snow. In this paper, we propose a novel robust depth estimation method called D4RD, featuring a custom contrastive learning mode tailored for diffusion models to mitigate performance degradation in complex environments. Concretely, we integrate the strength of knowledge distillation into contrastive learning, building the 'trinity' contrastive scheme. This scheme utilizes the sampled noise of the forward diffusion process as a natural reference, guiding the predicted noise in diverse scenes toward a more stable and precise optimum. Moreover, we extend noise-level trinity to encompass more generic feature and image levels, establishing a multi-level contrast to distribute the burden of robust perception across the overall network. Before addressing complex scenarios, we enhance the stability of the baseline diffusion model with three straightforward yet effective improvements, which facilitate convergence and remove depth outliers. Extensive experiments demonstrate that D4RD surpasses existing state-of-the-art solutions on synthetic corruption datasets and real-world weather conditions. The code for D4RD will be made available for further exploration and adoption.

MOWA: Multiple-in-One Image Warping Model

Apr 16, 2024

Abstract:While recent image warping approaches have achieved remarkable success on existing benchmarks, they still require training separate models for each specific task and cannot generalize well to different camera models or customized manipulations. To address diverse types of warping in practice, we propose a Multiple-in-One image WArping model (named MOWA) in this work. Specifically, we mitigate the difficulty of multi-task learning by disentangling the motion estimation at both the region level and pixel level. To further enable dynamic task-aware image warping, we introduce a lightweight point-based classifier that predicts the task type, serving as prompts to modulate the feature maps for better estimation. To our knowledge, this is the first work that solves multiple practical warping tasks in a single model. Extensive experiments demonstrate that MOWA, which is trained on six tasks for multiple-in-one image warping, outperforms state-of-the-art task-specific models across most tasks. Moreover, MOWA also exhibits promising potential to generalize to unseen scenes, as evidenced by cross-domain and zero-shot evaluations. The code will be made publicly available.
