Junyao Wang

Hyperdimensional Uncertainty Quantification for Multimodal Uncertainty Fusion in Autonomous Vehicles Perception

Mar 25, 2025

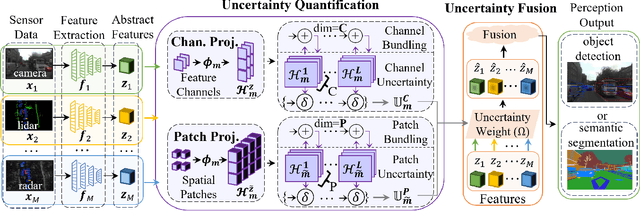

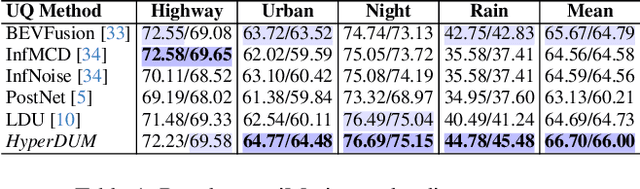

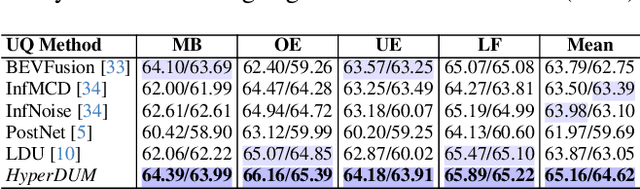

Abstract: Uncertainty Quantification (UQ) is crucial for ensuring the reliability of machine learning models deployed in real-world autonomous systems. However, existing approaches typically quantify task-level output prediction uncertainty without considering epistemic uncertainty at the multimodal feature fusion level, leading to sub-optimal outcomes. Additionally, popular uncertainty quantification methods, e.g., Bayesian approximations, remain challenging to deploy in practice due to high computational costs in training and inference. In this paper, we propose HyperDUM, a novel deterministic uncertainty method (DUM) that efficiently quantifies feature-level epistemic uncertainty by leveraging hyperdimensional computing. Our method captures channel and spatial uncertainties through channel-wise and patch-wise projection and bundling techniques, respectively. Multimodal sensor features are then adaptively weighted to mitigate uncertainty propagation and improve feature fusion. Our evaluations show that HyperDUM on average outperforms the state-of-the-art (SOTA) algorithms by up to 2.01%/1.27% in 3D object detection and by up to 1.29% over baselines in semantic segmentation under various types of uncertainties. Notably, HyperDUM requires 2.36x fewer floating-point operations and up to 38.30x fewer parameters than SOTA methods, providing an efficient solution for real-world autonomous systems.
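To make the terms "projection" and "bundling" concrete, here is a minimal sketch of generic hyperdimensional encoding in plain Python. It is not HyperDUM's pipeline: the random bipolar projection, the sign binarization, and the `uncertainty` score (one minus cosine similarity to a bundled prototype) are all illustrative assumptions, as are every function name and the toy feature vectors.

```python
import math
import random

def make_projection(in_dim, hd_dim, seed=0):
    """Random bipolar (+1/-1) projection matrix with hd_dim rows and in_dim columns."""
    rng = random.Random(seed)
    return [[rng.choice((-1.0, 1.0)) for _ in range(in_dim)] for _ in range(hd_dim)]

def encode(features, projection):
    """Project a feature vector into hyperdimensional space and binarize by sign."""
    return [1.0 if sum(w * x for w, x in zip(row, features)) >= 0 else -1.0
            for row in projection]

def bundle(hypervectors):
    """Bundle hypervectors by element-wise addition into one prototype."""
    return [sum(column) for column in zip(*hypervectors)]

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def uncertainty(features, projection, prototype):
    """Hypothetical score in [0, 2]: low when the sample resembles the prototype."""
    return 1.0 - cosine(encode(features, projection), prototype)

# Toy usage: the prototype is bundled from two "seen" feature vectors.
projection = make_projection(in_dim=4, hd_dim=256)
train = [[1.0, 2.0, 4.0, 8.0], [2.0, 4.0, 8.0, 16.0]]
prototype = bundle([encode(x, projection) for x in train])

u_seen = uncertainty([1.0, 2.0, 4.0, 8.0], projection, prototype)
u_flipped = uncertainty([-1.0, -2.0, -4.0, -8.0], projection, prototype)
print(u_seen, u_flipped)
```

In this toy setup the sample that matches the bundled data scores lower than its sign-flipped counterpart, which is the kind of signal a feature-level weighting scheme could use to down-weight an unfamiliar modality.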

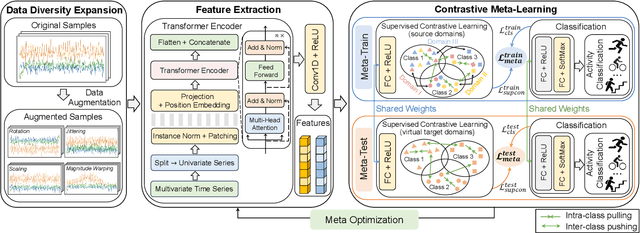

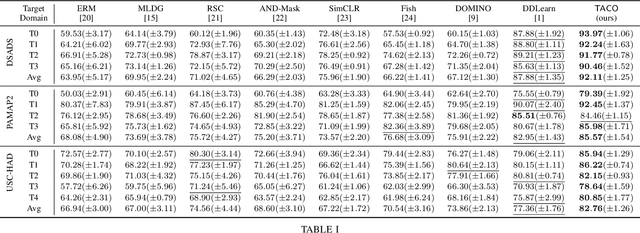

Transformer-Based Contrastive Meta-Learning For Low-Resource Generalizable Activity Recognition

Dec 28, 2024

Abstract: Deep learning has been widely adopted for human activity recognition (HAR), yet generalizing a trained model across diverse users and scenarios remains challenging due to distribution shifts (DS). The inherent low-resource challenge in HAR, i.e., collecting and labeling adequate human-involved data can be prohibitively costly, further raises the difficulty of tackling DS. We propose TACO, a novel transformer-based contrastive meta-learning approach for generalizable HAR. TACO addresses DS by synthesizing virtual target domains in training with explicit consideration of model generalizability. Additionally, we extract expressive features with the attention mechanism of the Transformer and incorporate a supervised contrastive loss function within our meta-optimization to enhance representation learning. Our evaluation demonstrates that TACO achieves notably better performance across various low-resource DS scenarios.

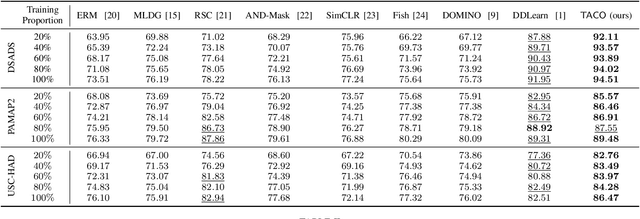

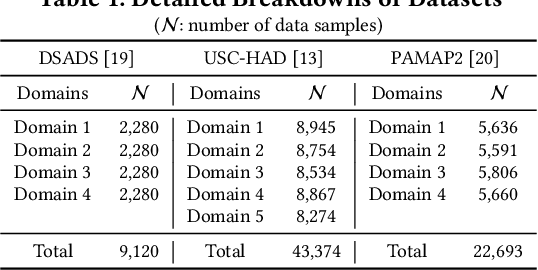

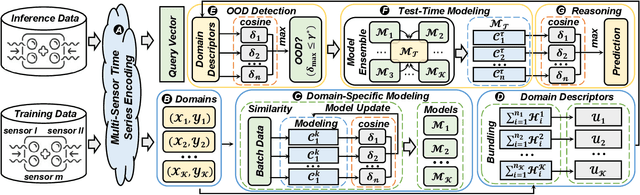

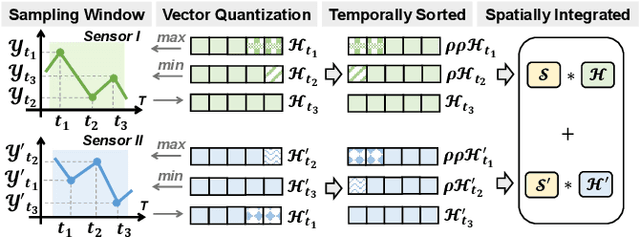

SMORE: Similarity-based Hyperdimensional Domain Adaptation for Multi-Sensor Time Series Classification

Feb 27, 2024

Abstract: Many real-world applications of the Internet of Things (IoT) employ machine learning (ML) algorithms to analyze time series information collected by interconnected sensors. However, distribution shift, a fundamental challenge in data-driven ML, arises when a model is deployed on a data distribution different from the training data and can substantially degrade model performance. Additionally, increasingly sophisticated deep neural networks (DNNs) are required to capture intricate spatial and temporal dependencies in multi-sensor time series data, often exceeding the capabilities of today's edge devices. In this paper, we propose SMORE, a novel resource-efficient domain adaptation (DA) algorithm for multi-sensor time series classification, leveraging the efficient and parallel operations of hyperdimensional computing. SMORE dynamically customizes test-time models with explicit consideration of the domain context of each sample to mitigate the negative impacts of domain shifts. Our evaluation on a variety of multi-sensor time series classification tasks shows that SMORE achieves on average 1.98% higher accuracy than state-of-the-art (SOTA) DNN-based DA algorithms with 18.81x faster training and 4.63x faster inference.
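One way to picture "customizing a test-time model per sample" is a similarity-weighted ensemble of domain-specific models. The sketch below is an assumption for illustration, not SMORE's published algorithm: `domain_descriptors`, `domain_models`, the clamping of negative similarities, and the toy 4-dimensional vectors are all hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def similarity_weights(sample, domain_descriptors):
    """Clamp negative similarities to zero, then normalize so the weights sum to 1."""
    sims = [max(cosine(sample, d), 0.0) for d in domain_descriptors]
    total = sum(sims)
    if total == 0.0:
        # No domain looks similar: fall back to uniform weights.
        return [1.0 / len(sims)] * len(sims)
    return [s / total for s in sims]

def predict(sample, domain_descriptors, domain_models):
    """domain_models[k][c] is the class-c prototype learned on source domain k."""
    weights = similarity_weights(sample, domain_descriptors)
    n_classes = len(domain_models[0])
    scores = [
        sum(w * cosine(sample, model[c]) for w, model in zip(weights, domain_models))
        for c in range(n_classes)
    ]
    return max(range(n_classes), key=scores.__getitem__)

# Toy usage with two source domains and two classes.
sample = [1.0, 1.0, 1.0, -1.0]
domain_descriptors = [[1.0, 1.0, 1.0, 1.0], [-1.0, -1.0, -1.0, 1.0]]
domain_models = [
    [[1.0, 1.0, -1.0, -1.0], [1.0, 1.0, 1.0, -1.0]],   # prototypes from domain 0
    [[1.0, 1.0, 1.0, -1.0], [-1.0, -1.0, 1.0, 1.0]],   # prototypes from domain 1
]
weights = similarity_weights(sample, domain_descriptors)
label = predict(sample, domain_descriptors, domain_models)
print(weights, label)
```

Here the sample resembles only domain 0, so that domain's prototypes decide the prediction even though domain 1's model would vote for the other class.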

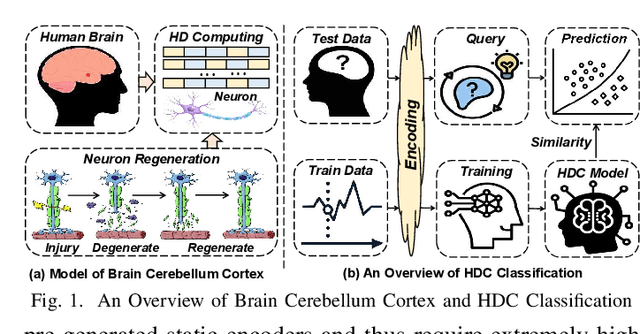

Robust and Scalable Hyperdimensional Computing With Brain-Like Neural Adaptations

Nov 13, 2023

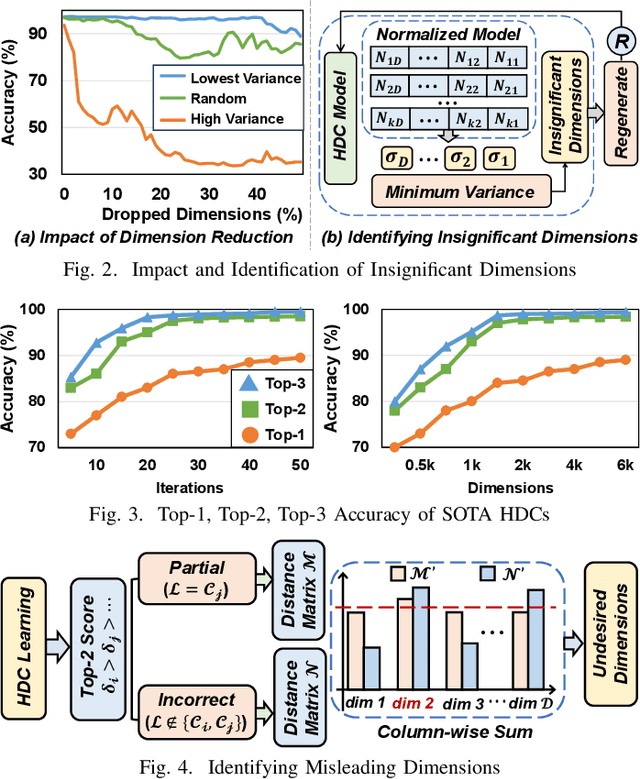

Abstract: The Internet of Things (IoT) has facilitated many applications utilizing edge-based machine learning (ML) methods to analyze locally collected data. Unfortunately, popular ML algorithms often require intensive computations beyond the capabilities of today's IoT devices. Brain-inspired hyperdimensional computing (HDC) has been introduced to address this issue. However, existing HDC methods use static encoders, requiring extremely high dimensionality and hundreds of training iterations to achieve reasonable accuracy. This results in a huge efficiency loss, severely impeding the application of HDC in IoT systems. We observed that a main cause is that the encoding module of existing HDC methods lacks the capability to utilize and adapt to information learned during training. In contrast, neurons in human brains dynamically regenerate all the time and provide more useful functionalities when learning new information. While the goal of HDC is to exploit the high dimensionality of randomly generated base hypervectors to represent information as a pattern of neural activity, it remains challenging for existing HDC methods to support behavior similar to brain neural regeneration. In this work, we present dynamic HDC learning frameworks that identify and regenerate undesired dimensions to provide adequate accuracy with significantly lowered dimensionalities, thereby accelerating both training and inference.
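The idea of identifying and regenerating undesired dimensions can be sketched as follows. This is a hedged illustration only: the selection criterion (lowest variance across class prototypes), the Gaussian redraw, and the names `least_informative_dims` and `regenerate` are assumptions, not the frameworks' actual rules.

```python
import random

def least_informative_dims(class_prototypes, count):
    """Indices of the `count` dimensions whose values vary least across classes."""
    n = len(class_prototypes)

    def variance(values):
        mean = sum(values) / n
        return sum((v - mean) ** 2 for v in values) / n

    variances = [variance(column) for column in zip(*class_prototypes)]
    return sorted(range(len(variances)), key=variances.__getitem__)[:count]

def regenerate(encoder, class_prototypes, count, rng):
    """Redraw the encoder rows of the selected dimensions and reset them in each prototype."""
    dims = least_informative_dims(class_prototypes, count)
    for d in dims:
        encoder[d] = [rng.gauss(0.0, 1.0) for _ in encoder[d]]
        for prototype in class_prototypes:
            prototype[d] = 0.0  # relearned in later training iterations
    return dims

# Toy usage: a 4-dimensional hyperspace over 3 input features, 2 classes.
rng = random.Random(0)
encoder = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(4)]
kept_rows = [list(encoder[0]), list(encoder[2])]
class_prototypes = [
    [3.0, 1.0, -2.0, 0.5],
    [-3.0, 1.0, 2.0, 0.4],
]
regenerated = regenerate(encoder, class_prototypes, count=2, rng=rng)
print(regenerated)
```

Dimensions 1 and 3 carry almost the same value for both classes, so they contribute little to telling the classes apart and are the ones redrawn; the remaining encoder rows are left untouched.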

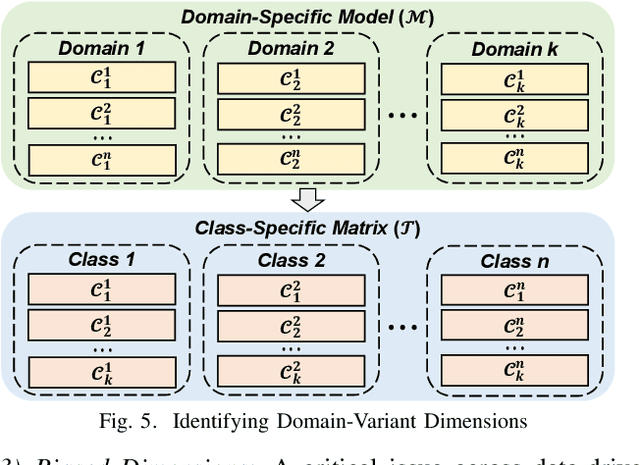

DOMINO: Domain-invariant Hyperdimensional Classification for Multi-Sensor Time Series Data

Aug 18, 2023

Abstract: With the rapid evolution of the Internet of Things, many real-world applications utilize heterogeneously connected sensors to capture time-series information. Edge-based machine learning (ML) methodologies are often employed to analyze locally collected data. However, a fundamental issue across data-driven ML approaches is distribution shift. It occurs when a model is deployed on a data distribution different from what it was trained on, and can substantially degrade model performance. Additionally, increasingly sophisticated deep neural networks (DNNs) have been proposed to capture spatial and temporal dependencies in multi-sensor time series data, requiring intensive computational resources beyond the capacity of today's edge devices. While brain-inspired hyperdimensional computing (HDC) has been introduced as a lightweight solution for edge-based learning, existing HDCs are also vulnerable to the distribution shift challenge. In this paper, we propose DOMINO, a novel HDC learning framework addressing the distribution shift problem in noisy multi-sensor time-series data. DOMINO leverages efficient and parallel matrix operations on high-dimensional space to dynamically identify and filter out domain-variant dimensions. Our evaluation on a wide range of multi-sensor time series classification tasks shows that DOMINO achieves on average 2.04% higher accuracy than state-of-the-art (SOTA) DNN-based domain generalization techniques, and delivers 16.34x faster training and 2.89x faster inference. More importantly, DOMINO performs notably better when learning from partially labeled and highly imbalanced data, providing 10.93x higher robustness against hardware noises than SOTA DNNs.
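Filtering out domain-variant dimensions could look roughly like the sketch below. It is an assumption for illustration rather than DOMINO's actual procedure: a dimension is treated as domain-variant when the same class's prototype differs strongly across source domains, and the names `domain_variance` and `invariant_mask` are hypothetical.

```python
def domain_variance(domain_prototypes):
    """Per-dimension variance of one class's prototype across source domains."""
    n = len(domain_prototypes)
    variances = []
    for values in zip(*domain_prototypes):
        mean = sum(values) / n
        variances.append(sum((v - mean) ** 2 for v in values) / n)
    return variances

def invariant_mask(domain_prototypes, drop_count):
    """Mask with 0.0 on the `drop_count` most domain-variant dimensions, 1.0 elsewhere."""
    variances = domain_variance(domain_prototypes)
    ranked = sorted(range(len(variances)), key=variances.__getitem__, reverse=True)
    dropped = set(ranked[:drop_count])
    return [0.0 if i in dropped else 1.0 for i in range(len(variances))]

def apply_mask(hypervector, mask):
    """Zero out the filtered dimensions of an encoded sample or prototype."""
    return [h * m for h, m in zip(hypervector, mask)]

# Toy usage: one class's prototype as learned on three source domains.
domain_prototypes = [
    [1.0, 5.0, -2.0, 0.5],
    [1.1, -4.0, -2.1, 0.4],
    [0.9, 0.0, -1.9, 3.0],
]
mask = invariant_mask(domain_prototypes, drop_count=2)
filtered = apply_mask([2.0, 7.0, -1.0, 4.0], mask)
print(mask, filtered)
```

Dimensions 1 and 3 swing the most between domains in this toy data, so they are masked out and only the stable dimensions take part in later similarity comparisons.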

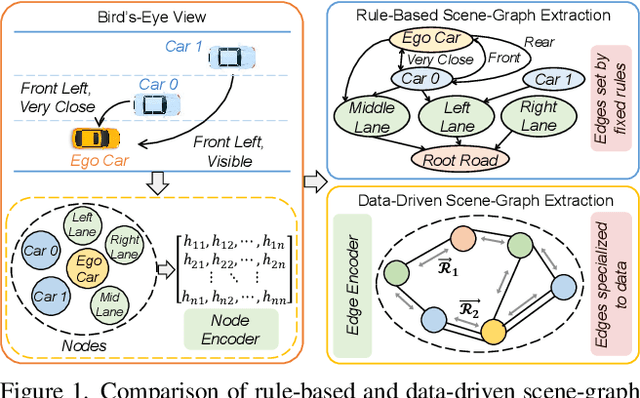

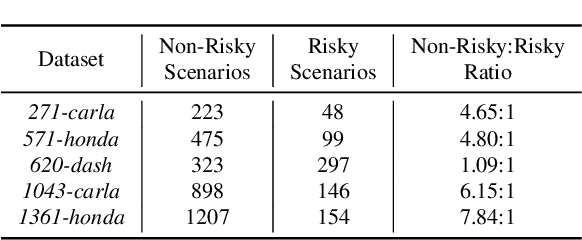

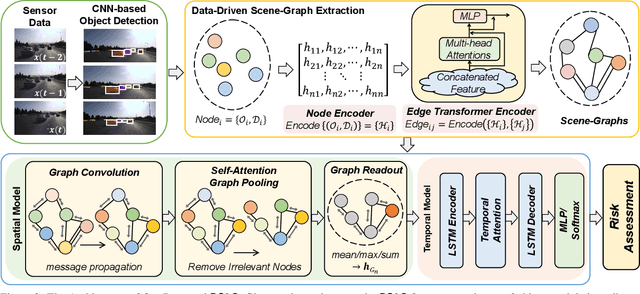

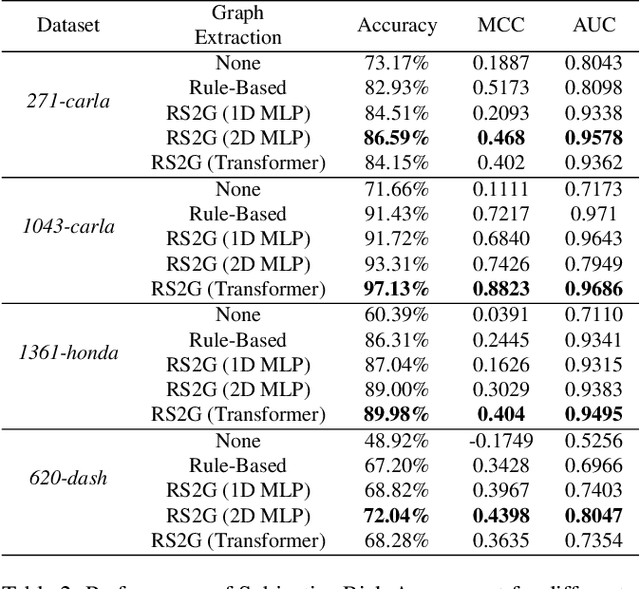

RS2G: Data-Driven Scene-Graph Extraction and Embedding for Robust Autonomous Perception and Scenario Understanding

Apr 17, 2023

Abstract: Human drivers naturally reason about interactions between road users to understand and safely navigate through traffic. Thus, developing autonomous vehicles necessitates the ability to mimic such knowledge and model interactions between road users to understand and navigate unpredictable, dynamic environments. However, since real-world scenarios often differ from training datasets, effectively modeling the behavior of various road users in an environment remains a significant research challenge. This reality necessitates models that generalize to a broad range of domains and explicitly model interactions between road users and the environment to improve scenario understanding. Graph learning methods address this problem by modeling interactions using graph representations of scenarios. However, existing methods cannot effectively transfer knowledge gained from the training domain to real-world scenarios. This constraint is caused by the domain-specific rules used for graph extraction that can vary in effectiveness across domains, limiting generalization ability. To address these limitations, we propose RoadScene2Graph (RS2G): a data-driven graph extraction and modeling approach that learns to extract the best graph representation of a road scene for solving autonomous scene understanding tasks. We show that RS2G enables better performance at subjective risk assessment than rule-based graph extraction methods and deep-learning-based models. RS2G also improves generalization and Sim2Real transfer learning, which denotes the ability to transfer knowledge gained from simulation datasets to unseen real-world scenarios. We also present ablation studies showing how RS2G produces a more useful graph representation for downstream classifiers. Finally, we show how RS2G can identify the relative importance of rule-based graph edges and enables intelligent graph sparsity tuning.

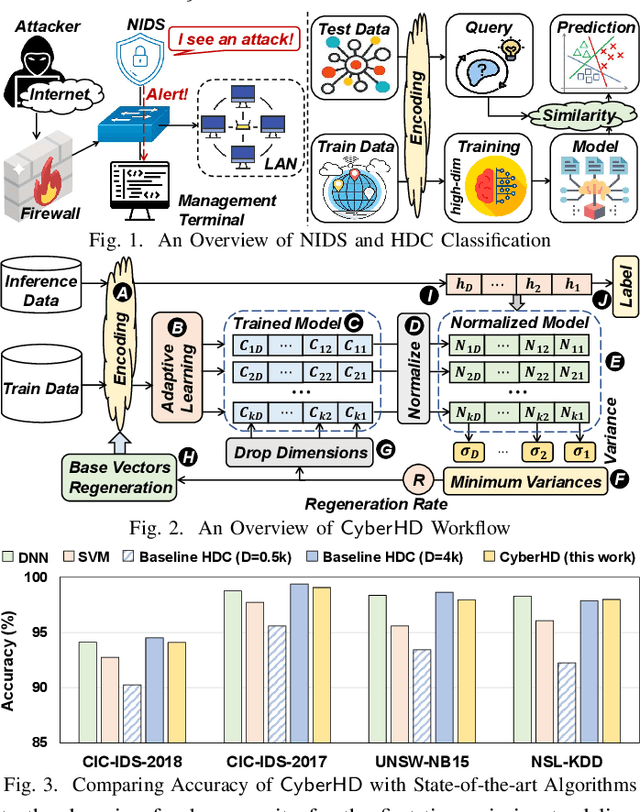

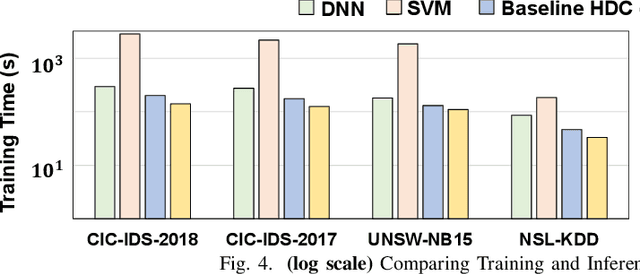

Late Breaking Results: Scalable and Efficient Hyperdimensional Computing for Network Intrusion Detection

Apr 11, 2023

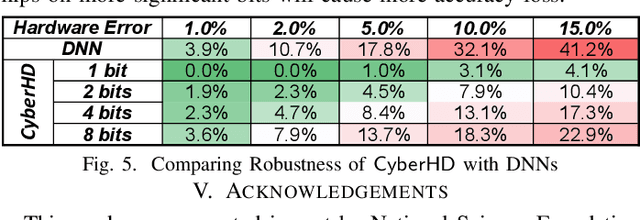

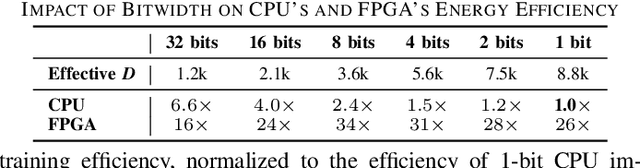

Abstract: Cybersecurity has emerged as a critical challenge for the industry. Given the high complexity of the security landscape, sophisticated and costly deep learning models often fail to provide timely detection of cyber threats on edge devices. Brain-inspired hyperdimensional computing (HDC) has been introduced as a promising solution to address this issue. However, existing HDC approaches use static encoders and require very high dimensionality and hundreds of training iterations to achieve reasonable accuracy. This results in a serious loss of learning efficiency and causes huge latency for detecting attacks. In this paper, we propose CyberHD, an innovative HDC learning framework that identifies and regenerates insignificant dimensions to capture complicated patterns of cyber threats with remarkably lower dimensionality. Additionally, the holographic distribution of patterns in high-dimensional space provides CyberHD with notably high robustness against hardware errors.

DistHD: A Learner-Aware Dynamic Encoding Method for Hyperdimensional Classification

Apr 11, 2023

Abstract: Brain-inspired hyperdimensional computing (HDC) has recently been considered a promising learning approach for resource-constrained devices. However, existing approaches use static encoders that are never updated during the learning process. Consequently, they require a very high dimensionality to achieve adequate accuracy, severely lowering the encoding and training efficiency. In this paper, we propose DistHD, a novel dynamic encoding technique for HDC adaptive learning that effectively identifies and regenerates dimensions that mislead the classification and compromise the learning quality. Our proposed algorithm DistHD successfully accelerates the learning process and achieves the desired accuracy with considerably lower dimensionality.
