Trier Mortlock

Hyperdimensional Uncertainty Quantification for Multimodal Uncertainty Fusion in Autonomous Vehicles Perception

Mar 25, 2025

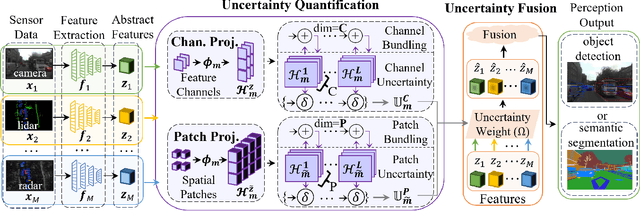

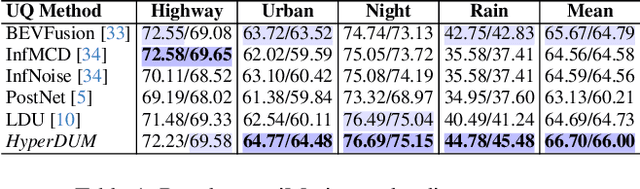

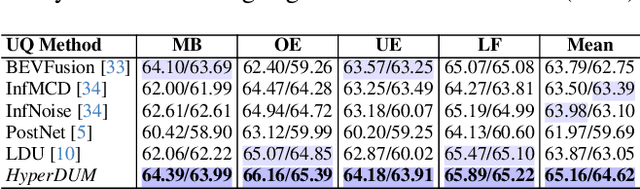

Abstract: Uncertainty Quantification (UQ) is crucial for ensuring the reliability of machine learning models deployed in real-world autonomous systems. However, existing approaches typically quantify task-level output prediction uncertainty without considering epistemic uncertainty at the multimodal feature fusion level, leading to sub-optimal outcomes. Additionally, popular uncertainty quantification methods, e.g., Bayesian approximations, remain challenging to deploy in practice due to high computational costs in training and inference. In this paper, we propose HyperDUM, a novel deterministic uncertainty method (DUM) that efficiently quantifies feature-level epistemic uncertainty by leveraging hyperdimensional computing. Our method captures channel and spatial uncertainties through channel-wise and patch-wise projection and bundling techniques, respectively. Multimodal sensor features are then adaptively weighted to mitigate uncertainty propagation and improve feature fusion. Our evaluations show that HyperDUM on average outperforms state-of-the-art (SOTA) algorithms by up to 2.01%/1.27% in 3D object detection and improves on baselines by up to 1.29% in semantic segmentation under various types of uncertainties. Notably, HyperDUM requires 2.36x fewer floating-point operations and up to 38.30x fewer parameters than SOTA methods, providing an efficient solution for real-world autonomous systems.
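The abstract names projection and bundling as the hyperdimensional building blocks. As a rough illustration of those two generic operations only, and not HyperDUM's actual channel-wise or patch-wise design, the sketch below projects feature vectors into a high-dimensional bipolar space, bundles training encodings into a prototype, and scores a new feature by its dissimilarity to that prototype. The function names, the sign-based encoder, the dimension of 2048, and the `1 - cosine` score are all assumptions made for this example.

```python
import math
import random

def random_projection(in_dim, hd_dim, seed=0):
    """Random bipolar (+1/-1) projection matrix (an assumed encoder choice)."""
    rng = random.Random(seed)
    return [[rng.choice((-1.0, 1.0)) for _ in range(in_dim)] for _ in range(hd_dim)]

def encode(feature, proj):
    """Project a feature vector into hyperdimensional space and keep only the sign."""
    return [1.0 if sum(w * x for w, x in zip(row, feature)) >= 0 else -1.0
            for row in proj]

def bundle(hypervectors):
    """Bundle by element-wise addition; the sum serves as a prototype."""
    return [sum(vals) for vals in zip(*hypervectors)]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def uncertainty(feature, prototype, proj):
    """Hypothetical score: 1 - cosine similarity to the prototype, clipped to [0, 1]."""
    sim = cosine(encode(feature, proj), prototype)
    return min(1.0, max(0.0, 1.0 - sim))

# Toy usage: three similar "training" features form the prototype.
proj = random_projection(in_dim=4, hd_dim=2048, seed=0)
train = [[0.9, 0.1, 0.4, 0.7], [1.0, 0.2, 0.5, 0.6], [0.8, 0.1, 0.3, 0.8]]
prototype = bundle([encode(f, proj) for f in train])

near = uncertainty(train[0], prototype, proj)               # seen during bundling
far = uncertainty([-x for x in train[0]], prototype, proj)  # opposite direction
print(f"near={near:.3f} far={far:.3f}")
```

A feature that resembles the bundled training data scores low, while one pointing the opposite way scores at the clipped maximum. Such a score could in principle be turned into a per-modality weight before fusion, though how HyperDUM does this is not specified in the abstract.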

Stress Detection using Context-Aware Sensor Fusion from Wearable Devices

Mar 14, 2023

Abstract: Wearable medical technology has become increasingly popular in recent years. One function of wearable health devices is stress detection, which relies on sensor inputs to determine the mental state of patients. This continuous, real-time monitoring can provide healthcare professionals with vital physiological data and enhance the quality of patient care. Current methods of stress detection lack: (i) robustness -- wearable health sensors contain high levels of measurement noise that degrades performance, and (ii) adaptation -- static architectures fail to adapt to changing contexts in sensing conditions. We propose to address these deficiencies with SELF-CARE, a generalized selective sensor fusion method of stress detection that employs novel techniques of context identification and ensemble machine learning. SELF-CARE uses a learning-based classifier to process sensor features and model the environmental variations in sensing conditions known as the noise context. SELF-CARE uses noise context to selectively fuse different sensor combinations across an ensemble of models to perform robust stress classification. Our findings suggest that for wrist-worn devices, sensors that measure motion are most suitable to understand noise context, while for chest-worn devices, the most suitable sensors are those that detect muscle contraction. SELF-CARE demonstrates state-of-the-art performance on the WESAD dataset. Using wrist-based sensors, SELF-CARE achieves 86.34% and 94.12% accuracy for the 3-class and 2-class stress classification problems, respectively. For chest-based wearable sensors, SELF-CARE achieves 86.19% (3-class) and 93.68% (2-class) classification accuracy. This work demonstrates the benefits of utilizing selective, context-aware sensor fusion in mobile health sensing that can be applied broadly to Internet of Things applications.

SELF-CARE: Selective Fusion with Context-Aware Low-Power Edge Computing for Stress Detection

May 08, 2022

Abstract: Detecting human stress levels and emotional states with physiological body-worn sensors is a complex task, but one with many health-related benefits. Robustness to sensor measurement noise and energy efficiency of low-power devices remain key challenges in stress detection. We propose SELF-CARE, a fully wrist-based method for stress detection that employs context-aware selective sensor fusion that dynamically adapts based on data from the sensors. Our method uses motion to determine the context of the system and learns to adjust the fused sensors accordingly, improving performance while maintaining energy efficiency. SELF-CARE obtains state-of-the-art performance on the publicly available WESAD dataset, achieving 86.34% and 94.12% accuracy for the 3-class and 2-class classification problems, respectively. Evaluation on real hardware shows that our approach achieves up to 2.2x (3-class) and 2.7x (2-class) energy efficiency compared to traditional sensor fusion.

EcoFusion: Energy-Aware Adaptive Sensor Fusion for Efficient Autonomous Vehicle Perception

Feb 23, 2022

Abstract: Autonomous vehicles use multiple sensors, large deep-learning models, and powerful hardware platforms to perceive the environment and navigate safely. In many contexts, some sensing modalities negatively impact perception while increasing energy consumption. We propose EcoFusion: an energy-aware sensor fusion approach that uses context to adapt the fusion method and reduce energy consumption without affecting perception performance. EcoFusion performs up to 9.5% better at object detection than existing fusion methods with approximately 60% less energy and 58% lower latency on the industry-standard Nvidia Drive PX2 hardware platform. We also propose several context-identification strategies, implement a joint optimization between energy and performance, and present scenario-specific results.
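One simple way to picture a joint optimization between energy and performance is a weighted objective over candidate fusion configurations. The sketch below is an assumption-laden illustration rather than EcoFusion's actual formulation: the `FusionConfig` type, the trade-off weight `lam`, and every loss and energy number are hypothetical placeholders, not results from the paper.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FusionConfig:
    name: str
    expected_loss: float   # hypothetical predicted perception loss in the current context
    energy_joules: float   # hypothetical energy cost per inference

def select_config(configs, lam):
    """Pick the configuration minimizing expected_loss + lam * energy_joules."""
    return min(configs, key=lambda c: c.expected_loss + lam * c.energy_joules)

# Placeholder candidates for one driving context (illustrative values only).
candidates = [
    FusionConfig("camera_only", expected_loss=0.30, energy_joules=1.0),
    FusionConfig("camera_radar", expected_loss=0.22, energy_joules=2.0),
    FusionConfig("all_sensors", expected_loss=0.20, energy_joules=4.0),
]

for lam in (0.0, 0.05, 0.5):
    print(lam, select_config(candidates, lam).name)
```

With `lam = 0` the selection ignores energy and favors the lowest-loss configuration; as `lam` grows, cheaper configurations win. In a context-aware system the expected losses would presumably be re-estimated per context, which is where a context-identification step would plug in.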

HydraFusion: Context-Aware Selective Sensor Fusion for Robust and Efficient Autonomous Vehicle Perception

Jan 17, 2022

Abstract: Although autonomous vehicles (AVs) are expected to revolutionize transportation, robust perception across a wide range of driving contexts remains a significant challenge. Techniques to fuse sensor data from camera, radar, and lidar sensors have been proposed to improve AV perception. However, existing methods are insufficiently robust in difficult driving contexts (e.g., bad weather, low light, sensor obstruction) due to rigidity in their fusion implementations. These methods fall into two broad categories: (i) early fusion, which fails when sensor data is noisy or obscured, and (ii) late fusion, which cannot leverage features from multiple sensors and thus produces worse estimates. To address these limitations, we propose HydraFusion: a selective sensor fusion framework that learns to identify the current driving context and fuses the best combination of sensors to maximize robustness without compromising efficiency. HydraFusion is the first approach to propose dynamically adjusting between early fusion, late fusion, and combinations in between, thus varying both how and when fusion is applied. We show that, on average, HydraFusion outperforms early and late fusion approaches by 13.66% and 14.54%, respectively, without increasing computational complexity or energy consumption on the industry-standard Nvidia Drive PX2 AV hardware platform. We also propose and evaluate both static and deep-learning-based context identification strategies. Our open-source code and model implementation are available at https://github.com/AICPS/hydrafusion.
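The distinction between early fusion, late fusion, and a context-selected mix can be sketched with toy stand-ins. Nothing below comes from the HydraFusion repository: the branch names, the static `GATE` table, and the averaging "models" are hypothetical, and a lookup table stands in for the learned context identification the abstract describes.

```python
from statistics import fmean

# Toy stand-ins for trained detectors: each maps features to a confidence score.
def camera_model(cam):
    return fmean(cam)

def radar_model(rad):
    return fmean(rad)

def early_model(cam, rad):
    # Early fusion: a single model over the concatenated sensor features.
    return fmean(cam + rad)

BRANCHES = {
    "camera": lambda s: camera_model(s["camera"]),
    "radar": lambda s: radar_model(s["radar"]),
    "early": lambda s: early_model(s["camera"], s["radar"]),
}

# Hypothetical static gate: which branches to run in each driving context.
GATE = {
    "clear": ["early"],
    "fog": ["radar"],
    "night": ["radar", "early"],
}

def fuse(sensors, context):
    """Run only the gated branches, then late-fuse their outputs by averaging."""
    active = GATE.get(context, list(BRANCHES))  # unknown context: run everything
    scores = [BRANCHES[name](sensors) for name in active]
    return fmean(scores), active

sensors = {"camera": [0.2, 0.4], "radar": [0.8, 1.0]}
for ctx in ("clear", "fog", "night", "unknown"):
    score, active = fuse(sensors, ctx)
    print(ctx, active, round(score, 3))
```

Only the gated branches execute, so the compute cost varies with context. Averaging the selected branch outputs is the late-fusion step, and the `early` branch represents fusion applied before any model runs.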

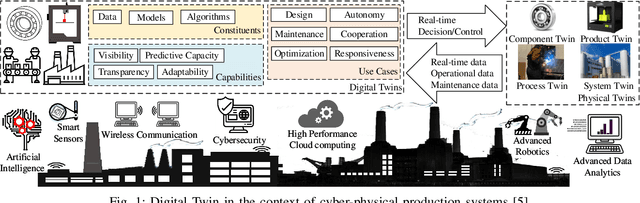

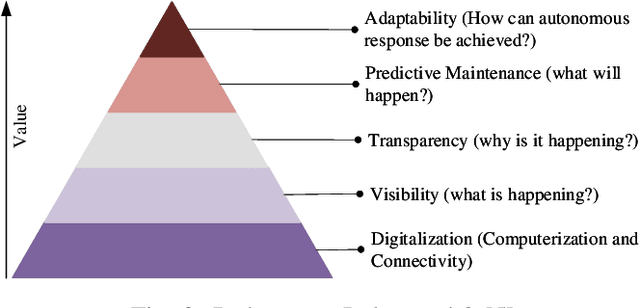

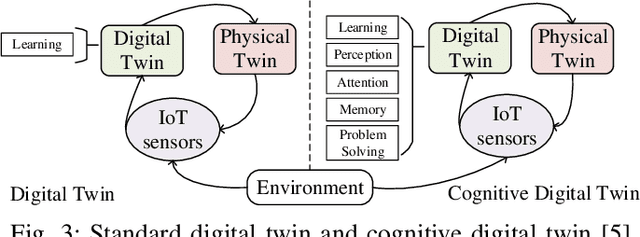

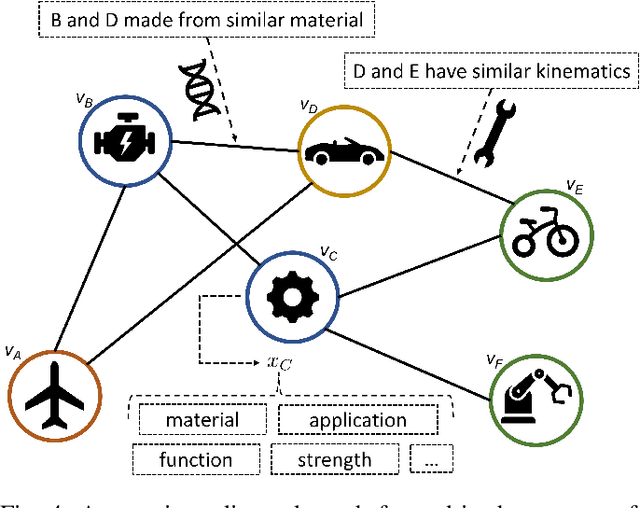

Graph Learning for Cognitive Digital Twins in Manufacturing Systems

Sep 17, 2021

Abstract: Future manufacturing requires complex systems that connect simulation platforms and virtualization with physical data from industrial processes. Digital twins incorporate a physical twin, a digital twin, and the connection between the two. The benefits of using digital twins, especially in manufacturing, are abundant, as they can increase efficiency across an entire manufacturing life-cycle. The digital twin concept has become increasingly sophisticated and capable over time, enabled by advances in many technologies. In this paper, we detail the cognitive digital twin as the next stage of advancement of a digital twin that will help realize the vision of Industry 4.0. Cognitive digital twins will allow enterprises to creatively, effectively, and efficiently exploit implicit knowledge drawn from the experience of existing manufacturing systems. They also enable more autonomous decisions and control, while improving performance across the enterprise (at scale). This paper presents graph learning as one potential pathway towards enabling cognitive functionalities in manufacturing digital twins. A novel approach to realize cognitive digital twins in the product design stage of manufacturing that utilizes graph learning is presented.
