Nafiul Rashid

Stress Detection using Context-Aware Sensor Fusion from Wearable Devices

Mar 14, 2023

Abstract: Wearable medical technology has become increasingly popular in recent years. One function of wearable health devices is stress detection, which relies on sensor inputs to determine the mental state of patients. This continuous, real-time monitoring can provide healthcare professionals with vital physiological data and enhance the quality of patient care. Current stress detection methods lack (i) robustness, because wearable health sensors contain high levels of measurement noise that degrade performance, and (ii) adaptation, because static architectures fail to adapt to changing contexts in sensing conditions. We propose to address these deficiencies with SELF-CARE, a generalized selective sensor fusion method for stress detection that employs novel techniques of context identification and ensemble machine learning. SELF-CARE uses a learning-based classifier to process sensor features and model the environmental variations in sensing conditions, referred to as the noise context. SELF-CARE then uses the noise context to selectively fuse different sensor combinations across an ensemble of models to perform robust stress classification. Our findings suggest that for wrist-worn devices, sensors that measure motion are most suitable for understanding noise context, while for chest-worn devices, the most suitable sensors are those that detect muscle contraction. SELF-CARE demonstrates state-of-the-art performance on the WESAD dataset. Using wrist-based sensors, SELF-CARE achieves 86.34% and 94.12% accuracy for the 3-class and 2-class stress classification problems, respectively. For chest-based wearable sensors, SELF-CARE achieves 86.19% (3-class) and 93.68% (2-class) classification accuracy. This work demonstrates the benefits of selective, context-aware sensor fusion for mobile health sensing, an approach that can be applied broadly to Internet of Things applications.
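The selective fusion idea can be illustrated with a minimal Python sketch. It is not the SELF-CARE implementation: the threshold-based `identify_context` function is a hypothetical stand-in for the paper's learned noise-context classifier, and the context labels, sensor names, and toy models are made up for illustration. Each context maps to its own ensemble, each member model sees only its assigned sensor subset, and member predictions are combined by majority vote (an assumed late-fusion rule).

```python
from collections import Counter
from statistics import mean, pstdev
from typing import Callable, Dict, List, Sequence, Tuple

Window = Dict[str, List[float]]                 # sensor name -> samples in one window
Model = Callable[[Window], int]                 # returns a class label
Ensemble = List[Tuple[Tuple[str, ...], Model]]  # (sensor subset, model) pairs


def identify_context(acc_magnitude: Sequence[float], threshold: float = 0.5) -> str:
    """Hypothetical stand-in for a learned noise-context classifier:
    label the window by how much the accelerometer magnitude varies."""
    return "high_motion" if pstdev(acc_magnitude) > threshold else "low_motion"


def selective_fusion_predict(window: Window, ensembles: Dict[str, Ensemble]) -> int:
    context = identify_context(window["acc"])
    votes = []
    for sensors, model in ensembles[context]:
        # Each member model only sees the sensor subset chosen for this context.
        votes.append(model({name: window[name] for name in sensors}))
    # Late fusion by majority vote over the context-specific ensemble.
    return Counter(votes).most_common(1)[0][0]


# Toy threshold "models" on hypothetical sensor channels (1 = stress, 0 = non-stress).
ensembles: Dict[str, Ensemble] = {
    "low_motion": [
        (("eda",), lambda w: int(mean(w["eda"]) > 5.0)),
        (("bvp",), lambda w: int(mean(w["bvp"]) > 80.0)),
        (("eda", "temp"), lambda w: int(mean(w["eda"]) > 4.0)),
    ],
    "high_motion": [
        (("eda",), lambda w: int(mean(w["eda"]) > 5.0)),
    ],
}

window = {"acc": [1.0, 1.0, 1.0, 1.0], "eda": [6.0, 6.0],
          "bvp": [70.0, 70.0], "temp": [33.0, 33.0]}
print(selective_fusion_predict(window, ensembles))  # 1: two of the three votes are 1
```

Keeping the context-to-ensemble mapping as plain data makes it explicit which sensors each context actually needs, so sensors absent from a context's ensemble never have to be read for that window.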

SELF-CARE: Selective Fusion with Context-Aware Low-Power Edge Computing for Stress Detection

May 08, 2022

Abstract: Detecting human stress levels and emotional states with physiological body-worn sensors is a complex task, but one with many health-related benefits. Robustness to sensor measurement noise and the energy efficiency of low-power devices remain key challenges in stress detection. We propose SELF-CARE, a fully wrist-based method for stress detection that employs context-aware selective sensor fusion, which dynamically adapts based on data from the sensors. Our method uses motion to determine the context of the system and learns to adjust the fused sensors accordingly, improving performance while maintaining energy efficiency. SELF-CARE obtains state-of-the-art performance on the publicly available WESAD dataset, achieving 86.34% and 94.12% accuracy for the 3-class and 2-class classification problems, respectively. Evaluation on real hardware shows that our approach achieves up to 2.2x (3-class) and 2.7x (2-class) higher energy efficiency than traditional sensor fusion.

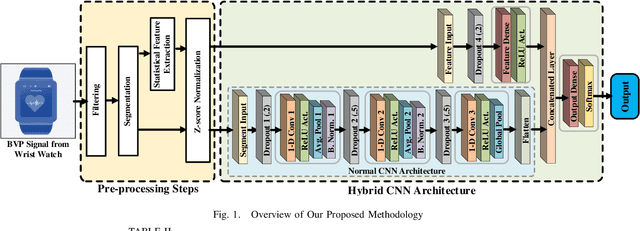

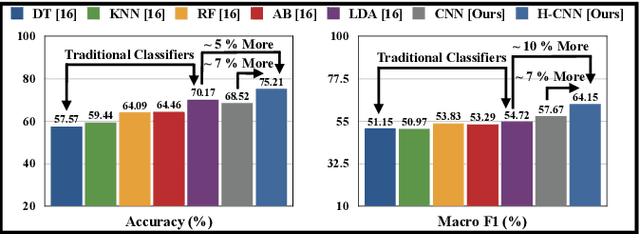

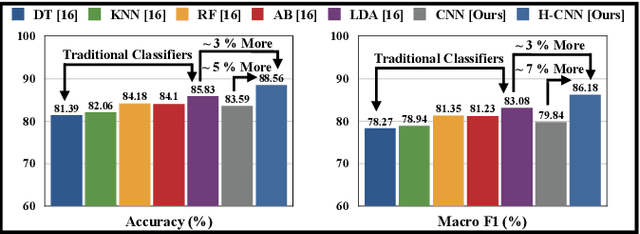

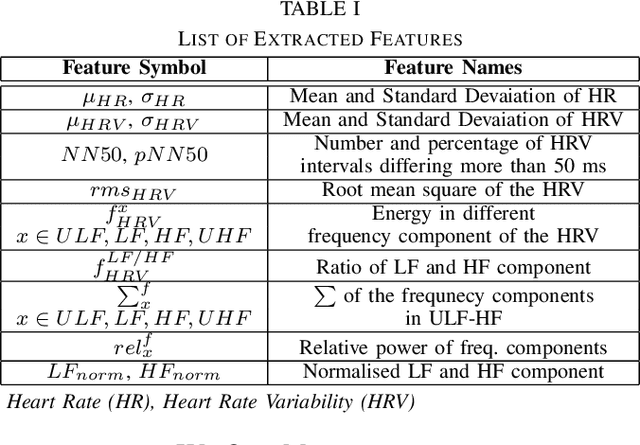

Feature Augmented Hybrid CNN for Stress Recognition Using Wrist-based Photoplethysmography Sensor

Aug 02, 2021

Abstract: Stress is a physiological state that hampers mental health and has serious consequences for physical health. Moreover, the COVID-19 pandemic has increased stress levels among people across the globe. Therefore, continuous monitoring and detection of stress are necessary. Recent advances in wearable devices have enabled the monitoring of several physiological signals related to stress. Among these devices, wrist-worn wearables such as smartwatches are the most popular because they are convenient to use, and the photoplethysmography (PPG) sensor is the most prevalent sensor, found in almost all consumer-grade wrist-worn smartwatches. Therefore, this paper focuses on detecting stress with a wrist-based PPG sensor that collects Blood Volume Pulse (BVP) signals, an approach that may be applicable to consumer-grade smartwatches. State-of-the-art works have used either classical machine learning algorithms with hand-crafted features or deep learning algorithms such as Convolutional Neural Networks (CNNs), which extract features automatically. This paper proposes a novel hybrid CNN (H-CNN) classifier that uses both hand-crafted features and features automatically extracted by a CNN to detect stress from the BVP signal. Evaluation on the benchmark WESAD dataset shows that, for 3-class classification (Baseline vs. Stress vs. Amusement), our proposed H-CNN outperforms traditional classifiers and a standard CNN by 5% and 7% in accuracy, and by 10% and 7% in macro F1 score, respectively. For 2-class classification (Stress vs. Non-stress), our proposed H-CNN outperforms traditional classifiers and a standard CNN by 3% and ~5% in accuracy, and by ~3% and ~7% in macro F1 score, respectively.
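The hybrid idea of combining hand-crafted and automatically extracted features can be sketched in plain Python. This is an illustration under stated assumptions, not the H-CNN architecture: the three statistics in `handcrafted_features` are hypothetical choices, and a single fixed-weight 1-D convolution filter with ReLU and global max pooling stands in for trained convolutional layers. In a real model, the filter weights and the classifier head that consumes the concatenated vector would be learned.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def handcrafted_features(bvp: Sequence[float]) -> List[float]:
    """Illustrative hand-crafted statistics of a BVP window (hypothetical choice)."""
    return [mean(bvp), pstdev(bvp), max(bvp) - min(bvp)]


def conv_feature(signal: Sequence[float], kernel: Sequence[float]) -> float:
    """One CNN-style feature: 'valid' 1-D convolution (cross-correlation, as in
    deep learning libraries), ReLU, then global max pooling."""
    k = len(kernel)
    activations = [
        max(0.0, sum(signal[i + j] * kernel[j] for j in range(k)))
        for i in range(len(signal) - k + 1)
    ]
    return max(activations)


def hybrid_features(bvp: Sequence[float], kernels: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate hand-crafted and convolutional features into one vector,
    which a classifier head (not shown) would map to stress classes."""
    learned = [conv_feature(bvp, w) for w in kernels]
    return handcrafted_features(bvp) + learned


bvp_window = [0.0, 1.0, 0.0, -1.0, 0.0]
kernels = [[1.0, -1.0], [-1.0, 1.0]]  # hypothetical falling-edge and rising-edge filters
print(hybrid_features(bvp_window, kernels))
```

Concatenation is the simplest way to let one classifier see both feature sets; where and how the two branches are merged in H-CNN itself is not specified here.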

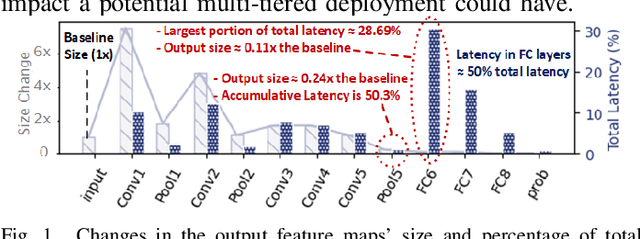

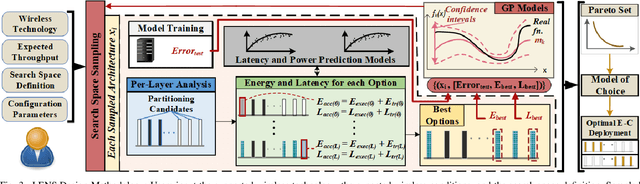

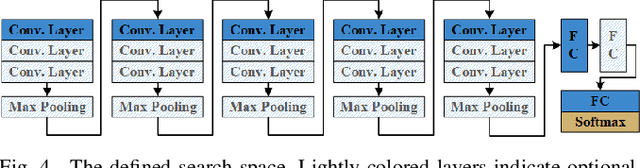

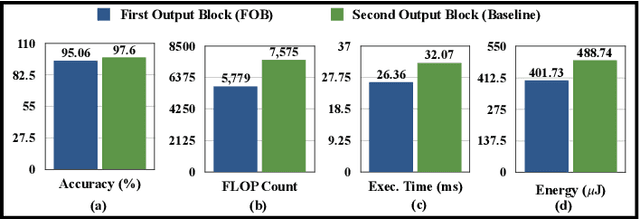

LENS: Layer Distribution Enabled Neural Architecture Search in Edge-Cloud Hierarchies

Jul 20, 2021

Abstract: Edge-cloud hierarchical systems that employ intelligence through Deep Neural Networks (DNNs) face the dilemma of how to distribute workloads within them. Previous solutions proposed distributing workloads at runtime according to the state of the surroundings, such as wireless conditions. However, such conditions are usually overlooked at design time. This paper addresses this issue for DNN architectural design by presenting a novel methodology, LENS, which performs multi-objective Neural Architecture Search (NAS) for two-tiered systems, where the performance objectives are reformulated to account for wireless communication parameters. On our experimental search space, we demonstrate that LENS improves upon the traditional solution's Pareto set by 76.47% and 75% with respect to the energy and latency metrics, respectively.
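Why wireless parameters belong in the search objectives can be seen with a small cost model for splitting a network between an edge device and the cloud. The sketch below is hypothetical and much simpler than LENS: it assumes additive per-layer latency and energy, a transmission cost proportional to the bytes sent at the cut, and made-up layer profiles. It scores every split point of one candidate network and keeps the Pareto-optimal ones in (edge energy, latency), both minimized.

```python
from typing import Dict, List, Sequence, Tuple

# Per-layer profile: (edge_ms, edge_mj, cloud_ms, out_bytes). All values are hypothetical.
Layer = Tuple[float, float, float, int]


def evaluate_split(layers: Sequence[Layer], input_bytes: int, bandwidth_bps: float,
                   tx_power_w: float, split: int) -> Tuple[float, float]:
    """Return (edge energy in mJ, end-to-end latency in ms) when layers[:split]
    run on the edge device and layers[split:] run in the cloud."""
    n = len(layers)
    if split == n:
        tx_bytes = 0                     # edge-only: nothing is offloaded
    elif split == 0:
        tx_bytes = input_bytes           # cloud-only: send the raw input
    else:
        tx_bytes = layers[split - 1][3]  # send the activation at the cut
    tx_ms = tx_bytes * 8000 / bandwidth_bps  # bytes -> bits, seconds -> ms
    edge_ms = sum(layer[0] for layer in layers[:split])
    edge_mj = sum(layer[1] for layer in layers[:split])
    cloud_ms = sum(layer[2] for layer in layers[split:])
    energy_mj = edge_mj + tx_power_w * tx_ms  # W * ms = mJ
    return energy_mj, edge_ms + tx_ms + cloud_ms


def pareto_front(candidates: Dict[int, Tuple[float, float]]) -> List[int]:
    """Keep candidates not dominated in (energy, latency), both minimized."""
    front = []
    for name, (e, t) in candidates.items():
        dominated = any(e2 <= e and t2 <= t and (e2, t2) != (e, t)
                        for e2, t2 in candidates.values())
        if not dominated:
            front.append(name)
    return front


layers: List[Layer] = [
    (10, 4, 1, 4000),
    (20, 6, 2, 1000),
    (50, 5, 2, 10),
]
# 8000-byte input, 1 Mbit/s uplink, 1 W radio power (all assumed).
candidates = {s: evaluate_split(layers, 8000, 1e6, 1.0, s) for s in range(len(layers) + 1)}
print(candidates)
print(pareto_front(candidates))  # [2, 3]
```

Re-evaluating with a different `bandwidth_bps` changes the transmission terms and can therefore change which split points survive, which is the kind of dependence on wireless conditions that a design-time search can take into account.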

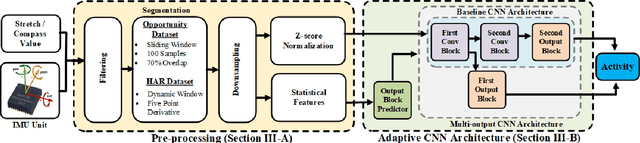

AHAR: Adaptive CNN for Energy-efficient Human Activity Recognition in Low-power Edge Devices

Feb 03, 2021

Abstract: Human Activity Recognition (HAR) is one of the key applications of health monitoring and requires continuous use of wearable devices to track daily activities. State-of-the-art works using wearable devices have followed a fog/cloud computing architecture in which the data is classified on mobile phones or remote servers. This kind of approach suffers from energy, latency, and privacy issues. Therefore, we follow an edge computing architecture in which the wearable device solutions provide adequate performance while being energy- and memory-efficient. This paper proposes an Adaptive CNN for energy-efficient HAR (AHAR) suitable for low-power edge devices. AHAR uses a novel adaptive architecture that selects a portion of the baseline architecture to use during the inference phase. We validate our methodology by classifying locomotion activities from two datasets, Opportunity and w-HAR. Compared to the fog/cloud computing approaches on the Opportunity dataset, our baseline and adaptive architectures achieve comparable weighted F1 scores of 91.79% and 91.57%, respectively. On the w-HAR dataset, our baseline and adaptive architectures outperform the state-of-the-art works with weighted F1 scores of 97.55% and 97.64%, respectively. Evaluation on real hardware shows that our baseline architecture is significantly more energy-efficient (422.38x less energy) and memory-efficient (14.29x less memory) than the works on the Opportunity dataset. On the w-HAR dataset, our baseline architecture requires 2.04x less energy and 2.18x less memory than the state-of-the-art work. Moreover, experimental results show that our adaptive architecture is 12.32% (Opportunity) and 11.14% (w-HAR) more energy-efficient than our baseline while providing similar (Opportunity) or better (w-HAR) performance with no significant memory overhead.
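The HAR results above are reported as weighted F1 scores, while the H-CNN abstract reports macro F1. A minimal sketch of the difference, assuming the common definitions: macro F1 averages the per-class F1 scores equally, whereas weighted F1 weights each class by its support (its number of true samples), so frequent classes dominate. The activity labels in the example are hypothetical. Unlike scikit-learn's `f1_score`, which by default uses the union of labels in `y_true` and `y_pred`, this sketch averages only over classes present in `y_true`, so macro F1 can differ when a class appears only in the predictions.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def f1_scores(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> Tuple[float, float]:
    """Return (macro F1, weighted F1) over the classes present in y_true."""
    support = Counter(y_true)  # number of true samples per class
    per_class = {}
    for c in support:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = support[c] - tp
        # F1 = 2TP / (2TP + FP + FN); the denominator is >= 1 since support[c] >= 1.
        per_class[c] = 2 * tp / (2 * tp + fp + fn)
    macro = sum(per_class.values()) / len(per_class)
    weighted = sum(per_class[c] * support[c] for c in per_class) / len(y_true)
    return macro, weighted


# Hypothetical imbalanced activity labels: 8 "walk" windows, 2 "sit" windows.
y_true = ["walk"] * 8 + ["sit"] * 2
y_pred = ["walk"] * 8 + ["walk", "sit"]
macro, weighted = f1_scores(y_true, y_pred)
print(f"macro F1 = {macro:.4f}, weighted F1 = {weighted:.4f}")
```

With one of the two minority-class windows misclassified, the weighted score (about 0.886) stays higher than the macro score (about 0.804) because the well-classified majority class carries most of the weight.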
