Mohammad A. Al Faruque

Spatio-Temporal Scene-Graph Embedding for Autonomous Vehicle Collision Prediction

Nov 11, 2021

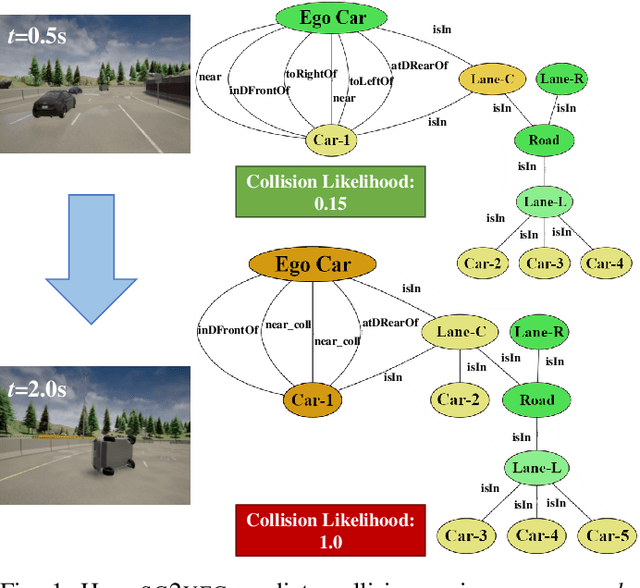

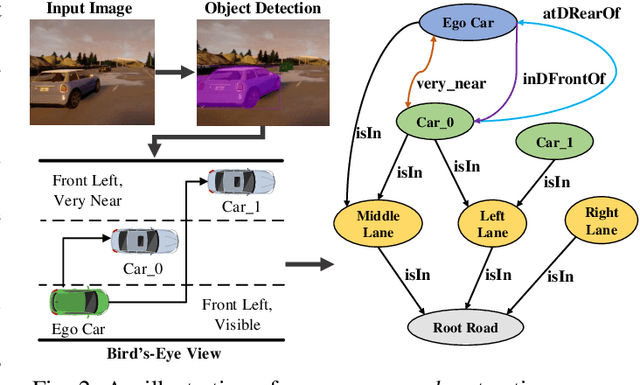

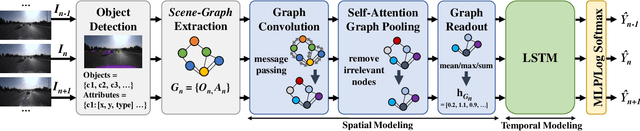

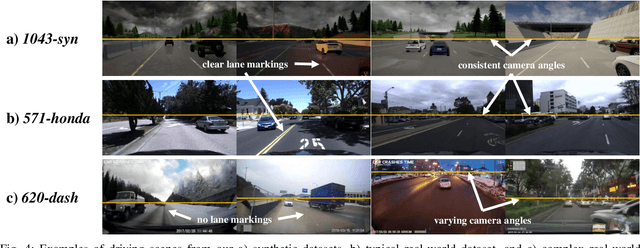

Abstract: In autonomous vehicles (AVs), early warning systems rely on collision prediction to ensure occupant safety. However, state-of-the-art methods using deep convolutional networks either fail at modeling collisions or are too expensive/slow, making them less suitable for deployment on AV edge hardware. To address these limitations, we propose sg2vec, a spatio-temporal scene-graph embedding methodology that uses Graph Neural Network (GNN) and Long Short-Term Memory (LSTM) layers to predict future collisions via visual scene perception. We demonstrate that sg2vec predicts collisions 8.11% more accurately and 39.07% earlier than the state-of-the-art method on synthesized datasets, and 29.47% more accurately on a challenging real-world collision dataset. We also show that sg2vec is better than the state-of-the-art at transferring knowledge from synthetic datasets to real-world driving datasets. Finally, we demonstrate that sg2vec performs inference 9.3x faster with an 88.0% smaller model, 32.4% less power, and 92.8% less energy than the state-of-the-art method on the industry-standard Nvidia DRIVE PX 2 platform, making it more suitable for implementation on the edge.
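The efficiency figures in this abstract can be roughly cross-checked against each other, since energy per inference is average power multiplied by inference time. The sketch below assumes that the "92.8% less energy" figure refers to energy per inference and that the 9.3x speedup and 32.4% power reduction were measured under the same conditions; the function name is illustrative and not taken from the paper.

```python
# Rough consistency check: energy = average power x inference time.
# Input ratios are taken from the abstract; treating them as per-inference
# figures measured under the same conditions is an assumption.

def relative_energy(speedup: float, power_reduction: float) -> float:
    """Return energy per inference relative to the baseline (1.0 = equal)."""
    relative_time = 1.0 / speedup            # 9.3x faster -> 1/9.3 of the time
    relative_power = 1.0 - power_reduction   # 32.4% less power -> 0.676
    return relative_power * relative_time

ratio = relative_energy(speedup=9.3, power_reduction=0.324)
energy_saving_pct = (1.0 - ratio) * 100.0
print(f"Implied energy saving: {energy_saving_pct:.1f}%")
```

The implied saving is about 92.7%, which agrees with the reported 92.8% to within the rounding of the input figures.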

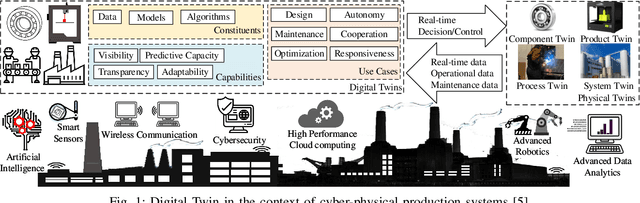

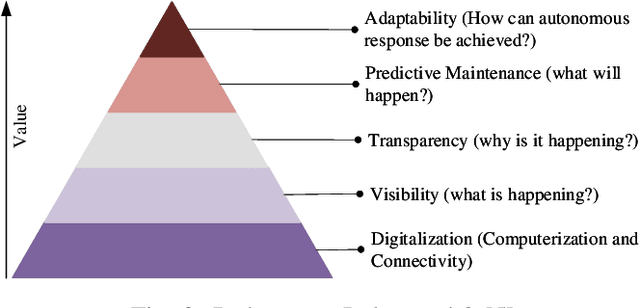

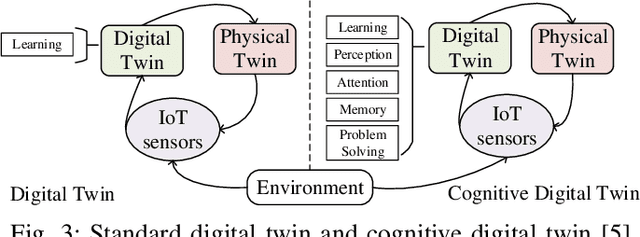

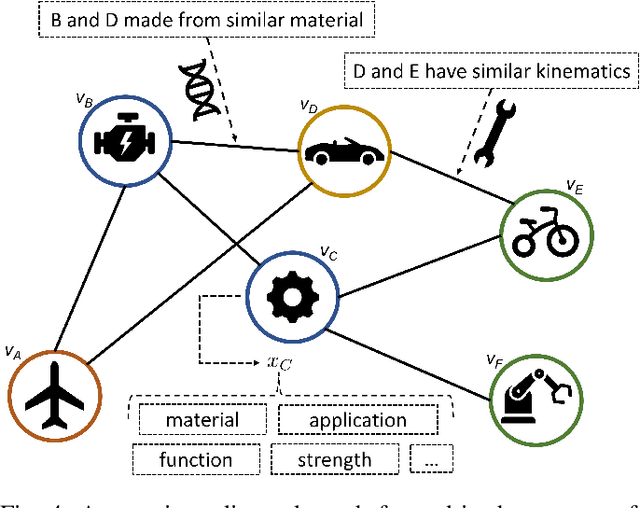

Graph Learning for Cognitive Digital Twins in Manufacturing Systems

Sep 17, 2021

Abstract: Future manufacturing requires complex systems that connect simulation platforms and virtualization with physical data from industrial processes. Digital twins incorporate a physical twin, a digital twin, and the connection between the two. Benefits of using digital twins, especially in manufacturing, are abundant as they can increase efficiency across an entire manufacturing life-cycle. The digital twin concept has become increasingly sophisticated and capable over time, enabled by advances in many technologies. In this paper, we detail the cognitive digital twin as the next stage of advancement of a digital twin that will help realize the vision of Industry 4.0. Cognitive digital twins will allow enterprises to creatively, effectively, and efficiently exploit implicit knowledge drawn from the experience of existing manufacturing systems. They also enable more autonomous decisions and control, while improving performance across the enterprise (at scale). This paper presents graph learning as one potential pathway towards enabling cognitive functionalities in manufacturing digital twins. We also present a novel graph-learning approach to realizing cognitive digital twins in the product design stage of manufacturing.

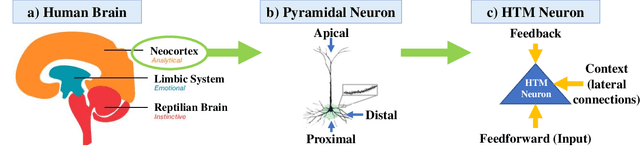

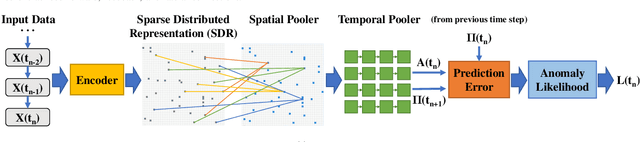

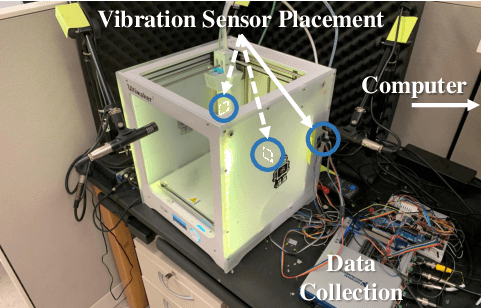

Neuroscience-Inspired Algorithms for the Predictive Maintenance of Manufacturing Systems

Feb 23, 2021

Abstract: If machine failures can be detected preemptively, then maintenance and repairs can be performed more efficiently, reducing production costs. Many machine learning techniques for performing early failure detection using vibration data have been proposed; however, these methods are often power- and data-hungry and susceptible to noise, and they require large amounts of data preprocessing. Also, training is usually only performed once before inference, so they do not learn and adapt as the machine ages. Thus, we propose a method of performing online, real-time anomaly detection for predictive maintenance using Hierarchical Temporal Memory (HTM). Inspired by the human neocortex, HTMs learn and adapt continuously and are robust to noise. Using the Numenta Anomaly Benchmark, we empirically demonstrate that our approach outperforms state-of-the-art algorithms at preemptively detecting real-world cases of bearing failures and simulated 3D printer failures. Our approach achieves an average score of 64.71, surpassing state-of-the-art deep-learning (49.38) and statistical (61.06) methods.

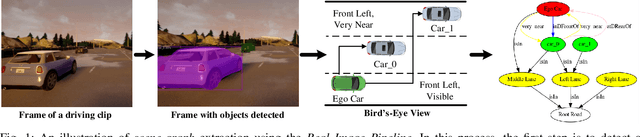

Scene-Graph Augmented Data-Driven Risk Assessment of Autonomous Vehicle Decisions

Aug 31, 2020

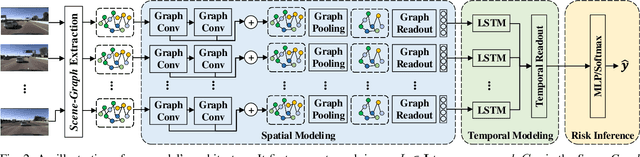

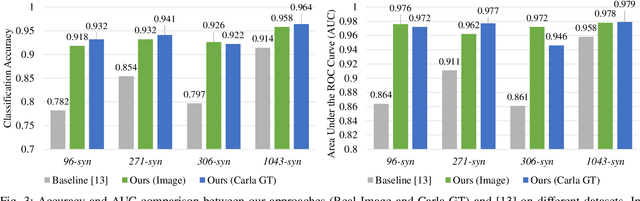

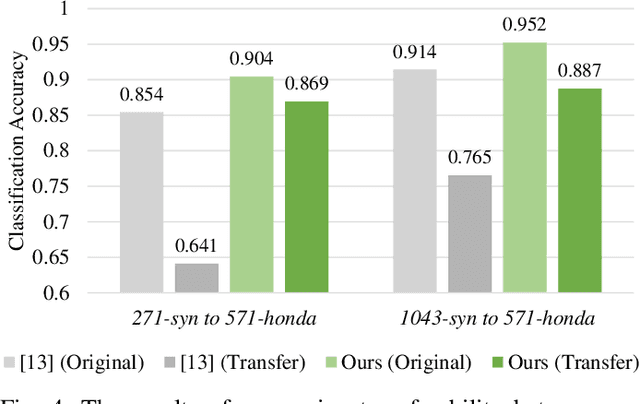

Abstract: Despite impressive advancements in Autonomous Driving Systems (ADS), navigation in complex road conditions remains a challenging problem. There is considerable evidence that evaluating the subjective risk level of various decisions can improve ADS' safety in both normal and complex driving scenarios. However, existing deep learning-based methods often fail to model the relationships between traffic participants and can suffer when faced with complex real-world scenarios. Besides, these methods lack transferability and explainability. To address these limitations, we propose a novel data-driven approach that uses scene-graphs as intermediate representations. Our approach includes a Multi-Relation Graph Convolution Network, a Long Short-Term Memory network, and attention layers for modeling the subjective risk of driving maneuvers. To train our model, we formulate this task as a supervised scene classification problem. We consider a typical use case to demonstrate our model's capabilities: lane changes. We show that our approach achieves a higher classification accuracy than the state-of-the-art approach on both large (96.4% vs. 91.2%) and small (91.8% vs. 71.2%) synthesized datasets, also illustrating that our approach can learn effectively even from smaller datasets. We also show that our model trained on a synthesized dataset achieves an average accuracy of 87.8% when tested on a real-world dataset, compared to the 70.3% accuracy achieved by the state-of-the-art model trained on the same synthesized dataset, showing that our approach can more effectively transfer knowledge. Finally, we demonstrate that the use of spatial and temporal attention layers improves our model's performance by 2.7% and 0.7%, respectively, and increases its explainability.
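To make the idea of a scene-graph as an intermediate representation more concrete, the following is a minimal sketch of one frame encoded as nodes with typed relations, followed by a single unweighted, relation-wise neighbor aggregation. It is not the authors' Multi-Relation Graph Convolution Network: there are no learned weights, no LSTM, and no attention, and the node names, relation types, and feature vectors are all hypothetical.

```python
from collections import defaultdict

# Hypothetical scene-graph for a single frame. Nodes are traffic participants
# or road elements with toy 2-D features; edges are (source, relation, target).
nodes = {
    "ego": [1.0, 0.0],
    "car_1": [0.0, 1.0],
    "lane_left": [0.5, 0.5],
}
edges = [
    ("ego", "near", "car_1"),
    ("car_1", "near", "ego"),
    ("ego", "is_in", "lane_left"),
]

def relation_aggregate(nodes, edges):
    """One simplified message-passing step.

    For each node, average incoming neighbor features separately per
    relation type, sum those averages, and add the node's own features.
    """
    incoming = defaultdict(lambda: defaultdict(list))
    for src, rel, dst in edges:
        incoming[dst][rel].append(nodes[src])

    updated = {}
    for name, feat in nodes.items():
        agg = list(feat)  # start from the node's own features
        for msgs in incoming[name].values():
            for i in range(len(feat)):
                agg[i] += sum(m[i] for m in msgs) / len(msgs)
        updated[name] = agg
    return updated

embeddings = relation_aggregate(nodes, edges)
for name, vec in embeddings.items():
    print(name, vec)
```

In a learned model, each relation type would typically have its own weight matrix, and the per-frame graph embeddings would then be passed through a temporal model to classify the maneuver.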
