Junlin He

Solving Oversmoothing in GNNs via Nonlocal Message Passing: Algebraic Smoothing and Depth Scalability

Dec 10, 2025

Abstract: The relationship between Layer Normalization (LN) placement and the oversmoothing phenomenon remains underexplored. We identify a critical dilemma: Pre-LN architectures avoid oversmoothing but suffer from the curse of depth, while Post-LN architectures bypass the curse of depth but experience oversmoothing. To resolve this, we propose a new method based on Post-LN that induces algebraic smoothing, preventing oversmoothing without the curse of depth. Empirical results across five benchmarks demonstrate that our approach supports deeper networks (up to 256 layers) and improves performance while requiring no additional parameters. Key contributions: (1) Theoretical characterization: an analysis of LN dynamics and their impact on oversmoothing and the curse of depth. (2) A principled solution: a parameter-efficient method that induces algebraic smoothing and avoids both oversmoothing and the curse of depth. (3) Empirical validation: extensive experiments showing the effectiveness of the method in deeper GNNs.

Preventing Dimensional Collapse in Self-Supervised Learning via Orthogonality Regularization

Nov 01, 2024

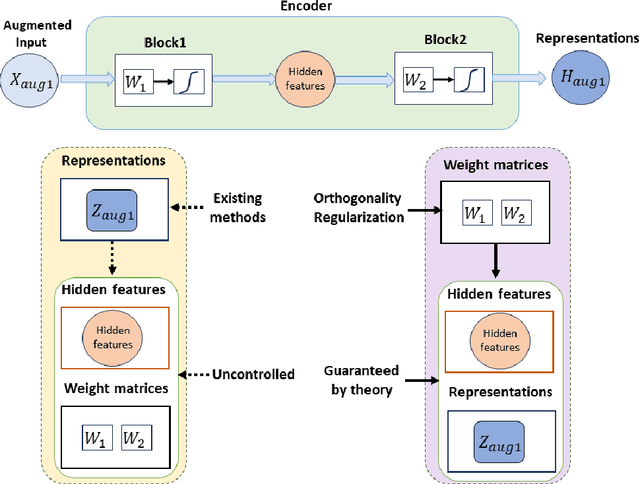

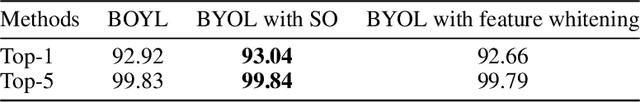

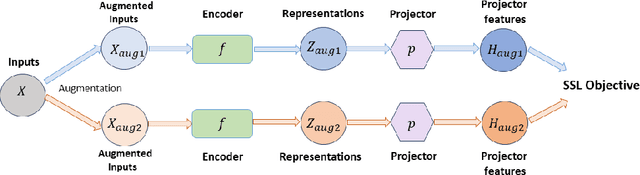

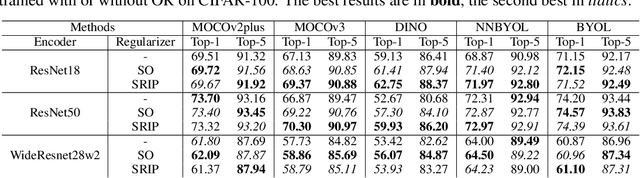

Abstract: Self-supervised learning (SSL) has rapidly advanced in recent years, approaching the performance of its supervised counterparts through the extraction of representations from unlabeled data. However, dimensional collapse, where a few large eigenvalues dominate the eigenspace, poses a significant obstacle for SSL. When dimensional collapse occurs on features (e.g., hidden features and representations), it prevents features from representing the full information of the data; when dimensional collapse occurs on weight matrices, their filters are self-related and redundant, limiting their expressive power. Existing studies have predominantly concentrated on the dimensional collapse of representations, without examining whether this is sufficient to prevent the dimensional collapse of the weight matrices and hidden features. To this end, we propose, for the first time, a mitigation approach that applies orthogonal regularization (OR) across the encoder, targeting both convolutional and linear layers during pretraining. OR promotes orthogonality within weight matrices, thus safeguarding against the dimensional collapse of weight matrices, hidden features, and representations. Our empirical investigations demonstrate that OR significantly enhances the performance of SSL methods across diverse benchmarks, yielding consistent gains with both CNNs and Transformer-based architectures.
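To make the idea of promoting orthogonality within a weight matrix concrete, the following is a minimal sketch of one common form of orthogonality penalty, the soft orthogonality term ||W Wᵀ - I||²_F. The abstract does not state which OR variant the paper uses, so this choice, the function name `soft_orthogonality_penalty`, and the toy matrices are all assumptions for illustration.

```python
def soft_orthogonality_penalty(weight):
    """Return ||W W^T - I||_F^2 for a weight matrix given as a list of rows.

    Assumption: this soft-orthogonality form is used only for illustration;
    the abstract does not specify the exact regularizer.
    """
    rows = len(weight)
    penalty = 0.0
    for i in range(rows):
        for j in range(rows):
            # Entry (i, j) of the Gram matrix W W^T.
            dot = sum(a * b for a, b in zip(weight[i], weight[j]))
            target = 1.0 if i == j else 0.0
            penalty += (dot - target) ** 2
    return penalty


# Two orthonormal filters incur no penalty.
orthogonal = [[1.0, 0.0], [0.0, 1.0]]
# Two identical filters are redundant and are penalized.
redundant = [[1.0, 0.0], [1.0, 0.0]]

penalty_orthogonal = soft_orthogonality_penalty(orthogonal)
penalty_redundant = soft_orthogonality_penalty(redundant)
print(penalty_orthogonal, penalty_redundant)
```

In a training loop, a term of this kind would typically be summed over the encoder's layers and added to the SSL loss with a small weighting coefficient; that coefficient and the layer selection are hyperparameters not given in the abstract.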

Preventing Model Collapse in Deep Canonical Correlation Analysis by Noise Regularization

Nov 01, 2024

Abstract: Multi-View Representation Learning (MVRL) aims to learn a unified representation of an object from multi-view data. Deep Canonical Correlation Analysis (DCCA) and its variants share simple formulations and demonstrate state-of-the-art performance. However, with extensive experiments, we observe the issue of model collapse, i.e., the performance of DCCA-based methods will drop drastically when training proceeds. The model collapse issue could significantly hinder the wide adoption of DCCA-based methods because it is challenging to decide when to early stop. To this end, we develop NR-DCCA, which is equipped with a novel noise regularization approach to prevent model collapse. Theoretical analysis shows that the Correlation Invariant Property is the key to preventing model collapse, and our noise regularization forces the neural network to possess such a property. A framework to construct synthetic data with different common and complementary information is also developed to compare MVRL methods comprehensively. The developed NR-DCCA outperforms baselines stably and consistently in both synthetic and real-world datasets, and the proposed noise regularization approach can also be generalized to other DCCA-based methods such as DGCCA.

Joint Estimation and Prediction of City-wide Delivery Demand: A Large Language Model Empowered Graph-based Learning Approach

Aug 30, 2024

Abstract: The proliferation of e-commerce and urbanization has significantly intensified delivery operations in urban areas, boosting the volume and complexity of delivery demand. Data-driven predictive methods, especially those utilizing machine learning techniques, have emerged to handle these complexities in urban delivery demand management problems. One particularly pressing problem that has not yet been sufficiently studied is the joint estimation and prediction of city-wide delivery demand. To this end, we formulate this problem as a graph-based spatiotemporal learning task. First, a message-passing neural network model is formalized to capture the interaction between demand patterns of associated regions. Second, by exploiting recent advances in large language models, we extract general geospatial knowledge encodings from the unstructured locational data and integrate them into the demand predictor. Last, to encourage the cross-city transferability of the model, an inductive training scheme is developed in an end-to-end routine. Extensive empirical results on two real-world delivery datasets, including eight cities in China and the US, demonstrate that our model significantly outperforms state-of-the-art baselines in these challenging tasks.

Geolocation Representation from Large Language Models are Generic Enhancers for Spatio-Temporal Learning

Aug 22, 2024

Abstract: In the geospatial domain, universal representation models are far less prevalent than in natural language processing and computer vision. This discrepancy arises primarily from the high cost of the inputs that existing representation models require, which often include street views and mobility data. To address this, we develop a novel, training-free method that leverages large language models (LLMs) and auxiliary map data from OpenStreetMap to derive geolocation representations (LLMGeovec). LLMGeovec can represent geographic semantics at city, country, and global scales, and acts as a generic enhancer for spatio-temporal learning. Specifically, by direct feature concatenation, we introduce a simple yet effective paradigm for enhancing multiple spatio-temporal tasks including geographic prediction (GP), long-term time series forecasting (LTSF), and graph-based spatio-temporal forecasting (GSTF). LLMGeovec can seamlessly integrate into a wide spectrum of spatio-temporal learning models, providing immediate enhancements. Experimental results demonstrate that LLMGeovec achieves global coverage and significantly boosts the performance of leading GP, LTSF, and GSTF models.
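The direct feature concatenation mentioned in the abstract can be sketched as follows. This is only an illustrative assumption of what such an enhancement step might look like: the function name `concat_geo_features`, the per-location layout, and the toy vectors are hypothetical, and real geolocation embeddings would come from an LLM rather than being written by hand.

```python
def concat_geo_features(task_features, geo_embeddings):
    """Append a geolocation embedding to each location's task features.

    Both arguments are lists with one vector (list of floats) per location.
    Hypothetical helper: names and shapes are assumptions, not the paper's API.
    """
    if len(task_features) != len(geo_embeddings):
        raise ValueError("need exactly one geolocation embedding per location")
    return [list(x) + list(g) for x, g in zip(task_features, geo_embeddings)]


# Toy example: 2 locations, 2 task features each, 3-dimensional embeddings.
task_features = [[0.2, 0.5], [0.7, 0.1]]
geo_embeddings = [[0.1, 0.9, 0.3], [0.4, 0.4, 0.8]]

enhanced = concat_geo_features(task_features, geo_embeddings)
print(enhanced)
```

Because the embeddings are only appended to the input, the downstream model needs no structural change beyond a wider input layer, which is consistent with the abstract's description of a training-free, plug-in enhancer.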

HGARN: Hierarchical Graph Attention Recurrent Network for Human Mobility Prediction

Oct 14, 2022

Abstract: Human mobility prediction is a fundamental task essential for various applications, including urban planning, transportation services, and location recommendation. Existing approaches often ignore activity information crucial for reasoning about human preferences and routines, or adopt a simplified representation of the dependencies between time, activities and locations. To address these issues, we present Hierarchical Graph Attention Recurrent Network (HGARN) for human mobility prediction. Specifically, we construct a hierarchical graph based on all users' historical mobility records and employ a Hierarchical Graph Attention Module to capture complex time-activity-location dependencies. This way, HGARN can learn representations with rich contextual semantics to model user preferences at the global level. We also propose a model-agnostic history-enhanced confidence (MaHec) label to focus our model on each user's individual-level preferences. Finally, we introduce a Recurrent Encoder-Decoder Module, which employs recurrent structures to jointly predict users' next activities (as an auxiliary task) and locations. For model evaluation, we test the performance of HGARN against existing SOTAs in recurring and explorative settings. The recurring setting focuses more on assessing models' capabilities to capture users' individual-level preferences. In contrast, the results in the explorative setting tend to reflect the power of different models to learn users' global-level preferences. Overall, our model outperforms other baselines significantly in the main, recurring, and explorative settings based on two real-world human mobility data benchmarks. Source codes of HGARN are available at https://github.com/YihongT/HGARN.

HQANN: Efficient and Robust Similarity Search for Hybrid Queries with Structured and Unstructured Constraints

Jul 16, 2022

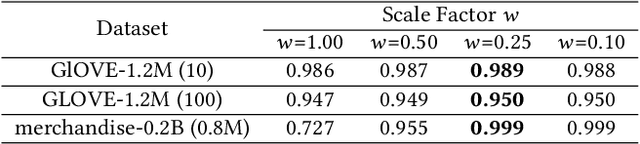

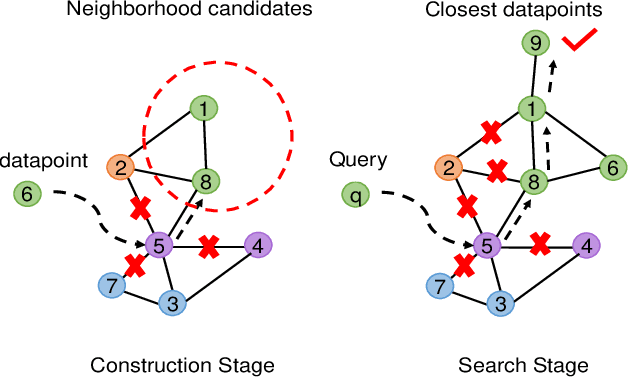

Abstract: In-memory approximate nearest neighbor search (ANNS) algorithms have achieved great success for fast high-recall query processing, but are extremely inefficient when handling hybrid queries with unstructured (i.e., feature vectors) and structured (i.e., related attributes) constraints. In this paper, we present HQANN, a simple yet highly efficient hybrid query processing framework which can be easily embedded into existing proximity graph-based ANNS algorithms. We guarantee both low latency and high recall by leveraging navigation sense among attributes and fusing vector similarity search with attribute filtering. Experimental results on both public and in-house datasets demonstrate that HQANN is 10x faster than the state-of-the-art hybrid ANNS solutions at the same recall quality, and that its performance is hardly affected by the complexity of attributes. It can reach 99% recall@10 in around 50 microseconds on GLOVE-1.2M with thousands of attribute constraints.
