June-Woo Kim

Understanding Frechet Speech Distance for Synthetic Speech Quality Evaluation

Jan 29, 2026

Abstract:Objective evaluation of synthetic speech quality remains a critical challenge. Human listening tests are the gold standard, but costly and impractical at scale. Fréchet Distance has emerged as a promising alternative, yet its reliability depends heavily on the choice of embeddings and experimental settings. In this work, we comprehensively evaluate Fréchet Speech Distance (FSD) and its variant Speech Maximum Mean Discrepancy (SMMD) under varied embeddings and conditions. We further incorporate human listening evaluations alongside TTS intelligibility and synthetic-trained ASR WER to validate the perceptual relevance of these metrics. Our findings show that WavLM Base+ features yield the most stable alignment with human ratings. While FSD and SMMD cannot fully replace subjective evaluation, we show that they can serve as complementary, cost-efficient, and reproducible measures, particularly useful when large-scale or direct listening assessments are infeasible. Code is available at https://github.com/kaen2891/FrechetSpeechDistance.
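The abstract does not spell out how the distance is computed. As a rough sketch, assuming FSD follows the usual Gaussian Fréchet distance known from FID (fit a mean and covariance to each embedding set, then compare them), the computation might look like the following. The function name and the NumPy-only eigenvalue route are illustrative choices, not the authors' released code.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two embedding sets.

    feats_a, feats_b: arrays of shape (num_samples, dim), with dim >= 2.
    Assumption: embeddings (e.g. from a speech encoder) are already extracted.
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)

    # tr((cov_a cov_b)^{1/2}) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative for
    # positive semi-definite inputs (up to numerical noise, hence the clip).
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)
```

Two identical embedding sets give a distance of roughly zero, and shifting one set by a constant vector adds the squared length of that shift, which is a quick sanity check before applying the metric to real and synthetic speech embeddings.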

Improving Respiratory Sound Classification with Architecture-Agnostic Knowledge Distillation from Ensembles

May 28, 2025

Abstract:Respiratory sound datasets are limited in size and quality, making high performance difficult to achieve. Ensemble models help but inevitably increase compute cost at inference time. Soft label training distills knowledge efficiently with extra cost only at training. In this study, we explore soft labels for respiratory sound classification as an architecture-agnostic approach to distill an ensemble of teacher models into a student model. We examine different variations of our approach and find that even a single teacher, identical to the student, considerably improves performance beyond its own capability, with optimal gains achieved using only a few teachers. We achieve the new state-of-the-art Score of 64.39 on ICBHI, surpassing the previous best by 0.85 and improving average Scores across architectures by more than 1.16. Our results highlight the effectiveness of knowledge distillation with soft labels for respiratory sound classification, regardless of size or architecture.
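One common way to realize soft label distillation from an ensemble is sketched below. It assumes the teachers' class probabilities are simply averaged and the student is trained with cross-entropy against that average. The averaging rule, the optional temperature, and all function names are assumptions for illustration; the abstract does not specify them.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over the last axis."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def ensemble_soft_labels(teacher_logits, temperature=1.0):
    """Average teacher probabilities into one soft label per sample.

    teacher_logits: array of shape (num_teachers, batch, num_classes).
    """
    return softmax(teacher_logits, temperature).mean(axis=0)

def soft_label_loss(student_logits, soft_labels):
    """Mean cross-entropy between student predictions and soft labels."""
    log_p = np.log(softmax(student_logits) + 1e-12)
    return float(-(soft_labels * log_p).sum(axis=-1).mean())
```

Because the soft labels are computed once from the teachers, the student's architecture never enters the label computation, which is what makes this kind of distillation architecture-agnostic and free of extra inference cost.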

Language-Agnostic Suicidal Risk Detection Using Large Language Models

May 26, 2025

Abstract:Suicidal risk detection in adolescents is a critical challenge, yet existing methods rely on language-specific models, limiting scalability and generalization. This study introduces a novel language-agnostic framework for suicidal risk assessment with large language models (LLMs). We generate Chinese transcripts from speech using an ASR model and then employ LLMs with prompt-based queries to extract suicidal risk-related features from these transcripts. The extracted features are retained in both Chinese and English to enable cross-linguistic analysis and then used to fine-tune corresponding pretrained language models independently. Experimental results show that our method achieves performance comparable to direct fine-tuning with ASR results or to models trained solely on Chinese suicidal risk-related features, demonstrating its potential to overcome language constraints and improve the robustness of suicidal risk assessment.

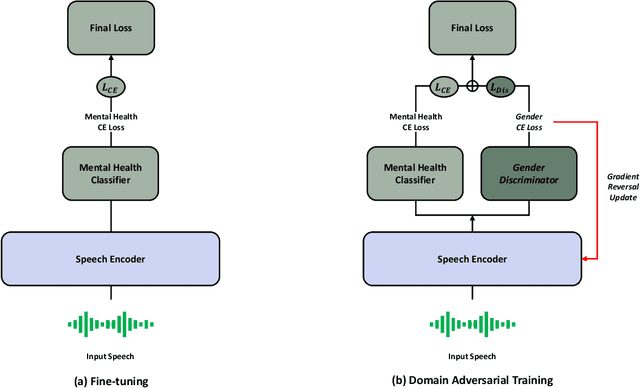

Domain Adversarial Training for Mitigating Gender Bias in Speech-based Mental Health Detection

May 06, 2025

Abstract:Speech-based AI models are emerging as powerful tools for detecting depression and the presence of post-traumatic stress disorder (PTSD), offering a non-invasive and cost-effective way to assess mental health. However, these models often struggle with gender bias, which can lead to unfair and inaccurate predictions. This study addresses the issue by introducing a domain adversarial training approach that explicitly considers gender differences in speech-based depression and PTSD detection. Specifically, we treat different genders as distinct domains and integrate this information into a pretrained speech foundation model. We then validate its effectiveness on the E-DAIC dataset to assess its impact on performance. Experimental results show that our method notably improves detection performance, increasing the F1-score by up to 13.29 percentage points compared to the baseline. This highlights the importance of addressing demographic disparities in AI-driven mental health assessment.

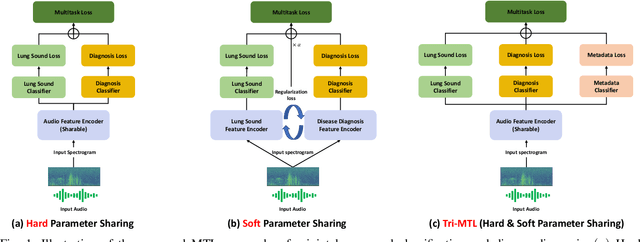

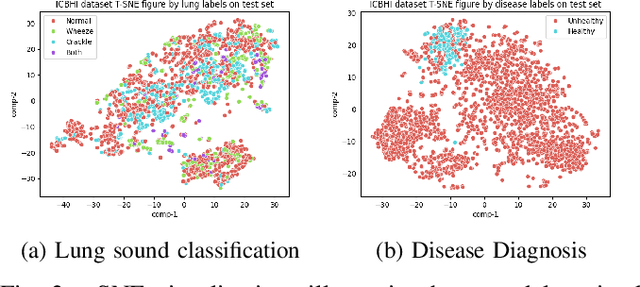

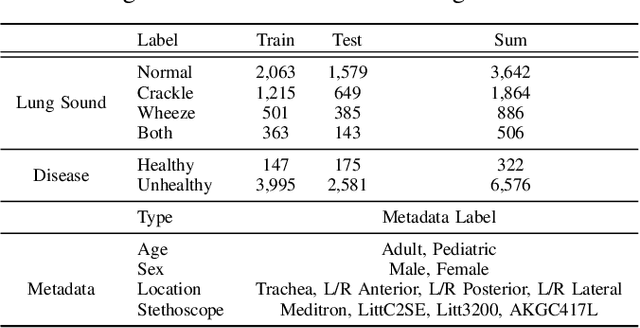

Tri-MTL: A Triple Multitask Learning Approach for Respiratory Disease Diagnosis

May 06, 2025

Abstract:Auscultation remains a cornerstone of clinical practice, essential for both initial evaluation and continuous monitoring. Clinicians listen to the lung sounds and make a diagnosis by combining the patient's medical history and test results. Given this strong association, multitask learning (MTL) can offer a compelling framework to simultaneously model these relationships, integrating respiratory sound patterns with disease manifestations. While MTL has shown considerable promise in medical applications, a significant research gap remains in understanding the complex interplay between respiratory sounds, disease manifestations, and patient metadata attributes. This study investigates how integrating MTL with cutting-edge deep learning architectures can enhance both respiratory sound classification and disease diagnosis. Specifically, we extend recent findings regarding the beneficial impact of metadata on respiratory sound classification by evaluating its effectiveness within an MTL framework. Our comprehensive experiments reveal significant improvements in both lung sound classification and diagnostic performance when the stethoscope information is incorporated into the MTL architecture.

Noise-Agnostic Multitask Whisper Training for Reducing False Alarm Errors in Call-for-Help Detection

Jan 20, 2025

Abstract:Keyword spotting is often implemented by attaching a keyword classifier to the encoder of an acoustic model, enabling the classification of predefined or open-vocabulary keywords. Although keyword spotting is a crucial task in various applications and can be extended to call-for-help detection in emergencies, previous methods often suffer from scalability limitations because retraining is required to introduce new keywords or adapt to changing contexts. We explore a simple yet effective approach that leverages off-the-shelf pretrained ASR models to address these challenges, especially in call-for-help detection scenarios. Furthermore, we observed a substantial increase in false alarms when deploying a call-for-help detection system in real-world scenarios due to noise introduced by microphones or different environments. To address this, we propose a novel noise-agnostic multitask learning approach that integrates a noise classification head into the ASR encoder. Our method enhances the model's robustness to noisy environments, leading to a significant reduction in false alarms and improved overall call-for-help performance. Despite the added complexity of multitask learning, our approach is computationally efficient and provides a promising solution for call-for-help detection in real-world scenarios.

BTS: Bridging Text and Sound Modalities for Metadata-Aided Respiratory Sound Classification

Jun 10, 2024

Abstract:Respiratory sound classification (RSC) is challenging due to varied acoustic signatures, primarily influenced by patient demographics and recording environments. To address this issue, we introduce a text-audio multimodal model that utilizes metadata of respiratory sounds, which provides useful complementary information for RSC. Specifically, we fine-tune a pretrained text-audio multimodal model using free-text descriptions derived from the sound samples' metadata, which includes the gender and age of patients, type of recording devices, and recording location on the patient's body. Our method achieves state-of-the-art performance on the ICBHI dataset, surpassing the previous best result by a notable margin of 1.17%. This result validates the effectiveness of leveraging metadata and respiratory sound samples in enhancing RSC performance. Additionally, we investigate the model performance in the case where metadata is partially unavailable, which may occur in real-world clinical settings.

RepAugment: Input-Agnostic Representation-Level Augmentation for Respiratory Sound Classification

May 05, 2024

Abstract:Recent advancements in AI have democratized its deployment as a healthcare assistant. While pretrained models from large-scale visual and audio datasets have demonstrably generalized to this task, surprisingly, no studies have explored pretrained speech models, which, as human-originated sounds, intuitively would share closer resemblance to lung sounds. This paper explores the efficacy of pretrained speech models for respiratory sound classification. We find that there is a characterization gap between speech and lung sound samples, and to bridge this gap, data augmentation is essential. However, the most widely used augmentation technique for audio and speech, SpecAugment, requires a 2-dimensional spectrogram format and cannot be applied to models pretrained on speech waveforms. To address this, we propose RepAugment, an input-agnostic representation-level augmentation technique that not only outperforms SpecAugment but is also suitable for respiratory sound classification with waveform pretrained models. Experimental results show that our approach outperforms SpecAugment, improving the accuracy of minority disease classes by up to 7.14%.
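To illustrate why SpecAugment is tied to spectrogram input, here is a simplified sketch of its frequency and time masking steps (time warping is omitted). The mask widths and function name are illustrative assumptions. This is not RepAugment, whose details the abstract does not describe.

```python
import numpy as np

def spec_augment(spec, max_freq_width=8, max_time_width=20, rng=None):
    """Zero out one random frequency band and one random time span.

    spec: 2-D array of shape (num_freq_bins, num_frames). The masking is
    defined along these two axes, so a 1-D waveform cannot be used here.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = spec.copy()
    n_freq, n_time = out.shape  # raises ValueError for non-2-D input

    # Frequency mask: width drawn from [0, max_freq_width], capped at n_freq.
    f = int(rng.integers(0, min(max_freq_width, n_freq) + 1))
    f0 = int(rng.integers(0, n_freq - f + 1))
    out[f0:f0 + f, :] = 0.0

    # Time mask: width drawn from [0, max_time_width], capped at n_time.
    t = int(rng.integers(0, min(max_time_width, n_time) + 1))
    t0 = int(rng.integers(0, n_time - t + 1))
    out[:, t0:t0 + t] = 0.0
    return out
```

Passing a raw waveform (a 1-D array) fails at the shape unpacking step, which mirrors the limitation the abstract points out for models pretrained on speech waveforms.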

Stethoscope-guided Supervised Contrastive Learning for Cross-domain Adaptation on Respiratory Sound Classification

Dec 15, 2023

Abstract:Despite the remarkable advances in deep learning technology, achieving satisfactory performance in lung sound classification remains a challenge due to the scarcity of available data. Moreover, the respiratory sound samples are collected from a variety of electronic stethoscopes, which could potentially introduce biases into the trained models. When a significant distribution shift occurs within the test dataset or in a practical scenario, it can substantially decrease the performance. To tackle this issue, we introduce cross-domain adaptation techniques, which transfer the knowledge from a source domain to a distinct target domain. In particular, by considering different stethoscope types as individual domains, we propose a novel stethoscope-guided supervised contrastive learning approach. This method mitigates domain-related disparities and thus enables the model to distinguish respiratory sounds regardless of recording variations across stethoscopes. The experimental results on the ICBHI dataset demonstrate that the proposed methods are effective in reducing the domain dependency and achieving the ICBHI Score of 61.71%, which is a significant improvement of 2.16% over the baseline.
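For readers unfamiliar with the metric, the ICBHI Score is commonly defined as the average of sensitivity (abnormal samples assigned to their exact class) and specificity (normal samples classified as normal). A minimal sketch follows, assuming integer labels with 0 denoting the normal class. That encoding and the function name are illustrative assumptions.

```python
import numpy as np

def icbhi_score(y_true, y_pred, normal_label=0):
    """Return (sensitivity, specificity, score) for respiratory sound labels.

    Assumption: `normal_label` marks normal samples; every other label is an
    abnormal class (e.g. crackle, wheeze, both).
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    correct = y_true == y_pred
    normal = y_true == normal_label

    specificity = float(correct[normal].mean())   # normal recognized as normal
    sensitivity = float(correct[~normal].mean())  # abnormal assigned its class
    return sensitivity, specificity, (sensitivity + specificity) / 2.0
```

Averaging the two rates keeps the majority normal class from dominating the result, which is why the metric is reported alongside minority-class accuracy in the class-imbalance work listed here.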

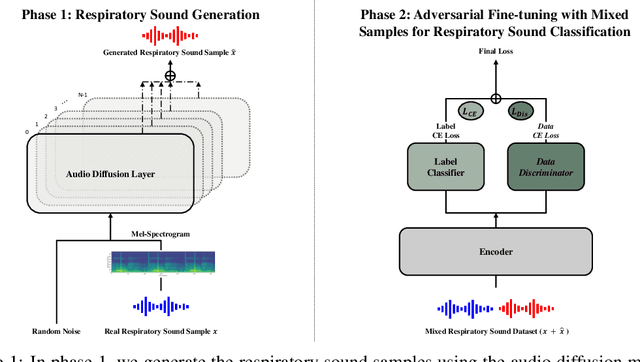

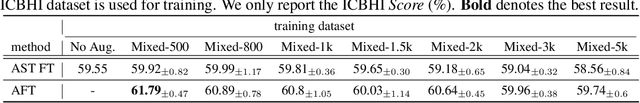

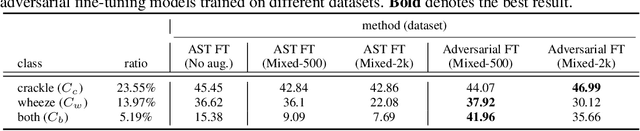

Adversarial Fine-tuning using Generated Respiratory Sound to Address Class Imbalance

Nov 11, 2023

Abstract:Deep generative models have emerged as a promising approach in the medical image domain to address data scarcity. However, their use for sequential data like respiratory sounds is less explored. In this work, we propose a straightforward approach to augment imbalanced respiratory sound data using an audio diffusion model as a conditional neural vocoder. We also demonstrate a simple yet effective adversarial fine-tuning method to align features between the synthetic and real respiratory sound samples to improve respiratory sound classification performance. Our experimental results on the ICBHI dataset demonstrate that the proposed adversarial fine-tuning is effective, whereas using only the conventional augmentation method degrades performance. Moreover, our method outperforms the baseline by 2.24% on the ICBHI Score and improves the accuracy of the minority classes by up to 26.58%. For the supplementary material, we provide the code at https://github.com/kaen2891/adversarial_fine-tuning_using_generated_respiratory_sound.
