Julian Rodemann

Ludwig-Maximilians-Universität München

Beyond Arrow: From Impossibility to Possibilities in Multi-Criteria Benchmarking

Feb 07, 2026

Abstract:Modern benchmarks such as HELM MMLU account for multiple metrics like accuracy, robustness and efficiency. When trying to turn these metrics into a single ranking, natural aggregation procedures can become incoherent or unstable to changes in the model set. We formalize this aggregation as a social choice problem where each metric induces a preference ranking over models on each dataset, and a benchmark operator aggregates these votes across metrics. While prior work has focused on Arrow's impossibility result, we argue that the impossibility often originates from pathological examples and identify sufficient conditions under which these disappear, and meaningful multi-criteria benchmarking becomes possible. In particular, we deal with three restrictions on the combinations of rankings and prove that on single-peaked, group-separable and distance-restricted preferences, the benchmark operator allows for the construction of well-behaved rankings of the involved models. Empirically, we investigate several modern benchmark suites like HELM MMLU and verify which structural conditions are fulfilled on which benchmark problems.
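
The incoherence mentioned in this abstract can be illustrated with a minimal sketch. It uses pairwise majority voting as a stand-in aggregation rule, which is an assumption for illustration and not the paper's benchmark operator. The model names `A`, `B`, `C` and both ranking profiles are hypothetical. The first profile produces a majority cycle; the second is single-peaked along the axis A, B, C and, with an odd number of metrics, yields a transitive majority relation.

```python
from itertools import combinations


def pairwise_majority(rankings):
    """Return the set of pairs (a, b) where a strict majority of rankings place a above b."""
    models = rankings[0]
    wins = set()
    for a, b in combinations(models, 2):
        a_over_b = sum(r.index(a) < r.index(b) for r in rankings)
        if 2 * a_over_b > len(rankings):
            wins.add((a, b))
        elif 2 * a_over_b < len(rankings):
            wins.add((b, a))
    return wins


def is_transitive(wins):
    """Check that a > b and b > d always imply a > d."""
    return all((a, d) in wins for (a, b) in wins for (c, d) in wins if b == c)


# Hypothetical rankings induced by three metrics (best model first).
cyclic = [["A", "B", "C"], ["B", "C", "A"], ["C", "A", "B"]]
single_peaked = [["A", "B", "C"], ["B", "A", "C"], ["C", "B", "A"]]

print(is_transitive(pairwise_majority(cyclic)))         # cyclic profile
print(is_transitive(pairwise_majority(single_peaked)))  # single-peaked profile
```

In the cyclic profile no model beats every other model in pairwise comparison, so no coherent overall ranking exists under this rule; restricting the admissible profiles removes the cycle.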

Performative Learning Theory

Feb 04, 2026

Abstract:Performative predictions influence the very outcomes they aim to forecast. We study performative predictions that affect a sample (e.g., only existing users of an app) and/or the whole population (e.g., all potential app users). This raises the question of how well models generalize under performativity. For example, how well can we draw insights about new app users based on existing users when both of them react to the app's predictions? We address this question by embedding performative predictions into statistical learning theory. We prove generalization bounds under performative effects on the sample, on the population, and on both. A key intuition behind our proofs is that in the worst case, the population negates predictions, while the sample deceptively fulfills them. We cast such self-negating and self-fulfilling predictions as min-max and min-min risk functionals in Wasserstein space, respectively. Our analysis reveals a fundamental trade-off between performatively changing the world and learning from it: the more a model affects data, the less it can learn from it. Moreover, our analysis results in a surprising insight on how to improve generalization guarantees by retraining on performatively distorted samples. We illustrate our bounds in a case study on prediction-informed assignments of unemployed German residents to job trainings, drawing upon administrative labor market records from 1975 to 2017 in Germany.

GUARD: Glocal Uncertainty-Aware Robust Decoding for Effective and Efficient Open-Ended Text Generation

Aug 28, 2025

Abstract:Open-ended text generation faces a critical challenge: balancing coherence with diversity in LLM outputs. While contrastive search-based decoding strategies have emerged to address this trade-off, their practical utility is often limited by hyperparameter dependence and high computational costs. We introduce GUARD, a self-adaptive decoding method that effectively balances these competing objectives through a novel "Glocal" uncertainty-driven framework. GUARD combines global entropy estimates with local entropy deviations to integrate both long-term and short-term uncertainty signals. We demonstrate that our proposed global entropy formulation effectively mitigates abrupt variations in uncertainty, such as sudden overconfidence or high entropy spikes, and provides theoretical guarantees of unbiasedness and consistency. To reduce computational overhead, we incorporate a simple yet effective token-count-based penalty into GUARD. Experimental results demonstrate that GUARD achieves a good balance between text diversity and coherence, while exhibiting substantial improvements in generation speed. In a more nuanced comparison study across different dimensions of text quality, both human and LLM evaluators validated its remarkable performance. Our code is available at https://github.com/YecanLee/GUARD.

Generalization Bounds and Stopping Rules for Learning with Self-Selected Data

May 12, 2025

Abstract:Many learning paradigms self-select training data in light of previously learned parameters. Examples include active learning, semi-supervised learning, bandits, or boosting. Rodemann et al. (2024) unify them under the framework of "reciprocal learning". In this article, we address the question of how well these methods can generalize from their self-selected samples. In particular, we prove universal generalization bounds for reciprocal learning using covering numbers and Wasserstein ambiguity sets. Our results require no assumptions on the distribution of self-selected data, only verifiable conditions on the algorithms. We prove results for both convergent and finite iteration solutions. The latter are anytime valid, thereby giving rise to stopping rules for a practitioner seeking to guarantee the out-of-sample performance of their reciprocal learning algorithm. Finally, we illustrate our bounds and stopping rules for reciprocal learning's special case of semi-supervised learning.

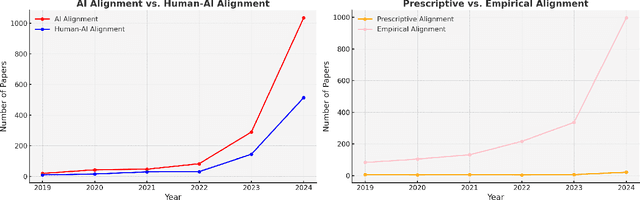

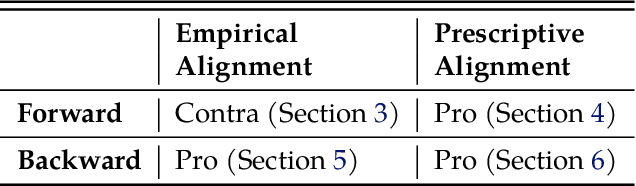

A Statistical Case Against Empirical Human-AI Alignment

Feb 20, 2025

Abstract:Empirical human-AI alignment aims to make AI systems act in line with observed human behavior. While noble in its goals, we argue that empirical alignment can inadvertently introduce statistical biases that warrant caution. This position paper thus advocates against naive empirical alignment, offering prescriptive alignment and a posteriori empirical alignment as alternatives. We substantiate our principled argument by tangible examples like human-centric decoding of language models.

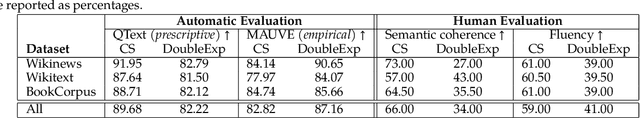

Towards Better Open-Ended Text Generation: A Multicriteria Evaluation Framework

Oct 24, 2024

Abstract:Open-ended text generation has become a prominent task in natural language processing due to the rise of powerful (large) language models. However, evaluating the quality of these models and the employed decoding strategies remains challenging because of trade-offs among widely used metrics such as coherence, diversity, and perplexity. Decoding methods often excel in some metrics while underperforming in others, complicating the establishment of a clear ranking. In this paper, we present novel ranking strategies within this multicriteria framework. Specifically, we employ benchmarking approaches based on partial orderings and present a new summary metric designed to balance existing automatic indicators, providing a more holistic evaluation of text generation quality. Furthermore, we discuss the alignment of these approaches with human judgments. Our experiments demonstrate that the proposed methods offer a robust way to compare decoding strategies, exhibit similarities with human preferences, and serve as valuable tools in guiding model selection for open-ended text generation tasks. Finally, we suggest future directions for improving evaluation methodologies in text generation. Our codebase, datasets, and models are publicly available.
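
To make the idea of a partial ordering over decoding strategies concrete, here is a minimal sketch based on Pareto dominance. This is one common way to obtain a partial order from several metrics and is assumed here for illustration; it is not necessarily the ordering used in the paper. The strategy names and the (coherence, diversity) scores are hypothetical, and both metrics are treated as higher-is-better.

```python
def dominates(x, y):
    """True if x is at least as good as y on every metric and strictly better on one."""
    return all(a >= b for a, b in zip(x, y)) and any(a > b for a, b in zip(x, y))


def pareto_front(scores):
    """Return the names of all strategies that no other strategy dominates."""
    return sorted(
        name
        for name, s in scores.items()
        if not any(dominates(t, s) for other, t in scores.items() if other != name)
    )


# Hypothetical (coherence, diversity) scores, higher is better on both.
scores = {
    "greedy": (0.60, 0.20),
    "nucleus": (0.55, 0.80),
    "contrastive": (0.65, 0.70),
    "beam": (0.58, 0.15),
}

print(pareto_front(scores))
```

Strategies on the front are mutually incomparable: each one wins on some metric and loses on another, which is why a single clear ranking is hard to establish without further assumptions.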

Reciprocal Learning

Aug 12, 2024

Abstract:We demonstrate that a wide array of machine learning algorithms are specific instances of one single paradigm: reciprocal learning. These instances range from active learning over multi-armed bandits to self-training. We show that all these algorithms do not only learn parameters from data but also vice versa: They iteratively alter training data in a way that depends on the current model fit. We introduce reciprocal learning as a generalization of these algorithms using the language of decision theory. This allows us to study under what conditions they converge. The key is to guarantee that reciprocal learning contracts such that the Banach fixed-point theorem applies. In this way, we find that reciprocal learning algorithms converge at linear rates to an approximately optimal model under relatively mild assumptions on the loss function, if their predictions are probabilistic and the sample adaption is both non-greedy and either randomized or regularized. We interpret these findings and provide corollaries that relate them to specific active learning, self-training, and bandit algorithms.
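
The convergence argument via the Banach fixed-point theorem can be illustrated with a toy contraction. The sketch below is not a reciprocal learning algorithm; the scalar update map, its contraction factor of 0.5, and the starting point are all hypothetical choices that only show what "linear rate" means: the distance to the fixed point shrinks by a constant factor at every iteration.

```python
def iterate(update, x0, steps):
    """Apply `update` repeatedly and return the whole trajectory."""
    xs = [x0]
    for _ in range(steps):
        xs.append(update(xs[-1]))
    return xs


def update(x):
    # Hypothetical contraction with Lipschitz constant 0.5 and fixed point x* = 2.
    return 0.5 * x + 1.0


trajectory = iterate(update, x0=10.0, steps=20)
errors = [abs(x - 2.0) for x in trajectory]

# Each error is half the previous one: linear (geometric) convergence.
for step, err in enumerate(errors[:5]):
    print(step, err)
```

Any map with Lipschitz constant strictly below 1 on a complete metric space behaves this way, which is why establishing the contraction property is the key step.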

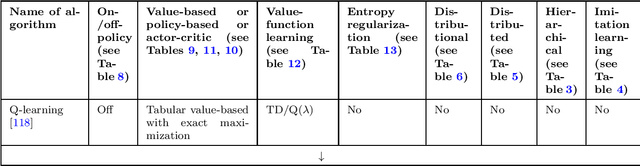

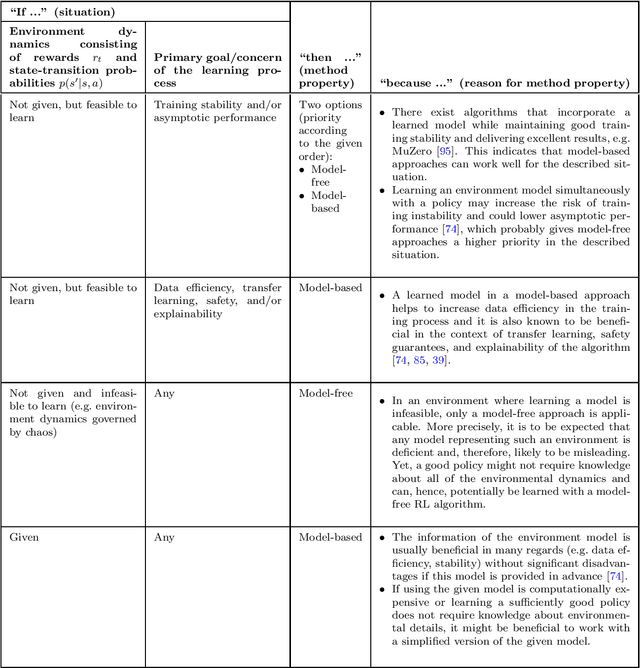

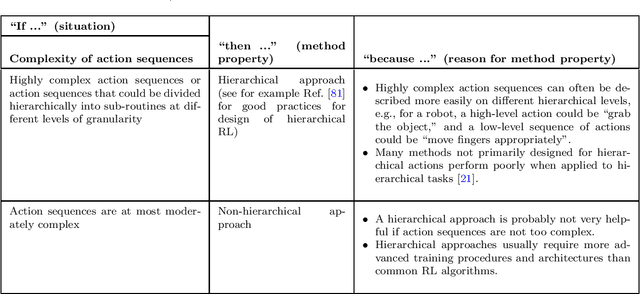

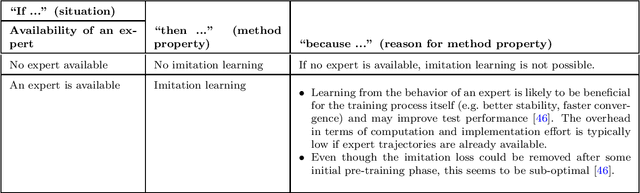

How to Choose a Reinforcement-Learning Algorithm

Jul 30, 2024

Abstract:The field of reinforcement learning offers a large variety of concepts and methods to tackle sequential decision-making problems. This variety has become so large that choosing an algorithm for a task at hand can be challenging. In this work, we streamline the process of choosing reinforcement-learning algorithms and action-distribution families. We provide a structured overview of existing methods and their properties, as well as guidelines for when to choose which methods. An interactive version of these guidelines is available online at https://rl-picker.github.io/.

Adaptive Contrastive Search: Uncertainty-Guided Decoding for Open-Ended Text Generation

Jul 26, 2024

Abstract:Decoding from the output distributions of large language models to produce high-quality text is a complex challenge in language modeling. Various approaches, such as beam search, sampling with temperature, $k$-sampling, nucleus $p$-sampling, typical decoding, contrastive decoding, and contrastive search, have been proposed to address this problem, aiming to improve coherence, diversity, as well as resemblance to human-generated text. In this study, we introduce adaptive contrastive search, a novel decoding strategy extending contrastive search by incorporating an adaptive degeneration penalty, guided by the estimated uncertainty of the model at each generation step. This strategy is designed to enhance both the creativity and diversity of the language modeling process while at the same time producing coherent and high-quality generated text output. Our findings indicate performance enhancement in both aspects, across different model architectures and datasets, underscoring the effectiveness of our method in text generation tasks. Our code base, datasets, and models are publicly available.

Towards Bayesian Data Selection

Jun 24, 2024

Abstract:A wide range of machine learning algorithms iteratively add data to the training sample. Examples include semi-supervised learning, active learning, multi-armed bandits, and Bayesian optimization. We embed this kind of data addition into decision theory by framing data selection as a decision problem. This paves the way for finding Bayes-optimal selections of data. For the illustrative case of self-training in semi-supervised learning, we derive the respective Bayes criterion. We further show that deploying this criterion mitigates the issue of confirmation bias by empirically assessing our method for generalized linear models, semi-parametric generalized additive models, and Bayesian neural networks on simulated and real-world data.
