Juha Karhunen

A Character-Word Compositional Neural Language Model for Finnish

Dec 10, 2016

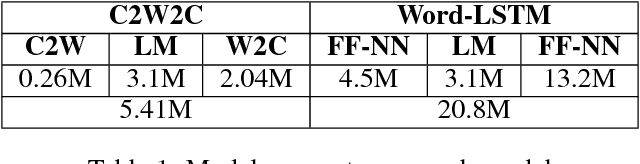

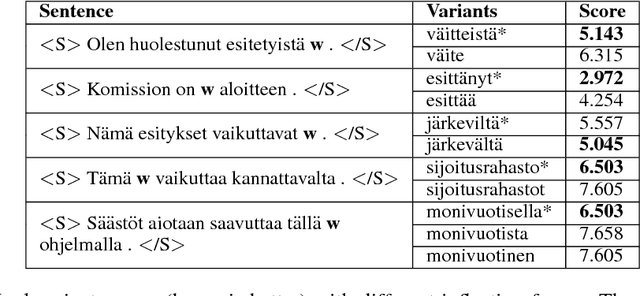

Abstract: Inspired by recent research, we explore ways to model the highly morphological Finnish language at the level of characters while maintaining the performance of word-level models. We propose a new Character-to-Word-to-Character (C2W2C) compositional language model that uses characters as input and output while still internally processing word-level embeddings. Our preliminary experiments, using the Finnish Europarl V7 corpus, indicate that C2W2C can respond well to the challenges of morphologically rich languages, such as high out-of-vocabulary rates, the prediction of novel words, and growing vocabulary size. Notably, the model is able to correctly score inflectional forms that are not present in the training data and to sample grammatically and semantically correct Finnish sentences character by character.

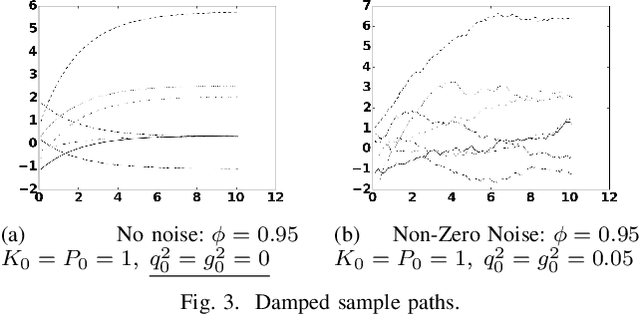

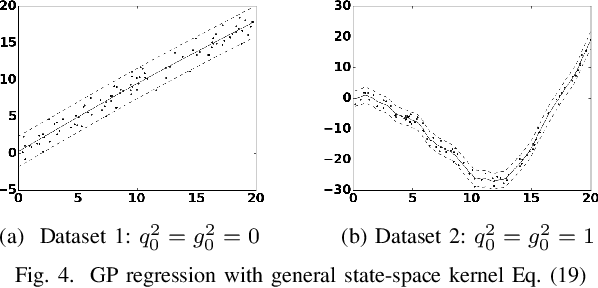

Gaussian Process Kernels for Popular State-Space Time Series Models

Oct 25, 2016

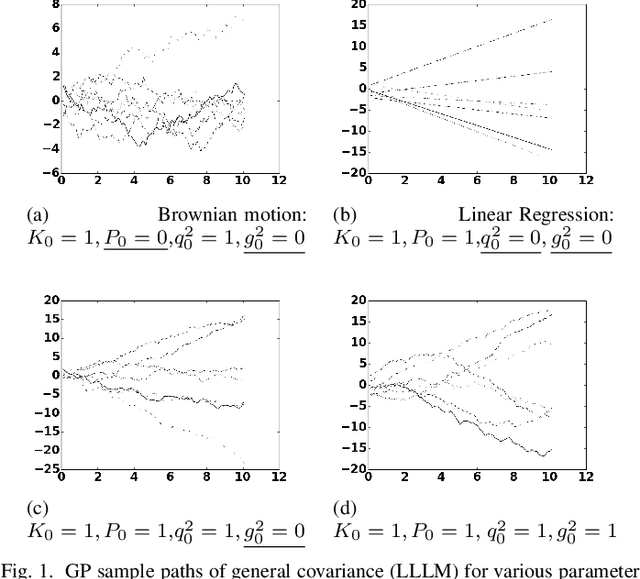

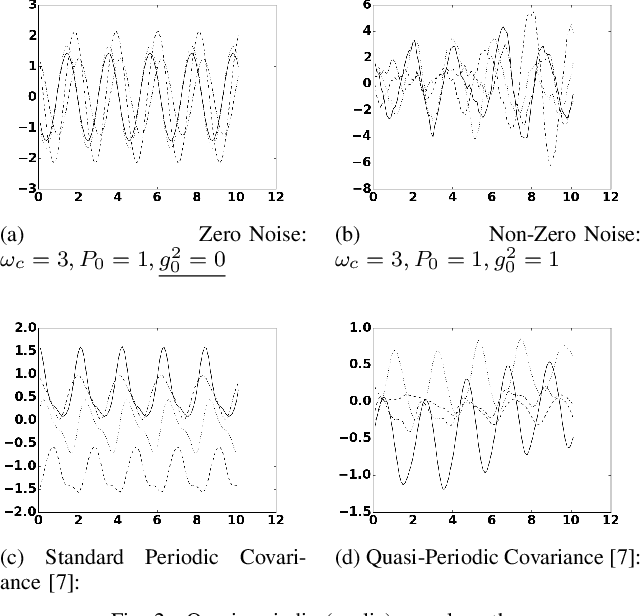

Abstract: In this paper we investigate a link between state-space models and Gaussian Processes (GP) for time series modeling and forecasting. In particular, several widely used state-space models are transformed into continuous-time form and the corresponding Gaussian Process kernels are derived. Experimental results demonstrate that the derived GP kernels are correct and appropriate for Gaussian Process Regression. An experiment with a real-world dataset shows that modeling with the proposed GP kernels gives results identical to those of the state-space models. The connection allows researchers to look at their models from a different angle and facilitates sharing ideas between the two modeling approaches.
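
As a rough illustration of the kind of link this abstract describes, the sketch below checks a textbook case that is not taken from the paper itself: a discrete random walk x_t = x_{t-1} + w_t with x_0 = 0 and w_t ~ N(0, q) has the same covariance at integer times as a GP with the Wiener kernel k(s, t) = q * min(s, t). The function names `random_walk_cov` and `wiener_kernel` are hypothetical and chosen only for this example.

```python
import math

def random_walk_cov(n, q):
    """Covariance of x_1..x_n for x_t = x_{t-1} + w_t, w_t ~ N(0, q), x_0 = 0.

    x_i is the sum of w_1..w_i, so Cov(x_i, x_j) is q times the number
    of noise terms that x_i and x_j share.
    """
    steps = range(1, n + 1)
    return [[q * sum(1 for k in steps if k <= i and k <= j) for j in steps]
            for i in steps]

def wiener_kernel(times, q):
    """Wiener-process kernel k(s, t) = q * min(s, t) evaluated on a grid."""
    return [[q * min(s, t) for t in times] for s in times]

n, q = 5, 0.3
K_ss = random_walk_cov(n, q)                 # state-space view
K_gp = wiener_kernel(range(1, n + 1), q)     # GP-kernel view

# The two covariance matrices agree entry by entry.
same = all(math.isclose(K_ss[i][j], K_gp[i][j])
           for i in range(n) for j in range(n))
print(same)
```

The comparison is exact only at the sampling times of the discrete model. The models treated in the paper may differ from this simple case.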

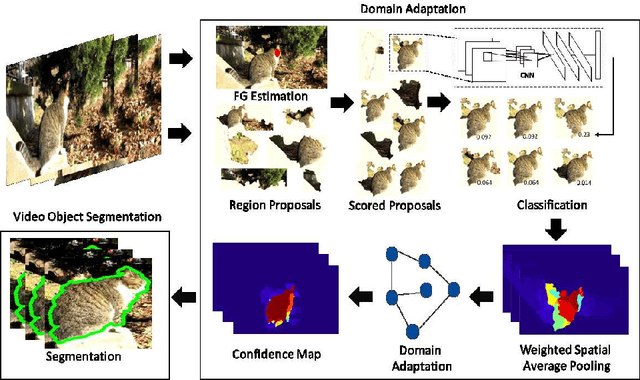

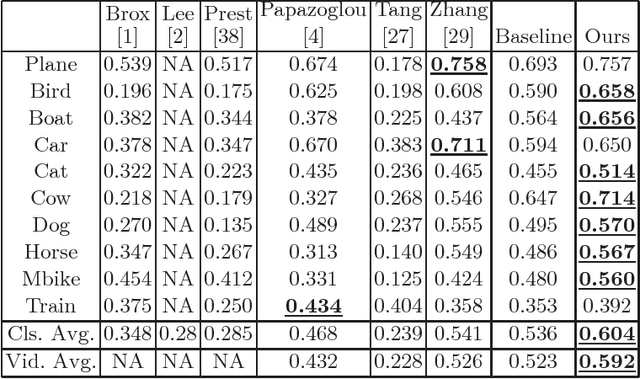

Semi-Supervised Domain Adaptation for Weakly Labeled Semantic Video Object Segmentation

Jun 07, 2016

Abstract: Deep convolutional neural networks (CNNs) have been immensely successful in many high-level computer vision tasks given large labeled datasets. However, for video semantic object segmentation, a domain where labels are scarce, effectively exploiting the representation power of CNNs with limited training data remains a challenge. Simply borrowing an existing pretrained CNN image recognition model for the video segmentation task can severely hurt performance. We propose a semi-supervised approach to adapting a CNN image recognition model trained on labeled image data to the target domain, exploiting both the semantic evidence learned by the CNN and the intrinsic structure of video data. By explicitly modeling and compensating for the domain shift from the source domain to the target domain, the proposed approach underpins a semantic object segmentation method that is robust to changes in appearance, shape and occlusion in natural videos. We present extensive experiments on challenging datasets that demonstrate the superior performance of our approach compared with state-of-the-art methods.

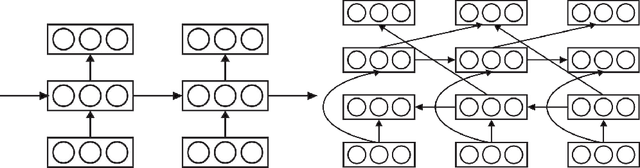

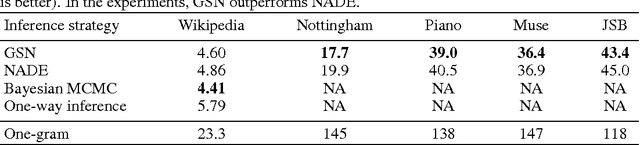

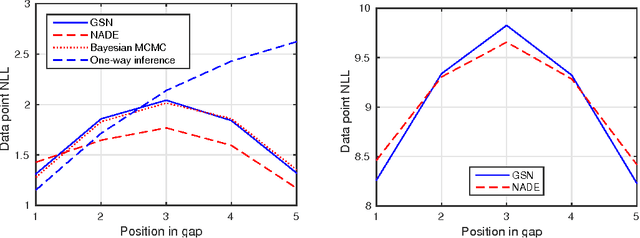

Bidirectional Recurrent Neural Networks as Generative Models - Reconstructing Gaps in Time Series

Nov 02, 2015

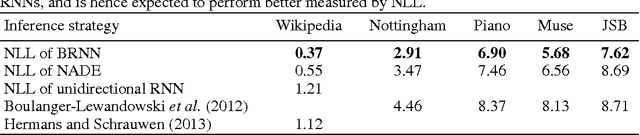

Abstract: Bidirectional recurrent neural networks (RNN) are trained to predict both in the positive and negative time directions simultaneously. They have not been used commonly in unsupervised tasks, because a probabilistic interpretation of the model has been difficult. Recently, two different frameworks, GSN and NADE, have provided a connection between reconstruction and probabilistic modeling, which makes the interpretation possible. As far as we know, neither GSN nor NADE has been studied in the context of time series before. As an example of an unsupervised task, we study the problem of filling in gaps in high-dimensional time series with complex dynamics. Although unidirectional RNNs have recently been trained successfully to model such time series, inference in the negative time direction is non-trivial. We propose two probabilistic interpretations of bidirectional RNNs that can be used to reconstruct missing gaps efficiently. Our experiments on text data show that both proposed methods are much more accurate than unidirectional reconstructions, although a bit less accurate than a computationally complex bidirectional Bayesian inference on the unidirectional RNN. We also provide results on music data for which the Bayesian inference is computationally infeasible, demonstrating the scalability of the proposed methods.

Bayes Blocks: An Implementation of the Variational Bayesian Building Blocks Framework

Jul 04, 2012

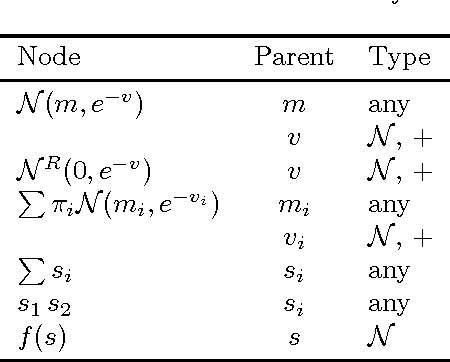

Abstract: A software library for constructing and learning probabilistic models is presented. The library offers a set of building blocks from which a large variety of static and dynamic models can be built. These include hierarchical models for variances of other variables and many nonlinear models. The underlying variational Bayesian machinery, which provides fast and robust estimation but is mathematically rather involved, is almost completely hidden from the user, which makes the library very easy to use. The building blocks include Gaussian, rectified Gaussian and mixture-of-Gaussians variables and computational nodes, which can be combined rather freely.
