Juan Carlos Nieves

Human Emotion Verification by Action Languages via Answer Set Programming

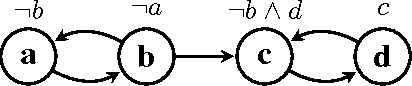

Jan 19, 2026

Abstract: In this paper, we introduce the action language C-MT (Mind Transition Language). Built on top of answer set programming (ASP) and transition systems, it represents how human mental states evolve in response to sequences of observable actions. Drawing on well-established psychological theories, such as the Appraisal Theory of Emotion, we formalize mental states, such as emotions, as multi-dimensional configurations. To address the need for controlled agent behaviors and to restrict unwanted mental side effects of actions, we extend the language with a novel causal rule, "forbids to cause", along with expressions specialized for mental state dynamics, which together enable the modeling of principles for valid transitions between mental states. These principles of mental change are translated into transition constraints and invariance properties, which are rigorously evaluated on transition systems in terms of so-called trajectories. This enables controlled reasoning about the dynamic evolution of human mental states. Furthermore, the framework supports comparing different dynamics of change by analyzing trajectories that adhere to different psychological principles. We apply the action language to design models for emotion verification. Under consideration in Theory and Practice of Logic Programming (TPLP).
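The transition-system idea behind C-MT can be illustrated with a small Python sketch: mental states are multi-dimensional configurations, actions map a state to its successor, a "forbids to cause" constraint prunes transitions whose successor satisfies a forbidden condition, and an invariance property is checked over every state of a trajectory. This is not C-MT syntax or its ASP encoding; the state dimensions (`emotion`, `trust`), the actions `praise` and `criticize`, and all helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# A mental state is a hashable, sorted tuple of (dimension, value) pairs.
State = Tuple[Tuple[str, str], ...]
Fluents = Dict[str, str]


def make_state(**dims: str) -> State:
    return tuple(sorted(dims.items()))


@dataclass(frozen=True)
class Action:
    name: str
    # "causes" part: maps the current mental state to its successor.
    effect: Callable[[Fluents], Fluents]


# Hypothetical "forbids to cause" constraint: (action name, condition on the successor).
Forbid = Tuple[str, Callable[[Fluents], bool]]


def step(state: State, action: Action, forbids: List[Forbid]) -> Optional[State]:
    """Return the successor state, or None if a forbid constraint blocks it."""
    successor = action.effect(dict(state))
    for name, condition in forbids:
        if name == action.name and condition(successor):
            return None
    return make_state(**successor)


def trajectory(initial: State, plan: List[Action], forbids: List[Forbid]) -> Optional[List[State]]:
    """Build the trajectory s0, s1, ..., sn for a plan; None if any step is forbidden."""
    states = [initial]
    for action in plan:
        nxt = step(states[-1], action, forbids)
        if nxt is None:
            return None
        states.append(nxt)
    return states


def invariant(states: List[State], prop: Callable[[Fluents], bool]) -> bool:
    """Invariance property: prop holds in every state of the trajectory."""
    return all(prop(dict(s)) for s in states)


# Hypothetical example actions and constraint (not taken from the paper).
PRAISE = Action("praise", lambda s: {**s, "emotion": "joy"})
CRITICIZE = Action(
    "criticize",
    lambda s: {**s, "emotion": "anger" if s["emotion"] == "calm" else "sadness"},
)
# Criticism must never cause anger.
FORBIDS: List[Forbid] = [("criticize", lambda s: s["emotion"] == "anger")]
INITIAL = make_state(emotion="calm", trust="high")

ok = trajectory(INITIAL, [PRAISE, CRITICIZE], FORBIDS)   # calm -> joy -> sadness
blocked = trajectory(INITIAL, [CRITICIZE], FORBIDS)      # calm -> anger is forbidden
```

Here the plan `[PRAISE, CRITICIZE]` yields a valid trajectory on which `trust == "high"` is invariant, while criticizing from a calm state is rejected because it would cause anger.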

Disagree and Commit: Degrees of Argumentation-based Agreements

Dec 31, 2024

Abstract: In cooperative human decision-making, agreements are often not total; a partial degree of agreement is sufficient to commit to a decision and move on, as long as one is somewhat confident that the involved parties are likely to stand by their commitment in the future, given no drastic unexpected changes. In this paper, we introduce the notion of agreement scenarios that allow artificial autonomous agents to reach such agreements, using formal models of argumentation, in particular abstract argumentation and value-based argumentation. We introduce the notions of degrees of satisfaction and (minimum, mean, and median) agreement, as well as a measure of the impact a value in a value-based argumentation framework has on these notions. We then analyze how degrees of agreement are affected when agreement scenarios are expanded with new information, to shed light on the reliability of partial agreements in dynamic scenarios. An implementation of the introduced concepts is provided as part of an argumentation-based reasoning software library.
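The minimum, mean, and median agreement measures aggregate per-agent degrees of satisfaction. The sketch below assumes a hypothetical satisfaction measure (the share of the decision's arguments that an agent itself accepts) and a hypothetical commitment threshold; the paper's own definitions of satisfaction and of agreement scenarios may differ.

```python
from statistics import mean, median
from typing import Dict, FrozenSet


def satisfaction(decision: FrozenSet[str], accepted: FrozenSet[str]) -> float:
    """Hypothetical degree of satisfaction: share of the decision's arguments
    that the agent itself accepts (1.0 for an empty decision)."""
    if not decision:
        return 1.0
    return len(decision & accepted) / len(decision)


def agreement(decision: FrozenSet[str], views: Dict[str, FrozenSet[str]]) -> Dict[str, float]:
    """Minimum, mean, and median agreement over all agents' satisfaction degrees."""
    scores = [satisfaction(decision, view) for view in views.values()]
    return {"min": min(scores), "mean": mean(scores), "median": median(scores)}


decision = frozenset({"a", "b"})
views = {
    "alice": frozenset({"a", "b"}),  # satisfaction 1.0
    "bob": frozenset({"a"}),         # satisfaction 0.5
    "carol": frozenset({"c"}),       # satisfaction 0.0
}
degrees = agreement(decision, views)
# "Disagree and commit": commit if median agreement reaches a hypothetical threshold.
commit = degrees["median"] >= 0.5
```

With these three agents the minimum agreement is 0.0 while the mean and median are both 0.5, so a median-based policy commits even though one agent is entirely unsatisfied.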

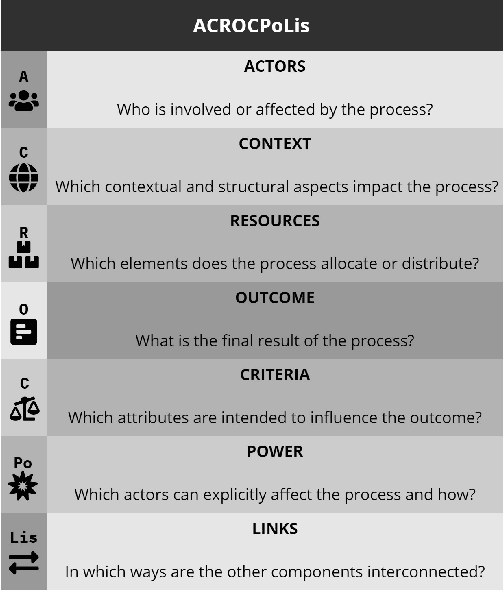

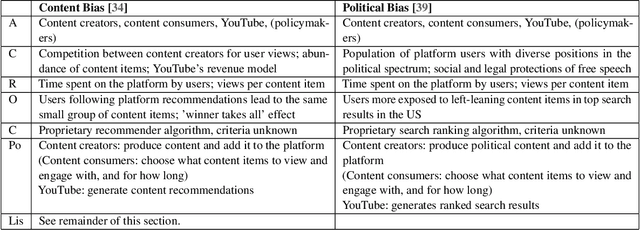

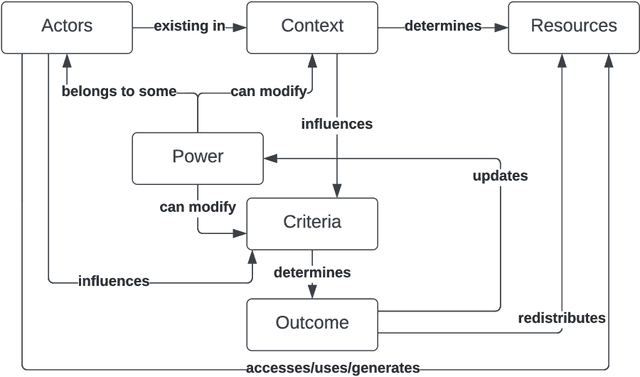

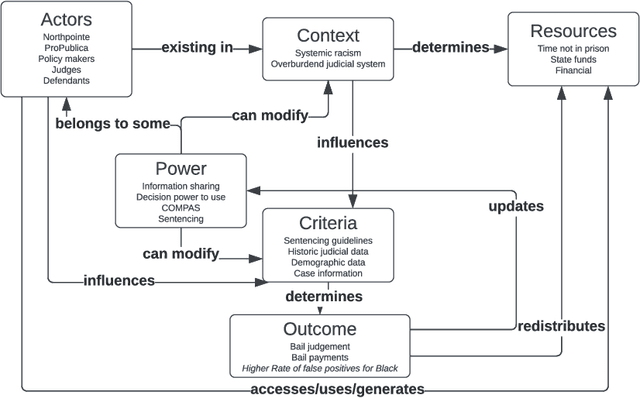

ACROCPoLis: A Descriptive Framework for Making Sense of Fairness

Apr 19, 2023

Abstract: Fairness is central to the ethical and responsible development and use of AI systems, and a large number of frameworks and formal notions of algorithmic fairness are available. However, many of the proposed fairness solutions revolve around technical considerations rather than the needs of, and consequences for, the most impacted communities. We therefore aim to shift the focus away from definitions and allow for the inclusion of societal and relational aspects, to represent how the effects of AI systems impact and are experienced by individuals and social groups. We do this by proposing the ACROCPoLis framework, which represents allocation processes with a modeling emphasis on fairness aspects. The framework provides a shared vocabulary in which the factors relevant to fairness assessments for different situations and procedures, as well as their interrelationships, are made explicit. This enables us to compare analogous situations, to highlight the differences between dissimilar situations, and to capture differing interpretations of the same situation by different stakeholders.

Interrogating the Black Box: Transparency through Information-Seeking Dialogues

Feb 09, 2021

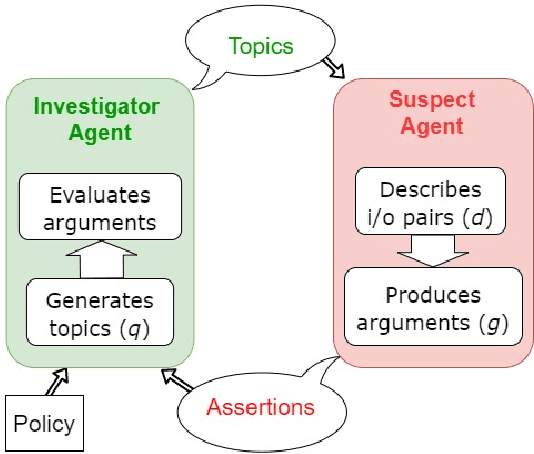

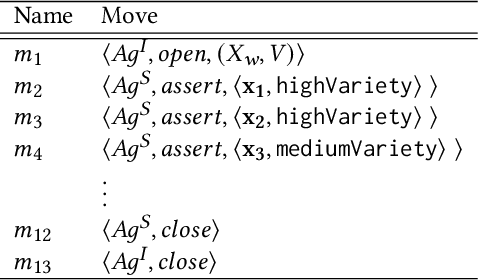

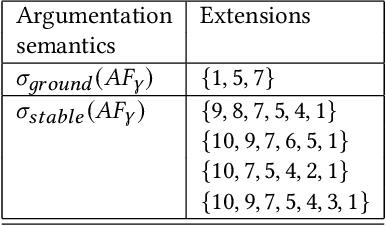

Abstract: This paper addresses the following question: given a (possibly opaque) learning system, how can we understand whether its behaviour adheres to governance constraints? The answer can be quite simple: we just need to "ask" the system about it. We propose constructing an investigator agent that queries a learning agent (the suspect agent) to investigate its adherence to a given ethical policy in the context of an information-seeking dialogue, modeled in a formal argumentation setting. This formal dialogue framework is the main contribution of this paper. Through it, we break down compliance-checking mechanisms into three modular components, each of which can be tailored to various needs in many ways: an investigator agent, a suspect agent, and an acceptance protocol that determines whether the responses of the suspect agent comply with the policy. This acceptance protocol takes a fundamentally different approach to aggregation: rather than using quantitative methods to deal with the non-determinism of a learning system, we use argumentation semantics to investigate the notion of properties holding consistently. Overall, we argue that the introduced formal dialogue framework opens many avenues both in compliance checking and in the analysis of properties of opaque systems.

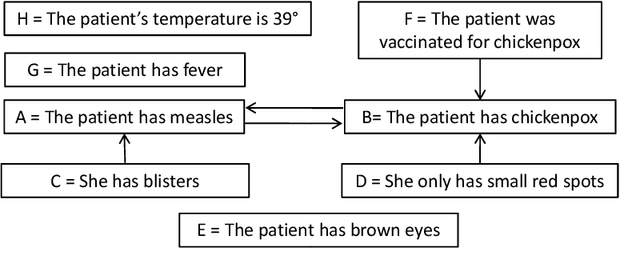

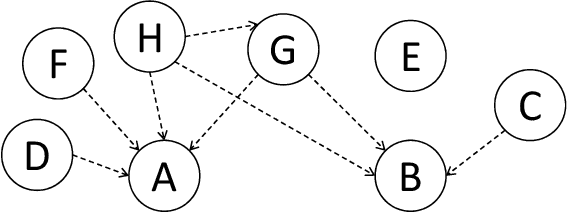

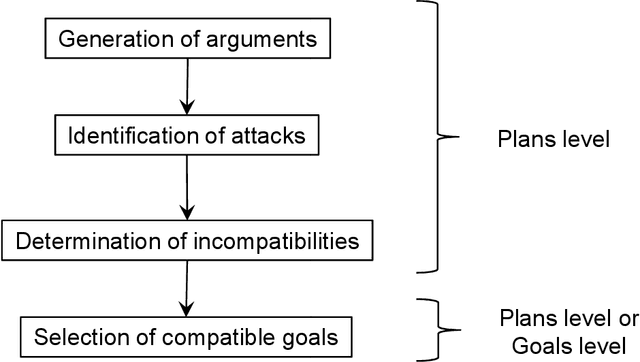

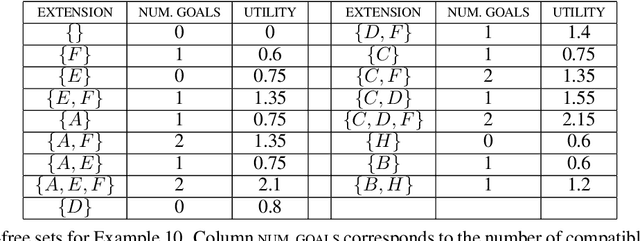

Dealing with Incompatibilities among Procedural Goals under Uncertainty

Sep 17, 2020

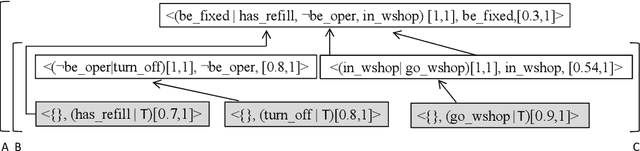

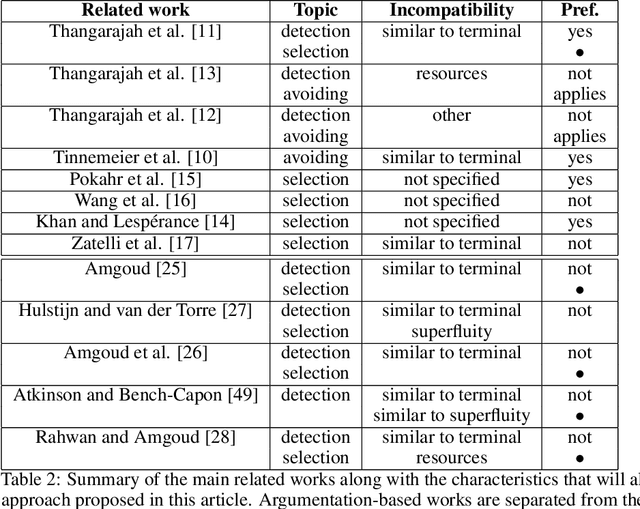

Abstract: Considering rational agents, we focus on the problem of selecting goals from a set of incompatible ones. We consider three forms of incompatibility introduced by Castelfranchi and Paglieri: terminal incompatibility, instrumental (resource-based) incompatibility, and superfluity. We represent the agent's plans by means of structured arguments whose premises are pervaded with uncertainty. We measure the strength of these arguments in order to determine the set of compatible goals. We propose two novel ways of calculating the strength of these arguments, depending on the kind of incompatibility that exists between them. The first is the logical strength value, a three-dimensional vector calculated from a probabilistic interval associated with each argument. The vector represents the precision of the interval, its location, and the combination of precision and location. This way of representing and treating the strength of a structured argument has not previously been defined in the state of the art. The second way of calculating the strength of an argument is based on the cost of the plans (regarding the necessary resources) and the preference of the goals associated with the plans. Building on this novel approach for measuring the strength of structured arguments, we propose a semantics for the selection of plans and goals that is based on Dung's abstract argumentation theory. Finally, we present a theoretical evaluation of our proposal.

An Imprecise Probability Approach for Abstract Argumentation based on Credal Sets

Sep 16, 2020

Abstract: Some abstract argumentation approaches consider that arguments have a degree of uncertainty, which affects the degree of uncertainty of the extensions obtained from an abstract argumentation framework (AAF) under a semantics. In these approaches, both the uncertainty of the arguments and that of the extensions are modeled by means of precise probability values. However, in many real-life situations the exact probability values are unknown, and sometimes the probability values from different sources need to be aggregated. In this paper, we tackle the problem of calculating the degree of uncertainty of the extensions when the probability values of the arguments are imprecise. We use credal sets to model the uncertainty values of arguments, and from these credal sets we calculate the lower and upper bounds of the extensions. We study some properties of the suggested approach and illustrate it with a decision-making scenario.
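To make the idea of lower and upper bounds concrete, the sketch below makes several simplifying assumptions that are not taken from the paper: each argument's credal set is an interval of presence probabilities, arguments are present independently, and the uncertainty of a set E is the probability that E is the grounded extension of the induced subframework. Under these assumptions the probability is multilinear in the per-argument probabilities, so its minimum and maximum over the box of intervals are reached at the box's vertices.

```python
from itertools import product
from typing import Dict, FrozenSet, Set, Tuple

Attack = Tuple[str, str]


def grounded(args: FrozenSet[str], attacks: Set[Attack]) -> FrozenSet[str]:
    """Grounded extension of the subframework induced by `args`
    (least fixed point of the characteristic function)."""
    att = {(x, y) for (x, y) in attacks if x in args and y in args}
    ext: FrozenSet[str] = frozenset()
    while True:
        # Keep every argument all of whose attackers are attacked by `ext`.
        defended = frozenset(
            a for a in args
            if all(any((d, b) in att for d in ext) for (b, t) in att if t == a)
        )
        if defended == ext:
            return ext
        ext = defended


def prob_is_grounded(target: FrozenSet[str], args: FrozenSet[str],
                     attacks: Set[Attack], p: Dict[str, float]) -> float:
    """P(target is the grounded extension), assuming each argument is present
    independently with probability p[arg]."""
    names = sorted(args)
    total = 0.0
    for present in product([False, True], repeat=len(names)):
        weight = 1.0
        for name, keep in zip(names, present):
            weight *= p[name] if keep else 1.0 - p[name]
        sub = frozenset(n for n, keep in zip(names, present) if keep)
        if grounded(sub, attacks) == target:
            total += weight
    return total


def credal_bounds(target: FrozenSet[str], args: FrozenSet[str], attacks: Set[Attack],
                  intervals: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Lower/upper probability over all per-argument probabilities in the intervals.
    The probability is multilinear, so it suffices to evaluate the box's vertices."""
    names = sorted(args)
    values = [
        prob_is_grounded(target, args, attacks, dict(zip(names, corner)))
        for corner in product(*(intervals[n] for n in names))
    ]
    return min(values), max(values)


ARGS = frozenset({"a", "b"})
ATTACKS = {("b", "a")}                          # b attacks a
INTERVALS = {"a": (0.8, 1.0), "b": (0.2, 0.4)}  # hypothetical imprecise probabilities
lower, upper = credal_bounds(frozenset({"a"}), ARGS, ATTACKS, INTERVALS)
```

In this toy framework {a} is the grounded extension exactly when a is present and b is absent, so its probability p_a(1 - p_b) ranges from 0.8 * 0.6 = 0.48 to 1.0 * 0.8 = 0.8. Enumerating all subframeworks is exponential and only suitable for small examples.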

An Argumentation-based Approach for Identifying and Dealing with Incompatibilities among Procedural Goals

Sep 11, 2020

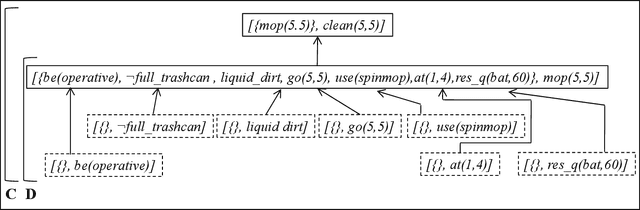

Abstract: During the first step of practical reasoning, i.e., deliberation, an intelligent agent generates a set of pursuable goals and then selects which of them he commits to achieve. An intelligent agent may in general generate multiple pursuable goals, some of which may be mutually incompatible. In this paper, we focus on the definition, identification, and resolution of these incompatibilities. The suggested approach considers the three forms of incompatibility introduced by Castelfranchi and Paglieri, namely terminal incompatibility, instrumental (or resource) incompatibility, and superfluity. We computationally characterise these forms of incompatibility by means of arguments that represent the plans that allow an agent to achieve his goals. Thus, the incompatibility among goals is defined based on the conflicts among their plans, which are represented by means of attacks in an argumentation framework. We also address the problem of goal selection, which we propose to solve with abstract argumentation theory, i.e., by applying argumentation semantics. We use a modified version of the "cleaner world" scenario to illustrate the performance of our proposal.
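The pipeline described above (plans as arguments, incompatibilities as attacks, goal selection via an argumentation semantics) can be sketched as follows. This is a simplified illustration, not the paper's construction: the plans, resource amounts, and effect literals are hypothetical, only terminal and instrumental incompatibility are modeled (superfluity is omitted), argument strength is ignored, and preferred semantics is computed by brute force as one possible choice of semantics.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Set, Tuple

# Hypothetical "cleaner world" plans: name -> (goal, resource demand, effects).
# A literal "-x" denotes the negation of "x".
PLANS: Dict[str, Tuple[str, Dict[str, int], Set[str]]] = {
    "p_clean": ("clean_room", {"battery": 60}, {"at_room"}),
    "p_pickup": ("pick_up_waste", {"battery": 50}, {"at_room"}),
    "p_patrol": ("patrol_corridor", {"battery": 10}, {"-at_room"}),
    "p_recharge": ("recharge", {"battery": 0}, {"at_dock"}),
}
AVAILABLE = {"battery": 100}


def neg(literal: str) -> str:
    return literal[1:] if literal.startswith("-") else "-" + literal


def incompatible(p: str, q: str) -> bool:
    _, res_p, eff_p = PLANS[p]
    _, res_q, eff_q = PLANS[q]
    # Terminal: the plans' effects contradict each other.
    terminal = any(neg(lit) in eff_q for lit in eff_p)
    # Instrumental: together they need more of some resource than is available.
    instrumental = any(
        res_p.get(r, 0) + res_q.get(r, 0) > cap for r, cap in AVAILABLE.items()
    )
    return terminal or instrumental


# Incompatibility is symmetric here, so it yields mutual attacks between plans.
ATTACKS = {(p, q) for p in PLANS for q in PLANS if p != q and incompatible(p, q)}


def admissible(s: FrozenSet[str]) -> bool:
    if any((a, b) in ATTACKS for a in s for b in s):
        return False  # not conflict-free
    # Every attacker of a member must be counter-attacked by some member.
    return all(any((d, b) in ATTACKS for d in s) for (b, t) in ATTACKS if t in s)


def preferred() -> Set[FrozenSet[str]]:
    """Preferred extensions: maximal admissible sets (brute force, small inputs only)."""
    names = sorted(PLANS)
    adm = [frozenset(c) for r in range(len(names) + 1)
           for c in combinations(names, r) if admissible(frozenset(c))]
    return {s for s in adm if not any(s < t for t in adm)}


# Each preferred extension of plans determines one set of jointly pursuable goals.
goal_sets = {frozenset(PLANS[p][0] for p in ext) for ext in preferred()}
```

In this example, cleaning and picking up waste compete for battery (instrumental), patrolling contradicts both room-bound plans (terminal), and recharging is compatible with everything, so each selected goal set pairs one of the three conflicting goals with `recharge`.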

* 31 pages, 9 figures, Accepted in the International Journal of Approximate Reasoning (2019)

JS-son -- A Lean, Extensible JavaScript Agent Programming Library

Mar 10, 2020

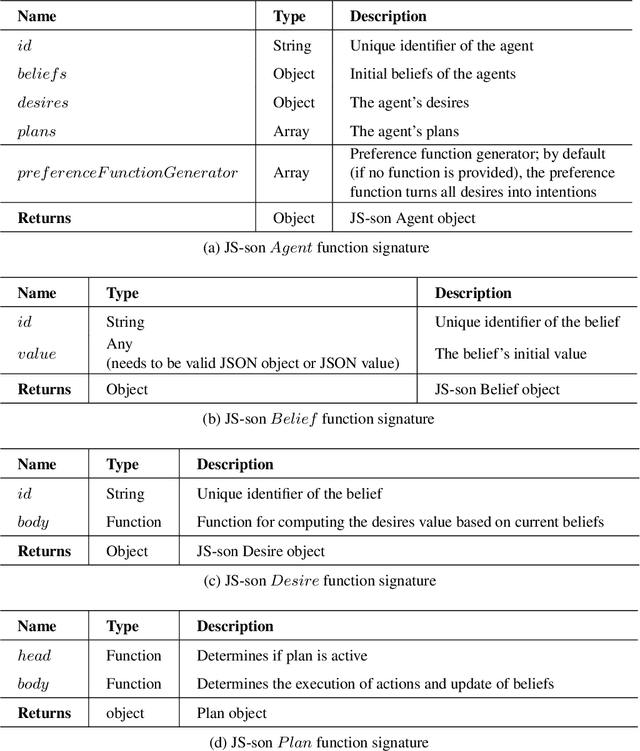

Abstract: A multitude of agent-oriented software engineering frameworks exist, most of which are developed by the academic multi-agent systems community. However, these frameworks often impose programming paradigms on their users that are challenging to learn for engineers accustomed to modern high-level programming languages such as JavaScript and Python. To show how the adoption of agent-oriented programming by the software engineering mainstream can be facilitated, we provide a lean JavaScript library prototype for implementing reasoning-loop agents. The library focuses on core agent programming concepts and refrains from imposing further restrictions on the programming approach. To illustrate its usefulness, we show how the library can be applied to multi-agent systems simulations on the web, deployed to cloud-hosted function-as-a-service environments, and embedded in Python-based data science tools.
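A reasoning-loop agent repeatedly revises its beliefs from percepts, decides which desires are active, and runs the plans serving them. The following Python sketch illustrates one such loop in the abstract, for consistency with the other examples on this page; it is a hypothetical simplification and does not reproduce JS-son's JavaScript API. The class names, the `step` method, and the thermostat scenario are invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Beliefs = Dict[str, Any]


@dataclass
class Plan:
    goal: str                             # desire this plan serves
    body: Callable[[Beliefs], List[str]]  # returns the actions to emit


@dataclass
class Agent:
    beliefs: Beliefs
    desires: Dict[str, Callable[[Beliefs], bool]]  # desire name -> activation test
    plans: List[Plan]

    def step(self, percepts: Beliefs) -> List[str]:
        """One iteration of the reasoning loop: revise, deliberate, act."""
        self.beliefs.update(percepts)                 # 1. belief revision
        intentions = {name for name, active in self.desires.items()
                      if active(self.beliefs)}        # 2. deliberation
        actions: List[str] = []
        for plan in self.plans:                       # 3. plan execution
            if plan.goal in intentions:
                actions.extend(plan.body(self.beliefs))
        return actions


def run(agent: Agent, temperature: int, steps: int) -> List[int]:
    """Toy environment: heating adds 2 degrees per step, otherwise it cools by 1."""
    history = []
    for _ in range(steps):
        actions = agent.step({"temperature": temperature})
        temperature += 2 if "heater_on" in actions else -1
        history.append(temperature)
    return history


thermostat = Agent(
    beliefs={},
    desires={"warm_up": lambda b: b["temperature"] < 20},
    plans=[Plan("warm_up", lambda b: ["heater_on"])],
)
temps = run(thermostat, 17, 4)
```

Starting at 17 degrees, the agent heats twice (19, then 21) and then stays idle while the room cools (20, then 19); the environment is kept outside the agent so the same loop could be driven by a simulation, a web page, or a serverless handler.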

Abstract Argumentation and the Rational Man

Dec 13, 2019

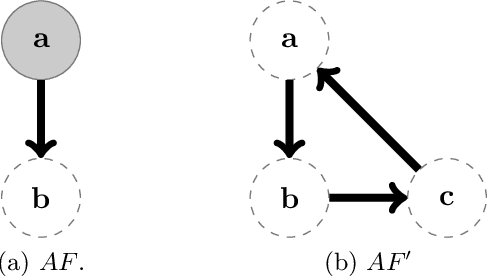

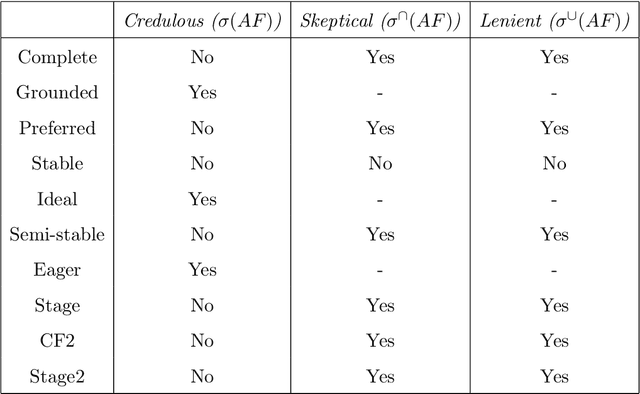

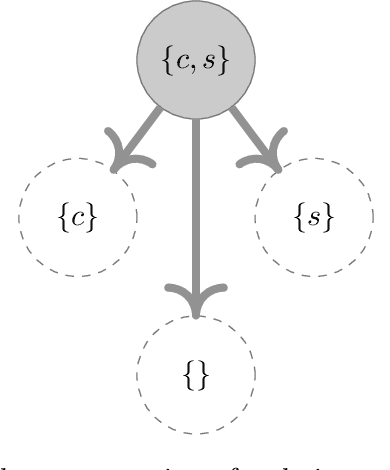

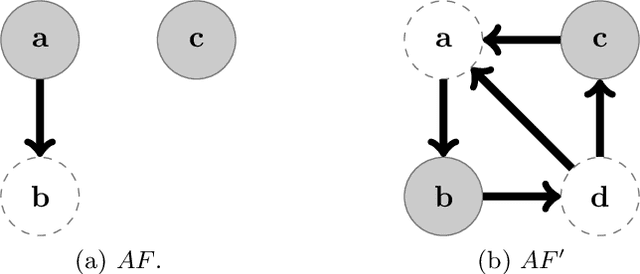

Abstract: Abstract argumentation has emerged as a method for non-monotonic reasoning that has gained tremendous traction in the symbolic artificial intelligence community. In the literature, the different approaches to abstract argumentation that have been refined over the years are typically evaluated from a logics perspective; an analysis based on models of ideal, rational decision-making does not exist. In this paper, we close this gap by analyzing abstract argumentation from the perspective of the rational man paradigm in microeconomic theory. To assess under which conditions abstract argumentation-based choice functions can be considered economically rational, we define a new argumentation principle that ensures compliance with the rational man's reference independence property, which stipulates that a rational agent's preferences over two choice options should not be influenced by the absence or presence of additional options. We show that the argumentation semantics proposed in Dung's classical paper, as well as all of the other semantics we evaluate, do not fulfill this newly created principle. Consequently, we investigate how structural properties of argumentation frameworks impact the reference independence principle, and propose a restriction to argumentation expansions that allows all of the evaluated semantics to fulfill the requirements for economically rational argumentation-based choice. For this purpose, we define the rational man's expansion as a normal and non-cyclic expansion. Finally, we put reference independence into the context of preference-based argumentation and show that for this argumentation variant, which explicitly models preferences, the rational man's expansion cannot ensure reference independence.

Proceedings of the First International Workshop on Argumentation in Logic Programming and Non-Monotonic Reasoning

Nov 08, 2016

Abstract: This volume contains the papers presented at Arg-LPNMR 2016: First International Workshop on Argumentation in Logic Programming and Nonmonotonic Reasoning, held on July 8-10, 2016 in New York City, NY.
