Joseph R. Davidson

SeeTree -- A modular, open-source system for tree detection and orchard localization

Apr 14, 2025

Abstract:Accurate localization is an important functional requirement for precision orchard management. However, there are few off-the-shelf commercial solutions available to growers. In this paper, we present SeeTree, a modular, open-source embedded system for tree trunk detection and orchard localization that is deployable on any vehicle. Building on our prior work on vision-based in-row localization using particle filters, SeeTree includes several new capabilities. First, it provides capacity for full orchard localization including out-of-row headland turning. Second, it includes the flexibility to integrate either visual, GNSS, or wheel odometry in the motion model. During field experiments in a commercial orchard, the system converged to the correct location 99% of the time over 800 trials, even when starting with large uncertainty in the initial particle locations. When turning out of row, the system correctly tracked 99% of the turns (860 trials representing 43 unique row changes). To help support adoption and future research and development, we make our dataset, design files, and source code freely available to the community.

Compact robotic gripper with tandem actuation for selective fruit harvesting

Aug 13, 2024

Abstract:Selective fruit harvesting is a challenging manipulation problem due to occlusions and clutter arising from plant foliage. A harvesting gripper should i) have a small cross-section, to avoid collisions while approaching the fruit; ii) have a soft and compliant grasp to adapt to different fruit geometry and avoid bruising it; and iii) be capable of holding the fruit rigidly enough to counteract detachment forces. Previous work on fruit harvesting has primarily focused on using grippers with a single actuation mode, either suction or fingers. In this paper we present a compact robotic gripper that combines the benefits of both. The gripper first uses an array of compliant suction cups to gently attach to the fruit. After attachment, telescoping cam-driven fingers deploy, sweeping obstacles away before pivoting inwards to provide a secure grip on the fruit for picking. We present and analyze the finger design for both its ability to sweep clutter and its ability to maintain a tight grasp. Specifically, we use a motorized test bed to measure grasp strength for each actuation mode (suction, fingers, or both). We apply a tensile force at different angles (0°, 15°, 30° and 45°), and vary the point of contact between the fingers and the fruit. We observed that with both modes the grasp strength is approximately 40 N. We use an apple proxy to test the gripper's ability to obtain a grasp in the presence of occluding apples and leaves, achieving a grasp success rate over 96% (with an ideal controller). Finally, we validate our gripper in a commercial apple orchard.

Machine Vision Based Assessment of Fall Color Changes in Apple Trees: Exploring Relationship with Leaf Nitrogen Concentration

Apr 23, 2024

Abstract:Apple trees, being deciduous, shed their leaves each year, preceded by a change in leaf color from green to yellow (also known as senescence) during the fall season. The rate and timing of the color change are affected by a number of factors, including nitrogen (N) deficiencies. The green color of leaves is highly dependent on the chlorophyll content, which in turn depends on the nitrogen concentration in the leaves. Assessing leaf color can therefore give vital information on the nutrient status of the tree. A machine vision based system that captures and quantifies the timing and extent of these changes in leaf color can be a valuable tool for that purpose. This study is based on data collected during the fall of 2021 and 2023 at a commercial orchard using a ground-based stereo-vision sensor for five weeks. The point cloud obtained from the sensor was segmented to get just the tree in the foreground. The study involved the segmentation of the trees in a natural background using point cloud data and quantification of the color using a custom-defined metric, the yellowness index, varying from -1 to +1 (-1 being completely green and +1 being completely yellow), which gives the proportion of yellow leaves on a tree. The performance of a K-means-based algorithm and a gradient boosting algorithm was compared for yellowness index calculation. The segmentation method proposed in the study was able to estimate the yellowness index on the trees with R² = 0.72. The results showed that the metric was able to capture the gradual color transition from green to yellow over the study duration. It was also observed that the trees with lower nitrogen showed the color transition to yellow earlier than the trees with higher nitrogen. The onset of color transition during both years aligned with the 29th week post-full bloom.
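
The abstract gives only the range and endpoints of the yellowness index, not its formula. As a rough illustration, one metric that matches that description is a normalized difference between yellow and green leaf points. The sketch below is an assumption made for illustration, not the authors' definition; the function name `yellowness_index` and its inputs (counts of leaf points already classified as yellow or green) are hypothetical.

```python
def yellowness_index(n_yellow: int, n_green: int) -> float:
    """Hypothetical normalized-difference index in [-1, +1].

    Assumption: leaf points have already been labeled yellow or green
    (for example by a clustering or boosting classifier). This is NOT
    the formula from the paper, which the abstract does not state.
    """
    total = n_yellow + n_green
    if total == 0:
        raise ValueError("at least one classified leaf point is required")
    # -1 when every point is green, +1 when every point is yellow.
    return (n_yellow - n_green) / total


if __name__ == "__main__":
    print(yellowness_index(0, 10))   # all green
    print(yellowness_index(5, 5))    # evenly mixed
    print(yellowness_index(10, 0))   # all yellow
```

Under this assumed form, the fraction of yellow points can be recovered as (index + 1) / 2, which is one way an index on a -1 to +1 scale could still express a proportion of yellow leaves.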

A real-time, hardware agnostic framework for close-up branch reconstruction using RGB data

Sep 20, 2023

Abstract:Creating accurate 3D models of tree topology is an important task for tree pruning. The 3D model is used to decide which branches to prune and then to execute the pruning cuts. Previous methods for creating 3D tree models have typically relied on point clouds, which are often computationally expensive to process and can suffer from data defects, especially with thin branches. In this paper, we propose a method for actively scanning along a primary tree branch, detecting secondary branches to be pruned, and reconstructing their 3D geometry using just an RGB camera mounted on a robot arm. We experimentally validate that our setup is able to produce primary branch models with 4-5 mm accuracy and secondary branch models with 15 degrees orientation accuracy with respect to the ground truth model. Our framework is real-time and can run up to 10 cm/s with no loss in model accuracy or ability to detect secondary branches.

HISSbot: Sidewinding with a Soft Snake Robot

Mar 28, 2023

Abstract:Snake robots are characterized by their ability to navigate through small spaces and loose terrain by utilizing efficient cyclic forms of locomotion. Soft snake robots are a subset of these robots which utilize soft, compliant actuators to produce movement. Prior work on soft snake robots has primarily focused on planar gaits, such as undulation. More efficient spatial gaits, such as sidewinding, remain unexplored for soft snake robots. We propose a novel means of constructing a soft snake robot capable of sidewinding, and introduce the Helical Inflating Soft Snake Robot (HISSbot). We validate this actuation through the physical HISSbot, and demonstrate its ability to sidewind across various surfaces. Our tests show robustness in locomotion through low-friction and granular media.

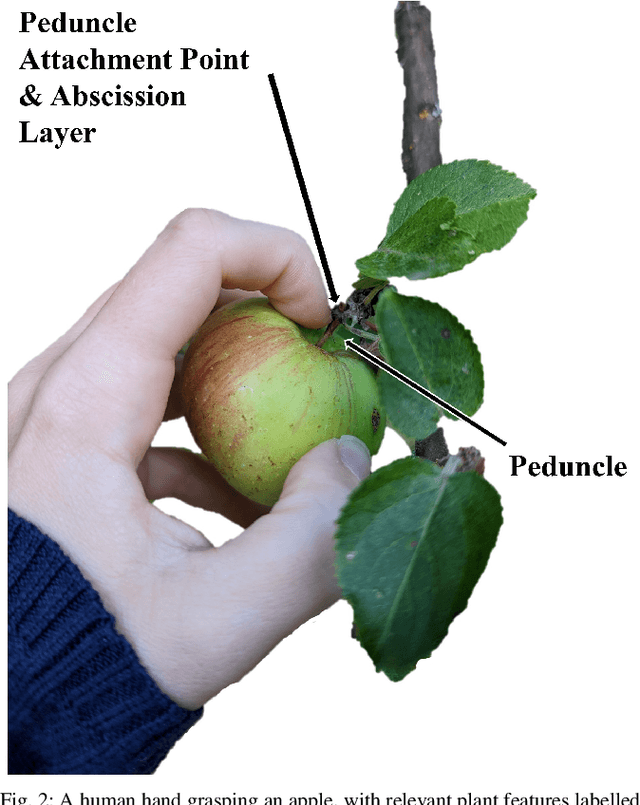

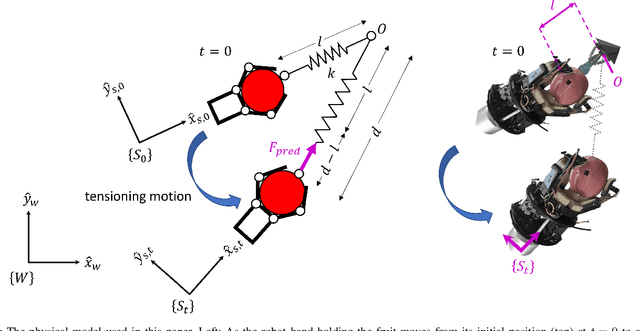

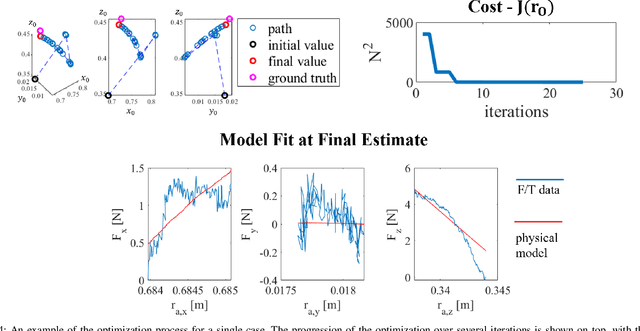

Optimization-Based Mechanical Perception for Peduncle Localization During Robotic Fruit Harvest

Sep 27, 2022

Abstract:Rising global food demand and harsh working conditions make fruit harvest an important domain to automate. Peduncle localization is an important step for any automated fruit harvesting system, since fruit separation techniques are highly sensitive to peduncle location. Most work on peduncle localization has focused on computer vision, but peduncles can be difficult to visually access due to the cluttered nature of agricultural environments. Our work proposes an alternative method which relies on mechanical -- rather than visual -- perception to localize the peduncle. To estimate the location of this important plant feature, we fit wrench measurements from a wrist force/torque sensor to a physical model of the fruit-plant system, treating the fruit's attachment point as a parameter to be tuned. This method is performed inline as part of the fruit picking procedure. Using our orchard proxy for evaluation, we demonstrate that the technique is able to localize the peduncle within a median distance of 3.8 cm and median orientation error of 16.8 degrees.

Forecasting Vehicle Pitch of a Lightweight Underwater Vehicle Manipulator System with Recurrent Neural Networks

Sep 27, 2022

Abstract:As Underwater Vehicle Manipulator Systems (UVMSs) have gotten smaller and lighter over the past years, it is becoming increasingly important to consider the coupling forces between the manipulator and the vehicle when planning and controlling the system. However, typical methods of handling these forces require an exact hydrodynamic model of the vehicle and access to low-level torque control on the manipulator, both of which are uncommon in the field. Therefore, many UVMS control methods are kinematics-based, which cannot inherently account for these effects. Our work bridges the gap between kinematic control and dynamics by training a recurrent neural network on simulated UVMS data to predict the pitch of the vehicle in the future based on the system's previous states. Kinematic planners and controllers can use this metric to incorporate dynamic knowledge without a computationally expensive model, improving their ability to perform underwater manipulation tasks.

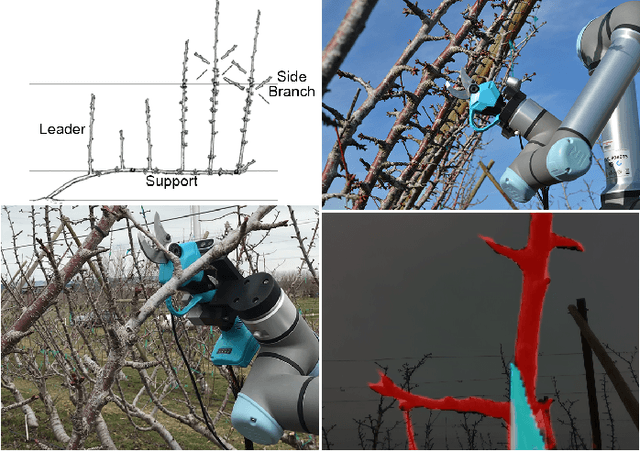

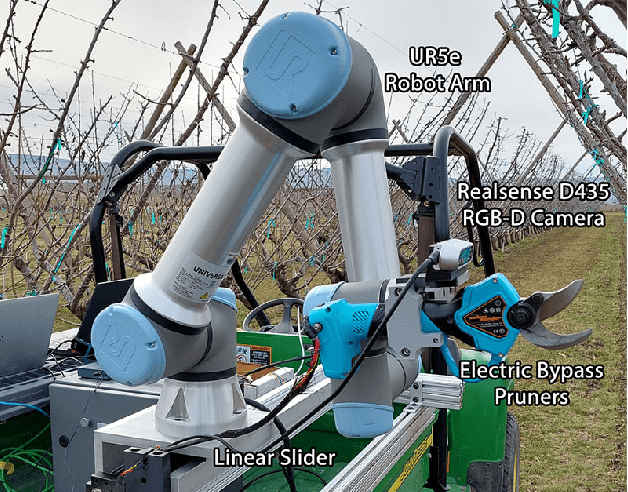

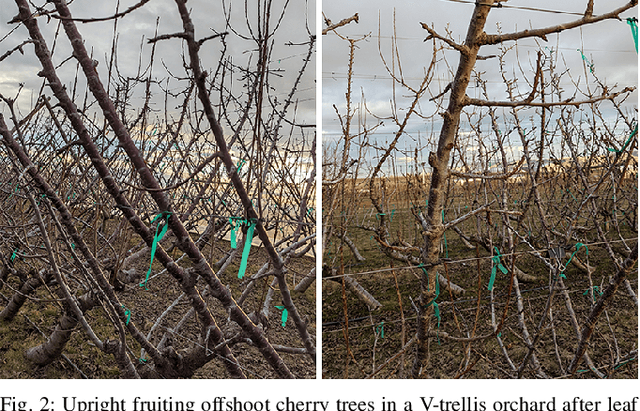

An autonomous robot for pruning modern, planar fruit trees

Jun 14, 2022

Abstract:Dormant pruning of fruit trees is an important task for maintaining tree health and ensuring high-quality fruit. Due to decreasing labor availability, pruning is a prime candidate for robotic automation. However, pruning also represents a uniquely difficult problem for robots, requiring robust systems for perception, pruning point determination, and manipulation that must operate under variable lighting conditions and in complex, highly unstructured environments. In this paper, we introduce a system for pruning sweet cherry trees (in a planar tree architecture called an upright fruiting offshoot configuration) that integrates various subsystems from our previous work on perception and manipulation. The resulting system is capable of operating completely autonomously and requires minimal control of the environment. We validate the performance of our system through field trials in a sweet cherry orchard, ultimately achieving a cutting success rate of 58%. Though not fully robust and requiring improvements in throughput, our system is the first to operate on fruit trees and represents a useful base platform to be improved in the future.

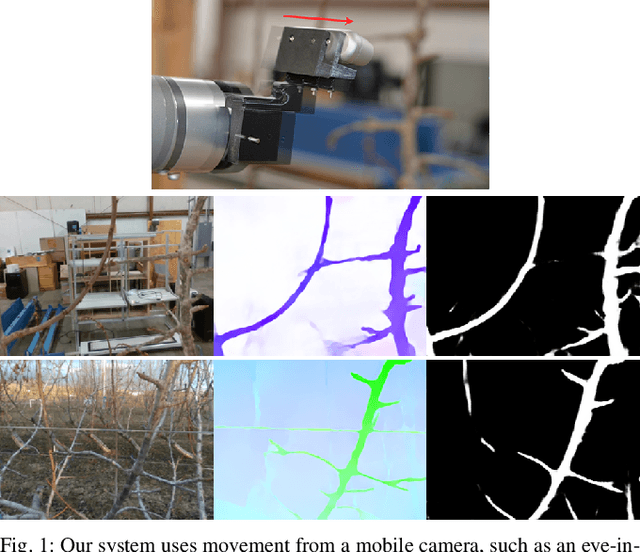

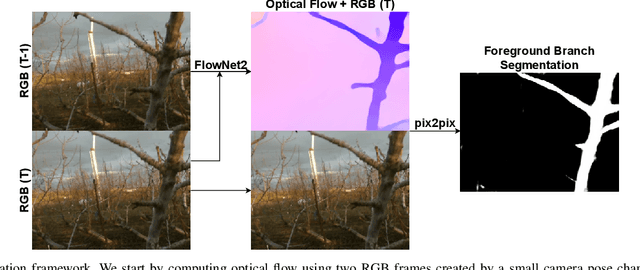

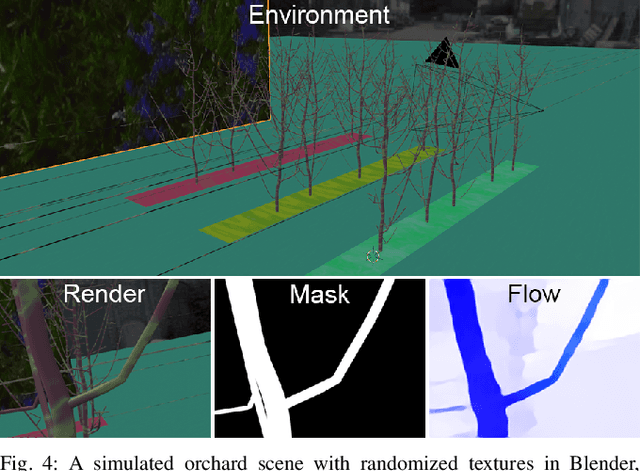

Optical flow-based branch segmentation for complex orchard environments

Feb 26, 2022

Abstract:Machine vision is a critical subsystem for enabling robots to perform a variety of tasks in orchard environments. However, orchards are highly visually complex environments, and computer vision algorithms operating in them must be able to contend with variable lighting conditions and background noise. Past work on enabling deep learning algorithms to operate in these environments has typically required either large amounts of hand-labeled data to train a deep neural network or physical control of the conditions under which the environment is perceived. In this paper, we train a neural network system in simulation only, using simulated RGB data and optical flow. The resulting neural network is able to perform foreground segmentation of branches in a busy orchard environment without additional real-world training or using any special setup or equipment beyond a standard camera. Our results show that our system is highly accurate and, when compared to a network using manually labeled RGBD data, achieves significantly more consistent and robust performance across environments that differ from the training set.

Precision fruit tree pruning using a learned hybrid vision/interaction controller

Sep 27, 2021

Abstract:Robotic tree pruning requires highly precise manipulator control in order to accurately align a cutting implement with the desired pruning point at the correct angle. Simultaneously, the robot must avoid applying excessive force to rigid parts of the environment such as trees, support posts, and wires. In this paper, we propose a hybrid control system that uses a learned vision-based controller to initially align the cutter with the desired pruning point, taking in images of the environment and outputting control actions. This controller is trained entirely in simulation, but transfers easily to real trees via a neural network which transforms raw images into a simplified, segmented representation. Once contact is established, the system hands over control to an interaction controller that guides the cutter pivot point to the branch while minimizing interaction forces. With this simple, yet novel, approach we demonstrate an improvement of over 30 percentage points in accuracy over a baseline controller that uses camera depth data.
