Nidhi Parayil

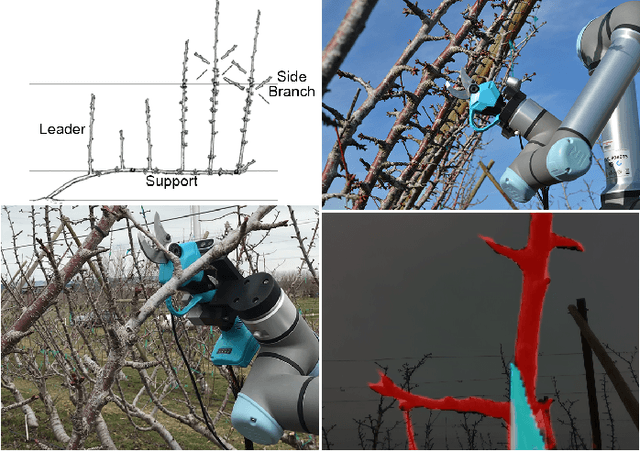

An autonomous robot for pruning modern, planar fruit trees

Jun 14, 2022

Abstract: Dormant pruning of fruit trees is an important task for maintaining tree health and ensuring high-quality fruit. Due to decreasing labor availability, pruning is a prime candidate for robotic automation. However, pruning also represents a uniquely difficult problem for robots, requiring robust systems for perception, pruning point determination, and manipulation that must operate under variable lighting conditions and in complex, highly unstructured environments. In this paper, we introduce a system for pruning sweet cherry trees (in a planar tree architecture called an upright fruiting offshoot configuration) that integrates various subsystems from our previous work on perception and manipulation. The resulting system is capable of operating completely autonomously and requires minimal control of the environment. We validate the performance of our system through field trials in a sweet cherry orchard, ultimately achieving a cutting success rate of 58%. Though not fully robust and requiring improvements in throughput, our system is the first to operate on fruit trees and represents a useful base platform to be improved in the future.

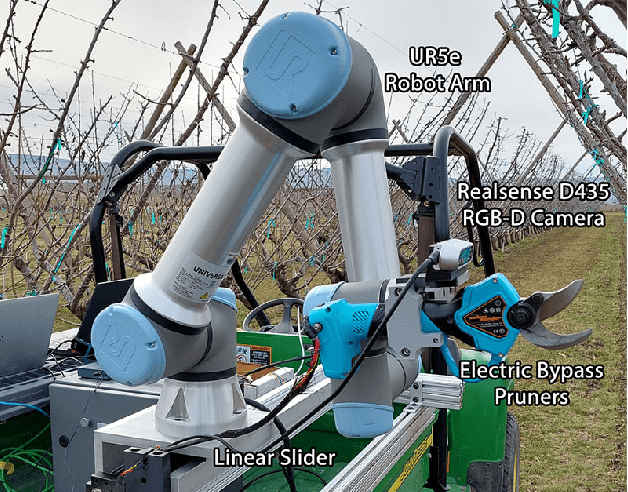

Precision fruit tree pruning using a learned hybrid vision/interaction controller

Sep 27, 2021

Abstract: Robotic tree pruning requires highly precise manipulator control in order to accurately align a cutting implement with the desired pruning point at the correct angle. Simultaneously, the robot must avoid applying excessive force to rigid parts of the environment such as trees, support posts, and wires. In this paper, we propose a hybrid control system that uses a learned vision-based controller to initially align the cutter with the desired pruning point, taking in images of the environment and outputting control actions. This controller is trained entirely in simulation, but transfers easily to real trees via a neural network which transforms raw images into a simplified, segmented representation. Once contact is established, the system hands over control to an interaction controller that guides the cutter pivot point to the branch while minimizing interaction forces. With this simple, yet novel, approach we demonstrate an improvement of over 30 percentage points in accuracy over a baseline controller that uses camera depth data.
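
The handover described in this abstract can be pictured as a two-mode switch. The sketch below is illustrative only and is not the authors' implementation: the class name `HybridController`, the `contact_threshold` value, the force-magnitude contact test, and the choice to latch into interaction mode once contact is detected are all assumptions made for the example. The two policies are passed in as plain callables standing in for the learned vision controller and the interaction controller.

```python
from dataclasses import dataclass
from typing import Any, Callable, Sequence, Tuple

Vector = Tuple[float, ...]


@dataclass
class HybridController:
    """Hypothetical two-mode controller: vision first, interaction after contact."""

    # Stand-in for the learned vision controller: image -> velocity command.
    vision_policy: Callable[[Any], Vector]
    # Stand-in for the interaction controller: force reading -> velocity command.
    interaction_policy: Callable[[Sequence[float]], Vector]
    # Assumed contact test: force magnitude (N) at or above this value.
    contact_threshold: float = 1.0
    in_contact: bool = False

    def step(self, image: Any, force: Sequence[float]) -> Vector:
        """Return one velocity command, switching modes when contact is first seen."""
        magnitude = sum(f * f for f in force) ** 0.5
        if not self.in_contact and magnitude >= self.contact_threshold:
            # Assumption: the handover is one-way (latched) for the approach.
            self.in_contact = True
        if self.in_contact:
            return self.interaction_policy(force)
        return self.vision_policy(image)


if __name__ == "__main__":
    controller = HybridController(
        vision_policy=lambda image: (0.0, 0.0, 0.1),  # creep forward
        interaction_policy=lambda force: tuple(-0.01 * f for f in force),
    )
    print(controller.step(None, (0.0, 0.0, 0.0)))  # vision mode
    print(controller.step(None, (0.0, 0.0, 2.0)))  # contact detected, interaction mode
```

Keeping the two policies as injected callables means the switching logic can be exercised without a camera, a force sensor, or a trained network.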
