Jorge A. Mendez

Embodied Lifelong Learning for Task and Motion Planning

Jul 13, 2023

Abstract:A robot deployed in a home over long stretches of time faces a true lifelong learning problem. As it seeks to provide assistance to its users, the robot should leverage any accumulated experience to improve its own knowledge to become a more proficient assistant. We formalize this setting with a novel lifelong learning problem formulation in the context of learning for task and motion planning (TAMP). Exploiting the modularity of TAMP systems, we develop a generative mixture model that produces candidate continuous parameters for a planner. Whereas most existing lifelong learning approaches determine a priori how data is shared across task models, our approach learns shared and non-shared models and determines which to use online during planning based on auxiliary tasks that serve as a proxy for each model's understanding of a state. Our method exhibits substantial improvements in planning success on simulated 2D domains and on several problems from the BEHAVIOR benchmark.

Robotic Manipulation Datasets for Offline Compositional Reinforcement Learning

Jul 13, 2023

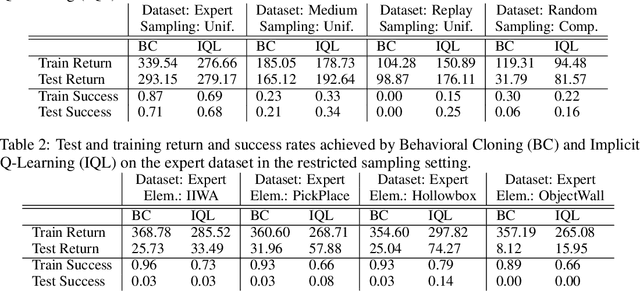

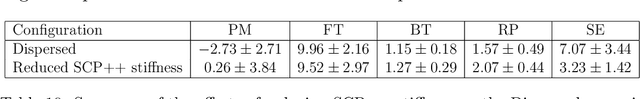

Abstract:Offline reinforcement learning (RL) is a promising direction that allows RL agents to pre-train on large datasets, avoiding the recurrence of expensive data collection. To advance the field, it is crucial to generate large-scale datasets. Compositional RL is particularly appealing for generating such large datasets, since 1) it permits creating many tasks from few components, 2) the task structure may enable trained agents to solve new tasks by combining relevant learned components, and 3) the compositional dimensions provide a notion of task relatedness. This paper provides four offline RL datasets for simulated robotic manipulation created using the 256 tasks from CompoSuite [Mendez et al., 2022a]. Each dataset is collected from an agent with a different degree of performance, and consists of 256 million transitions. We provide training and evaluation settings for assessing an agent's ability to learn compositional task policies. Our benchmarking experiments on each setting show that current offline RL methods can learn the training tasks to some extent and that compositional methods significantly outperform non-compositional methods. However, current methods are still unable to extract the tasks' compositional structure to generalize to unseen tasks, showing a need for further research in offline compositional RL.

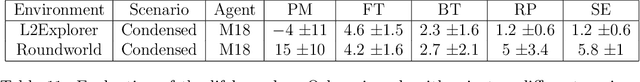

A Domain-Agnostic Approach for Characterization of Lifelong Learning Systems

Jan 18, 2023

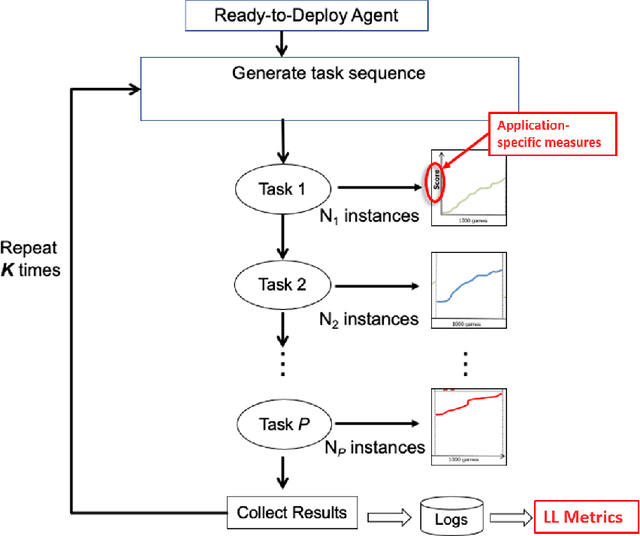

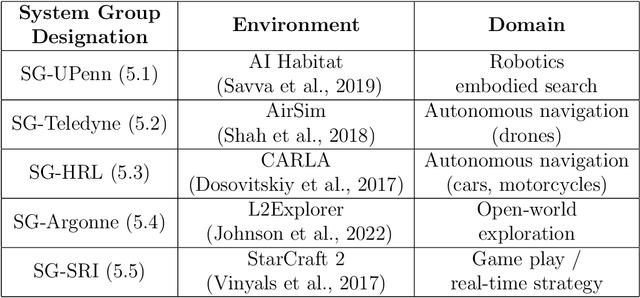

Abstract:Despite the advancement of machine learning techniques in recent years, state-of-the-art systems lack robustness to "real world" events, where the input distributions and tasks encountered by the deployed systems will not be limited to the original training context, and systems will instead need to adapt to novel distributions and tasks while deployed. This critical gap may be addressed through the development of "Lifelong Learning" systems that are capable of 1) Continuous Learning, 2) Transfer and Adaptation, and 3) Scalability. Unfortunately, efforts to improve these capabilities are typically treated as distinct areas of research that are assessed independently, without regard to the impact of each separate capability on other aspects of the system. We instead propose a holistic approach, using a suite of metrics and an evaluation framework to assess Lifelong Learning in a principled way that is agnostic to specific domains or system techniques. Through five case studies, we show that this suite of metrics can inform the development of varied and complex Lifelong Learning systems. We highlight how the proposed suite of metrics quantifies performance trade-offs present during Lifelong Learning system development - both the widely discussed Stability-Plasticity dilemma and the newly proposed relationship between Sample Efficient and Robust Learning. Further, we make recommendations for the formulation and use of metrics to guide the continuing development of Lifelong Learning systems and assess their progress in the future.

Lifelong Machine Learning of Functionally Compositional Structures

Jul 25, 2022

Abstract:A hallmark of human intelligence is the ability to construct self-contained chunks of knowledge and reuse them in novel combinations for solving different problems. Learning such compositional structures has been a challenge for artificial systems, due to the underlying combinatorial search. To date, research into compositional learning has largely proceeded separately from work on lifelong or continual learning. This dissertation integrated these two lines of work to present a general-purpose framework for lifelong learning of functionally compositional structures. The framework separates the learning into two stages: learning how to combine existing components to assimilate a novel problem, and learning how to adapt the existing components to accommodate the new problem. This separation explicitly handles the trade-off between stability and flexibility. This dissertation instantiated the framework into various supervised and reinforcement learning (RL) algorithms. Supervised learning evaluations found that 1) compositional models improve lifelong learning of diverse tasks, 2) the multi-stage process permits lifelong learning of compositional knowledge, and 3) the components learned by the framework represent self-contained and reusable functions. Similar RL evaluations demonstrated that 1) algorithms under the framework accelerate the discovery of high-performing policies, and 2) these algorithms retain or improve performance on previously learned tasks. The dissertation extended one lifelong compositional RL algorithm to the nonstationary setting, where the task distribution varies over time, and found that modularity permits individually tracking changes to different elements in the environment. The final contribution of this dissertation was a new benchmark for compositional RL, which exposed that existing methods struggle to discover the compositional properties of the environment.

How to Reuse and Compose Knowledge for a Lifetime of Tasks: A Survey on Continual Learning and Functional Composition

Jul 15, 2022

Abstract:A major goal of artificial intelligence (AI) is to create an agent capable of acquiring a general understanding of the world. Such an agent would require the ability to continually accumulate and build upon its knowledge as it encounters new experiences. Lifelong or continual learning addresses this setting, whereby an agent faces a continual stream of problems and must strive to capture the knowledge necessary for solving each new task it encounters. If the agent is capable of accumulating knowledge in some form of compositional representation, it could then selectively reuse and combine relevant pieces of knowledge to construct novel solutions. Despite the intuitive appeal of this simple idea, the literatures on lifelong learning and compositional learning have proceeded largely separately. In an effort to promote developments that bridge between the two fields, this article surveys their respective research landscapes and discusses existing and future connections between them.

CompoSuite: A Compositional Reinforcement Learning Benchmark

Jul 08, 2022

Abstract:We present CompoSuite, an open-source simulated robotic manipulation benchmark for compositional multi-task reinforcement learning (RL). Each CompoSuite task requires a particular robot arm to manipulate one individual object to achieve a task objective while avoiding an obstacle. This compositional definition of the tasks endows CompoSuite with two remarkable properties. First, varying the robot/object/objective/obstacle elements leads to hundreds of RL tasks, each of which requires a meaningfully different behavior. Second, RL approaches can be evaluated specifically for their ability to learn the compositional structure of the tasks. This latter capability to functionally decompose problems would enable intelligent agents to identify and exploit commonalities between learning tasks to handle large varieties of highly diverse problems. We benchmark existing single-task, multi-task, and compositional learning algorithms on various training settings, and assess their capability to compositionally generalize to unseen tasks. Our evaluation exposes the shortcomings of existing RL approaches with respect to compositionality and opens new avenues for investigation.
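The compositional task definition in this abstract can be illustrated with a short sketch. It is not CompoSuite's API: the element names are hypothetical placeholders, and the choice of four options per axis is an assumption (it is consistent with the 256 CompoSuite tasks cited elsewhere in this listing, but the abstract itself only says "hundreds"). The 224/32 train/held-out split is likewise an arbitrary illustrative choice.

```python
import random
from itertools import product

# Hypothetical placeholder names; the abstract does not enumerate the elements.
# Four options per axis is an assumption (4 ** 4 = 256 tasks).
AXES = {
    "robot": [f"robot_{i}" for i in range(4)],
    "object": [f"object_{i}" for i in range(4)],
    "objective": [f"objective_{i}" for i in range(4)],
    "obstacle": [f"obstacle_{i}" for i in range(4)],
}


def enumerate_tasks(axes):
    """Build every task as one choice of element per compositional axis."""
    names = list(axes)
    return [dict(zip(names, combo)) for combo in product(*(axes[n] for n in names))]


def split_tasks(tasks, num_train, seed=0):
    """Shuffle tasks and hold out the remainder to probe compositional generalization."""
    rng = random.Random(seed)
    shuffled = list(tasks)
    rng.shuffle(shuffled)
    return shuffled[:num_train], shuffled[num_train:]


tasks = enumerate_tasks(AXES)
train, held_out = split_tasks(tasks, num_train=224)  # split size is illustrative only
print(len(tasks), len(train), len(held_out))
```

Every held-out task is a new combination of elements, so an agent that has learned reusable per-element components could in principle solve it without further training, which is the kind of generalization the benchmark is described as measuring.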

Reinforcement Learning of Multi-Domain Dialog Policies Via Action Embeddings

Jul 01, 2022

Abstract:Learning task-oriented dialog policies via reinforcement learning typically requires large amounts of interaction with users, which in practice renders such methods unusable for real-world applications. In order to reduce the data requirements, we propose to leverage data from across different dialog domains, thereby reducing the amount of data required from each given domain. In particular, we propose to learn domain-agnostic action embeddings, which capture general-purpose structure that informs the system how to act given the current dialog context, and are then specialized to a specific domain. We show how this approach is capable of learning with significantly less interaction with users, with a reduction of 35% in the number of dialogs required to learn, and to a higher level of proficiency than training separate policies for each domain on a set of simulated domains.

Lifelong Inverse Reinforcement Learning

Jul 01, 2022

Abstract:Methods for learning from demonstration (LfD) have shown success in acquiring behavior policies by imitating a user. However, even for a single task, LfD may require numerous demonstrations. For versatile agents that must learn many tasks via demonstration, this process would substantially burden the user if each task were learned in isolation. To address this challenge, we introduce the novel problem of lifelong learning from demonstration, which allows the agent to continually build upon knowledge learned from previously demonstrated tasks to accelerate the learning of new tasks, reducing the amount of demonstrations required. As one solution to this problem, we propose the first lifelong learning approach to inverse reinforcement learning, which learns consecutive tasks via demonstration, continually transferring knowledge between tasks to improve performance.

Modular Lifelong Reinforcement Learning via Neural Composition

Jul 01, 2022

Abstract:Humans commonly solve complex problems by decomposing them into easier subproblems and then combining the subproblem solutions. This type of compositional reasoning permits reuse of the subproblem solutions when tackling future tasks that share part of the underlying compositional structure. In a continual or lifelong reinforcement learning (RL) setting, this ability to decompose knowledge into reusable components would enable agents to quickly learn new RL tasks by leveraging accumulated compositional structures. We explore a particular form of composition based on neural modules and present a set of RL problems that intuitively admit compositional solutions. Empirically, we demonstrate that neural composition indeed captures the underlying structure of this space of problems. We further propose a compositional lifelong RL method that leverages accumulated neural components to accelerate the learning of future tasks while retaining performance on previous tasks via off-line RL over replayed experiences.

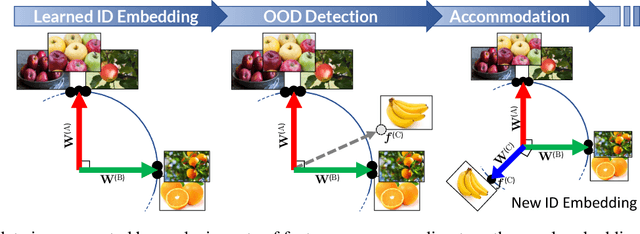

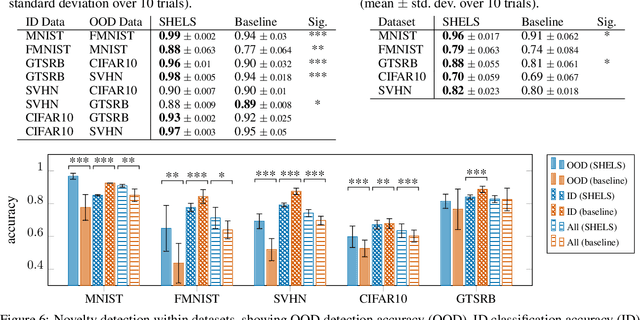

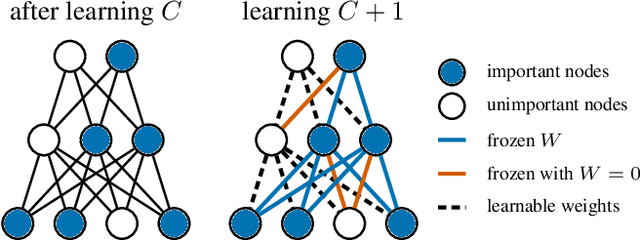

SHELS: Exclusive Feature Sets for Novelty Detection and Continual Learning Without Class Boundaries

Jun 28, 2022

Abstract:While deep neural networks (DNNs) have achieved impressive classification performance in closed-world learning scenarios, they typically fail to generalize to unseen categories in dynamic open-world environments, in which the number of concepts is unbounded. In contrast, human and animal learners have the ability to incrementally update their knowledge by recognizing and adapting to novel observations. In particular, humans characterize concepts via exclusive (unique) sets of essential features, which are used for both recognizing known classes and identifying novelty. Inspired by natural learners, we introduce a Sparse High-level-Exclusive, Low-level-Shared feature representation (SHELS) that simultaneously encourages learning exclusive sets of high-level features and essential, shared low-level features. The exclusivity of the high-level features enables the DNN to automatically detect out-of-distribution (OOD) data, while the efficient use of capacity via sparse low-level features permits accommodating new knowledge. The resulting approach uses OOD detection to perform class-incremental continual learning without known class boundaries. We show that using SHELS for novelty detection results in statistically significant improvements over state-of-the-art OOD detection approaches over a variety of benchmark datasets. Further, we demonstrate that the SHELS model mitigates catastrophic forgetting in a class-incremental learning setting, enabling a combined novelty detection and accommodation framework that supports learning in open-world settings.
