JongYoon Lim

A Sign Language Recognition System with Pepper, Lightweight-Transformer, and LLM

Sep 28, 2023

Abstract:This research explores using lightweight deep neural network architectures to enable the humanoid robot Pepper to understand American Sign Language (ASL) and facilitate non-verbal human-robot interaction. First, we introduce a lightweight and efficient model for ASL understanding optimized for embedded systems, ensuring rapid sign recognition while conserving computational resources. Building upon this, we employ large language models (LLMs) for intelligent robot interactions. Through intricate prompt engineering, we tailor interactions to allow the Pepper Robot to generate natural Co-Speech Gesture responses, laying the foundation for more organic and intuitive humanoid-robot dialogues. Finally, we present an integrated software pipeline, embodying advancements in a socially aware AI interaction model. Leveraging the Pepper Robot's capabilities, we demonstrate the practicality and effectiveness of our approach in real-world scenarios. The results highlight a profound potential for enhancing human-robot interaction through non-verbal interactions, bridging communication gaps, and making technology more accessible and understandable.

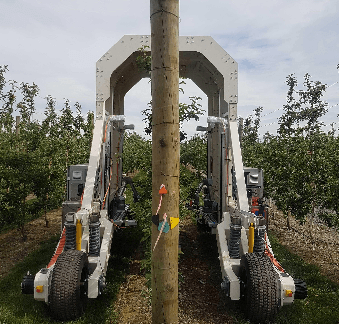

Seeing the Fruit for the Leaves: Robotically Mapping Apple Fruitlets in a Commercial Orchard

Aug 15, 2023

Abstract:Aotearoa New Zealand has a strong and growing apple industry but struggles to access workers to complete skilled, seasonal tasks such as thinning. To ensure effective thinning and make informed decisions on a per-tree basis, it is crucial to accurately measure the crop load of individual apple trees. However, this task poses challenges due to the dense foliage that hides the fruitlets within the tree structure. In this paper, we introduce the vision system of an automated apple fruitlet thinning robot, developed to tackle the labor shortage issue. This paper presents the initial design, implementation, and evaluation specifics of the system. The platform straddles the 3.4 m tall 2D apple canopy structures to create an accurate map of the fruitlets on each tree. We show that this platform can measure the fruitlet load on an apple tree by scanning through both sides of the branch. The requirement of an overarching platform was justified since two-sided scans had a higher counting accuracy (81.17%) than one-sided scans (73.7%). The system was also demonstrated to produce size estimates within 5.9% RMSE of their true size.

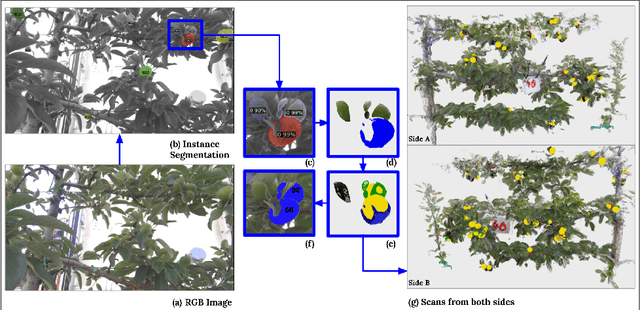

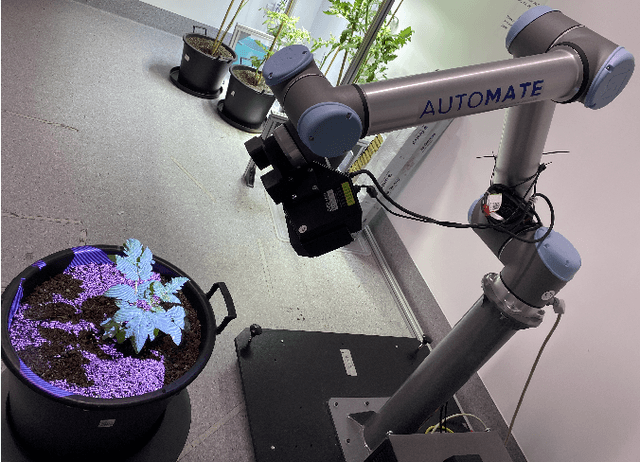

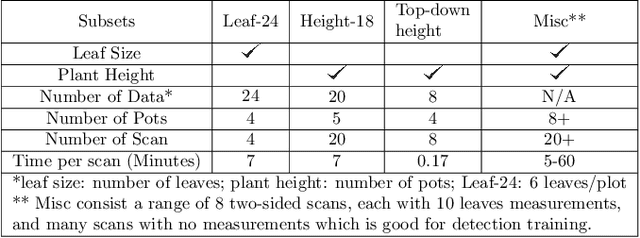

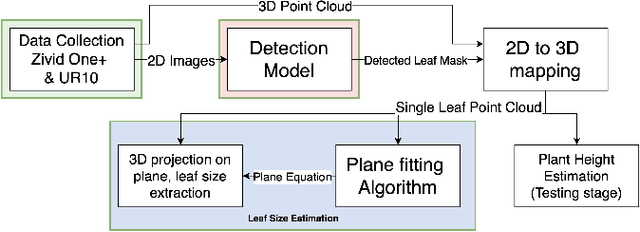

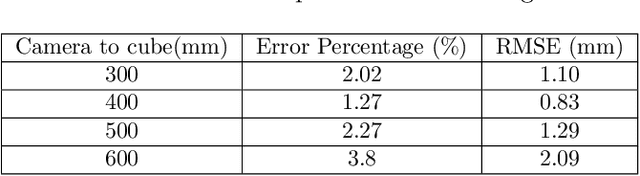

Look how they have grown: Non-destructive Leaf Detection and Size Estimation of Tomato Plants for 3D Growth Monitoring

Apr 07, 2023

Abstract:Smart farming is a growing field as technology advances. Plant characteristics are crucial indicators for monitoring plant growth. Research has been done to estimate characteristics like leaf area index, leaf disease, and plant height. However, few methods have been applied to non-destructive measurements of leaf size. In this paper, an automated non-destructive image-based measuring system is presented, which uses 2D and 3D data obtained using a Zivid 3D camera, creating 3D virtual representations (digital twins) of the tomato plants. Leaves are detected in the corresponding 2D RGB images and mapped to their 3D point cloud using the detected leaf masks; the leaf point cloud is then passed to a plane-fitting algorithm to extract the leaf size for growth monitoring. The performance of the measurement platform has been measured through a comprehensive trial on real-world tomato plants with quantified performance metrics compared to ground truth measurements. Three tomato leaf and height datasets (including 50+ 3D point cloud files of tomato plants) were collected and open-sourced in this project. The proposed leaf size estimation method demonstrates an RMSE value of 4.47 mm and an R^2 value of 0.87. The overall measurement system (leaf detection and size estimation algorithms combined) delivers an RMSE value of 8.13 mm and an R^2 value of 0.899.

* 10 pages, 10 figures
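The RMSE and R^2 figures quoted in the abstract above can be illustrated with a minimal sketch. The function names and the sample leaf lengths are hypothetical and are not taken from the paper; R^2 is computed here as the coefficient of determination, which is an assumption, since the abstract does not say which definition the authors used.

```python
import math


def rmse(predicted, actual):
    """Root-mean-square error between estimates and ground truth."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(actual))


def r_squared(predicted, actual):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for p, a in zip(predicted, actual))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical leaf lengths in millimetres (illustrative values only).
ground_truth_mm = [52.0, 61.0, 75.0, 88.0]
estimated_mm = [50.0, 64.0, 73.0, 91.0]

print(f"RMSE: {rmse(estimated_mm, ground_truth_mm):.2f} mm")
print(f"R^2:  {r_squared(estimated_mm, ground_truth_mm):.3f}")
```

RMSE is expressed in the same unit as the measurement (millimetres here), while R^2 is unitless, which is why the abstract reports both.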

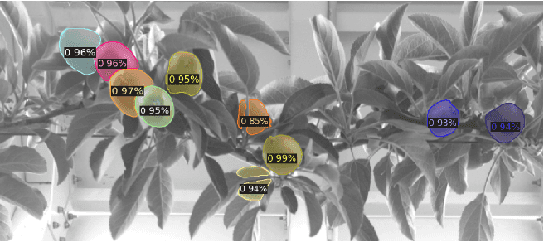

Seeing the Fruit for the Leaves: Towards Automated Apple Fruitlet Thinning

Feb 20, 2023

Abstract:Following a global trend, the lack of reliable access to skilled labour is causing critical issues for the effective management of apple orchards. One of the primary challenges is maintaining skilled human operators capable of making precise fruitlet thinning decisions. Thinning requires accurately measuring the true crop load for individual apple trees to provide optimal thinning decisions on an individual basis. This is a challenging task due to the dense foliage obscuring the fruitlets within the tree structure. This paper presents the initial design, implementation, and evaluation details of the vision system for an automatic apple fruitlet thinning robot to meet this need. The platform consists of a UR5 robotic arm and stereo cameras which enable it to look around the leaves to map the precise number and size of the fruitlets on the apple branches. We show that this platform can measure the fruitlet load on the apple tree with 84% accuracy in a real-world commercial apple orchard while being 87% precise.

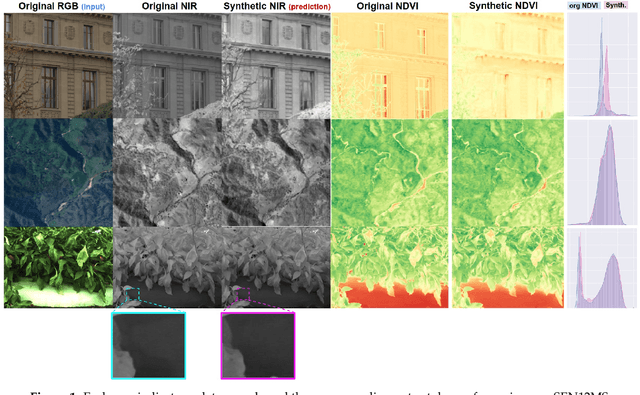

deepNIR: Datasets for generating synthetic NIR images and improved fruit detection system using deep learning techniques

Mar 17, 2022

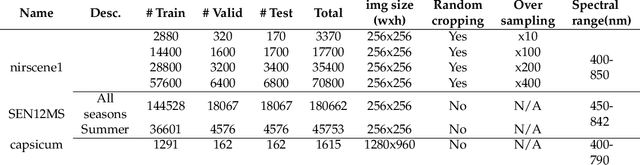

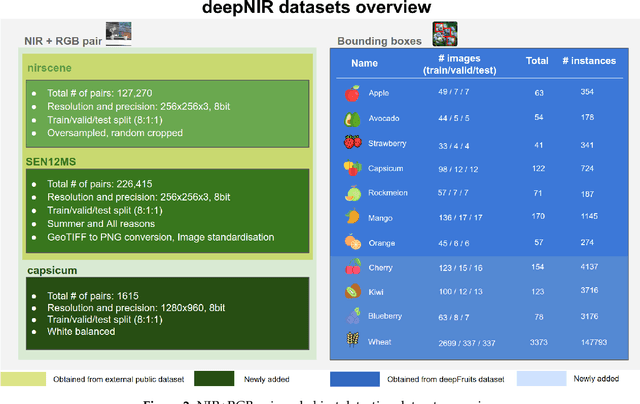

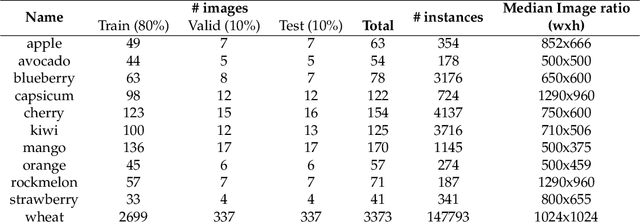

Abstract:This paper presents datasets utilised for synthetic near-infrared (NIR) image generation and bounding-box level fruit detection systems. It is undeniable that high-calibre machine learning frameworks such as TensorFlow or PyTorch, and large-scale ImageNet or COCO datasets with the aid of accelerated GPU hardware have pushed the limit of machine learning techniques for more than a decade. Among these breakthroughs, a high-quality dataset is one of the essential building blocks that can lead to success in model generalisation and the deployment of data-driven deep neural networks. In particular, synthetic data generation tasks often require more training samples than other supervised approaches. Therefore, in this paper, we share the NIR+RGB datasets that are re-processed from two public datasets (i.e., nirscene and SEN12MS) and our novel NIR+RGB sweet pepper (capsicum) dataset. We quantitatively and qualitatively demonstrate that these NIR+RGB datasets are sufficient to be used for synthetic NIR image generation. We achieved Fréchet Inception Distance (FID) of 11.36, 26.53, and 40.15 for nirscene1, SEN12MS, and sweet pepper datasets respectively. In addition, we release manual annotations of 11 fruit bounding boxes that can be exported in various formats using a cloud service. Four newly added fruits [blueberry, cherry, kiwi, and wheat] join the seven from our previous work presented in the deepFruits project [apple, avocado, capsicum, mango, orange, rockmelon, strawberry] to make up 11 bounding box datasets. The total number of bounding box instances in the dataset is 162k and it is ready to use from the cloud service. For the evaluation of the dataset, a YOLOv5 single-stage detector is used and achieves mean average precision, mAP[0.5:0.95], of [min: 0.49, max: 0.812]. We hope these datasets are useful and serve as a baseline for future studies.

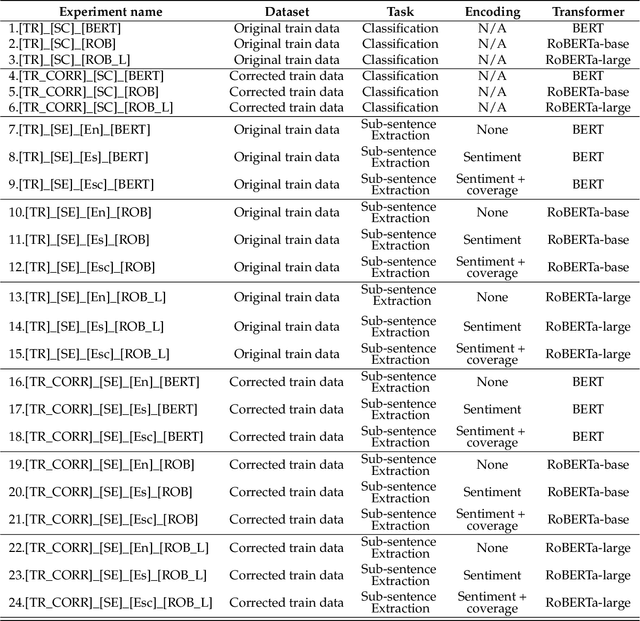

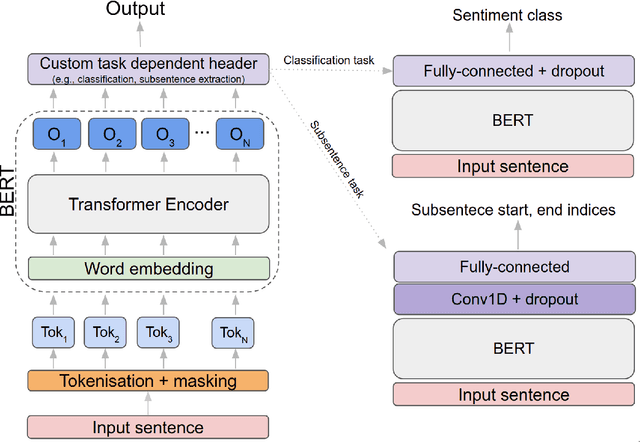

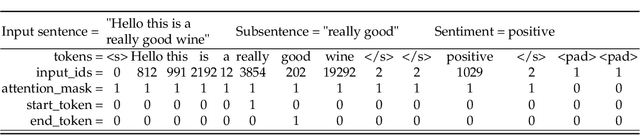

Subsentence Extraction from Text Using Coverage-Based Deep Learning Language Models

May 07, 2021

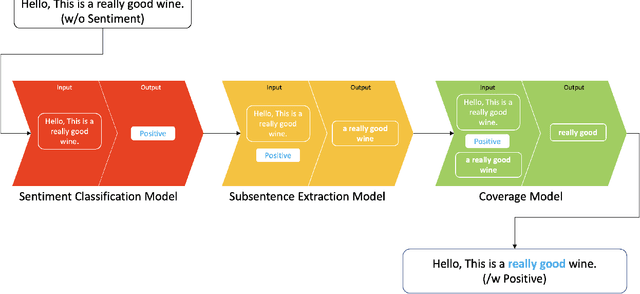

Abstract:Sentiment prediction remains a challenging and unresolved task in various research fields, including psychology, neuroscience, and computer science. This stems from its high degree of subjectivity and limited input sources that can effectively capture the actual sentiment. This can be even more challenging with only text-based input. Meanwhile, the rise of deep learning and an unprecedentedly large volume of data have paved the way for artificial intelligence to perform impressively accurate predictions or even human-level reasoning. Drawing inspiration from this, we propose a coverage-based sentiment and subsentence extraction system that estimates a span of input text and recursively feeds this information back to the networks. The predicted subsentence consists of auxiliary information expressing a sentiment. This is an important building block for enabling vivid and epic sentiment delivery (within the scope of this paper) and for other natural language processing tasks such as text summarisation and Q&A. Our approach outperforms the state-of-the-art approaches by a large margin in subsentence prediction (i.e., Average Jaccard scores from 0.72 to 0.89). For the evaluation, we designed rigorous experiments consisting of 24 ablation studies. Finally, our learned lessons are returned to the community by sharing software packages and a public dataset that can reproduce the results presented in this paper.

* 27 pages, 16 figures
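The Average Jaccard score mentioned in the abstract above can be sketched as follows. This is a minimal word-level version under stated assumptions: the whitespace tokenisation, the lowercasing, and the names `jaccard` and `average_jaccard` are illustrative choices and may differ from the evaluation code the authors released.

```python
def jaccard(predicted: str, reference: str) -> float:
    """Word-level Jaccard similarity: |A ∩ B| / |A ∪ B|."""
    predicted_words = set(predicted.lower().split())
    reference_words = set(reference.lower().split())
    if not predicted_words and not reference_words:
        return 1.0  # two empty spans are treated as identical
    overlap = predicted_words & reference_words
    union = predicted_words | reference_words
    return len(overlap) / len(union)


def average_jaccard(pairs) -> float:
    """Mean Jaccard score over (predicted, reference) span pairs."""
    if not pairs:
        raise ValueError("pairs must be non-empty")
    return sum(jaccard(p, r) for p, r in pairs) / len(pairs)


# Hypothetical predicted vs. reference subsentences (illustrative only).
examples = [
    ("so happy today", "happy today"),
    ("really bad service", "really bad service"),
]
print(f"Average Jaccard: {average_jaccard(examples):.3f}")
```

A score of 1.0 means the predicted span contains exactly the same set of words as the reference, and 0.0 means the two share no words.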

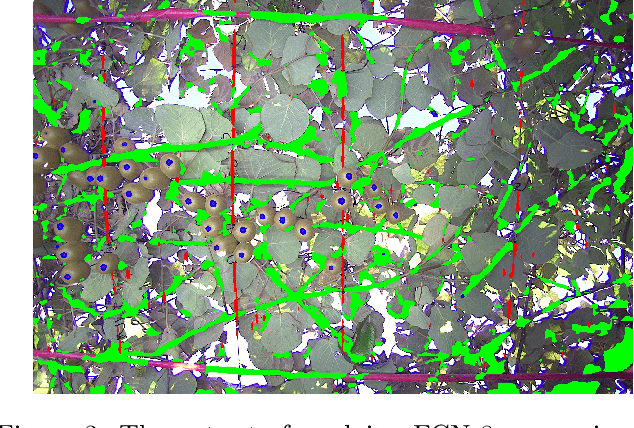

Kiwifruit detection in challenging conditions

Jun 21, 2020

Abstract:Accurate and reliable kiwifruit detection is one of the biggest challenges in developing a selective fruit harvesting robot. The vision system of an orchard robot faces difficulties such as dynamic lighting conditions and fruit occlusions. This paper presents a semantic segmentation approach with two novel image preprocessing techniques designed to detect kiwifruit under the harsh lighting conditions found in the canopy. The performance of the presented system is evaluated on a 3D real-world image set of kiwifruit under different lighting conditions (typical, glare, and overexposed). Alone, the semantic segmentation approach achieves an F1_score of 0.82 on the typical lighting image set, but struggles with harsh lighting, with an F1_score of 0.13. Utilising the preprocessing techniques, the vision system under harsh lighting improves to an F1_score of 0.42. To address the fruit occlusion challenge, the overall approach was found to be capable of detecting 87.0% of non-occluded and 30.0% of occluded kiwifruit across all lighting conditions.
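For readers unfamiliar with the F1_score used above, the sketch below computes it from a predicted and a ground-truth binary mask. It is illustrative only: the flat-list mask representation and the name `f1_score` are assumptions, and the abstract does not state whether the paper scores per pixel or per fruit.

```python
def f1_score(predicted, truth) -> float:
    """F1 = 2 * precision * recall / (precision + recall) for binary labels."""
    if len(predicted) != len(truth):
        raise ValueError("masks must have the same length")
    true_pos = sum(1 for p, t in zip(predicted, truth) if p and t)
    false_pos = sum(1 for p, t in zip(predicted, truth) if p and not t)
    false_neg = sum(1 for p, t in zip(predicted, truth) if not p and t)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical flattened masks: 1 = kiwifruit, 0 = background.
predicted_mask = [1, 1, 0, 0, 1]
truth_mask = [1, 0, 0, 1, 1]
print(f"F1: {f1_score(predicted_mask, truth_mask):.3f}")
```

Because F1 is the harmonic mean of precision and recall, it drops sharply when either one collapses, which is consistent with the low scores reported under harsh lighting.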

Deep Neural Network Based Real-time Kiwi Fruit Flower Detection in an Orchard Environment

Jun 08, 2020

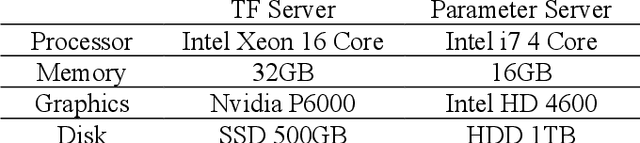

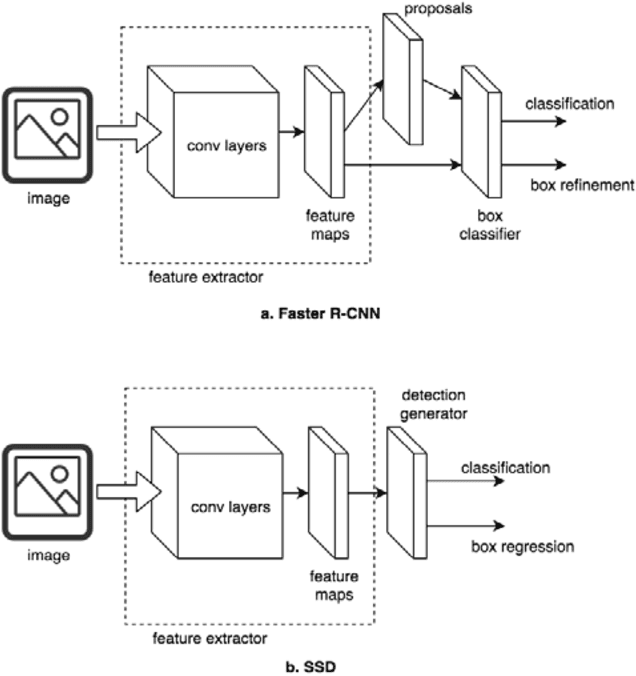

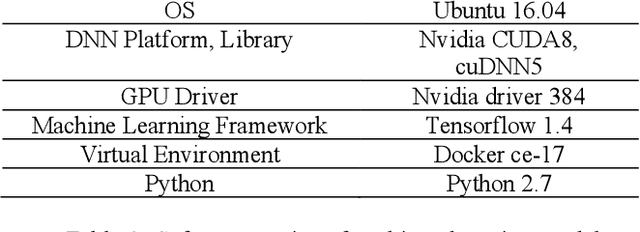

Abstract:In this paper, we present a novel approach to kiwi fruit flower detection using Deep Neural Networks (DNNs) to build an accurate, fast, and robust autonomous pollination robot system. Recent work in deep neural networks has shown outstanding performance on object detection tasks in many areas. Inspired by this, we aim to exploit DNNs for kiwi fruit flower detection and present intensive experiments and their analysis on two state-of-the-art object detectors: Faster R-CNN and Single Shot Detector (SSD) Net, and two feature extractors: Inception Net V2 and NAS Net, with real-world orchard datasets. We also compare those approaches to find an optimal model which is suitable for a real-time agricultural pollination robot system in terms of accuracy and processing speed. We perform experiments with datasets collected from different seasons and locations (spatio-temporal consistency) in order to demonstrate the performance of the generalized model. The proposed system demonstrates promising results of 0.919, 0.874, and 0.889 for precision, recall, and F1-score respectively on our real-world dataset, and the performance satisfies the requirement for deploying the system onto an autonomous pollination robotics system.
