Jamie Bell

Kiwifruit detection in challenging conditions

Jun 21, 2020

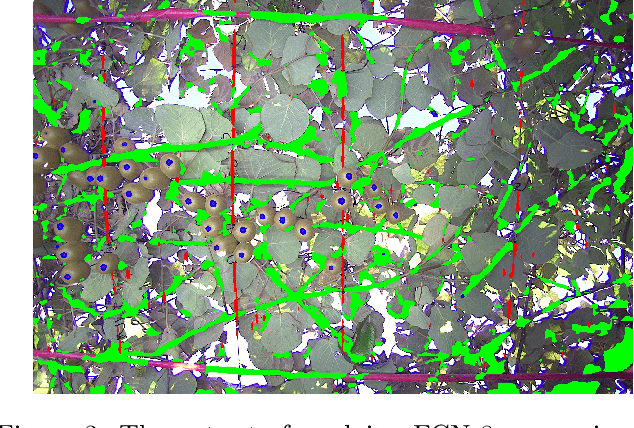

Abstract:Accurate and reliable kiwifruit detection is one of the biggest challenges in developing a selective fruit harvesting robot. The vision system of an orchard robot faces difficulties such as dynamic lighting conditions and fruit occlusions. This paper presents a semantic segmentation approach with two novel image prepossessing techniques designed to detect kiwifruit under the harsh lighting conditions found in the canopy. The performance of the presented system is evaluated on a 3D real-world image set of kiwifruit under different lighting conditions (typical, glare, and overexposed). Alone the semantic segmentation approach achieves an F1_score of 0.82 on the typical lighting image set, but struggles with harsh lighting with an F1_score of 0.13. Utilising the prepossessing techniques the vision system under harsh lighting improves to an F1_score 0.42. To address the fruit occlusion challenge, the overall approach was found to be capable of detecting 87.0% of non-occluded and 30.0% of occluded kiwifruit across all lighting conditions.

Deep Neural Network Based Real-time Kiwi Fruit Flower Detection in an Orchard Environment

Jun 08, 2020

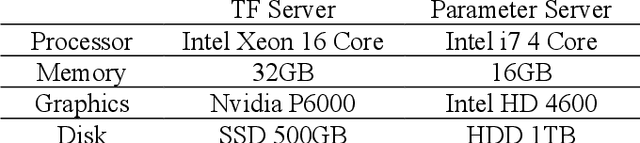

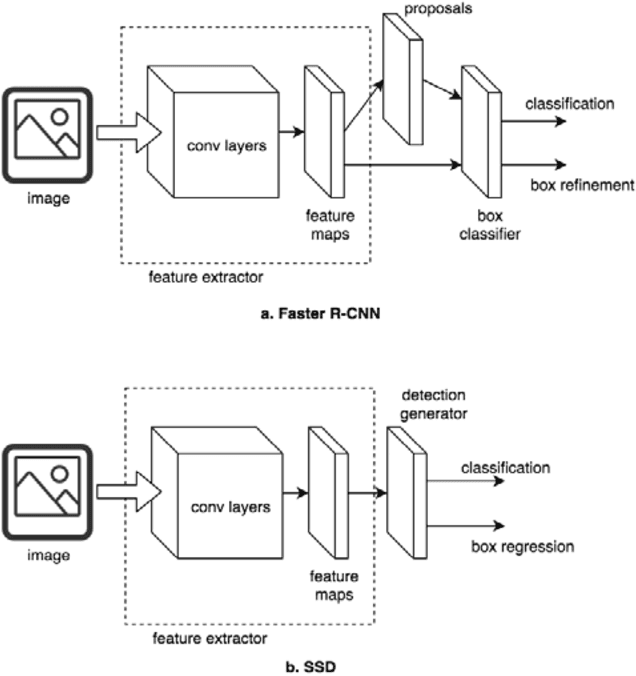

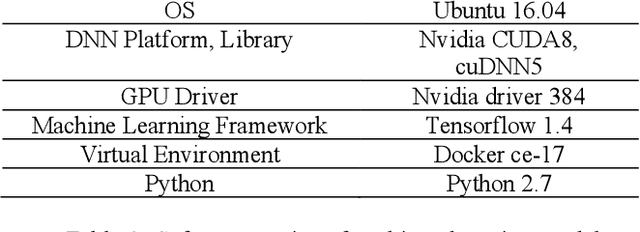

Abstract:In this paper, we present a novel approach to kiwi fruit flower detection using Deep Neural Networks (DNNs) to build an accurate, fast, and robust autonomous pollination robot system. Recent work in deep neural networks has shown outstanding performance on object detection tasks in many areas. Inspired this, we aim for exploiting DNNs for kiwi fruit flower detection and present intensive experiments and their analysis on two state-of-the-art object detectors; Faster R-CNN and Single Shot Detector (SSD) Net, and feature extractors; Inception Net V2 and NAS Net with real-world orchard datasets. We also compare those approaches to find an optimal model which is suitable for a real-time agricultural pollination robot system in terms of accuracy and processing speed. We perform experiments with dataset collected from different seasons and locations (spatio-temporal consistency) in order to demonstrate the performance of the generalized model. The proposed system demonstrates promising results of 0.919, 0.874, and 0.889 for precision, recall, and F1-score respectively on our real-world dataset, and the performance satisfies the requirement for deploying the system onto an autonomous pollination robotics system.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge