Johannes Meyer

A Novel Signal Processing Strategy for Short-Range Laser Feedback Interferometry Sensors

Jun 12, 2025

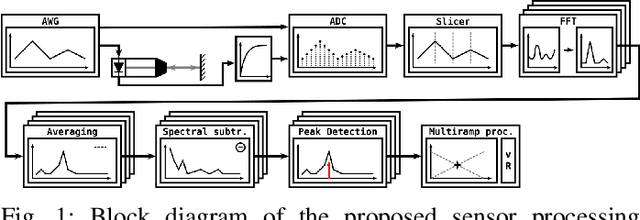

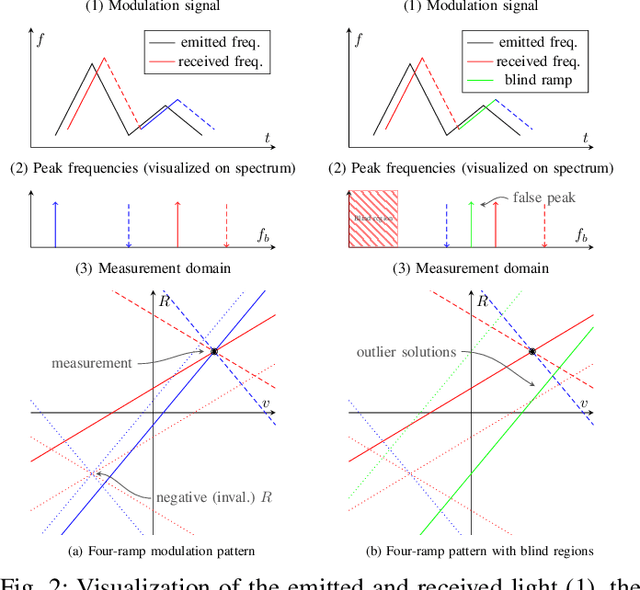

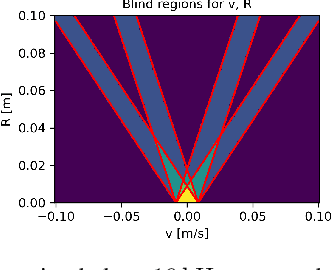

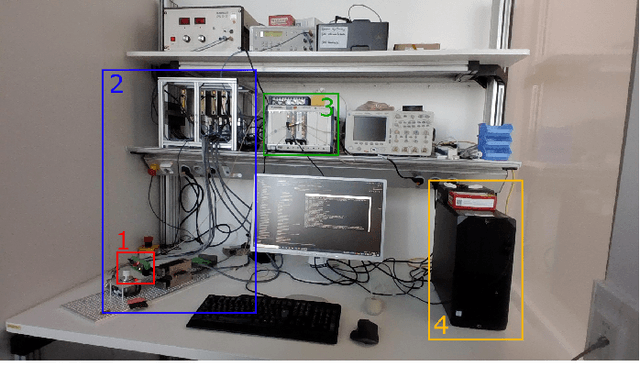

Abstract: The rapid evolution of wearable technologies, such as AR glasses, demands compact, energy-efficient sensors capable of high-precision measurements in dynamic environments. Traditional Frequency-Modulated Continuous Wave (FMCW) Laser Feedback Interferometry (LFI) sensors, while promising, falter in applications that feature small distances, high velocities, shallow modulation, and low-power constraints. We propose a novel sensor-processing pipeline that reliably extracts distance and velocity measurements at distances as low as 1 cm. As a core contribution, we introduce a four-ramp modulation scheme that resolves persistent ambiguities in beat frequency signs and overcomes spectral blind regions caused by hardware limitations. Based on measurements of the implemented pipeline, a noise model is defined to evaluate its performance and sensitivity to several algorithmic and working point parameters. We show that the pipeline generally achieves robust and low-noise measurements using state-of-the-art hardware.

Automatic Target-Less Camera-LiDAR Calibration From Motion and Deep Point Correspondences

Apr 26, 2024

Abstract: Sensor setups of robotic platforms commonly include both camera and LiDAR as they provide complementary information. However, fusing these two modalities typically requires a highly accurate calibration between them. In this paper, we propose MDPCalib which is a novel method for camera-LiDAR calibration that requires neither human supervision nor any specific target objects. Instead, we utilize sensor motion estimates from visual and LiDAR odometry as well as deep learning-based 2D-pixel-to-3D-point correspondences that are obtained without in-domain retraining. We represent the camera-LiDAR calibration as a graph optimization problem and minimize the costs induced by constraints from sensor motion and point correspondences. In extensive experiments, we demonstrate that our approach yields highly accurate extrinsic calibration parameters and is robust to random initialization. Additionally, our approach generalizes to a wide range of sensor setups, which we demonstrate by employing it on various robotic platforms including a self-driving perception car, a quadruped robot, and a UAV. To make our calibration method publicly accessible, we release the code on our project website at http://calibration.cs.uni-freiburg.de.

Towards Energy Efficient Mobile Eye Tracking for AR Glasses through Optical Sensor Technology

Dec 06, 2022

Abstract: After the introduction of smartphones and smartwatches, AR glasses are considered the next breakthrough in the field of wearables. While the transition from smartphones to smartwatches was based mainly on established display technologies, the display technology of AR glasses presents a technological challenge. Many display technologies, such as retina projectors, are based on continuous adaptive control of the display based on the user's pupil position. Furthermore, head-mounted systems require an adaptation and extension of established interaction concepts to provide the user with an immersive experience. Eye-tracking is a crucial technology to help AR glasses achieve a breakthrough through optimized display technology and gaze-based interaction concepts. Available eye-tracking technologies, such as VOG, do not meet the requirements of AR glasses, especially regarding power consumption, robustness, and integrability. To further overcome these limitations and push mobile eye-tracking for AR glasses forward, novel laser-based eye-tracking sensor technologies are researched in this thesis. The thesis contributes to a significant scientific advancement towards energy-efficient mobile eye-tracking for AR glasses.

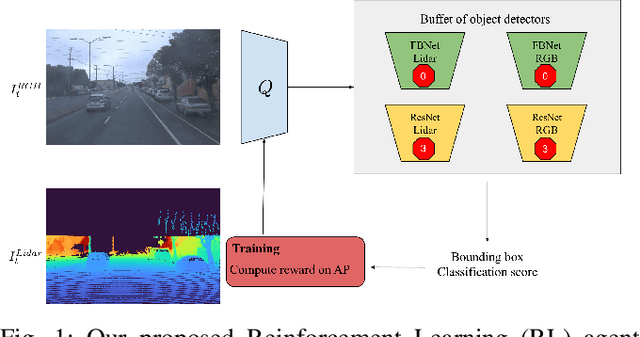

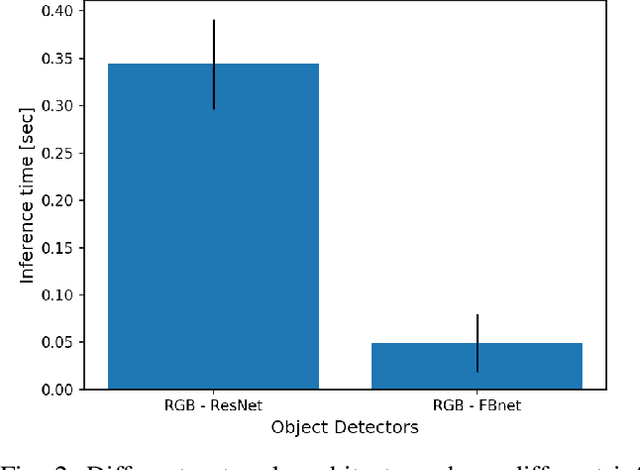

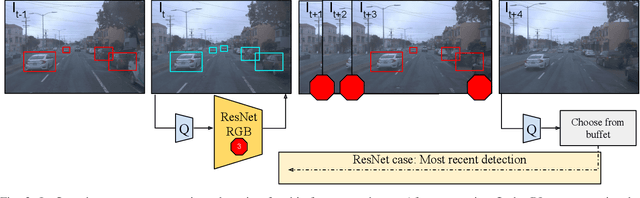

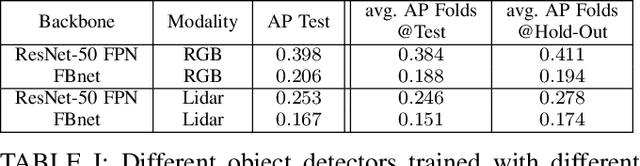

Modality-Buffet for Real-Time Object Detection

Nov 17, 2020

Abstract: Real-time object detection in videos using lightweight hardware is a crucial component of many robotic tasks. Detectors using different modalities and with varying computational complexities offer different trade-offs. One option is to have a very lightweight model that can predict from all modalities at once for each frame. However, in some situations (e.g., in static scenes) it might be better to have a more complex but more accurate model and to extrapolate from previous predictions for the frames coming in at processing time. We formulate this task as a sequential decision making problem and use reinforcement learning (RL) to generate a policy that decides from the RGB input which detector out of a portfolio of different object detectors to take for the next prediction. The objective of the RL agent is to maximize the accuracy of the predictions per image. We evaluate the approach on the Waymo Open Dataset and show that it exceeds the performance of each single detector.

Stochastic gradient descent for hybrid quantum-classical optimization

Oct 02, 2019

Abstract: Within the context of hybrid quantum-classical optimization, gradient descent based optimizers typically require the evaluation of expectation values with respect to the outcome of parameterized quantum circuits. In this work, we explore the significant consequences of the simple observation that the estimation of these quantities on quantum hardware is a form of stochastic gradient descent optimization. We formalize this notion, which allows us to show that in many relevant cases, including VQE, QAOA and certain quantum classifiers, estimating expectation values with $k$ measurement outcomes results in optimization algorithms whose convergence properties can be rigorously well understood, for any value of $k$. In fact, even using single measurement outcomes for the estimation of expectation values is sufficient. Moreover, in many settings the required gradients can be expressed as linear combinations of expectation values -- originating, e.g., from a sum over local terms of a Hamiltonian, a parameter shift rule, or a sum over data-set instances -- and we show that in these cases $k$-shot expectation value estimation can be combined with sampling over terms of the linear combination, to obtain "doubly stochastic" gradient descent optimizers. For all algorithms we prove convergence guarantees, providing a framework for the derivation of rigorous optimization results in the context of near-term quantum devices. Additionally, we explore numerically these methods on benchmark VQE, QAOA and quantum-enhanced machine learning tasks and show that treating the stochastic settings as hyper-parameters allows for state-of-the-art results with significantly fewer circuit executions and measurements.
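The idea of $k$-shot gradient estimation can be illustrated with a small classical simulation. The sketch below is not the authors' code: it assumes a toy single-qubit circuit RY(theta)|0> measured in the Z basis, for which the exact expectation value is cos(theta), and it uses the parameter-shift rule with finite-shot estimates. All function names, the step-size schedule, and the default values are hypothetical choices for illustration. It covers only the $k$-shot part and does not sample over terms of a linear combination.

```python
import numpy as np


def sample_z(theta, shots, rng):
    """Simulate `shots` Z-basis outcomes (+1 or -1) for the state RY(theta)|0>."""
    p_plus = np.cos(theta / 2.0) ** 2  # probability of measuring +1
    return np.where(rng.random(shots) < p_plus, 1.0, -1.0)


def estimate_expectation(theta, shots, rng):
    """Unbiased k-shot estimate of <Z> = cos(theta)."""
    return sample_z(theta, shots, rng).mean()


def stochastic_gradient(theta, shots, rng):
    """Parameter-shift gradient built from two independent k-shot estimates."""
    plus = estimate_expectation(theta + np.pi / 2.0, shots, rng)
    minus = estimate_expectation(theta - np.pi / 2.0, shots, rng)
    return 0.5 * (plus - minus)


def run_sgd(theta0=0.5, shots=1, steps=2000, lr0=0.5, seed=0):
    """Minimise <Z> with SGD; the 1/sqrt(t) step size is an assumed schedule."""
    rng = np.random.default_rng(seed)
    theta = theta0
    for t in range(steps):
        step = lr0 / np.sqrt(t + 1.0)
        theta -= step * stochastic_gradient(theta, shots, rng)
    return theta


if __name__ == "__main__":
    for k in (1, 10, 100):
        theta = run_sgd(shots=k)
        print(f"shots={k:3d}  final cost cos(theta)={np.cos(theta):+.3f}")
```

Each gradient estimate is unbiased even with a single shot, so the loop is ordinary stochastic gradient descent on the cost cos(theta); more shots per step reduce the variance of each update at the price of more simulated measurements.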
