Jodi Forlizzi

Can You Keep a Secret? Exploring AI for Care Coordination in Cognitive Decline

Dec 14, 2025

Abstract:The increasing number of older adults who experience cognitive decline places a burden on informal caregivers, whose support with tasks of daily living determines whether older adults can remain in their homes. To explore how agents might help lower-SES older adults to age-in-place, we interviewed ten pairs of older adults experiencing cognitive decline and their informal caregivers. We explored how they coordinate care, manage burdens, and sustain autonomy and privacy. Older adults exercised control by delegating tasks to specific caregivers, keeping information about all the care they received from their adult children. Many abandoned some tasks of daily living, lowering their quality of life to ease caregiver burden. One effective strategy, piggybacking, uses spontaneous overlaps in errands to get more work done with less caregiver effort. This raises the questions: (i) Can agents help with piggyback coordination? (ii) Would it keep older adults in their homes longer, while not increasing caregiver burden?

Exploring the Innovation Opportunities for Pre-trained Models

May 21, 2025

Abstract:Innovators transform the world by understanding where services are successfully meeting customers' needs and then using this knowledge to identify failsafe opportunities for innovation. Pre-trained models have changed the AI innovation landscape, making it faster and easier to create new AI products and services. Understanding where pre-trained models are successful is critical for supporting AI innovation. Unfortunately, the hype cycle surrounding pre-trained models makes it hard to know where AI can really be successful. To address this, we investigated pre-trained model applications developed by HCI researchers as a proxy for commercially successful applications. The research applications demonstrate technical capabilities, address real user needs, and avoid ethical challenges. Using an artifact analysis approach, we categorized capabilities, opportunity domains, data types, and emerging interaction design patterns, uncovering some of the opportunity space for innovation with pre-trained models.

Rethinking Theory of Mind Benchmarks for LLMs: Towards A User-Centered Perspective

Apr 15, 2025

Abstract:The last couple of years have witnessed emerging research that appropriates Theory-of-Mind (ToM) tasks designed for humans to benchmark LLMs' ToM capabilities as an indication of LLMs' social intelligence. However, this approach has a number of limitations. Drawing on existing psychology and AI literature, we summarize the theoretical, methodological, and evaluation limitations by pointing out that certain issues are inherently present in the original ToM tasks used to evaluate humans' ToM, and that these issues persist and are exacerbated when the tasks are appropriated to benchmark LLMs' ToM. Taking a human-computer interaction (HCI) perspective, these limitations prompt us to rethink the definition and criteria of ToM in ToM benchmarks in a more dynamic, interactional approach that accounts for user preferences, needs, and experiences with LLMs in such evaluations. We conclude by outlining potential opportunities and challenges towards this direction.

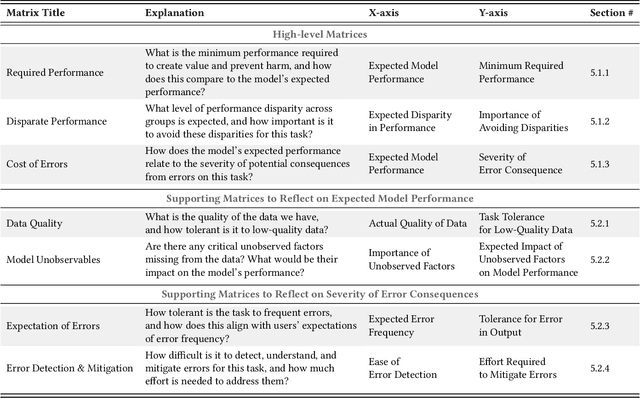

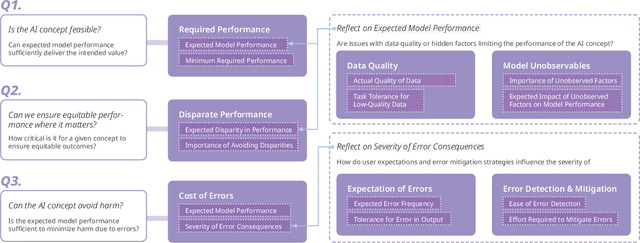

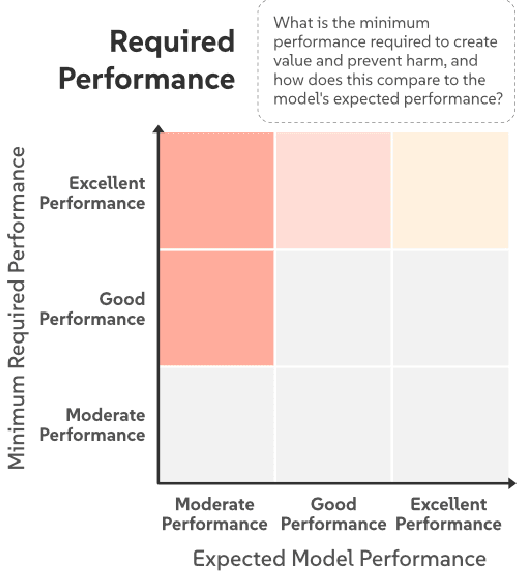

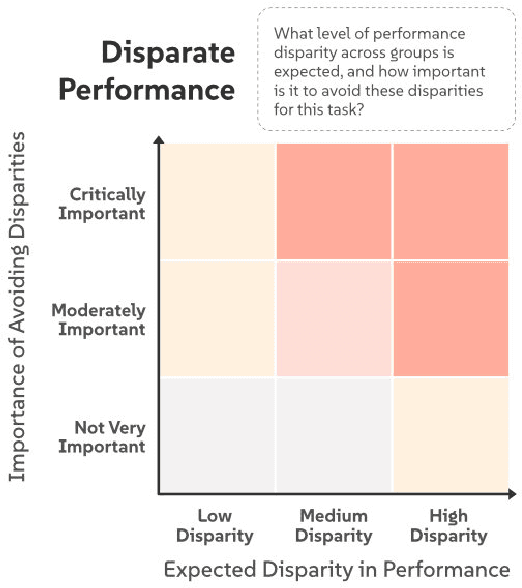

AI Mismatches: Identifying Potential Algorithmic Harms Before AI Development

Feb 25, 2025

Abstract:AI systems are often introduced with high expectations, yet many fail to deliver, resulting in unintended harm and missed opportunities for benefit. We frequently observe significant "AI Mismatches", where the system's actual performance falls short of what is needed to ensure safety and co-create value. These mismatches are particularly difficult to address once development is underway, highlighting the need for early-stage intervention. Navigating complex, multi-dimensional risk factors that contribute to AI Mismatches is a persistent challenge. To address it, we propose an AI Mismatch approach to anticipate and mitigate risks early on, focusing on the gap between realistic model performance and required task performance. Through an analysis of 774 AI cases, we extracted a set of critical factors, which informed the development of seven matrices that map the relationships between these factors and highlight high-risk areas. Through case studies, we demonstrate how our approach can help reduce risks in AI development.

Championing Research Through Design in HRI

Aug 20, 2019

Abstract:One of the challenges in conducting research at the intersection of the CHI and Human-Robot Interaction (HRI) communities is addressing the gap in acceptable design research methods between the two. While HRI is focused on interaction with robots and includes design research in its scope, the community is not as accustomed to exploratory design methods as the CHI community. This workshop paper argues for bringing exploratory design, and specifically Research through Design (RtD) methods that have been established in CHI for the past decade, to the foreground of HRI. RtD can enable design researchers in the field of HRI to conduct exploratory design work that asks what is the right thing to design and to share it within the community.

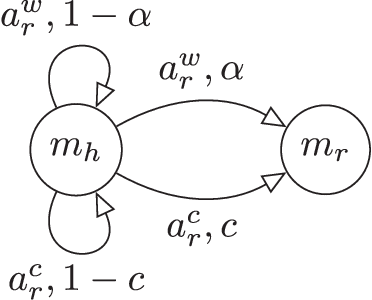

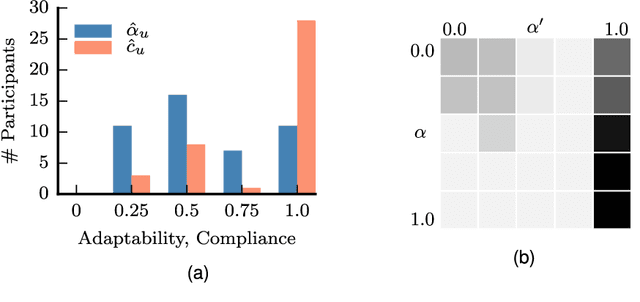

Mathematical Models of Adaptation in Human-Robot Collaboration

Aug 04, 2017

Abstract:A robot operating in isolation needs to reason over the uncertainty in its model of the world and adapt its own actions to account for this uncertainty. Similarly, a robot interacting with people needs to reason over its uncertainty over the human internal state, as well as over how this state may change, as humans adapt to the robot. This paper summarizes our own work in this area, which depicts the different ways that probabilistic planning and game-theoretic algorithms can enable such reasoning in robotic systems that collaborate with people. We start with a general formulation of the problem as a two-player game with incomplete information. We then articulate the different assumptions within this general formulation, and we explain how these lead to exciting and diverse robot behaviors in real-time interactions with actual human subjects, in a variety of manufacturing, personal robotics and assistive care settings.

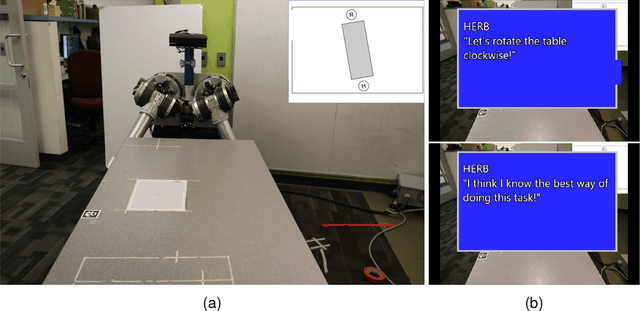

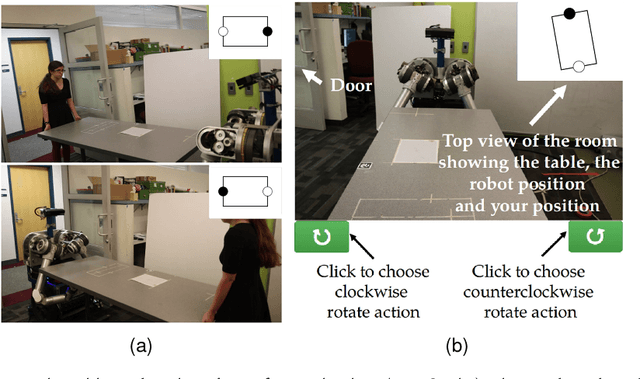

Planning with Verbal Communication for Human-Robot Collaboration

Jun 14, 2017

Abstract:Human collaborators effectively coordinate their actions through both verbal and non-verbal communication. We believe that the same should hold for human-robot teams. We propose a formalism that enables a robot to decide optimally between doing a task and issuing an utterance. We focus on two types of utterances: verbal commands, where the robot expresses how it wants its human teammate to behave, and state-conveying actions, where the robot explains why it is behaving this way. Human subject experiments show that enabling the robot to issue verbal commands is the most effective form of communicating objectives, while retaining user trust in the robot. Communicating "why" information should be done judiciously, since many participants questioned the truthfulness of the robot's statements.
