Qiaosi Wang

Hyun Jin

Situated, Dynamic, and Subjective: Envisioning the Design of Theory-of-Mind-Enabled Everyday AI with Industry Practitioners

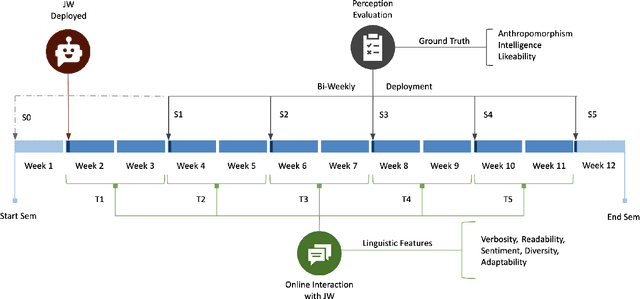

Feb 11, 2026

Abstract: Theory of Mind (ToM) -- the ability to infer what others are thinking (e.g., intentions) from observable cues -- is traditionally considered fundamental to human social interactions. This has sparked growing efforts in building and benchmarking AI's ToM capability, yet little is known about how such capability could translate into the design and experience of everyday user-facing AI products and services. We conducted 13 co-design sessions with 26 U.S.-based AI practitioners to envision, reflect on, and distill design recommendations for ToM-enabled everyday AI products and services that are both future-looking and grounded in the realities of AI design and development practices. Analysis revealed three interrelated design recommendations: ToM-enabled AI should 1) be situated in the social contexts that shape users' mental states, 2) be responsive to the dynamic nature of mental states, and 3) be attuned to subjective individual differences. We surface design tensions within each recommendation that reveal a broader gap between practitioners' envisioned futures of ToM-enabled AI and the realities of current AI design and development practices. These findings point toward the need to move beyond a static, inference-driven approach to ToM and toward designing ToM as a pervasive capability that supports continuous human-AI interaction loops.

* 16 pages, preprint for ACM CHI 2026 Conference

Rethinking Theory of Mind Benchmarks for LLMs: Towards A User-Centered Perspective

Apr 15, 2025

Abstract: The last couple of years have witnessed emerging research that appropriates Theory-of-Mind (ToM) tasks designed for humans to benchmark LLMs' ToM capabilities as an indication of LLMs' social intelligence. However, this approach has a number of limitations. Drawing on existing psychology and AI literature, we summarize the theoretical, methodological, and evaluation limitations by pointing out that certain issues are inherently present in the original ToM tasks used to evaluate humans' ToM, and that these issues persist and are exacerbated when the tasks are appropriated to benchmark LLMs' ToM. From a human-computer interaction (HCI) perspective, these limitations prompt us to rethink the definition and criteria of ToM in ToM benchmarks through a more dynamic, interactional approach that accounts for user preferences, needs, and experiences with LLMs in such evaluations. We conclude by outlining potential opportunities and challenges in this direction.

Navigating AI Fallibility: Examining People's Reactions and Perceptions of AI after Encountering Personality Misrepresentations

May 25, 2024

Abstract: Many hyper-personalized AI systems profile people's characteristics (e.g., personality traits) to provide personalized recommendations. These systems are increasingly used to facilitate interactions among people, such as providing teammate recommendations. Despite improved accuracy, such systems are not immune to errors when making inferences about people's most personal traits. These errors manifest as AI misrepresentations. However, the repercussions of such AI misrepresentations are unclear, especially for people's reactions to and perceptions of the AI. We present two studies that examine how people react to and perceive the AI after encountering personality misrepresentations in AI-facilitated team matching in a higher education context. Through semi-structured interviews (n=20) and a survey experiment (n=198), we pinpoint how people's existing and newly acquired AI knowledge could shape their perceptions of and reactions to the AI after encountering AI misrepresentations. Specifically, we identified three rationales that people adopted through knowledge acquired from AI (mis)representations: AI works like a machine, human, and/or magic. These rationales are highly connected to people's reactions of over-trusting, rationalizing, and forgiving AI misrepresentations. Finally, we found that people's existing AI knowledge, i.e., AI literacy, could moderate changes in their trust in AI after encountering AI misrepresentations, but not changes in their social perceptions of AI. We discuss the role of people's AI knowledge when facing AI fallibility and implications for designing responsible mitigation and repair strategies.

Mutual Theory of Mind for Human-AI Communication

Oct 07, 2022

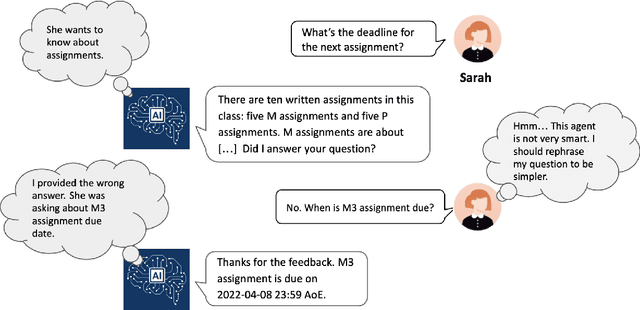

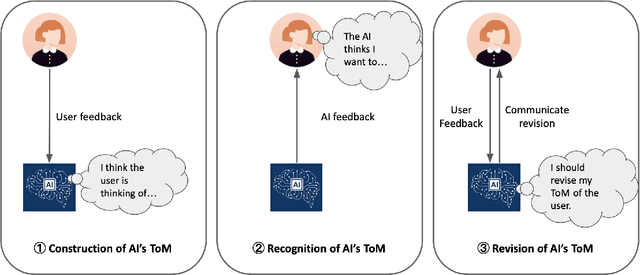

Abstract: From navigation systems to smart assistants, we communicate with various AI systems on a daily basis. At the core of such human-AI communication, we convey our understanding of the AI's capability to the AI through utterances of varying complexity, and the AI conveys its understanding of our needs and goals to us through system outputs. However, this communication process is prone to failures for two reasons: the AI might have the wrong understanding of the user, and the user might have the wrong understanding of the AI. To enhance mutual understanding in human-AI communication, we posit the Mutual Theory of Mind (MToM) framework, inspired by our basic human capability of "Theory of Mind." In this paper, we discuss the motivation for the MToM framework and its three key components that continuously shape the mutual understanding during three stages of human-AI communication. We then describe a case study inspired by the MToM framework to demonstrate the power of the MToM framework to guide the design and understanding of human-AI communication.
