Planning with Verbal Communication for Human-Robot Collaboration

Paper and Code

Jun 14, 2017

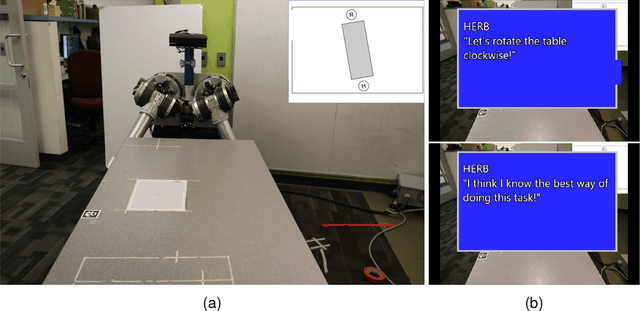

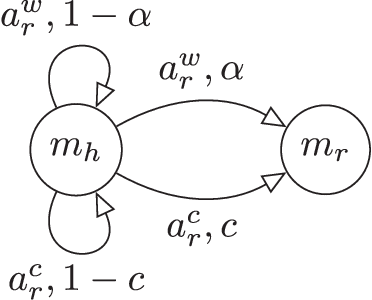

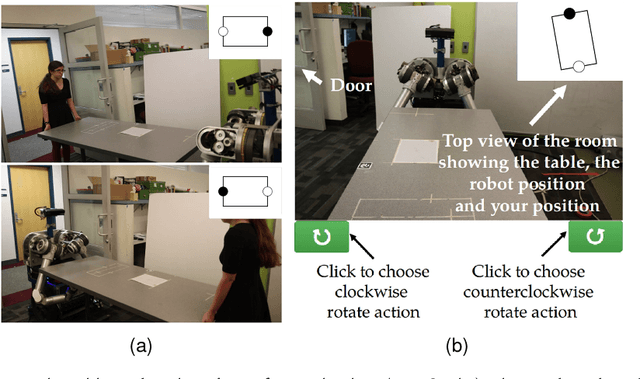

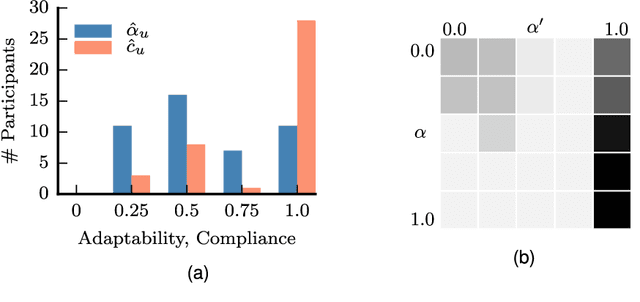

Human collaborators coordinate their actions effectively through both verbal and non-verbal communication. We believe that the same should hold for human-robot teams. We propose a formalism that enables a robot to decide optimally between performing a task and issuing an utterance. We focus on two types of utterances: verbal commands, where the robot expresses how it wants its human teammate to behave, and state-conveying actions, where the robot explains why it is behaving this way. Human subject experiments show that enabling the robot to issue verbal commands is the most effective form of communicating objectives, while retaining user trust in the robot. Communicating "why" information should be done judiciously, since many participants questioned the truthfulness of the robot's statements.
