Jiunn-Kai Huang

Realtime Safety Control for Bipedal Robots to Avoid Multiple Obstacles via CLF-CBF Constraints

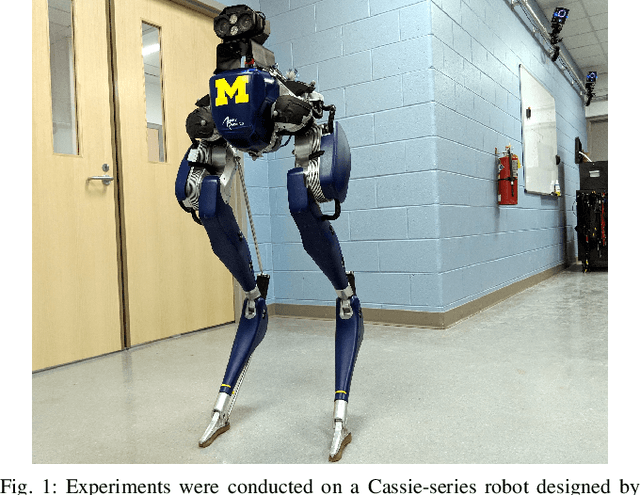

Jan 05, 2023

Abstract:This paper presents a reactive planning system that allows a Cassie-series bipedal robot to avoid multiple non-overlapping obstacles via a single, continuously differentiable control barrier function (CBF). The overall system detects an individual obstacle via a height map derived from a LiDAR point cloud and computes an elliptical outer approximation, which is then turned into a CBF. The QP-CLF-CBF formalism developed by Ames et al. is applied to ensure that safe trajectories are generated. Liveness is ensured by an analysis of induced equilibrium points that are distinct from the goal state. Safe planning in environments with multiple obstacles is demonstrated both in simulation and experimentally on the Cassie biped.
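To make the idea of turning an elliptical obstacle approximation into a CBF concrete, here is a minimal sketch under strong simplifying assumptions that are not taken from the paper: a 2D single-integrator model (the state derivative equals the commanded velocity), one obstacle, and a CBF-only safety filter without the CLF term. The function names `ellipse_cbf` and `safety_filter` and the gain `alpha` are hypothetical. With a single linear constraint, the quadratic program has a closed-form solution, so no QP solver is needed.

```python
def ellipse_cbf(x, center, P):
    """Barrier h(x) = (x - c)^T P (x - c) - 1 and its gradient.

    h >= 0 outside the ellipse defined by the symmetric 2x2 matrix P.
    """
    dx = [x[0] - center[0], x[1] - center[1]]
    Pdx = [P[0][0] * dx[0] + P[0][1] * dx[1],
           P[1][0] * dx[0] + P[1][1] * dx[1]]
    h = dx[0] * Pdx[0] + dx[1] * Pdx[1] - 1.0
    grad = [2.0 * Pdx[0], 2.0 * Pdx[1]]  # valid because P is symmetric
    return h, grad


def safety_filter(x, u_ref, center, P, alpha=1.0):
    """Minimally modify u_ref so that grad_h . u >= -alpha * h.

    Assumes single-integrator dynamics x_dot = u (an illustration only,
    not the robot model used in the paper).
    """
    h, a = ellipse_cbf(x, center, P)
    b = -alpha * h
    violation = b - (a[0] * u_ref[0] + a[1] * u_ref[1])
    if violation <= 0.0:
        return list(u_ref)  # reference command already satisfies the constraint
    norm_sq = a[0] ** 2 + a[1] ** 2
    if norm_sq == 0.0:
        return list(u_ref)  # gradient vanishes at the ellipse center
    scale = violation / norm_sq
    # Project u_ref onto the half-space {u : a . u >= b}.
    return [u_ref[0] + scale * a[0], u_ref[1] + scale * a[1]]


# Robot at (-2, 0) heading straight at a unit-circle obstacle at the origin.
identity = [[1.0, 0.0], [0.0, 1.0]]
u_safe = safety_filter([-2.0, 0.0], [1.0, 0.0], [0.0, 0.0], identity)
print(u_safe)
```

In this example the filter slows the approach toward the obstacle and leaves commands that already satisfy the barrier condition untouched.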

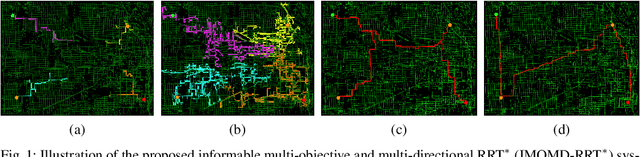

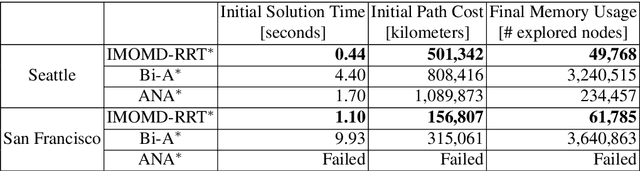

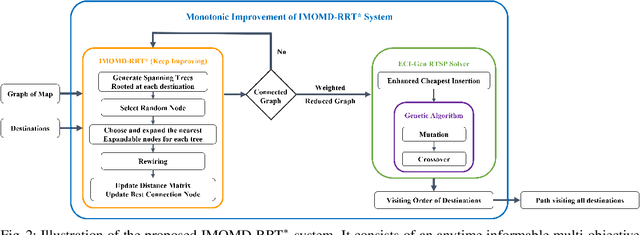

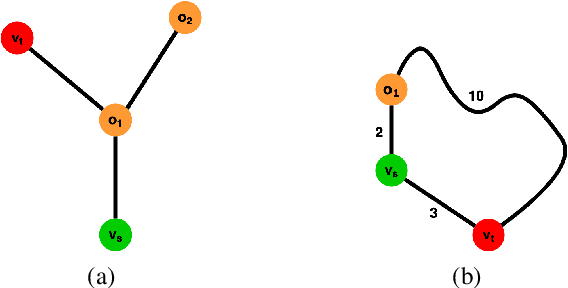

Informable Multi-Objective and Multi-Directional RRT* System for Robot Path Planning

May 30, 2022

Abstract:Multi-objective or multi-destination path planning is crucial for mobile robotics applications such as mobility as a service, robotics inspection, and electric vehicle charging for long trips. This work proposes an anytime iterative system to concurrently solve the multi-objective path planning problem and determine the visiting order of destinations. The system is comprised of an anytime informable multi-objective and multi-directional RRT* algorithm to form a simple connected graph, and a proposed solver that consists of an enhanced cheapest insertion algorithm and a genetic algorithm to solve the relaxed traveling salesman problem in polynomial time. Moreover, a list of waypoints is often provided for robotics inspection and vehicle routing so that the robot can preferentially visit certain equipment or areas of interest. We show that the proposed system can inherently incorporate such knowledge, and can navigate through challenging topology. The proposed anytime system is evaluated on large and complex graphs built for real-world driving applications. All implementations are coded in multi-threaded C++ and are available at: https://github.com/UMich-BipedLab/IMOMD-RRTStar.
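As a rough illustration of how a visiting order can be built greedily, the sketch below implements the plain, textbook cheapest insertion heuristic on a distance matrix. It is not the enhanced variant or the genetic algorithm described in the abstract, and it builds a closed tour rather than solving the relaxed problem; the function names and the toy data are hypothetical.

```python
import math


def cheapest_insertion(dist, start=0):
    """Build a closed tour by repeatedly inserting the cheapest node.

    dist is a square matrix of pairwise costs. At each step, the
    unvisited node and tour edge with the smallest added cost are chosen.
    """
    n = len(dist)
    if n == 1:
        return [start]
    remaining = set(range(n)) - {start}
    # Seed the tour with the start node and its nearest neighbour.
    nearest = min(sorted(remaining), key=lambda j: dist[start][j])
    tour = [start, nearest]
    remaining.remove(nearest)
    while remaining:
        best = None  # (added_cost, node, insert_position)
        for k in sorted(remaining):
            for i in range(len(tour)):
                a, b = tour[i], tour[(i + 1) % len(tour)]
                added = dist[a][k] + dist[k][b] - dist[a][b]
                if best is None or added < best[0]:
                    best = (added, k, i + 1)
        _, k, pos = best
        tour.insert(pos, k)
        remaining.remove(k)
    return tour


def tour_length(dist, tour):
    """Total cost of the closed tour, including the edge back to the start."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]]
               for i in range(len(tour)))


# Toy example: four destinations at the corners of a unit square.
points = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
dist = [[math.dist(p, q) for q in points] for p in points]
tour = cheapest_insertion(dist)
print(tour, tour_length(dist, tour))
```

Each insertion step scans every remaining node against every tour edge, so the heuristic runs in polynomial time, which is the property the abstract relies on for its solver.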

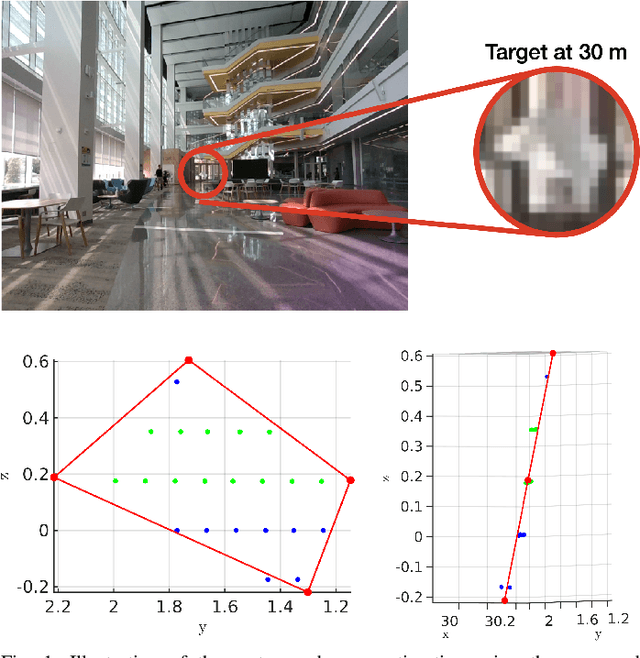

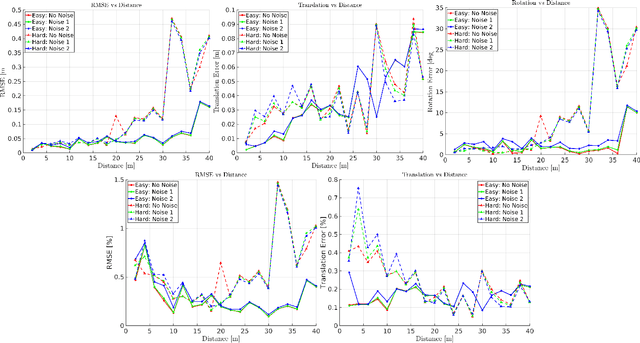

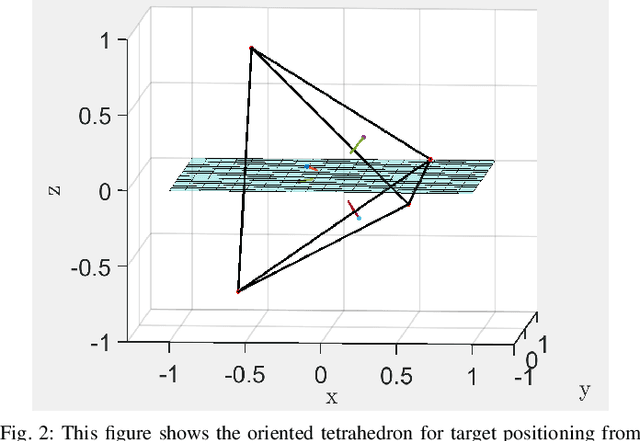

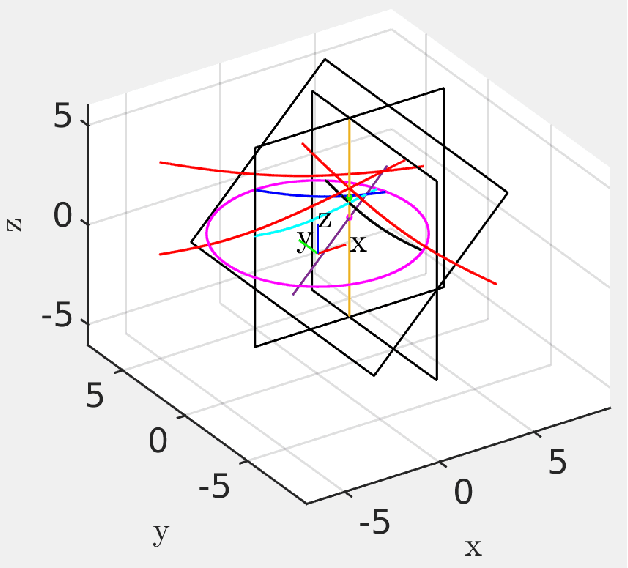

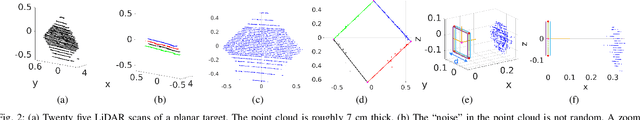

Optimal Target Shape for LiDAR Pose Estimation

Sep 06, 2021

Abstract:Targets are essential in problems such as object tracking in cluttered or textureless environments, camera (and multi-sensor) calibration tasks, and simultaneous localization and mapping (SLAM). Target shapes for these tasks typically are symmetric (square, rectangular, or circular) and work well for structured, dense sensor data such as pixel arrays (i.e., images). However, symmetric shapes lead to pose ambiguity when using sparse sensor data such as LiDAR point clouds and suffer from the quantization uncertainty of the LiDAR. This paper introduces the concept of optimizing target shape to remove pose ambiguity for LiDAR point clouds. A target is designed to induce large gradients at edge points under rotation and translation relative to the LiDAR to ameliorate the quantization uncertainty associated with point cloud sparseness. Moreover, given a target shape, we present a method that leverages the target's geometry to estimate the target's vertices while globally estimating the pose. Both the simulation and the experimental results (verified by a motion capture system) confirm that by using the optimal shape and the global solver, we achieve centimeter error in translation and a few degrees in rotation even when a partially illuminated target is placed 30 meters away. All the implementations and datasets are available at https://github.com/UMich-BipedLab/optimal_shape_global_pose_estimation.

Efficient Anytime CLF Reactive Planning System for a Bipedal Robot on Undulating Terrain

Aug 18, 2021

Abstract:We propose and experimentally demonstrate a reactive planning system for bipedal robots on unexplored, challenging terrains. The system consists of a low-frequency planning thread (5 Hz) to find an asymptotically optimal path and a high-frequency reactive thread (300 Hz) to accommodate robot deviation. The planning thread includes: a multi-layer local map to compute traversability for the robot on the terrain; an anytime omnidirectional Control Lyapunov Function (CLF) for use with a Rapidly Exploring Random Tree Star (RRT*) that generates a vector field for specifying motion between nodes; a sub-goal finder when the final goal is outside of the current map; and a finite-state machine to handle high-level mission decisions. The system also includes a reactive thread to obviate the non-smooth motions that arise with traditional RRT* algorithms when performing path following. The reactive thread copes with robot deviation while eliminating non-smooth motions via a vector field (defined by a closed-loop feedback policy) that provides real-time control commands to the robot's gait controller as a function of instantaneous robot pose. The system is evaluated on various challenging outdoor terrains and cluttered indoor scenes in both simulation and experiment on Cassie Blue, a bipedal robot with 20 degrees of freedom. All implementations are coded in C++ with the Robot Operating System (ROS) and are available at https://github.com/UMich-BipedLab/CLF_reactive_planning_system.

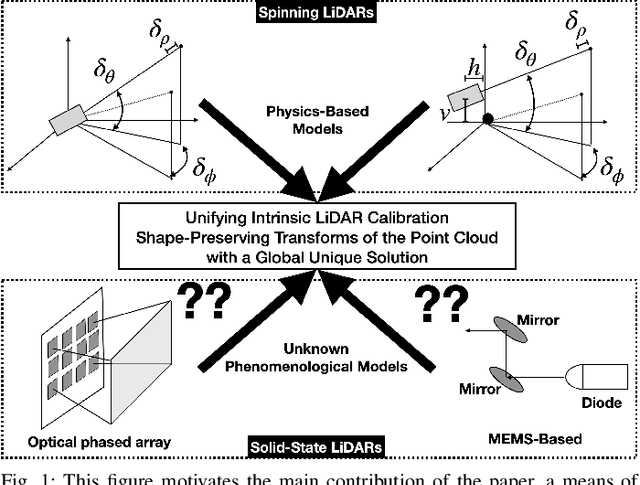

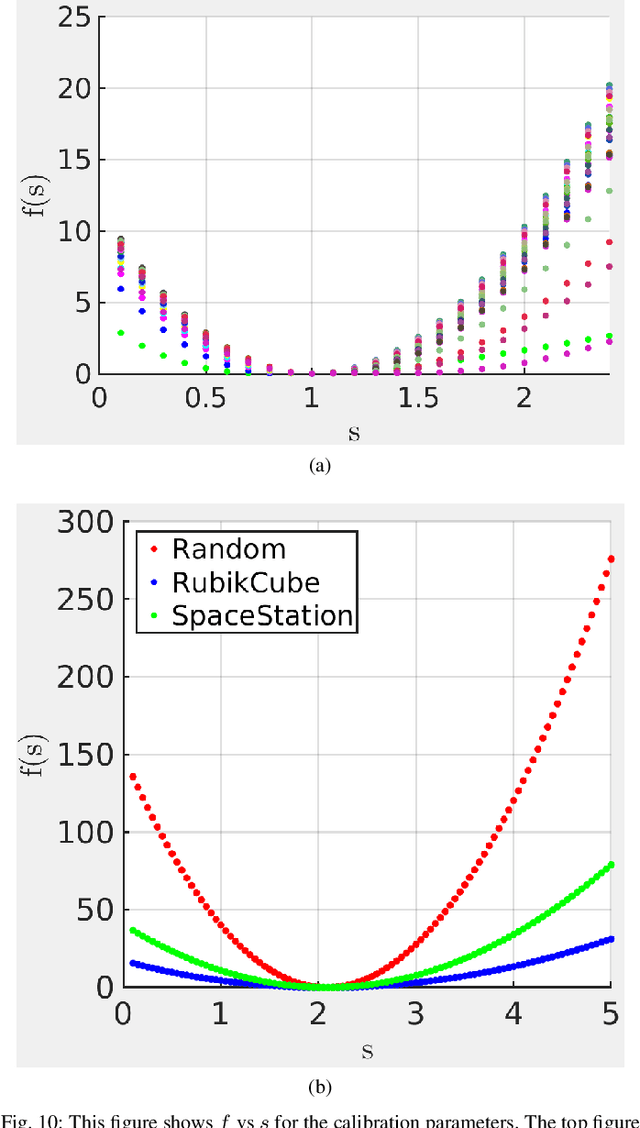

Global Unifying Intrinsic Calibration for Spinning and Solid-State LiDARs

Dec 06, 2020

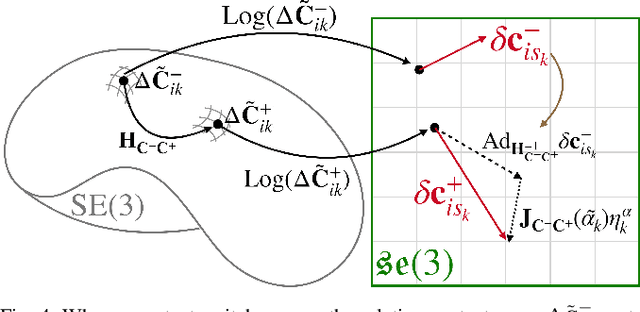

Abstract:Sensor calibration, which can be intrinsic or extrinsic, is an essential step to achieve the measurement accuracy required for modern perception and navigation systems deployed on autonomous robots. To date, intrinsic calibration models for spinning LiDARs have been hypothesized based on their physical mechanisms, resulting in anywhere from three to ten parameters to be estimated from data, while no phenomenological models have yet been proposed for solid-state LiDARs. Instead of going down that road, we propose to abstract away from the physics of a LiDAR type (spinning vs solid-state, for example), and focus on the spatial geometry of the point cloud generated by the sensor. By modeling the calibration parameters as an element of a special matrix Lie Group, we achieve a unifying view of calibration for different types of LiDARs. We further prove mathematically that the proposed model is well-constrained (has a unique answer) given four appropriately oriented targets. The proof provides a guideline for target positioning in the form of a tetrahedron. Moreover, an existing semidefinite programming global solver for SE(3) can be modified to efficiently compute the optimal calibration parameters. For solid-state LiDARs, we illustrate how the method works in simulation. For spinning LiDARs, we show with experimental data that the proposed matrix Lie Group model performs as well as physics-based models in terms of reducing the P2P distance, while being more robust to noise.

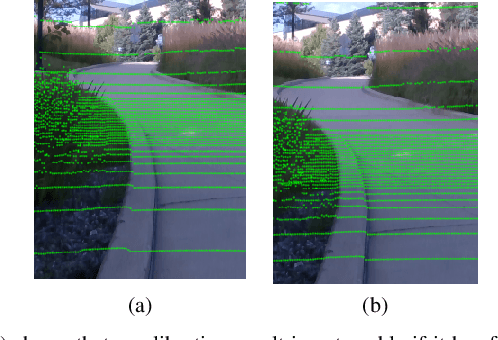

Improvements to Target-Based 3D LiDAR to Camera Calibration

Oct 07, 2019

Abstract:The homogeneous transformation between a LiDAR and monocular camera is required for sensor fusion tasks, such as SLAM. While determining such a transformation is not considered glamorous in any sense of the word, it is nonetheless crucial for many modern autonomous systems. Indeed, an error of a few degrees in rotation or a few percent in translation can lead to 20 cm translation errors at a distance of 5 m when overlaying a LiDAR image on a camera image. The biggest impediments to determining the transformation accurately are the relative sparsity of LiDAR point clouds and systematic errors in their distance measurements. This paper proposes (1) the use of targets of known dimension and geometry to ameliorate target pose estimation in the face of the quantization and systematic errors inherent in a LiDAR image of a target, and (2) a fitting method for the LiDAR to monocular camera transformation that fundamentally assumes the camera image data is the most accurate information in one's possession.
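To show what overlaying a LiDAR point on a camera image involves, the following sketch applies a 4x4 homogeneous transformation to a LiDAR point and projects the result with a standard pinhole model. It is an illustration under assumptions, not the paper's fitting method: the names `transform_point` and `project_to_image`, the intrinsic matrix values, and the convention that the camera looks along its +Z axis are all hypothetical, and lens distortion is ignored.

```python
def transform_point(H, p):
    """Apply a 4x4 homogeneous transform H (LiDAR to camera) to a 3D point p."""
    x, y, z = p
    return [H[r][0] * x + H[r][1] * y + H[r][2] * z + H[r][3]
            for r in range(3)]


def project_to_image(K, p_cam):
    """Pinhole projection of a camera-frame point using 3x3 intrinsics K.

    Returns pixel coordinates (u, v), or None if the point lies behind
    the camera. Lens distortion is not modeled.
    """
    X, Y, Z = p_cam
    if Z <= 0.0:
        return None
    u = (K[0][0] * X + K[0][1] * Y + K[0][2] * Z) / Z
    v = (K[1][1] * Y + K[1][2] * Z) / Z
    return (u, v)


# Hypothetical calibration: pure 10 cm offset along the camera x-axis.
H = [[1.0, 0.0, 0.0, 0.1],
     [0.0, 1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, 0.0],
     [0.0, 0.0, 0.0, 1.0]]
# Hypothetical intrinsics: 500 px focal length, principal point (320, 240).
K = [[500.0, 0.0, 320.0],
     [0.0, 500.0, 240.0],
     [0.0, 0.0, 1.0]]

pixel = project_to_image(K, transform_point(H, (0.0, 0.0, 5.0)))
print(pixel)
```

Because the pixel location depends directly on the entries of the transformation, small errors in its rotation or translation shift every projected LiDAR point, which is why the abstract stresses estimating it accurately.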

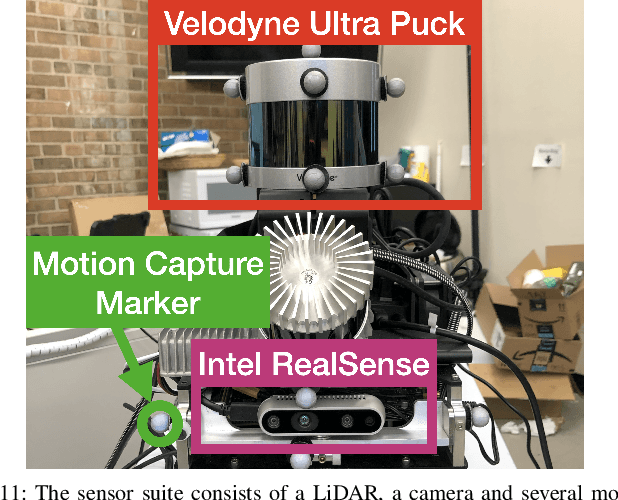

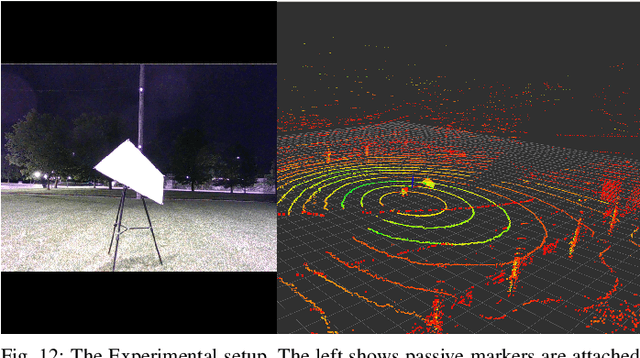

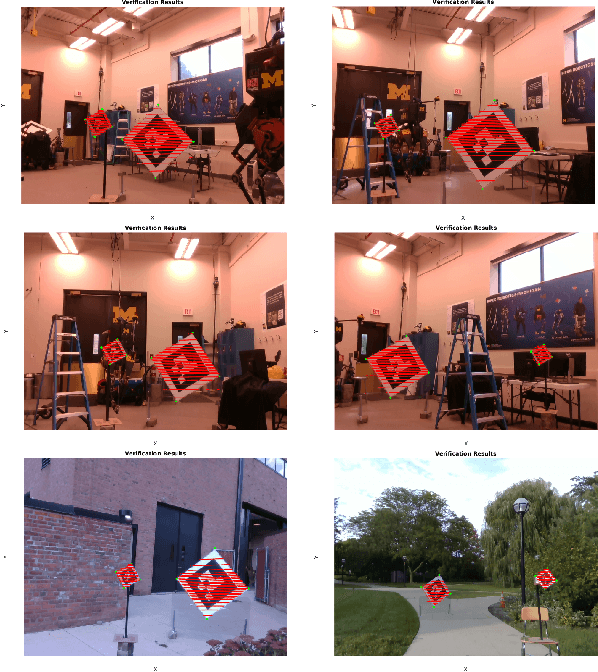

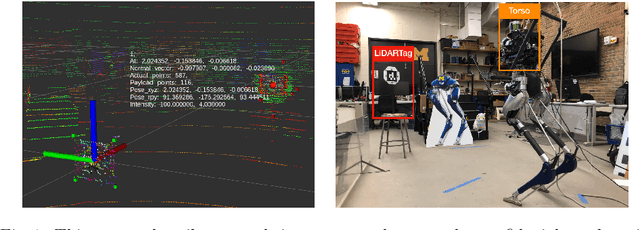

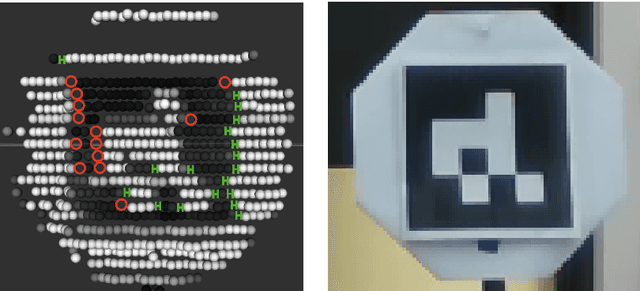

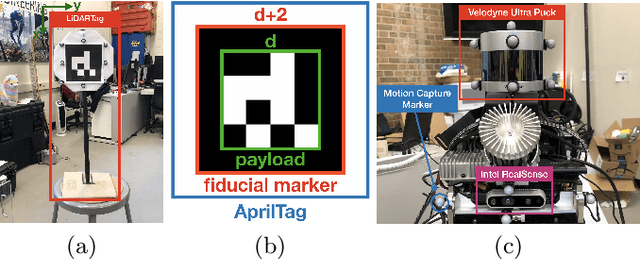

LiDARTag: A Real-Time Fiducial Tag using Point Clouds

Aug 23, 2019

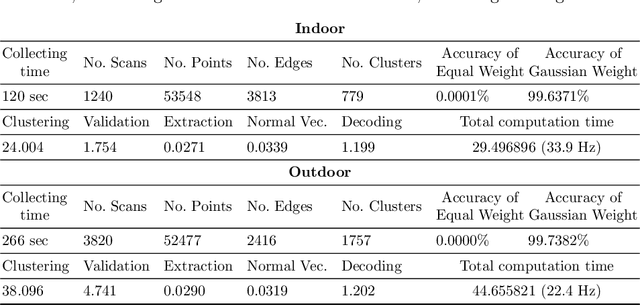

Abstract:Image-based fiducial markers are widely used in robotics and computer vision problems such as object tracking in cluttered or textureless environments, camera (and multi-sensor) calibration tasks, or vision-based simultaneous localization and mapping (SLAM). The state-of-the-art fiducial marker detection algorithms rely on consistency of the ambient lighting. This paper introduces LiDARTag, a novel fiducial tag design and detection algorithm suitable for light detection and ranging (LiDAR) point clouds. The proposed tag runs in real-time and can process data faster than the frequencies of currently available LiDAR sensors. Due to the nature of the LiDAR sensor, rapidly changing ambient lighting will not affect detection of a LiDARTag; hence, the proposed fiducial marker can operate in a completely dark environment. In addition, the LiDARTag nicely complements available visual fiducial markers as the tag design is compatible with available techniques, such as AprilTags, allowing for efficient multi-sensor fusion and calibration tasks. The experimental results, verified by a motion capture system, confirm the proposed technique can reliably provide a tag's pose and its unique ID code. All implementations are done in C++ and will be available soon at: https://github.com/brucejk/LiDARTag

Hybrid Contact Preintegration for Visual-Inertial-Contact State Estimation Using Factor Graphs

Oct 02, 2018

Abstract:The factor graph framework is a convenient modeling technique for robotic state estimation where states are represented as nodes, and measurements are modeled as factors. When designing a sensor fusion framework for legged robots, one often has access to visual, inertial, joint encoder, and contact sensors. While visual-inertial odometry has been studied extensively in this framework, the addition of a preintegrated contact factor for legged robots has only recently been proposed. This allowed for integration of encoder and contact measurements into existing factor graphs; however, new nodes had to be added to the graph every time contact was made or broken. In this work, to cope with the problem of switching contact frames, we propose a hybrid contact preintegration theory that allows contact information to be integrated through an arbitrary number of contact switches. The proposed hybrid modeling approach reduces the number of required variables in the nonlinear optimization problem by only requiring new states to be added alongside camera or selected keyframes. This method is evaluated using real experimental data collected from a Cassie-series robot where the trajectory of the robot produced by a motion capture system is used as a proxy for ground truth. The evaluation shows that inclusion of the proposed preintegrated hybrid contact factor alongside visual-inertial navigation systems improves estimation accuracy as well as robustness to vision failure, while its generalization makes it more accessible for legged platforms.

Feedback Control of a Cassie Bipedal Robot: Walking, Standing, and Riding a Segway

Sep 19, 2018

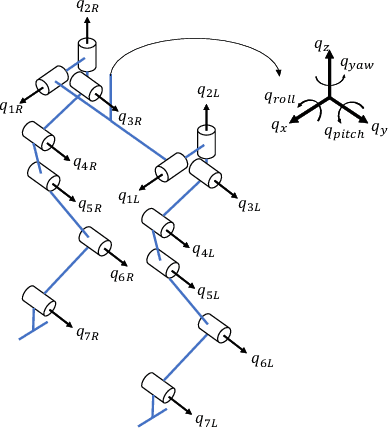

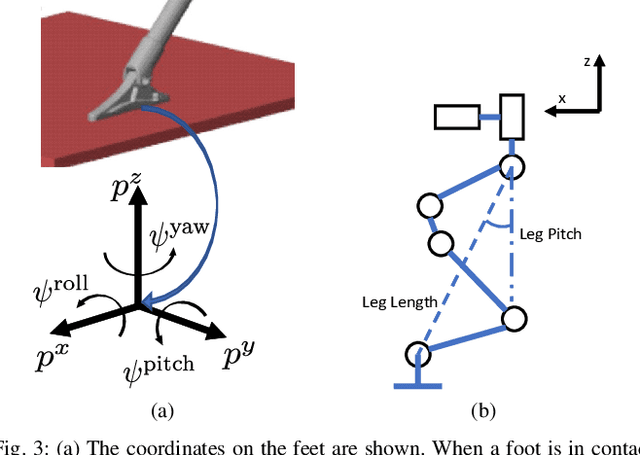

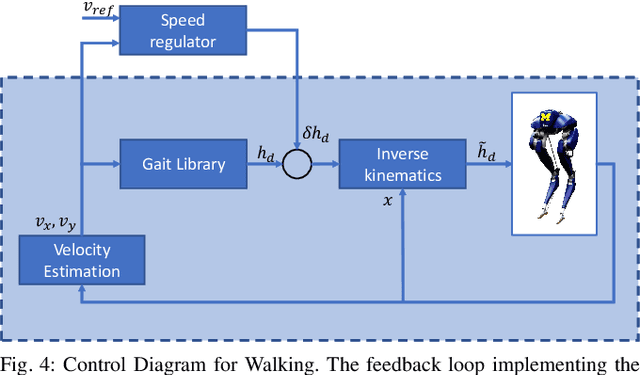

Abstract:The Cassie bipedal robot designed by Agility Robotics is providing academics a common platform for sharing and comparing algorithms for locomotion, perception, and navigation. This paper focuses on feedback control for standing and walking using the methods of virtual constraints and gait libraries. The designed controller was implemented six weeks after the robot arrived at the University of Michigan and allowed it to stand in place as well as walk over sidewalks, grass, snow, sand, and burning brush. The controller for standing also enables the robot to ride a Segway. A model of the Cassie robot has been placed on GitHub and the controller will also be made open source if the paper is accepted.
