Jingnan Liu

Multi-Agent Deep Research: Training Multi-Agent Systems with M-GRPO

Nov 18, 2025

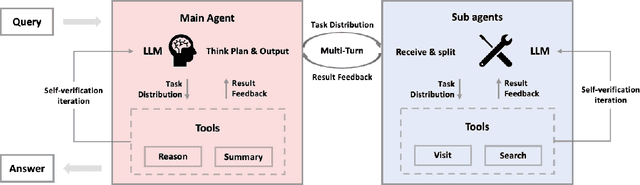

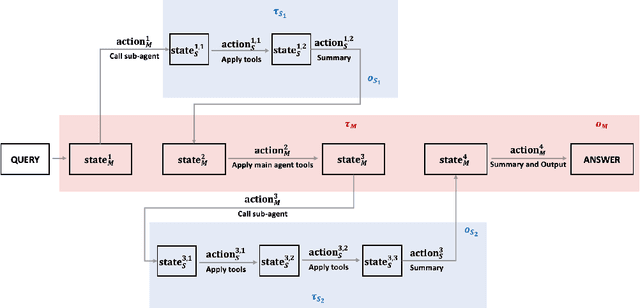

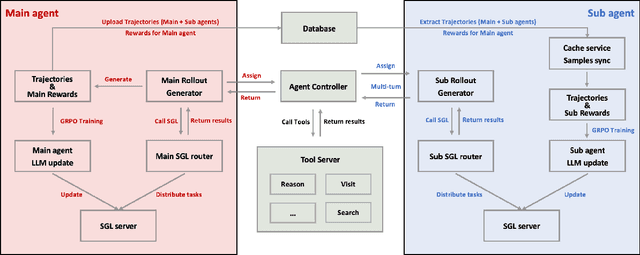

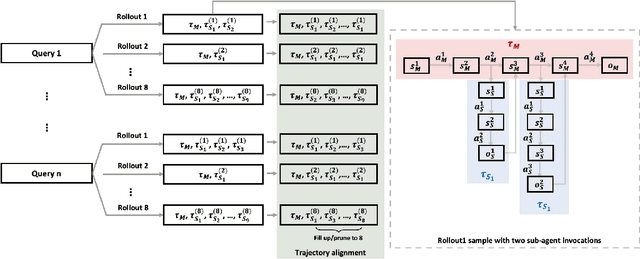

Abstract: Multi-agent systems perform well on general reasoning tasks. However, the lack of training in specialized areas hinders their accuracy. Current training methods train a unified large language model (LLM) for all agents in the system. This may limit performance because different agents face different underlying data distributions. Training multi-agent systems with distinct LLMs is therefore a natural next step. However, this approach introduces optimization challenges. For example, agents operate at different frequencies, rollouts involve varying numbers of sub-agent invocations, and agents are often deployed across separate servers, disrupting end-to-end gradient flow. To address these issues, we propose M-GRPO, a hierarchical extension of Group Relative Policy Optimization designed for vertical multi-agent systems with a main agent (planner) and multiple sub-agents (multi-turn tool executors). M-GRPO computes group-relative advantages for both main and sub-agents, maintaining hierarchical credit assignment. It also introduces a trajectory-alignment scheme that generates fixed-size batches despite variable sub-agent invocations. We deploy a decoupled training pipeline in which agents run on separate servers and exchange minimal statistics via a shared store. This enables scalable training without cross-server backpropagation. In experiments on real-world benchmarks (e.g., GAIA, XBench-DeepSearch, and WebWalkerQA), M-GRPO consistently outperforms both single-agent GRPO and multi-agent GRPO with frozen sub-agents, demonstrating improved stability and sample efficiency. These results show that aligning heterogeneous trajectories and decoupling optimization across specialized agents improves performance on tool-augmented reasoning tasks.
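The group-relative advantage mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state M-GRPO's exact formula, so the code below shows only the normalization commonly used in standard GRPO (reward minus group mean, divided by group standard deviation), applied separately to a main-agent group and a sub-agent group. The function name, the `eps` constant, and the reward values are all assumptions made for illustration.

```python
import math


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each rollout's reward against its own group.

    Standard GRPO-style normalization; NOT taken from the M-GRPO paper.
    """
    n = len(rewards)
    mean = sum(rewards) / n
    variance = sum((r - mean) ** 2 for r in rewards) / n
    std = math.sqrt(variance)
    # eps (assumed value) avoids division by zero when all rewards are equal.
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical rewards for four main-agent rollouts of the same query.
main_rewards = [1.0, 0.0, 0.0, 1.0]
main_adv = group_relative_advantages(main_rewards)

# Hypothetical rewards for three sub-agent rollouts; identical rewards
# carry no learning signal, so every advantage is zero.
sub_rewards = [0.5, 0.5, 0.5]
sub_adv = group_relative_advantages(sub_rewards)
```

Computing the statistics per group means only means and standard deviations need to be known to each agent, which is in the spirit of the "minimal statistics" exchange described above, though the actual protocol is not specified in the abstract.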

MedResearcher-R1: Expert-Level Medical Deep Researcher via A Knowledge-Informed Trajectory Synthesis Framework

Aug 20, 2025

Abstract: Recent developments in Large Language Model (LLM)-based agents have shown impressive capabilities spanning multiple domains, exemplified by deep research systems that demonstrate superior performance on complex information-seeking and synthesis tasks. While general-purpose deep research agents have shown impressive capabilities, they struggle significantly with medical domain challenges, as evidenced by leading proprietary systems achieving limited accuracy on complex medical benchmarks. The key limitations are: (1) the model lacks sufficient dense medical knowledge for clinical reasoning, and (2) the framework is constrained by the absence of specialized retrieval tools tailored for medical contexts. We present a medical deep research agent that addresses these challenges through two core innovations. First, we develop a novel data synthesis framework using medical knowledge graphs, extracting the longest chains from subgraphs around rare medical entities to generate complex multi-hop question-answer pairs. Second, we integrate a custom-built private medical retrieval engine alongside general-purpose tools, enabling accurate medical information synthesis. Our approach generates 2100+ diverse trajectories across 12 medical specialties, each averaging 4.2 tool interactions. Through a two-stage training paradigm combining supervised fine-tuning and online reinforcement learning with composite rewards, our MedResearcher-R1-32B model demonstrates exceptional performance, establishing new state-of-the-art results on medical benchmarks while maintaining competitive performance on general deep research tasks. Our work demonstrates that strategic domain-specific innovations in architecture, tool design, and training data construction can enable smaller open-source models to outperform much larger proprietary systems in specialized domains.

Sky-GVIO: an enhanced GNSS/INS/Vision navigation with FCN-based sky-segmentation in urban canyon

Apr 17, 2024

Abstract: Accurate, continuous, and reliable positioning is a critical component of achieving autonomous driving. However, in complex urban canyon environments, the vulnerability of a stand-alone sensor and non-line-of-sight (NLOS) signals caused by high buildings, trees, and elevated structures seriously affect positioning results. To address these challenges, a sky-view image segmentation algorithm based on a Fully Convolutional Network (FCN) is proposed for GNSS NLOS detection. Building upon this, a novel NLOS detection and mitigation algorithm (named S-NDM) is extended to a tightly coupled Global Navigation Satellite System (GNSS), Inertial Measurement Unit (IMU), and visual feature system, called Sky-GVIO, with the aim of achieving continuous and accurate positioning in urban canyon environments. Furthermore, the system supports both Single Point Positioning (SPP) and Real-Time Kinematic (RTK) modes to improve its versatility and resilience. In urban canyon environments, the positioning performance of the S-NDM algorithm proposed in this paper is evaluated under different tightly coupled SPP-related and RTK-related models. The results show that the Sky-GVIO system achieves meter-level accuracy in SPP mode and sub-decimeter precision with RTK, surpassing the performance of GNSS/INS/Vision frameworks without S-NDM. Additionally, the sky-view image dataset, including training and evaluation subsets, has been made publicly available at https://github.com/whuwangjr/sky-view-images.

FF-LINS: A Consistent Frame-to-Frame Solid-State-LiDAR-Inertial State Estimator

Jul 13, 2023

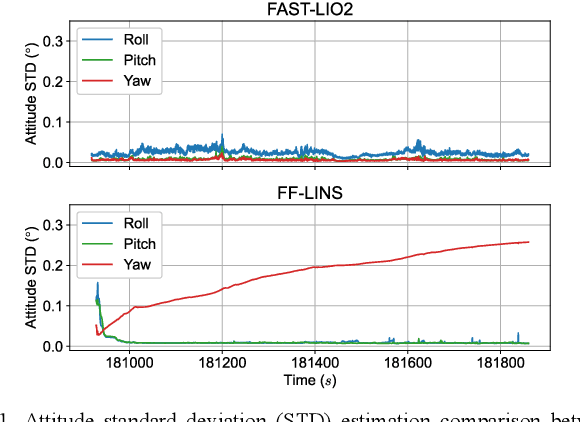

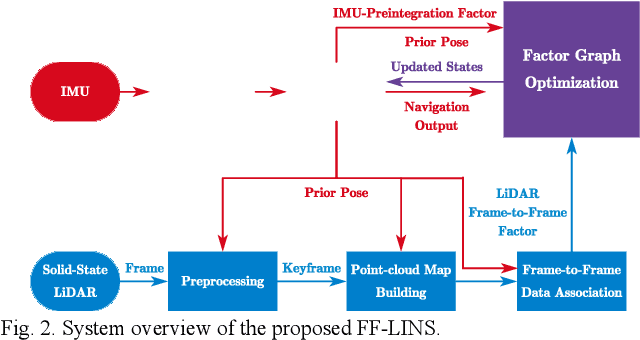

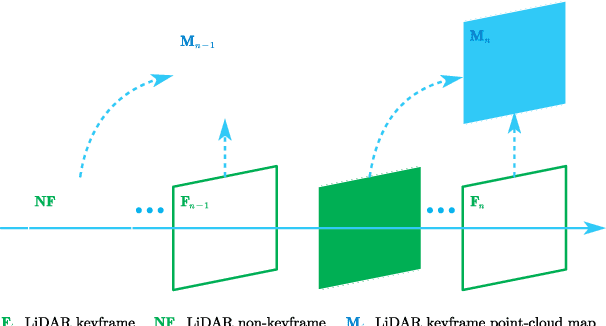

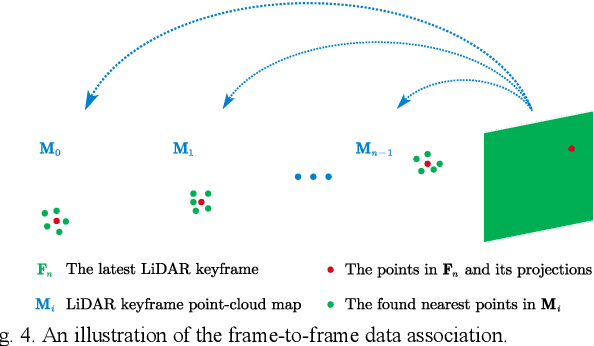

Abstract: Most existing LiDAR-inertial navigation systems are based on frame-to-map registration, leading to inconsistency in state estimation. The newest solid-state LiDARs with a non-repetitive scanning pattern make it possible to achieve a consistent LiDAR-inertial estimator by employing frame-to-frame data association. In this letter, we propose a robust and consistent frame-to-frame LiDAR-inertial navigation system (FF-LINS) for solid-state LiDARs. With INS-centric LiDAR frame processing, the keyframe point-cloud map is built using the accumulated point clouds to construct the frame-to-frame data association. The LiDAR frame-to-frame and the inertial measurement unit (IMU) preintegration measurements are tightly integrated using factor graph optimization, with online calibration of the LiDAR-IMU extrinsic and time-delay parameters. Experiments on public and private datasets demonstrate that the proposed FF-LINS achieves higher accuracy and robustness than state-of-the-art systems. In addition, the LiDAR-IMU extrinsic and time-delay parameters are estimated effectively, and the online calibration notably improves the pose accuracy. The proposed FF-LINS and the employed datasets are open-sourced on GitHub (https://github.com/i2Nav-WHU/FF-LINS).

PO-VINS: An Efficient Pose-Only LiDAR-Enhanced Visual-Inertial State Estimator

May 22, 2023

Abstract: The pose-only (PO) visual representation has been proven to be equivalent to the classical multiple-view geometry, while significantly improving computational efficiency. However, its applicability for real-world navigation in large-scale complex environments has not yet been demonstrated. In this study, we present an efficient pose-only LiDAR-enhanced visual-inertial navigation system (PO-VINS) to enhance the real-time performance of the state estimator. In the visual-inertial state estimator (VISE), we propose a pose-only visual-reprojection measurement model that only contains the inertial measurement unit (IMU) pose and extrinsic-parameter states. We further integrated the LiDAR-enhanced method to construct a pose-only LiDAR-depth measurement model. Real-world experiments were conducted in large-scale complex environments, demonstrating that the proposed PO-VISE and LiDAR-enhanced PO-VISE reduce computational complexity by more than 50% and over 20%, respectively. Additionally, the PO-VINS yields the same accuracy as conventional methods. These results indicate that the pose-only solution is efficient and applicable for real-time visual-inertial state estimation.

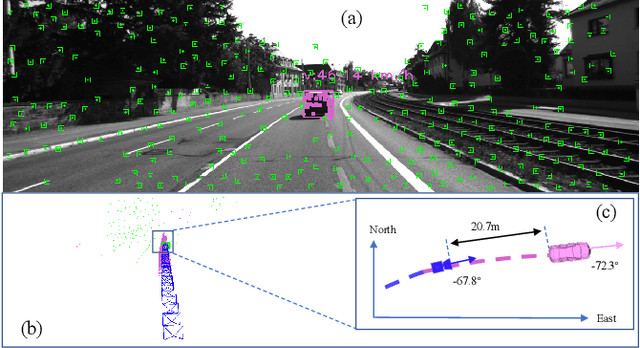

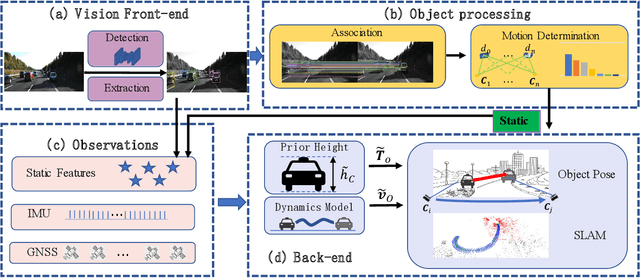

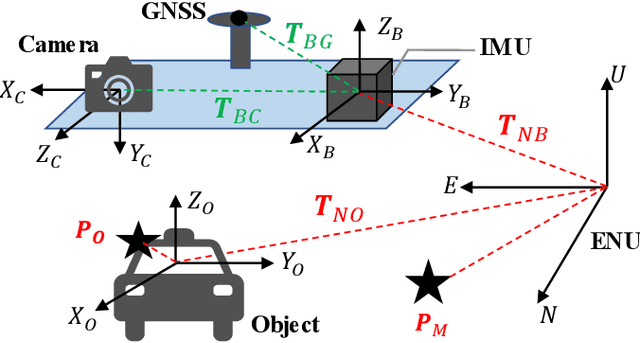

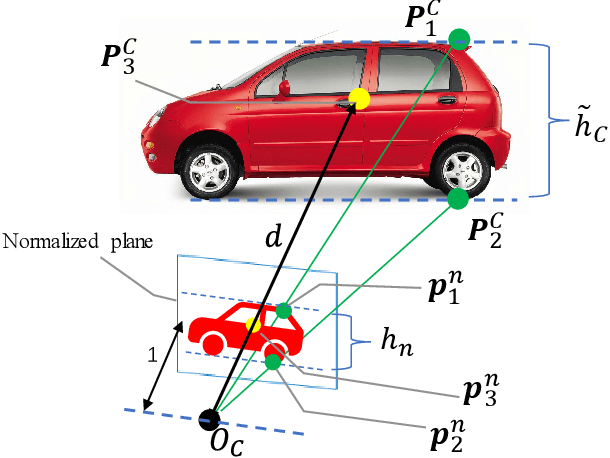

DynaVIG: Monocular Vision/INS/GNSS Integrated Navigation and Object Tracking for AGV in Dynamic Scenes

Nov 26, 2022

Abstract: Visual-Inertial Odometry (VIO) usually suffers from drift over long runs, and its accuracy is easily affected by dynamic objects. We propose DynaVIG, a navigation and object tracking system based on the integration of Monocular Vision, Inertial Navigation System (INS), and Global Navigation Satellite System (GNSS). Our system aims to provide an accurate global estimation of the navigation states and object poses for the automated ground vehicle (AGV) in dynamic scenes. Due to the scale ambiguity of the object, a prior height model is proposed to initialize the object pose, and the scale is continuously estimated with the aid of GNSS and INS. To precisely track objects with complex motion, we establish an accurate dynamics model according to the object's motion state. The multi-sensor observations are then optimized in a unified framework. Experiments on the KITTI dataset demonstrate that the multi-sensor fusion can effectively improve the accuracy of navigation and object tracking, compared to state-of-the-art methods. In addition, the proposed system achieves good estimation of objects that change speed or direction.

IC-GVINS: A Robust, Real-time, INS-Centric GNSS-Visual-Inertial Navigation System for Wheeled Robot

Apr 11, 2022

Abstract: In this letter, we present a robust, real-time, inertial navigation system (INS)-centric GNSS-visual-inertial navigation system (IC-GVINS) for wheeled robots, in which the precise INS is fully utilized in both the state estimation and visual process. To improve the system robustness, the INS information is employed during the whole keyframe-based visual process, with a strict outlier-culling strategy. GNSS is adopted to perform an accurate and convenient initialization of the IC-GVINS, and is further employed to achieve absolute positioning in large-scale environments. The IMU, visual, and GNSS measurements are tightly fused within the framework of factor graph optimization. Dedicated experiments were conducted to evaluate the robustness and accuracy of the IC-GVINS on a wheeled robot. The IC-GVINS demonstrates superior robustness in various visually degraded scenes with moving objects. Compared to state-of-the-art visual-inertial navigation systems, the proposed method yields improved robustness and accuracy in various environments. We open-source our code together with the dataset on GitHub.

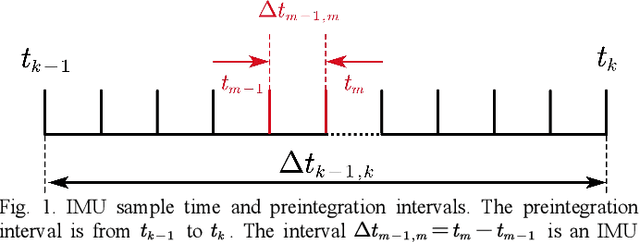

The Unified Mathematical Framework for IMU Preintegration in Inertial-Aided Navigation System

Dec 05, 2021

Abstract: This paper proposes a unified mathematical framework for inertial measurement unit (IMU) preintegration in inertial-aided navigation systems, covering different frames and different motion conditions. The navigation state is precisely discretized into three parts: local increment, global state, and global increment. The global increment can be calculated in different frames, such as the local geodetic navigation frame and the earth-centered-earth-fixed frame. The local increment, which is referred to as the IMU preintegration, can be calculated under different assumptions according to the motion of the agent and the grade of the IMU. Thus, the framework is more accurate and more convenient for online state estimation of inertial-integrated navigation systems in different environments.

OdoNet: Untethered Speed Aiding for Vehicle Navigation Without Hardware Wheeled Odometer

Sep 07, 2021

Abstract: The odometer has been proven to significantly improve the accuracy of Global Navigation Satellite System / Inertial Navigation System (GNSS/INS) integrated vehicle navigation in GNSS-challenged environments. However, the odometer is inaccessible in many applications, especially for aftermarket devices. To apply forward speed aiding without a hardware wheeled odometer, we propose OdoNet, an untethered one-dimensional Convolutional Neural Network (CNN)-based pseudo-odometer model learning from a single Inertial Measurement Unit (IMU), which can act as an alternative to the wheeled odometer. Dedicated experiments have been conducted to verify the feasibility and robustness of OdoNet. The results indicate that the IMU individuality, the vehicle loads, and the road conditions have little impact on the robustness and precision of OdoNet, while the IMU biases and the mounting angles may notably degrade it. Thus, a data-cleaning procedure is added to effectively mitigate the impacts of the IMU biases and the mounting angles. Compared to the process using only the non-holonomic constraint (NHC), the positioning error is reduced by around 68% after employing the pseudo-odometer, while the reduction is around 74% for the hardware wheeled odometer. In conclusion, the proposed OdoNet can be employed as an untethered pseudo-odometer for vehicle navigation, which can efficiently improve the accuracy and reliability of positioning in GNSS-denied environments.

Exploring the Accuracy Potential of IMU Preintegration in Factor Graph Optimization

Sep 07, 2021

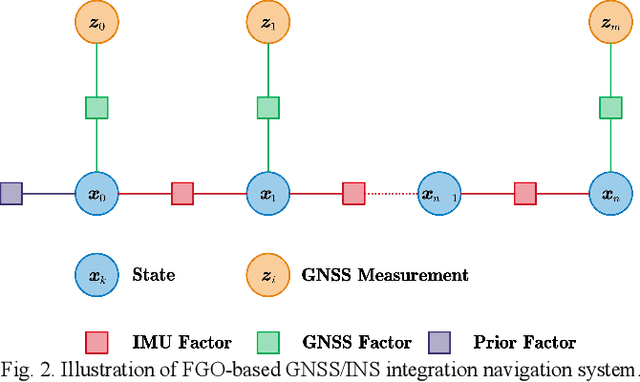

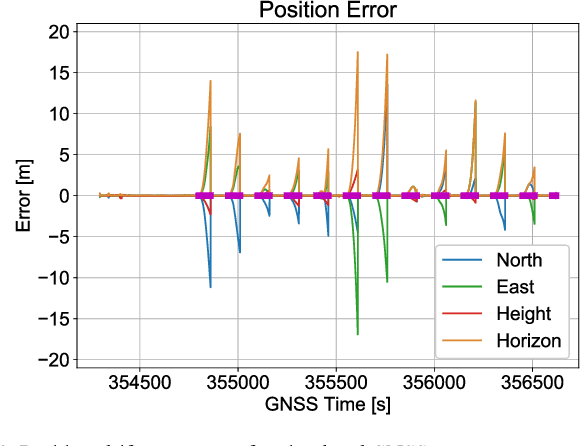

Abstract: Inertial measurement unit (IMU) preintegration is widely used in factor graph optimization (FGO), e.g., in visual-inertial navigation systems and global navigation satellite system/inertial navigation system (GNSS/INS) integration. However, most existing IMU preintegration models ignore the Earth's rotation and lack refined integration processes, and these limitations severely degrade the INS accuracy. In this study, we construct a refined IMU preintegration model that incorporates the Earth's rotation, and analytically compute the covariance and Jacobian matrix. To mitigate the impact caused by sensors other than the IMU in the evaluation system, FGO-based GNSS/INS integration is adopted to quantitatively evaluate the accuracy of the refined preintegration. Compared to a classic filtering-based GNSS/INS integration baseline, the employed FGO-based integration using the refined preintegration yields the same accuracy. In contrast, the existing rough preintegration yields significant accuracy degradation. The performance difference between the refined and rough preintegration models can exceed 200% for an industrial-grade MEMS module and 10% for a consumer-grade MEMS chip. Clearly, the Earth's rotation is the major factor to be considered in IMU preintegration in order to maintain the IMU precision, even for a consumer-grade IMU.
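To give a rough sense of why the Earth's rotation matters for preintegration, the sketch below converts the WGS-84 Earth rotation rate into degrees per hour and compares it with a gyroscope bias. The two bias values are hypothetical illustrations only and are not taken from the paper; the helper names are likewise assumptions.

```python
import math

# WGS-84 Earth rotation rate in rad/s.
OMEGA_E_RAD_S = 7.292115e-5


def rad_s_to_deg_h(rate_rad_s):
    """Convert an angular rate from rad/s to deg/h."""
    return math.degrees(rate_rad_s) * 3600.0


earth_rate_deg_h = rad_s_to_deg_h(OMEGA_E_RAD_S)  # about 15.04 deg/h


def rotation_to_bias_ratio(gyro_bias_deg_h):
    """Ratio of the Earth rotation rate to a given gyro bias (both in deg/h)."""
    return earth_rate_deg_h / gyro_bias_deg_h


# Hypothetical bias levels, chosen only for illustration.
ratio_better_gyro = rotation_to_bias_ratio(5.0)
ratio_worse_gyro = rotation_to_bias_ratio(100.0)
```

When the ratio is above 1, the unmodeled Earth rotation is larger than the sensor's own bias, which is consistent with the abstract's point that ignoring it degrades accuracy more for better-grade IMUs.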
