Jiayu Huang

Bayesian Entropy Neural Networks for Physics-Aware Prediction

Jul 01, 2024

Abstract:This paper addresses the need for deep learning models to integrate well-defined constraints into their outputs, driven by their application in surrogate models, learning with limited data and partial information, and scenarios requiring flexible model behavior to incorporate non-data sample information. We introduce Bayesian Entropy Neural Networks (BENN), a framework grounded in Maximum Entropy (MaxEnt) principles, designed to impose constraints on Bayesian Neural Network (BNN) predictions. BENN is capable of constraining not only the predicted values but also their derivatives and variances, ensuring a more robust and reliable model output. To achieve simultaneous uncertainty quantification and constraint satisfaction, we employ the method of multipliers approach. This allows for the concurrent estimation of neural network parameters and the Lagrangian multipliers associated with the constraints. Our experiments, spanning diverse applications such as beam deflection modeling and microstructure generation, demonstrate the effectiveness of BENN. The results highlight significant improvements over traditional BNNs and showcase competitive performance relative to contemporary constrained deep learning methods.

SwapTalk: Audio-Driven Talking Face Generation with One-Shot Customization in Latent Space

May 09, 2024

Abstract:Combining face swapping with lip synchronization technology offers a cost-effective solution for customized talking face generation. However, directly cascading existing models together tends to introduce significant interference between tasks and reduce video clarity because the interaction space is limited to the low-level semantic RGB space. To address this issue, we propose an innovative unified framework, SwapTalk, which accomplishes both face swapping and lip synchronization tasks in the same latent space. Referring to recent work on face generation, we choose the VQ-embedding space due to its excellent editability and fidelity performance. To enhance the framework's generalization capabilities for unseen identities, we incorporate identity loss during the training of the face swapping module. Additionally, we introduce expert discriminator supervision within the latent space during the training of the lip synchronization module to elevate synchronization quality. In the evaluation phase, previous studies primarily focused on the self-reconstruction of lip movements in synchronous audio-visual videos. To better approximate real-world applications, we expand the evaluation scope to asynchronous audio-video scenarios. Furthermore, we introduce a novel identity consistency metric to more comprehensively assess the identity consistency over time series in generated facial videos. Experimental results on the HDTF demonstrate that our method significantly surpasses existing techniques in video quality, lip synchronization accuracy, face swapping fidelity, and identity consistency. Our demo is available at http://swaptalk.cc.

Image-based Novel Fault Detection with Deep Learning Classifiers using Hierarchical Labels

Mar 26, 2024

Abstract:One important characteristic of modern fault classification systems is the ability to flag the system when faced with previously unseen fault types. This work considers the unknown fault detection capabilities of deep neural network-based fault classifiers. Specifically, we propose a methodology on how, when available, labels regarding the fault taxonomy can be used to increase unknown fault detection performance without sacrificing model performance. To achieve this, we propose to utilize soft label techniques to improve the state-of-the-art deep novel fault detection techniques during the training process and novel hierarchically consistent detection statistics for online novel fault detection. Finally, we demonstrated increased detection performance on novel fault detection in inspection images from the hot steel rolling process, with results well replicated across multiple scenarios and baseline detection methods.

Transfer learning with high-dimensional quantile regression

Nov 26, 2022

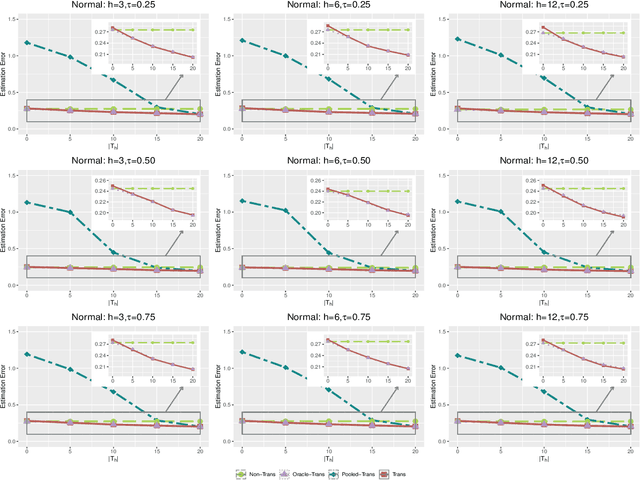

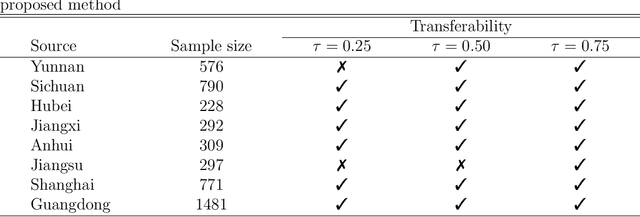

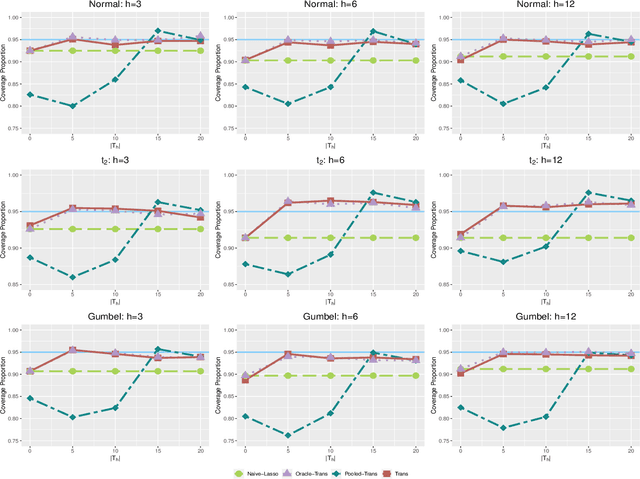

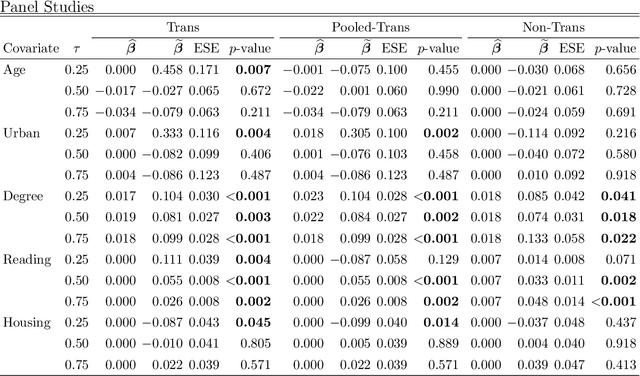

Abstract:Transfer learning has become an essential technique to exploit information from the source domain to boost performance of the target task. Despite their prevalence in high-dimensional data, heterogeneity and/or heavy tails tend to be discounted in current transfer learning approaches, which may undermine the resulting performance. We propose a transfer learning procedure in the framework of high-dimensional quantile regression models to accommodate the heterogeneity and heavy tails in the source and target domains. We establish error bounds of the transfer learning estimator based on delicately selected transferable source domains, showing that lower error bounds can be achieved for critical selection criterion and larger sample size of source tasks. We further propose valid confidence interval and hypothesis test procedures for individual components of the quantile regression coefficients by advocating a one-step debiased estimator of the transfer learning estimator, wherein the consistent variance estimation is again obtained via transfer learning. Simulation results demonstrate that the proposed method exhibits some favorable performances.

Posterior Regularized Bayesian Neural Network Incorporating Soft and Hard Knowledge Constraints

Oct 16, 2022

Abstract:Neural Networks (NNs) have been widely used in supervised learning due to their ability to model complex nonlinear patterns, often presented in high-dimensional data such as images and text. However, traditional NNs often lack the ability for uncertainty quantification. Bayesian NNs (BNNs) could help measure the uncertainty by considering the distributions of the NN model parameters. Besides, domain knowledge is commonly available and could improve the performance of BNNs if it can be appropriately incorporated. In this work, we propose a novel Posterior-Regularized Bayesian Neural Network (PR-BNN) model by incorporating different types of knowledge constraints, such as the soft and hard constraints, as a posterior regularization term. Furthermore, we propose to combine the augmented Lagrangian method and the existing BNN solvers for efficient inference. The experiments in simulation and two case studies about aviation landing prediction and solar energy output prediction demonstrate that the proposed model satisfies the knowledge constraints and improves performance over traditional BNNs without the constraints.
