Jianing Sun

A Comprehensive Scatter Correction Model for Micro-Focus Dual-Source Imaging Systems: Combining Ambient, Cross, and Forward Scatter

Mar 18, 2025

Abstract:Compared to single-source imaging systems, dual-source imaging systems equipped with two cross-distributed scanning beams significantly enhance temporal resolution and capture more comprehensive object scanning information. Nevertheless, the interaction between the two scanning beams introduces more complex scatter signals into the acquired projection data. Existing methods typically model these scatter signals as the sum of cross-scatter and forward scatter, with cross-scatter estimation limited to single scatter along primary paths. Through experimental measurements on our self-developed micro-focus dual-source imaging system, we observed that the peak ratio of hardware-induced ambient scatter to single-source projection intensity can exceed 60%, a factor often overlooked in conventional models. To address this limitation, we propose a more comprehensive model that decomposes the total scatter signal into three distinct components: ambient scatter, cross-scatter, and forward scatter. Furthermore, we introduce a cross-scatter kernel superposition (xSKS) module to enhance the accuracy of cross-scatter estimation by modeling both single and multiple cross-scatter events along non-primary paths. Additionally, we employ a fast object-adaptive scatter kernel superposition (FOSKS) module for efficient forward scatter estimation. In Monte Carlo (MC) simulation experiments performed on a custom-designed water-bone phantom, our model achieved a scatter-to-primary-weighted mean absolute percentage error (SPMAPE) of 1.32%, significantly lower than the 12.99% attained by the state-of-the-art method. Physical experiments further validate the superior performance of our model in correcting scatter artifacts.
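The general idea behind scatter kernel superposition can be illustrated with a deliberately simplified sketch. It is not the xSKS or FOSKS module from the abstract: it assumes a single stationary Gaussian kernel, a constant scatter amplitude, and periodic image boundaries, and the names `gaussian_kernel`, `estimate_scatter`, `correct_scatter`, `amplitude`, and `sigma` are hypothetical. Scatter is modeled as the amplitude-weighted primary convolved with the kernel, and the primary is recovered by fixed-point iteration.

```python
import numpy as np


def gaussian_kernel(shape, sigma):
    """Normalized Gaussian kernel centered at index (0, 0) for FFT convolution."""
    rows, cols = shape
    # Signed pixel offsets in FFT (wrap-around) order: 0, 1, ..., -2, -1
    y = np.fft.fftfreq(rows) * rows
    x = np.fft.fftfreq(cols) * cols
    yy, xx = np.meshgrid(y, x, indexing="ij")
    kernel = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return kernel / kernel.sum()


def estimate_scatter(primary, kernel, amplitude=0.2):
    """Scatter estimate: amplitude-weighted primary circularly convolved with the kernel.

    Assumption: a constant amplitude and one stationary kernel. Object-adaptive
    variants would vary both with the estimated object thickness.
    """
    weighted = amplitude * primary
    return np.real(np.fft.ifft2(np.fft.fft2(weighted) * np.fft.fft2(kernel)))


def correct_scatter(measured, kernel, amplitude=0.2, n_iter=20):
    """Fixed-point iteration: primary <- measured - scatter(primary)."""
    primary = measured.copy()
    for _ in range(n_iter):
        scatter = estimate_scatter(primary, kernel, amplitude)
        # Clip to keep the primary estimate physically non-negative
        primary = np.clip(measured - scatter, 0.0, None)
    return primary
```

Because the kernel sums to one, the iteration contracts whenever `amplitude` is below 1, so a few tens of iterations suffice in this toy setting.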

First performance of hybrid spectra CT reconstruction: a general Spectrum-Model-Aided Reconstruction Technique (SMART)

Oct 24, 2024

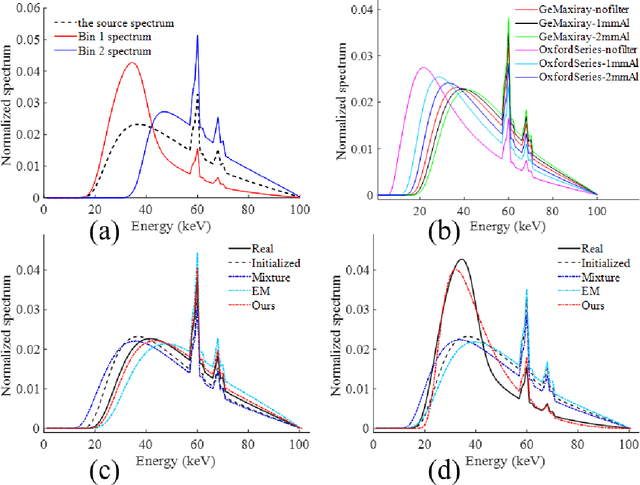

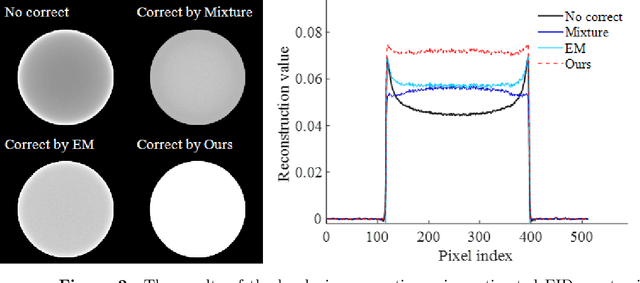

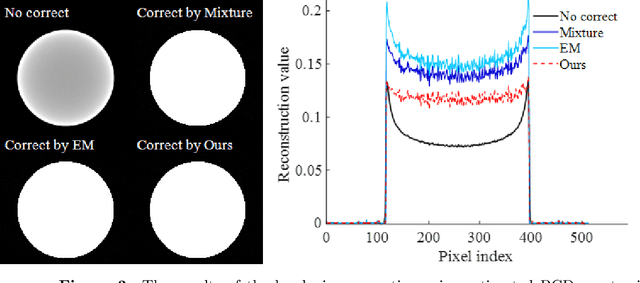

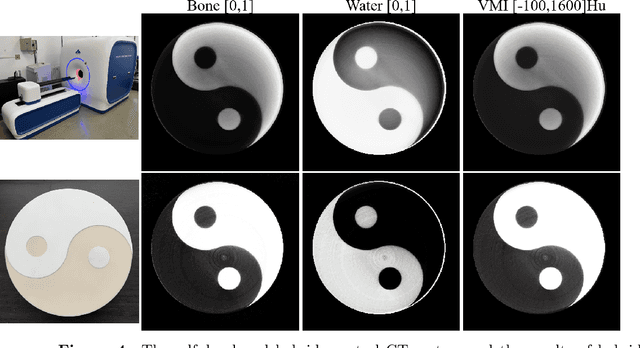

Abstract:Hybrid spectral CT integrates energy integrating detectors (EID) and photon counting detectors (PCD) into a single system, combining the large field-of-view advantage of EID with the high energy and spatial resolution of PCD. This represents a new research direction in spectral CT imaging. However, the different imaging principles and inconsistent geometric paths of the two detectors make it difficult to reconstruct images using data from hybrid detectors. In addition, the quality of images reconstructed with spectral information is affected by the accuracy of spectral estimation and by scattered photons. In this work, we first propose a general hybrid spectral reconstruction method that takes into account both the spectral CT imaging principles of the two different detectors and the influence of scattered photons in the forward process modelling. Furthermore, we apply volume fraction constraints to the results reconstructed from the two detector data. By alternately solving the spectral estimation and the spectral image reconstruction with the ADMM method, the estimated spectra and the reconstructed images reinforce each other, thus improving the accuracy of the spectral estimation and the quality of the reconstructed images. The proposed method is the first to achieve hybrid spectral CT reconstruction for both detectors, allowing simultaneous spectrum recovery and image reconstruction from hybrid spectral data containing scattering. In addition, the method is also applicable to spectral CT imaging using a single type of detector. We validated the effectiveness of the proposed method through numerical experiments and successfully performed the first hybrid spectral CT reconstruction experiment on our self-developed hybrid spectral CT system.

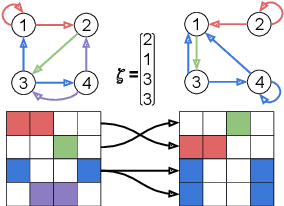

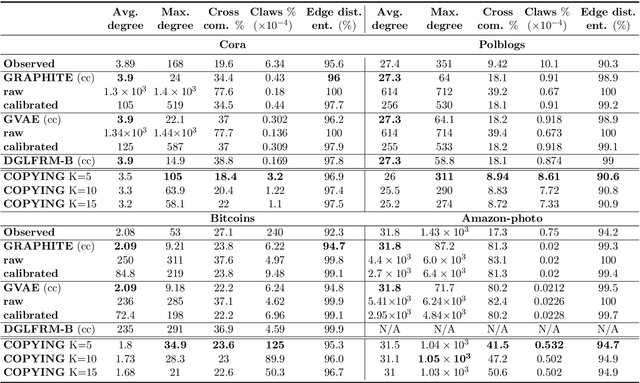

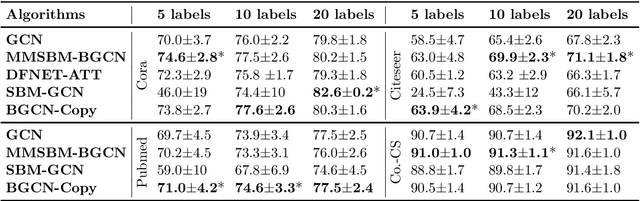

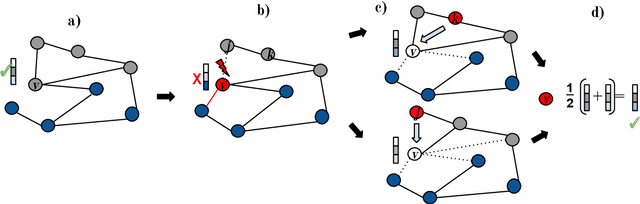

Node Copying: A Random Graph Model for Effective Graph Sampling

Aug 04, 2022

Abstract:There has been an increased interest in applying machine learning techniques to relational structured data based on an observed graph. Often, this graph is not fully representative of the true relationship amongst nodes. In these settings, building a generative model conditioned on the observed graph allows the graph uncertainty to be taken into account. Various existing techniques either rely on restrictive assumptions, fail to preserve topological properties within the samples, or are prohibitively expensive for larger graphs. In this work, we introduce the node copying model for constructing a distribution over graphs. Sampling of a random graph is carried out by replacing each node's neighbors by those of a randomly sampled similar node. The sampled graphs preserve key characteristics of the graph structure without explicitly targeting them. Additionally, sampling from this model is extremely simple and scales linearly with the number of nodes. We show the usefulness of the copying model in three tasks. First, in node classification, a Bayesian formulation based on node copying achieves higher accuracy in sparse data settings. Second, we employ our proposed model to mitigate the effect of adversarial attacks on the graph topology. Last, incorporation of the model in a recommendation system setting improves recall over state-of-the-art methods.
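The sampling step described in the abstract, replacing each node's neighbors with those of a randomly sampled similar node, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `sample_node_copying` and the `similar` mapping (a precomputed list of candidate similar nodes per node) are hypothetical, the choice among candidates is uniform, and the result is kept as a directed adjacency map.

```python
import random


def sample_node_copying(adj, similar, seed=None):
    """Draw one graph sample by copying neighborhoods.

    adj:     dict mapping each node to the set of its neighbors.
    similar: dict mapping each node to a non-empty list of candidate
             "similar" nodes (hypothetical input; how similarity is
             defined is not specified here).
    Returns a new dict mapping each node to its copied neighbor set.
    """
    rng = random.Random(seed)
    sampled = {}
    for node in adj:
        # Pick the node whose neighborhood will be copied (uniform choice is an assumption)
        source = rng.choice(similar[node])
        # Copy the source's neighbors, dropping a possible self-loop
        sampled[node] = set(adj[source]) - {node}
    return sampled
```

Each node triggers one random choice, so the number of sampling decisions grows linearly with the number of nodes, in line with the scaling stated in the abstract; the cost of copying each neighbor set depends on node degree.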

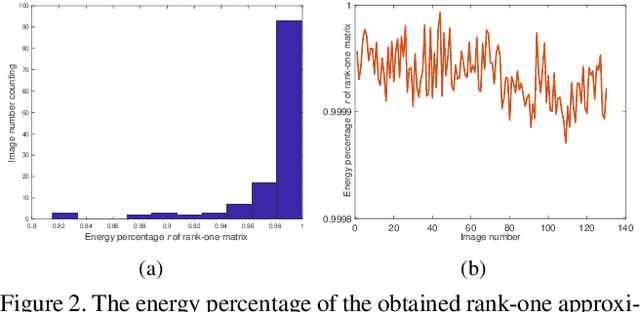

Rank-One Prior: Toward Real-Time Scene Recovery

Apr 07, 2021

Abstract:Scene recovery is a fundamental imaging task for several practical applications, e.g., video surveillance and autonomous vehicles. To improve visual quality under different weather/imaging conditions, we propose a real-time light correction method to recover degraded scenes in the cases of sandstorms, underwater imaging, and haze. The heart of our work is an intensity projection strategy to estimate the transmission. This strategy is motivated by a straightforward rank-one transmission prior. The complexity of transmission estimation is $O(N)$, where $N$ is the size of a single image, so the scene can be recovered in real time. Comprehensive experiments on different types of weather/imaging conditions illustrate that our method outperforms several state-of-the-art imaging methods in terms of efficiency and robustness.
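Once a transmission map is available, the recovery step is a per-pixel operation. The sketch below assumes the widely used atmospheric scattering model $I = J\,t + A\,(1 - t)$, which the abstract does not state explicitly, and it does not reproduce the rank-one intensity projection used to estimate the transmission. The names `recover_scene`, `ambient_light`, and `t_min` are hypothetical.

```python
import numpy as np


def recover_scene(image, transmission, ambient_light, t_min=0.1):
    """Invert I = J * t + A * (1 - t) per pixel to recover J.

    image:         float array of shape (H, W, 3), the degraded observation I.
    transmission:  float array of shape (H, W), the estimated transmission t.
    ambient_light: float array of shape (3,), the ambient light A.
    t_min:         lower bound on t to avoid amplifying noise (assumed value).
    """
    # Clamp the transmission and add a channel axis for broadcasting
    t = np.clip(transmission, t_min, 1.0)[..., np.newaxis]
    return (image - ambient_light) / t + ambient_light
```

Each output pixel uses a fixed number of arithmetic operations, so this step is linear in the number of pixels.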

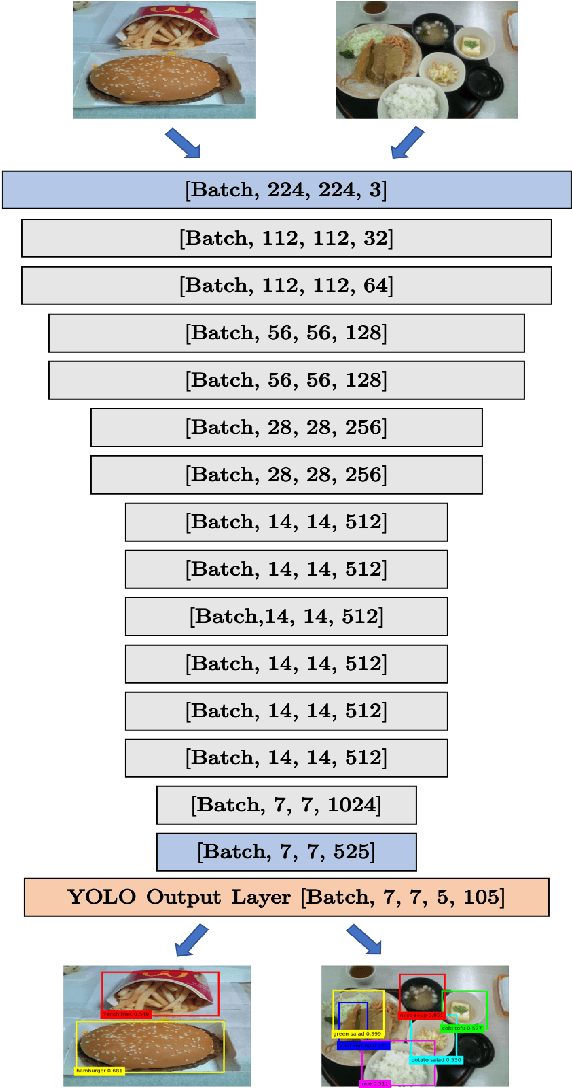

FoodTracker: A Real-time Food Detection Mobile Application by Deep Convolutional Neural Networks

Sep 16, 2019

Abstract:We present a mobile application that recognizes the food items of a multi-object meal from a single image in real time and then returns the nutrition facts with components and approximate amounts. Our work is organized in two parts. First, we build a deep convolutional neural network merged with YOLO, a state-of-the-art detection strategy, to achieve simultaneous multi-object recognition and localization with nearly 80% mean average precision. Second, we adapt our model into a mobile application with an extended function for nutrition analysis. After inferring and decoding the model output on the app side, we present detection results that include bounding box position and class label in either real-time or local mode. Our deep learning model is well suited to mobile devices, with negligible inference time and small memory requirements.
