Jiancheng Li

Forestpest-YOLO: A High-Performance Detection Framework for Small Forestry Pests

Oct 01, 2025

Abstract: Detecting agricultural pests in complex forestry environments using remote sensing imagery is fundamental for ecological preservation, yet it is severely hampered by practical challenges. Targets are often minuscule, heavily occluded, and visually similar to the cluttered background, causing conventional object detection models to falter due to the loss of fine-grained features and an inability to handle extreme data imbalance. To overcome these obstacles, this paper introduces Forestpest-YOLO, a detection framework meticulously optimized for the nuances of forestry remote sensing. Building upon the YOLOv8 architecture, our framework introduces a synergistic trio of innovations. We first integrate a lossless downsampling module, SPD-Conv, to ensure that critical high-resolution details of small targets are preserved throughout the network. This is complemented by a novel cross-stage feature fusion block, CSPOK, which dynamically enhances multi-scale feature representation while suppressing background noise. Finally, we employ VarifocalLoss to refine the training objective, compelling the model to focus on high-quality and hard-to-classify samples. Extensive experiments on our challenging, self-constructed ForestPest dataset demonstrate that Forestpest-YOLO achieves state-of-the-art performance, showing marked improvements in detecting small, occluded pests and significantly outperforming established baseline models.
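As a rough illustration of what "lossless downsampling" can mean, the sketch below shows a space-to-depth rearrangement, the operation the name SPD-Conv refers to. It is a minimal NumPy sketch under stated assumptions, not the Forestpest-YOLO implementation: the function names `space_to_depth` and `depth_to_space` and the default `scale` of 2 are hypothetical, and the non-strided convolution that would normally follow the rearrangement is omitted.

```python
import numpy as np

def space_to_depth(x, scale=2):
    """Rearrange a (C, H, W) array into (C * scale**2, H // scale, W // scale).

    Every input value is kept; spatial resolution is traded for channels.
    """
    c, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("height and width must be divisible by scale")
    # Split each spatial axis into (block index, offset within block).
    x = x.reshape(c, h // scale, scale, w // scale, scale)
    # Move the two within-block offsets in front of the channel axis.
    x = x.transpose(2, 4, 0, 1, 3)
    return x.reshape(c * scale * scale, h // scale, w // scale)

def depth_to_space(y, scale=2):
    """Inverse of space_to_depth, showing that no values were discarded."""
    c2, h2, w2 = y.shape
    c = c2 // (scale * scale)
    y = y.reshape(scale, scale, c, h2, w2)
    # Restore the (channel, block row, row offset, block col, col offset) order.
    y = y.transpose(2, 3, 0, 4, 1)
    return y.reshape(c, h2 * scale, w2 * scale)

if __name__ == "__main__":
    image = np.arange(2 * 4 * 6).reshape(2, 4, 6)
    packed = space_to_depth(image)
    print(packed.shape)
    print(np.array_equal(depth_to_space(packed), image))
```

Unlike a strided convolution or pooling layer, this rearrangement can be inverted exactly, which is why small-target detail survives the reduction in spatial size.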

URS-NeRF: Unordered Rolling Shutter Bundle Adjustment for Neural Radiance Fields

Mar 25, 2024

Abstract: We propose a novel rolling shutter bundle adjustment method for neural radiance fields (NeRF) that uses unordered rolling shutter (RS) images to obtain an implicit 3D representation. Existing NeRF methods suffer from low-quality images and inaccurate initial camera poses caused by the RS effect, whereas the previous method that incorporates RS into NeRF requires strictly sequential input data, which limits its applicability. In contrast, our method recovers the physical formation of RS images by estimating camera poses and velocities, thereby removing the constraint of sequential input. Moreover, we adopt a coarse-to-fine training strategy in which the RS epipolar constraints between pairwise frames in the scene graph are used to detect camera poses that fall into local minima. Poses detected as outliers are corrected by interpolation from neighboring poses. The experimental results validate the effectiveness of our method over state-of-the-art works and demonstrate that the reconstruction of 3D representations is not constrained by the requirement of video sequence input.
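To make "estimating camera poses and velocities" more concrete, the sketch below shows one common way to model a rolling shutter frame: each image row is captured slightly later than the previous one, so its pose is the frame's starting pose advanced by a constant velocity. This is an illustrative sketch only and may differ from the paper's formulation. The function names, the constant-velocity assumption, the linear row-time model, and the convention that the rotation increment is applied on the right of `R0` are all assumptions.

```python
import numpy as np

def rodrigues(rotvec):
    """Convert an axis-angle vector into a 3x3 rotation matrix."""
    theta = np.linalg.norm(rotvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rotvec / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def row_pose(R0, t0, omega, v, row, num_rows, readout_time):
    """Pose of a single image row under an assumed constant-velocity model.

    R0, t0       : rotation and translation at the first row
    omega, v     : angular and linear velocity (assumed constant per frame)
    readout_time : time needed to read out all rows of one frame
    """
    # Assumed linear row timing: row r is exposed r / num_rows of the way in.
    tau = readout_time * row / num_rows
    R = R0 @ rodrigues(np.asarray(omega, dtype=float) * tau)
    t = np.asarray(t0, dtype=float) + np.asarray(v, dtype=float) * tau
    return R, t

if __name__ == "__main__":
    R, t = row_pose(np.eye(3), np.zeros(3),
                    omega=[0.0, 0.0, 0.2], v=[1.0, 0.0, 0.0],
                    row=240, num_rows=480, readout_time=0.03)
    print(R)
    print(t)
```

Because each frame carries its own pose and velocity in this model, no neighboring frame is needed to describe the motion during readout, which is consistent with the abstract's point about unordered input.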

MAMDR: A Model Agnostic Learning Method for Multi-Domain Recommendation

Mar 22, 2022

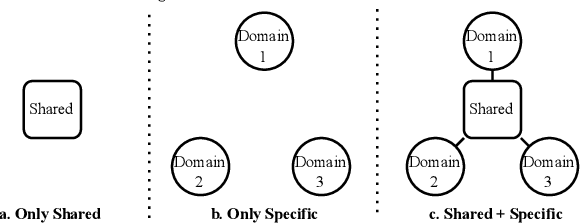

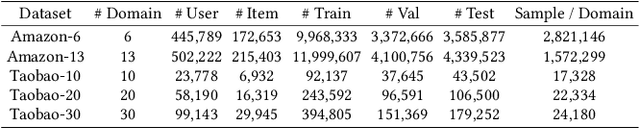

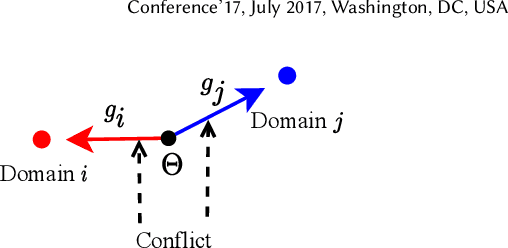

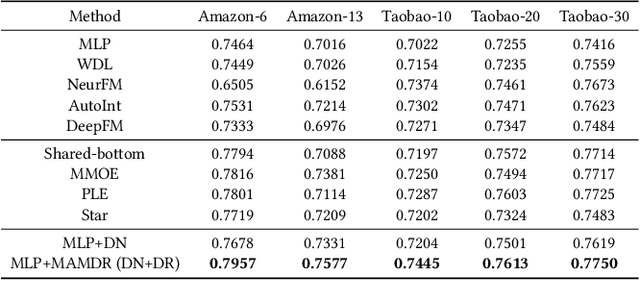

Abstract: Large-scale e-commerce platforms in the real world usually contain various recommendation scenarios (domains) to meet the demands of diverse customer groups. Multi-Domain Recommendation (MDR), which aims to jointly improve recommendations on all domains, has attracted increasing attention from practitioners and researchers. Existing MDR methods often employ a shared structure to leverage reusable features for all domains and several specific parts to capture domain-specific information. However, data from different domains may conflict with each other and cause shared parameters to stay at a compromised position on the optimization landscape. This could degrade the overall performance. Although the specific parameters are learned separately for each domain, they can easily overfit on data-sparse domains. Furthermore, data distribution differs across domains, making it challenging to develop a general model that can be applied to all circumstances. To address these problems, we propose a novel model-agnostic learning method, namely MAMDR, for multi-domain recommendation. Specifically, we first propose a Domain Negotiation (DN) strategy to alleviate the conflict between domains and learn better shared parameters. Then, we develop a Domain Regularization (DR) scheme to improve the generalization ability of specific parameters by learning from other domains. Finally, we integrate these components into a unified framework and present MAMDR, which can be applied to any model structure to perform multi-domain recommendation. Extensive experiments on various real-world datasets and online applications demonstrate both the effectiveness and generalizability of MAMDR.
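The "compromised position" of shared parameters can be seen in a toy setting. The sketch below is not MAMDR and does not implement Domain Negotiation or Domain Regularization; it only illustrates the problem the abstract describes, using two hypothetical one-dimensional domain losses whose optima disagree.

```python
def loss_domain_a(w):
    """Hypothetical loss for domain A, minimized at w = +1."""
    return (w - 1.0) ** 2

def loss_domain_b(w):
    """Hypothetical loss for domain B, minimized at w = -1."""
    return (w + 1.0) ** 2

def grad_domain_a(w):
    return 2.0 * (w - 1.0)

def grad_domain_b(w):
    return 2.0 * (w + 1.0)

def train_shared(w=0.7, lr=0.1, steps=200):
    """Plain gradient descent on the summed loss of both domains."""
    for _ in range(steps):
        # The two gradients pull in opposite directions between -1 and +1.
        w -= lr * (grad_domain_a(w) + grad_domain_b(w))
    return w

if __name__ == "__main__":
    w = train_shared()
    print(w, loss_domain_a(w), loss_domain_b(w))
```

Joint training settles midway between the two optima, where neither domain reaches the zero loss it could attain on its own. This is the kind of conflict that a strategy for learning better shared parameters has to address.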

A General Method For Automatic Discovery of Powerful Interactions In Click-Through Rate Prediction

May 21, 2021

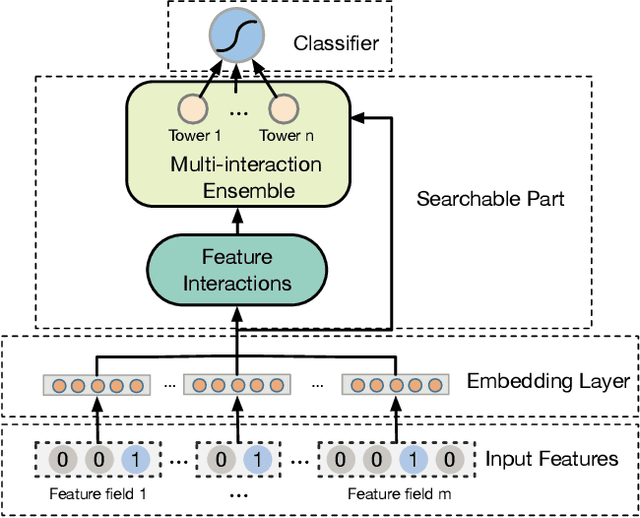

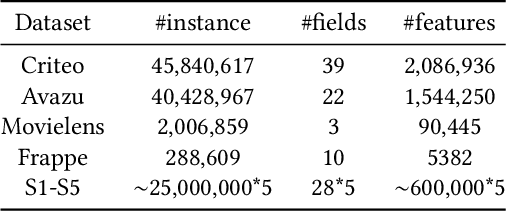

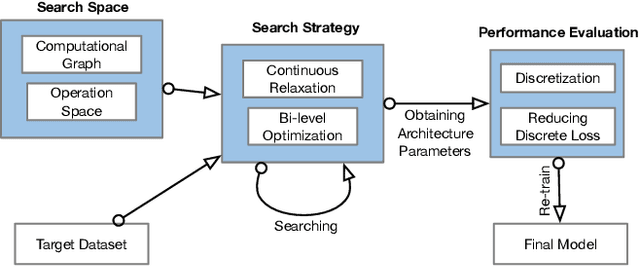

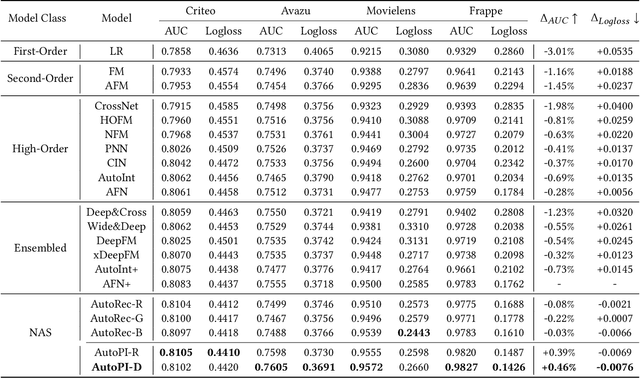

Abstract: Modeling powerful interactions is a critical challenge in click-through rate (CTR) prediction, which is one of the most typical machine learning tasks in personalized advertising and recommender systems. Although developing hand-crafted interactions is effective for a small number of datasets, it generally requires laborious and tedious architecture engineering for extensive scenarios. In recent years, several neural architecture search (NAS) methods have been proposed for designing interactions automatically. However, existing methods only explore limited types and connections of operators for interaction generation, leading to low generalization ability. To address these problems, we propose a more general automated method for building powerful interactions named AutoPI. The main contributions of this paper are as follows: AutoPI adopts a more general search space in which the computational graph is generalized from existing network connections, and the interactive operators in the edges of the graph are extracted from representative hand-crafted works. It allows searching for various powerful feature interactions to produce higher AUC and lower Logloss in a wide variety of applications. In addition, AutoPI uses a gradient-based search strategy for exploration at a low computational cost. Experimentally, we evaluate AutoPI on a diverse suite of benchmark datasets, demonstrating the generalizability and efficiency of AutoPI over hand-crafted architectures and state-of-the-art NAS algorithms.
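One common way to make an operator choice searchable by gradients is a continuous relaxation in which each graph edge outputs a softmax-weighted mixture of candidate operators. The sketch below illustrates that general idea only; whether AutoPI uses exactly this relaxation is an assumption, and the three candidate operators, the names `CANDIDATE_OPS`, `mixed_interaction`, and `selected_op`, and the architecture weights `alpha` are hypothetical placeholders, not the operator set from the paper.

```python
import numpy as np

# Hypothetical candidate interaction operators for one edge of the graph.
CANDIDATE_OPS = {
    "sum": lambda a, b: a + b,
    "hadamard": lambda a, b: a * b,
    "max": lambda a, b: np.maximum(a, b),
}

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - np.max(z))
    return e / e.sum()

def mixed_interaction(a, b, alpha):
    """Softmax-weighted mixture of all candidate operators on one edge."""
    weights = softmax(alpha)
    outputs = [op(a, b) for op in CANDIDATE_OPS.values()]
    return sum(w * o for w, o in zip(weights, outputs))

def selected_op(alpha):
    """After search, keep the operator with the largest architecture weight."""
    return list(CANDIDATE_OPS)[int(np.argmax(alpha))]

if __name__ == "__main__":
    a = np.array([1.0, 2.0])
    b = np.array([3.0, 4.0])
    print(mixed_interaction(a, b, alpha=np.zeros(3)))
    print(selected_op(np.array([0.1, 2.5, -1.0])))
```

Because the mixture is differentiable with respect to `alpha`, the operator choice can be trained alongside the model weights instead of evaluating each candidate architecture separately, which is where the low search cost of gradient-based approaches usually comes from.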

MMFashion: An Open-Source Toolbox for Visual Fashion Analysis

May 19, 2020

Abstract: We present MMFashion, a comprehensive, flexible and user-friendly open-source visual fashion analysis toolbox based on PyTorch. This toolbox supports a wide spectrum of fashion analysis tasks, including Fashion Attribute Prediction, Fashion Recognition and Retrieval, Fashion Landmark Detection, Fashion Parsing and Segmentation, and Fashion Compatibility and Recommendation. It covers almost all the mainstream tasks in the fashion analysis community. MMFashion has several appealing properties. First, MMFashion follows the principle of modular design. The framework is decomposed into different components so that it is easily extensible with diverse customized modules. In addition, detailed documentation, demo scripts and off-the-shelf models are available, which ease the burden on non-expert users who want to leverage recent advances in deep learning-based fashion analysis. Our proposed MMFashion is currently the most complete platform for visual fashion analysis in the deep learning era, with more functionalities to be added. This toolbox and the benchmark could serve the flourishing research community by providing a flexible toolkit to deploy existing models and develop new ideas and approaches. We welcome all contributions to this still-growing effort towards open science: https://github.com/open-mmlab/mmfashion.
