Jiajie Wang

A Two-Stage Band-Split Mamba-2 Network for Music Separation

Sep 10, 2024

Abstract: Music source separation (MSS) aims to separate mixed music into its distinct tracks, such as vocals, bass, and drums. MSS is considered a challenging audio separation task due to the complexity of music signals. Although RNN and Transformer architectures have limitations, they are commonly used to model the music sequence for MSS. Mamba-2 has recently demonstrated high efficiency in various sequence modeling tasks, but its advantages have not been investigated in MSS. This paper applies Mamba-2 with a two-stage strategy, which introduces residual mapping on top of the mask method, compensating for details absent from the mask and further improving separation performance. Experiments confirm the superiority of bidirectional Mamba-2 and the effectiveness of the two-stage network in MSS. The source code is publicly accessible at https://github.com/baijinglin/TS-BSmamba2.
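
The idea of adding a residual on top of a masked estimate can be illustrated with a minimal sketch. It is not taken from the paper's code: the function name `two_stage_estimate` and the use of plain lists of real-valued bins are assumptions made for illustration, and the mask and residual are supplied by hand here rather than predicted by a network.

```python
def two_stage_estimate(mixture, mask, residual):
    """Combine a masked estimate with an additive residual correction.

    Hypothetical illustration: `mixture`, `mask`, and `residual` are
    equal-length lists of floats standing in for spectrogram bins.
    """
    if not (len(mixture) == len(mask) == len(residual)):
        raise ValueError("mixture, mask, and residual must have equal length")
    # Stage 1: the mask scales each bin of the mixture.
    masked = [m * x for m, x in zip(mask, mixture)]
    # Stage 2: the residual adds back detail the mask alone cannot express,
    # e.g. energy in a bin where the mask is zero.
    return [s + r for s, r in zip(masked, residual)]


estimate = two_stage_estimate(
    mixture=[1.0, 2.0, 4.0],
    mask=[0.5, 0.0, 1.0],
    residual=[0.0, 0.25, -0.5],
)
print(estimate)
```

In the second bin the mask is zero, so a pure mask method would output nothing there; the residual term is what restores a non-zero value.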

Vector Quantized Diffusion Model Based Speech Bandwidth Extension

Sep 09, 2024

Abstract: Recent advancements in neural audio codecs (NACs) unlock new potential in audio signal processing. Studies have increasingly explored leveraging the latent features of NACs for various speech signal processing tasks. This paper introduces the first approach to speech bandwidth extension (BWE) that utilizes the discrete features obtained from a NAC. By restoring high-frequency details within highly compressed discrete tokens, this approach enhances speech intelligibility and naturalness. Based on Vector Quantized Diffusion, the proposed framework combines the strengths of advanced NACs, diffusion models, and Mamba-2 to reconstruct high-frequency speech components. Extensive experiments demonstrate that this method exhibits superior performance on both log-spectral distance and ViSQOL, significantly improving speech quality.

Robust Predictions with Ambiguous Time Delays: A Bootstrap Strategy

Aug 23, 2024

Abstract: In contemporary data-driven environments, the generation and processing of multivariate time series data is an omnipresent challenge, often complicated by time delays between different time series. These delays, originating from a multitude of sources like varying data transmission dynamics, sensor interferences, and environmental changes, introduce significant complexities. Traditional Time Delay Estimation methods, which typically assume a fixed constant time delay, may not fully capture these variabilities, compromising the precision of predictive models in diverse settings. To address this issue, we introduce the Time Series Model Bootstrap (TSMB), a versatile framework designed to handle potentially varying or even nondeterministic time delays in time series modeling. Contrary to traditional approaches that hinge on the assumption of a single, consistent time delay, TSMB adopts a nonparametric stance, acknowledging and incorporating time delay uncertainties. TSMB significantly bolsters the performance of models that are trained and make predictions using this framework, making it highly suitable for a wide range of dynamic and interconnected data environments.

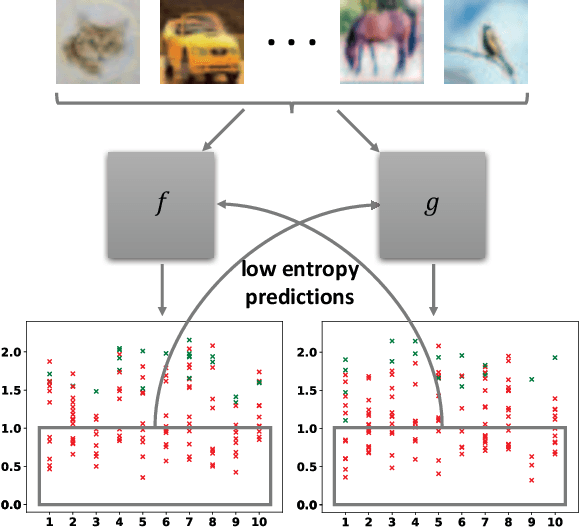

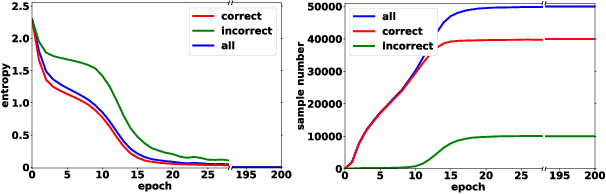

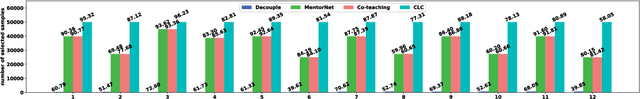

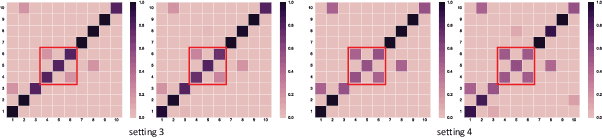

Collaborative Label Correction via Entropy Thresholding

Apr 27, 2021

Abstract: Deep neural networks (DNNs) have the capacity to fit extremely noisy labels; nonetheless, they tend to learn data with clean labels first and then memorize those with noisy labels. We examine this behavior in light of the Shannon entropy of the predictions and demonstrate that the low-entropy predictions selected by a given threshold are much more reliable as supervision than the original noisy labels. This criterion also has the advantage of retaining more training samples than previous methods. We then combine this entropy criterion with the Collaborative Label Correction (CLC) framework to further avoid undesired local minima of a single network. A range of experiments has been conducted on multiple benchmarks with both synthetic and real-world settings. Extensive results indicate that CLC outperforms several state-of-the-art methods.
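
The entropy criterion can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names, the threshold value, and the choice to keep the original noisy label for high-entropy predictions are all hypothetical, and the collaborative part of CLC is not modeled.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy (in nats) of a discrete probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def correct_labels(noisy_labels, predictions, threshold):
    """Replace a noisy label with the predicted class when the prediction
    is confident, i.e. its entropy falls below `threshold`.

    Assumption: samples with high-entropy predictions keep their
    original label instead of being discarded.
    """
    corrected = []
    for label, probs in zip(noisy_labels, predictions):
        if shannon_entropy(probs) < threshold:
            # Low entropy: trust the network's argmax as supervision.
            corrected.append(max(range(len(probs)), key=probs.__getitem__))
        else:
            corrected.append(label)
    return corrected


predictions = [
    [0.98, 0.01, 0.01],  # confident prediction (low entropy)
    [0.40, 0.30, 0.30],  # uncertain prediction (high entropy)
]
print(correct_labels([2, 1], predictions, threshold=0.5))
```

Here the first sample's label is replaced by class 0 because the prediction is confident, while the second sample keeps its original label. The threshold of 0.5 nats is arbitrary and would need tuning per dataset.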

Collaborative Learning for Weakly Supervised Object Detection

Feb 10, 2018

Abstract: Weakly supervised object detection has recently received much attention, since it only requires image-level labels instead of the bounding-box labels consumed in strongly supervised learning. Nevertheless, the saving in labeling expense usually comes at the cost of model accuracy. In this paper, we propose a simple but effective weakly supervised collaborative learning framework to resolve this problem, which trains a weakly supervised learner and a strongly supervised learner jointly by enforcing partial feature sharing and prediction consistency. For object detection, taking a WSDDN-like architecture as the weakly supervised detector sub-network and a Faster-RCNN-like architecture as the strongly supervised detector sub-network, we propose an end-to-end Weakly Supervised Collaborative Detection Network. As there is no strong supervision available to train the Faster-RCNN-like sub-network, a new prediction consistency loss is defined to enforce consistency of predictions between the two sub-networks as well as within the Faster-RCNN-like sub-network. At the same time, the two detectors are designed to partially share features to further guarantee model consistency at the perceptual level. Extensive experiments on the PASCAL VOC 2007 and 2012 data sets demonstrate the effectiveness of the proposed framework.

Deep Learning from Noisy Image Labels with Quality Embedding

Nov 02, 2017

Abstract: There is an emerging trend to leverage noisy image datasets in many visual recognition tasks. However, the label noise in these datasets severely degrades the performance of deep learning approaches. Recently, one mainstream approach is to introduce a latent label to handle label noise, which has shown promising improvement in network designs. Nevertheless, the mismatch between latent labels and noisy labels still affects the predictions in such methods. To address this issue, we propose a quality embedding model, which explicitly introduces a quality variable to represent the trustworthiness of noisy labels. Our key idea is to identify the mismatch between the latent and noisy labels by embedding the quality variables into different subspaces, which effectively minimizes the noise effect. At the same time, the high-quality labels can still be used for training. To instantiate the model, we further propose a Contrastive-Additive Noise network (CAN), which consists of two important layers: (1) the contrastive layer estimates the quality variable in the embedding space to reduce the noise effect; and (2) the additive layer aggregates the prior predictions and noisy labels as the posterior to train the classifier. Moreover, to tackle the optimization difficulty, we derive an SGD algorithm with the reparameterization trick, which makes our method scalable to big data. We evaluate the proposed method on a range of noisy image datasets. Comprehensive results demonstrate that CAN outperforms state-of-the-art deep learning approaches.
