Jiageng Zhong

A Novel Solution for Drone Photogrammetry with Low-overlap Aerial Images using Monocular Depth Estimation

Mar 06, 2025

Abstract:Low-overlap aerial imagery poses significant challenges to traditional photogrammetric methods, which rely heavily on high image overlap to produce accurate and complete mapping products. In this study, we propose a novel workflow based on monocular depth estimation to address the limitations of conventional techniques. Our method leverages tie points obtained from aerial triangulation to establish a relationship between monocular depth and metric depth, thus transforming the original depth map into a metric depth map, enabling the generation of dense depth information and the comprehensive reconstruction of the scene. For the experiments, a high-overlap drone dataset containing 296 images is processed using Metashape to generate depth maps and DSMs as ground truth. Subsequently, we create a low-overlap dataset by selecting 20 images for experimental evaluation. Results demonstrate that while the recovered depth maps and resulting DSMs achieve meter-level accuracy, they provide significantly better completeness compared to traditional methods, particularly in regions covered by single images. This study showcases the potential of monocular depth estimation in low-overlap aerial photogrammetry.
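As a rough illustration of turning a monocular depth map into a metric one from sparse tie points, the sketch below fits a scale and shift by least squares. The abstract does not state which form the relationship takes, so the affine model, the function names `fit_scale_shift` and `to_metric`, and the synthetic data are all assumptions made for illustration, not the authors' method.

```python
import numpy as np


def fit_scale_shift(relative_depth, metric_depth):
    """Least-squares fit of metric ~= scale * relative + shift (assumed affine model)."""
    relative_depth = np.asarray(relative_depth, dtype=float)
    metric_depth = np.asarray(metric_depth, dtype=float)
    # Design matrix with one column for the scale term and one for the shift term.
    A = np.stack([relative_depth, np.ones_like(relative_depth)], axis=1)
    (scale, shift), *_ = np.linalg.lstsq(A, metric_depth, rcond=None)
    return scale, shift


def to_metric(depth_map, scale, shift):
    """Apply the fitted relationship to every pixel of the monocular depth map."""
    return scale * np.asarray(depth_map, dtype=float) + shift


# Synthetic stand-in for a monocular (relative) depth map.
rng = np.random.default_rng(0)
depth_map = rng.uniform(0.1, 1.0, size=(4, 5))

# Hypothetical tie-point pixel locations with metric depths from aerial triangulation.
rows = np.array([0, 1, 2, 3, 3])
cols = np.array([0, 2, 4, 1, 3])
true_scale, true_shift = 80.0, 15.0
tie_metric = true_scale * depth_map[rows, cols] + true_shift

scale, shift = fit_scale_shift(depth_map[rows, cols], tie_metric)
metric_map = to_metric(depth_map, scale, shift)
```

With real data the tie-point depths are noisy and may contain outliers, so a robust estimator would likely be preferable to the plain least-squares fit used here.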

Cutting-edge 3D reconstruction solutions for underwater coral reef images: A review and comparison

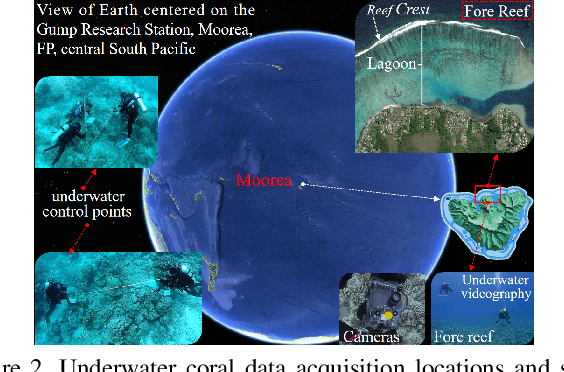

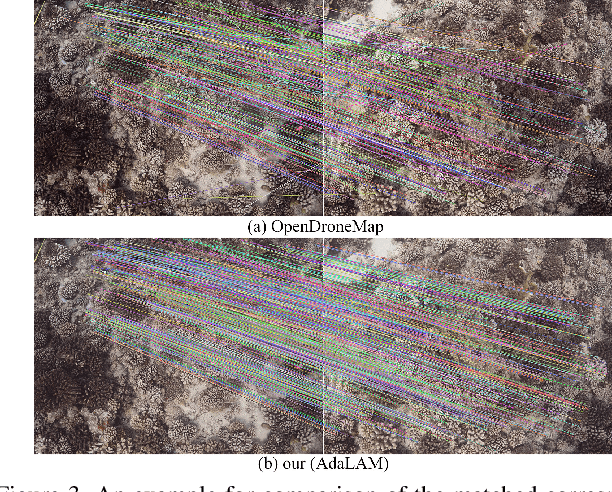

Feb 27, 2025

Abstract:Corals serve as the foundational habitat-building organisms within reef ecosystems, constructing extensive structures that extend over vast distances. However, their inherent fragility and vulnerability to various threats render them susceptible to significant damage and destruction. The application of advanced 3D reconstruction technologies for high-quality modeling is crucial for preserving them. These technologies help scientists to accurately document and monitor the state of coral reefs, including their structure, species distribution and changes over time. Photogrammetry-based approaches stand out among existing solutions, especially with recent advancements in underwater videography, photogrammetric computer vision, and machine learning. Despite continuous progress in image-based 3D reconstruction techniques, there remains a lack of systematic reviews and comprehensive evaluations of cutting-edge solutions specifically applied to underwater coral reef images. The emerging advanced methods may have difficulty coping with underwater imaging environments, complex coral structures, and computational resource constraints. They need to be reviewed and evaluated to bridge the gap between many cutting-edge technical studies and practical applications. This paper focuses on the two critical stages of these approaches: camera pose estimation and dense surface reconstruction. We systematically review and summarize classical and emerging methods, conducting comprehensive evaluations through real-world and simulated datasets. Based on our findings, we offer reference recommendations and discuss the development potential and challenges of existing approaches in depth. This work equips scientists and managers with a technical foundation and practical guidance for processing underwater coral reef images for 3D reconstruction....

A Safer Vision-based Autonomous Planning System for Quadrotor UAVs with Dynamic Obstacle Trajectory Prediction and Its Application with LLMs

Nov 21, 2023

Abstract:For intelligent quadcopter UAVs, a robust and reliable autonomous planning system is crucial. Most current trajectory planning methods for UAVs are suitable for static environments but struggle to handle dynamic obstacles, which can pose challenges and even dangers to flight. To address this issue, this paper proposes a vision-based planning system that combines tracking and trajectory prediction of dynamic obstacles to achieve efficient and reliable autonomous flight. We use a lightweight object detection algorithm to identify dynamic obstacles and then use Kalman Filtering to track and estimate their motion states. During the planning phase, we not only consider static obstacles but also account for the potential movements of dynamic obstacles. For trajectory generation, we use a B-spline-based trajectory search algorithm, which is further optimized with various constraints to enhance safety and alignment with the UAV's motion characteristics. We conduct experiments in both simulation and real-world environments, and the results indicate that our approach can successfully detect and avoid obstacles in dynamic environments in real-time, offering greater reliability compared to existing approaches. Furthermore, with the advancements in Natural Language Processing (NLP) technology demonstrating exceptional zero-shot generalization capabilities, more user-friendly human-machine interactions have become feasible, and this study also explores the integration of autonomous planning systems with Large Language Models (LLMs).
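To make the tracking step concrete, here is a minimal sketch of a Kalman filter that estimates an obstacle's 2D position and velocity from position detections. The abstract does not specify the motion model or noise settings, so the constant-velocity model, the class name `ConstantVelocityKF`, and all parameter values are assumptions for illustration only.

```python
import numpy as np


class ConstantVelocityKF:
    """Kalman filter with state [x, y, vx, vy] and position-only measurements."""

    def __init__(self, dt, process_var=1e-2, meas_var=1e-1):
        # State transition for an assumed constant-velocity motion model.
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        # Only the position components are observed.
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        self.Q = process_var * np.eye(4)  # simplistic isotropic process noise
        self.R = meas_var * np.eye(2)     # measurement noise
        self.x = np.zeros(4)              # initial state estimate
        self.P = 10.0 * np.eye(4)         # large initial uncertainty

    def predict(self):
        """Propagate the state and covariance one time step forward."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x

    def update(self, z):
        """Correct the prediction with a position measurement z = [x, y]."""
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x


# Noise-free synthetic detections of an obstacle moving at (1.0, -0.5) units/s.
dt = 0.1
kf = ConstantVelocityKF(dt=dt)
for k in range(50):
    kf.predict()
    kf.update([1.0 * k * dt, -0.5 * k * dt])
vx, vy = kf.x[2], kf.x[3]
```

Calling `predict()` repeatedly without `update()` extrapolates the obstacle's future positions, which is one simple way a planner could account for obstacle motion.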

Deep Learning-Based UAV Aerial Triangulation without Image Control Points

Jan 07, 2023

Abstract:Drone-based aerial surveying offers low cost, high efficiency, and flexible use. However, UAVs are often equipped with inexpensive POS units and non-metric cameras, and their flight attitude is easily disturbed. Large-scale, POS-supported UAV mapping without image control points therefore faces many technical problems. The core task is to accurately recover the absolute orientation of the images through advanced aerial triangulation. In traditional aerial triangulation, image matching algorithms are constrained to varying degrees by preset prior knowledge. In recent years, deep learning has developed rapidly in photogrammetric computer vision and has surpassed traditional handcrafted features in many respects. It has shown stronger stability in image-based navigation and positioning tasks, with better resistance to unfavorable factors such as blur, illumination changes, and geometric distortion. After introducing the key technologies of aerial triangulation without image control points, this paper proposes a new drone image registration method based on deep-learning image features to address the high mismatch rate of traditional methods. It adopts SuperPoint as the feature detector and uses the generalization performance of CNNs to extract precise feature points from UAV images, thereby achieving high-precision aerial triangulation. Experimental results show that, under the same pre-processing and post-processing conditions, this method achieves suitable precision more efficiently than the traditional SIFT-based method and can meet the requirements of UAV aerial triangulation without image control points in large-scale surveys.

Combining Photogrammetric Computer Vision and Semantic Segmentation for Fine-grained Understanding of Coral Reef Growth under Climate Change

Dec 08, 2022

Abstract:Corals are the primary habitat-building life-form on reefs that support a quarter of the species in the ocean. A coral reef ecosystem usually consists of reefs, each of which is like a tall building in any city. These reef-building corals secrete hard calcareous exoskeletons that give them structural rigidity, and are also a prerequisite for our accurate 3D modeling and semantic mapping using advanced photogrammetric computer vision and machine learning. Underwater videography as a modern underwater remote sensing tool is a high-resolution coral habitat survey and mapping technique. In this paper, detailed 3D mesh models, digital surface models and orthophotos of the coral habitat are generated from the collected coral images and underwater control points. Meanwhile, a novel pixel-wise semantic segmentation approach of orthophotos is performed by advanced deep learning. Finally, the semantic map is mapped into 3D space. For the first time, 3D fine-grained semantic modeling and rugosity evaluation of coral reefs have been completed at millimeter (mm) accuracy. This provides a new and powerful method for understanding the processes and characteristics of coral reef change at high spatial and temporal resolution under climate change.
