Jiadi Cui

CityGo: Lightweight Urban Modeling and Rendering with Proxy Buildings and Residual Gaussians

May 28, 2025

Abstract: Accurate and efficient modeling of large-scale urban scenes is critical for applications such as AR navigation, UAV-based inspection, and smart city digital twins. While aerial imagery offers broad coverage and complements limitations of ground-based data, reconstructing city-scale environments from such views remains challenging due to occlusions, incomplete geometry, and high memory demands. Recent advances like 3D Gaussian Splatting (3DGS) improve scalability and visual quality but remain limited by dense primitive usage, long training times, and poor suitability for edge devices. We propose CityGo, a hybrid framework that combines textured proxy geometry with residual and surrounding 3D Gaussians for lightweight, photorealistic rendering of urban scenes from aerial perspectives. Our approach first extracts compact building proxy meshes from MVS point clouds, then uses zero-order SH Gaussians to generate occlusion-free textures via image-based rendering and back-projection. To capture high-frequency details, we introduce residual Gaussians placed based on proxy-photo discrepancies and guided by depth priors. Broader urban context is represented by surrounding Gaussians, with importance-aware downsampling applied to non-critical regions to reduce redundancy. A tailored optimization strategy jointly refines proxy textures and Gaussian parameters, enabling real-time rendering of complex urban scenes on mobile GPUs with significantly reduced training and memory requirements. Extensive experiments on real-world aerial datasets demonstrate that our hybrid representation significantly reduces training time, achieving a 1.4x speedup on average, while delivering visual fidelity comparable to pure 3D Gaussian Splatting approaches. Furthermore, CityGo enables real-time rendering of large-scale urban scenes on mobile consumer GPUs, with substantially reduced memory usage and energy consumption.

LetsGo: Large-Scale Garage Modeling and Rendering via LiDAR-Assisted Gaussian Primitives

Apr 15, 2024

Abstract: Large garages are ubiquitous yet intricate scenes in our daily lives, posing challenges characterized by monotonous colors, repetitive patterns, reflective surfaces, and transparent vehicle glass. Conventional Structure from Motion (SfM) methods for camera pose estimation and 3D reconstruction fail in these environments due to poor correspondence construction. To address these challenges, this paper introduces LetsGo, a LiDAR-assisted Gaussian splatting approach for large-scale garage modeling and rendering. We develop a handheld scanner, Polar, equipped with IMU, LiDAR, and a fisheye camera, to facilitate accurate LiDAR and image data scanning. With this Polar device, we present a GarageWorld dataset consisting of five expansive garage scenes with diverse geometric structures and will release the dataset to the community for further research. We demonstrate that the LiDAR point cloud collected by the Polar device enhances a suite of 3D Gaussian splatting algorithms for garage scene modeling and rendering. We also propose a novel depth regularizer for training 3D Gaussian splatting algorithms, effectively eliminating floating artifacts in rendered images, and a lightweight Level of Detail (LOD) Gaussian renderer for real-time viewing on web-based devices. Additionally, we explore a hybrid representation that combines the advantages of traditional meshes in depicting simple geometry and colors (e.g., walls and the ground) with modern 3D Gaussian representations capturing complex details and high-frequency textures. This strategy achieves an optimal balance between memory performance and rendering quality. Experimental results on our dataset, along with ScanNet++ and KITTI-360, demonstrate the superiority of our method in rendering quality and resource efficiency.

CP+: Camera Poses Augmentation with Large-scale LiDAR Maps

Feb 27, 2023

Abstract: Large-scale colored point clouds have many advantages in navigation or scene display. Relying on cameras and LiDARs, which are now widely used in reconstruction tasks, it is possible to obtain such colored point clouds. However, the information from these two kinds of sensors is not well fused in many existing frameworks, resulting in inaccurate camera poses and degraded point colorization. We propose a novel framework called Camera Pose Augmentation (CP+) to improve the camera poses and align them directly with the LiDAR-based point cloud. Initial coarse camera poses are given by LiDAR-Inertial or LiDAR-Inertial-Visual Odometry with approximate extrinsic parameters and time synchronization. The key steps to improve the alignment of the images consist of selecting a point cloud corresponding to a region of interest in each camera view, extracting reliable edge features from this point cloud, and deriving 2D-3D line correspondences which are used for iterative minimization of the re-projection error.

Efficient Globally-Optimal Correspondence-Less Visual Odometry for Planar Ground Vehicles

Mar 01, 2022

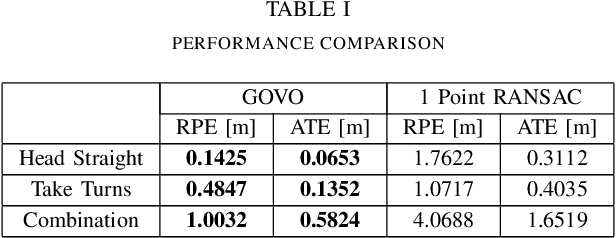

Abstract: The motion of planar ground vehicles is often non-holonomic, and as a result may be modelled by the 2 DoF Ackermann steering model. We analyse the feasibility of estimating such motion with a downward facing camera that undergoes fronto-parallel motion with respect to the ground plane. This turns the motion estimation into a simple image registration problem in which we only have to identify a 2-parameter planar homography. However, one difficulty that arises from this setup is that ground-plane features are indistinctive and thus hard to match between successive views. We counter this difficulty by introducing the first globally-optimal, correspondence-less solution to plane-based Ackermann motion estimation. The solution relies on the branch-and-bound optimisation technique. Through the low-dimensional parametrisation, a derivation of tight bounds, and an efficient implementation, we demonstrate how this technique is amenable to accurate real-time motion estimation. We prove its property of global optimality and analyse the impact of assuming a locally constant centre of rotation. Our results on real data demonstrate a significant advantage over the more traditional, correspondence-based hypothesise-and-test schemes.
