Jiachen Du

Tencent Music Entertainment

DiVa: An Iterative Framework to Harvest More Diverse and Valid Labels from User Comments for Music

Aug 09, 2023

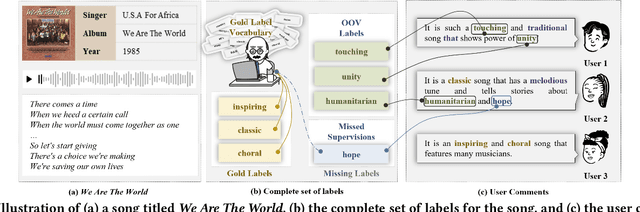

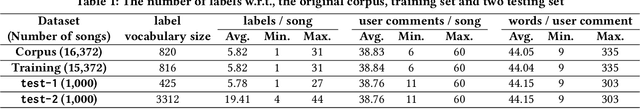

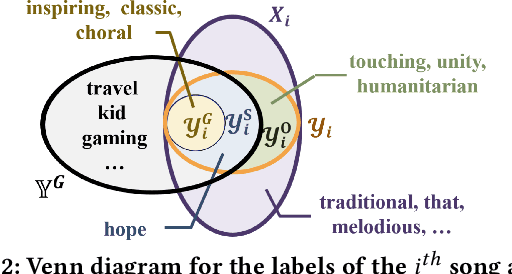

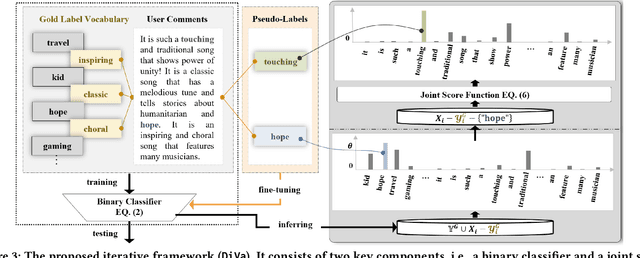

Abstract: For effective music search, it is vital to form a complete set of labels for each song. However, current solutions fall short because they cannot produce mappings diverse enough to make up for the information missed by the gold labels. Based on the observation that such missing information may already be present in user comments, we propose to study automated music labeling in an essential but under-explored setting, where the model is required to harvest more diverse and valid labels from users' comments given limited gold labels. To this end, we design an iterative framework (DiVa) to harvest more $\underline{\text{Di}}$verse and $\underline{\text{Va}}$lid labels from user comments for music. The framework enables a classifier to form complete sets of labels for songs via pseudo-labels inferred from pre-trained classifiers and a novel joint score function. An experiment on a densely annotated test set shows that DiVa outperforms state-of-the-art solutions in producing more diverse labels missed by the gold labels. We hope our work can inspire future research on automated music labeling.

Empathetic Response Generation with State Management

May 07, 2022

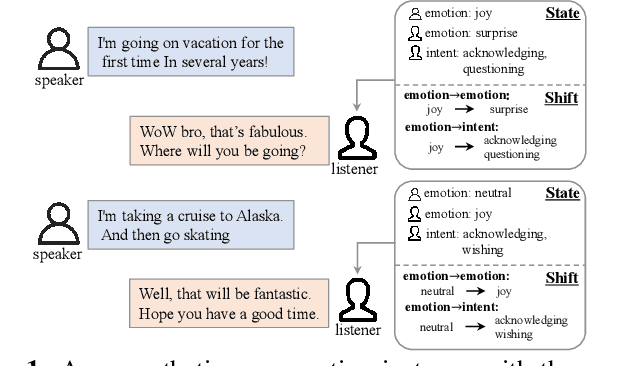

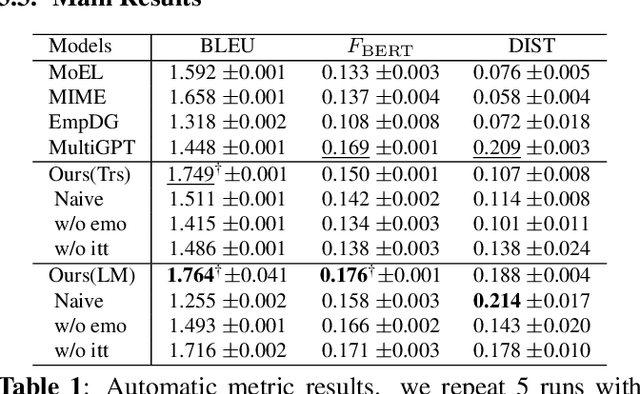

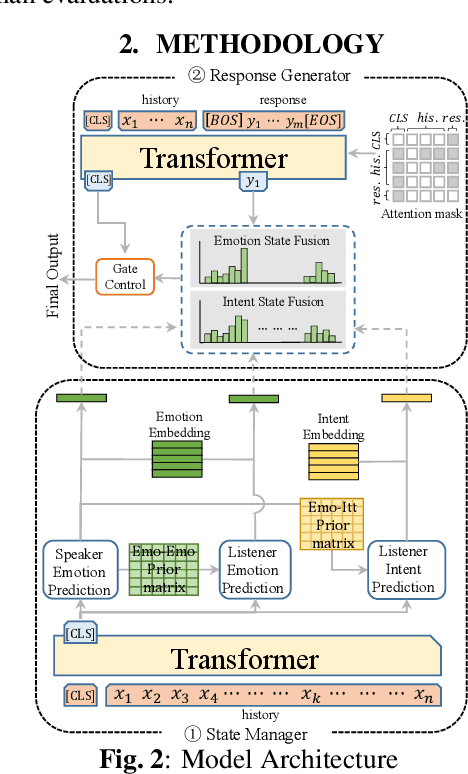

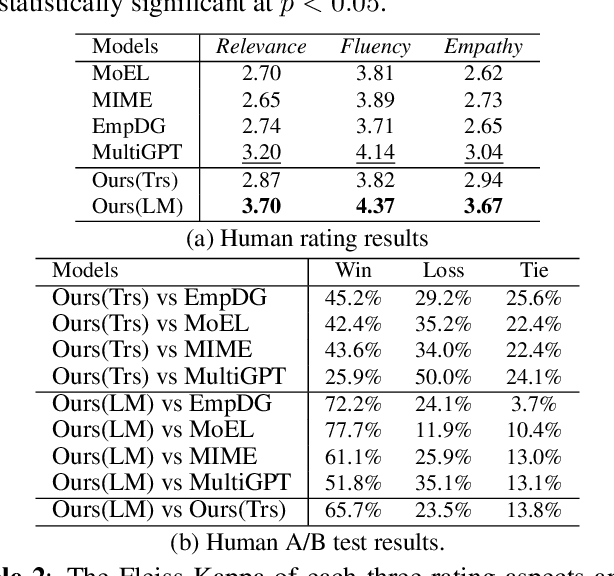

Abstract: The goal of empathetic response generation is to enhance the ability of dialogue systems to perceive and express emotions in conversations. Current approaches to this task mainly focus on improving the response generation model by recognizing the emotion of the user or predicting a target emotion to guide the generation of responses. Such models exploit only partial information (the user's emotion or the target emotion used as a guiding signal) and do not consider multiple kinds of information together. In addition to the emotional style of the response, the intent of the response is also very important for empathetic responding. Thus, we propose a novel empathetic response generation model that considers multiple kinds of state information, including emotions and intents, simultaneously. Specifically, we introduce a state management method to dynamically update the dialogue states, in which the user's emotion is first recognized, then the target emotion and intent are obtained via predefined shift patterns with the user's emotion as input. The obtained information is used to control the response generation. Experimental results show that dynamically managing different kinds of information helps the model generate more empathetic responses than several baselines under both automatic and human evaluations.

Context-aware Embedding for Targeted Aspect-based Sentiment Analysis

Jun 17, 2019

Abstract: Attention-based neural models have been employed to detect the different aspects and sentiment polarities of the same target in targeted aspect-based sentiment analysis (TABSA). However, existing methods do not specifically pre-train reasonable embeddings for targets and aspects in TABSA. This may result in targets or aspects having the same vector representations in different contexts and losing context-dependent information. To address this problem, we propose a novel method to refine the embeddings of targets and aspects. This embedding refinement uses a sparse coefficient vector to adjust the embeddings of the target and aspect based on the context. Hence, the embeddings of targets and aspects can be refined from highly correlated words instead of using context-independent or randomly initialized vectors. Experimental results on two benchmark datasets show that our approach yields state-of-the-art performance on the TABSA task.

A Question Answering Approach to Emotion Cause Extraction

Sep 24, 2017

Abstract: Emotion cause extraction aims to identify the reasons behind a certain emotion expressed in text. It is a much more difficult task than emotion classification. Inspired by recent advances in using deep memory networks for question answering (QA), we propose a new approach that treats emotion cause identification as a reading comprehension task in QA. Inspired by convolutional neural networks, we propose a new mechanism to store relevant context in different memory slots to model context information. Our proposed approach can extract both word-level sequence features and lexical features. Performance evaluation shows that our method achieves state-of-the-art performance on a recently released emotion cause dataset, outperforming a number of competitive baselines by at least 3.01% in F-measure.
