Jerome Sieber

Design Principles for Sequence Models via Coefficient Dynamics

Oct 10, 2025

Abstract: Deep sequence models, ranging from Transformers and State Space Models (SSMs) to more recent approaches such as gated linear RNNs, fundamentally compute outputs as linear combinations of past value vectors. To draw insights and systematically compare such architectures, we develop a unified framework that makes this output operation explicit, by casting the linear combination coefficients as the outputs of autonomous linear dynamical systems driven by impulse inputs. This viewpoint, in spirit substantially different from approaches focusing on connecting linear RNNs with linear attention, reveals a common mathematical theme across diverse architectures and crucially captures softmax attention, on top of RNNs, SSMs, and related models. In contrast to new model proposals that are commonly evaluated on benchmarks, we derive design principles linking architectural choices to model properties, thereby identifying tradeoffs between expressivity and efficient implementation, geometric constraints on input selectivity, and stability conditions for numerically stable training and information retention. By connecting several insights and observations from recent literature, the framework both explains empirical successes of recent designs and provides guiding principles for systematically designing new sequence model architectures.
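
The coefficient view described in this abstract can be illustrated with a toy example. The sketch below is not the paper's formulation: it assumes a scalar gated linear recurrence `h_t = a_t * h_{t-1} + b_t * v_t` (the names `a`, `b`, `v` and both helper functions are hypothetical), with scalar values standing in for value vectors. It checks that the recurrent output equals an explicit linear combination of past values, where each coefficient starts as an impulse `b_j` at step `j` and then evolves on its own under the gates.

```python
def recurrent_outputs(a, b, v):
    """Run h_t = a_t * h_{t-1} + b_t * v_t and return every h_t."""
    h = 0.0
    outputs = []
    for a_t, b_t, v_t in zip(a, b, v):
        h = a_t * h + b_t * v_t
        outputs.append(h)
    return outputs


def coefficient_matrix(a, b):
    """Return alpha, where alpha[t][j] is the weight of v[j] in output t."""
    T = len(a)
    alpha = [[0.0] * T for _ in range(T)]
    for j in range(T):
        c = b[j]                 # impulse injected at step j
        alpha[j][j] = c
        for t in range(j + 1, T):
            c = a[t] * c         # autonomous evolution after the impulse
            alpha[t][j] = c
    return alpha


# Illustrative gate and input values (assumptions, not taken from the paper).
a = [0.9, 0.5, 0.8, 1.0]
b = [1.0, 0.5, 2.0, 1.0]
v = [1.0, -2.0, 3.0, 0.5]

alpha = coefficient_matrix(a, b)
y_recurrent = recurrent_outputs(a, b, v)
y_explicit = [sum(alpha[t][j] * v[j] for j in range(len(v)))
              for t in range(len(v))]
```

Here `alpha` is lower triangular, so every output depends only on current and past values, and the two ways of computing the output agree up to floating-point error.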

Task-Level Insights from Eigenvalues across Sequence Models

Oct 10, 2025

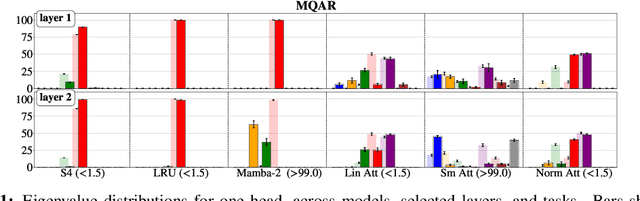

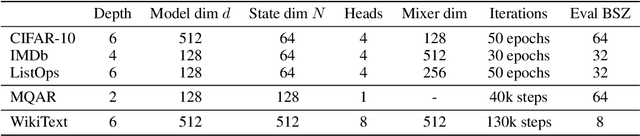

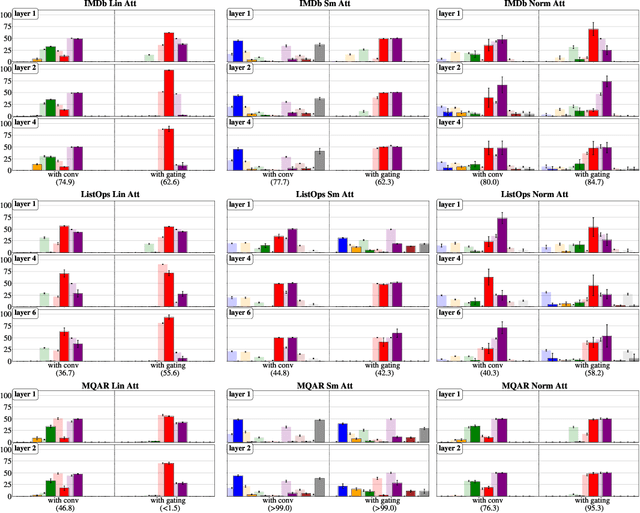

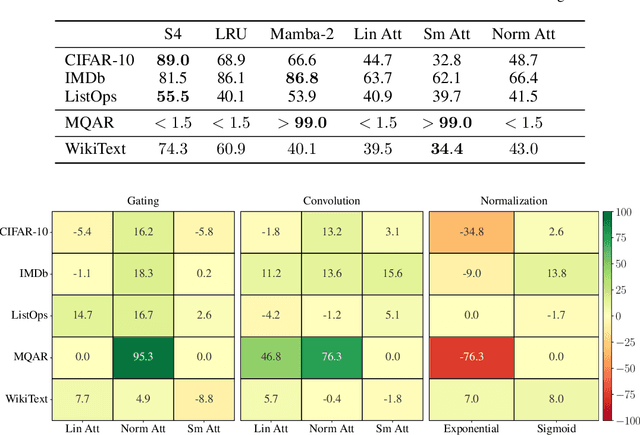

Abstract: Although softmax attention drives state-of-the-art performance for sequence models, its quadratic complexity limits scalability, motivating linear alternatives such as state space models (SSMs). While these alternatives improve efficiency, their fundamental differences in information processing remain poorly understood. In this work, we leverage the recently proposed dynamical systems framework to represent softmax, norm, and linear attention as dynamical systems, enabling a structured comparison with SSMs by analyzing their respective eigenvalue spectra. Since eigenvalues capture essential aspects of dynamical system behavior, we conduct an extensive empirical analysis across diverse sequence models and benchmarks. We first show that eigenvalues influence essential aspects of memory and long-range dependency modeling, revealing spectral signatures that align with task requirements. Building on these insights, we then investigate how architectural modifications in sequence models impact both eigenvalue spectra and task performance. This correspondence further strengthens the position of eigenvalue analysis as a principled metric for interpreting, understanding, and ultimately improving the capabilities of sequence models.
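
As a rough illustration of why eigenvalues relate to memory, consider the simplest possible case. The sketch below is not the paper's analysis: it assumes a one-dimensional linear recurrence `h_t = eigenvalue * h_{t-1} + x_t`, in which an input seen `k` steps ago is weighted by `eigenvalue ** k`. The function names and the `threshold` parameter are hypothetical.

```python
import math


def impulse_response(eigenvalue, steps):
    """Weights that h_t = eigenvalue * h_{t-1} + x_t puts on inputs 0..steps-1 steps back."""
    return [eigenvalue ** k for k in range(steps)]


def memory_horizon(eigenvalue, threshold=0.01):
    """Smallest k with |eigenvalue|**k < threshold, assuming 0 < threshold < 1.

    Returns math.inf when |eigenvalue| >= 1, since the weight never decays.
    """
    magnitude = abs(eigenvalue)
    if magnitude >= 1.0:
        return math.inf
    if magnitude == 0.0:
        return 1
    return math.floor(math.log(threshold) / math.log(magnitude)) + 1


fast_decay = memory_horizon(0.5)    # forgets quickly
slow_decay = memory_horizon(0.99)   # retains inputs far longer
no_decay = memory_horizon(1.0)      # never forgets in this toy model
```

In this toy setting, eigenvalue magnitudes close to 1 correspond to long memory horizons, while small magnitudes correspond to rapid forgetting.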

Lambda-Skip Connections: the architectural component that prevents Rank Collapse

Oct 14, 2024

Abstract: Rank collapse, a phenomenon where embedding vectors in sequence models rapidly converge to a uniform token or equilibrium state, has recently gained attention in the deep learning literature. This phenomenon leads to reduced expressivity and potential training instabilities due to vanishing gradients. Empirical evidence suggests that architectural components like skip connections, LayerNorm, and MultiLayer Perceptrons (MLPs) play critical roles in mitigating rank collapse. While this issue is well-documented for transformers, alternative sequence models, such as State Space Models (SSMs), which have recently gained prominence, have not been thoroughly examined for similar vulnerabilities. This paper extends the theory of rank collapse from transformers to SSMs using a unifying framework that captures both architectures. We study how a parametrized version of the classic skip connection component, which we call *lambda-skip connections*, provides guarantees for rank collapse prevention. Through analytical results, we present a sufficient condition to guarantee prevention of rank collapse across all the aforementioned architectures. We also study the necessity of this condition via ablation studies and analytical examples. To our knowledge, this is the first study that provides a general guarantee to prevent rank collapse, and that investigates rank collapse in the context of SSMs, offering valuable understanding for both theoreticians and practitioners. Finally, we validate our findings with experiments demonstrating the crucial role of architectural components such as skip connections and gating mechanisms in preventing rank collapse.

Understanding the differences in Foundation Models: Attention, State Space Models, and Recurrent Neural Networks

May 24, 2024

Abstract: Softmax attention is the principal backbone of foundation models for various artificial intelligence applications, yet its quadratic complexity in sequence length can limit its inference throughput in long-context settings. To address this challenge, alternative architectures such as linear attention, State Space Models (SSMs), and Recurrent Neural Networks (RNNs) have been considered as more efficient alternatives. While connections between these approaches exist, such models are commonly developed in isolation and there is a lack of theoretical understanding of the shared principles underpinning these architectures and their subtle differences, greatly influencing performance and scalability. In this paper, we introduce the Dynamical Systems Framework (DSF), which allows a principled investigation of all these architectures in a common representation. Our framework facilitates rigorous comparisons, providing new insights on the distinctive characteristics of each model class. For instance, we compare linear attention and selective SSMs, detailing their differences and conditions under which both are equivalent. We also provide principled comparisons between softmax attention and other model classes, discussing the theoretical conditions under which softmax attention can be approximated. Additionally, we substantiate these new insights with empirical validations and mathematical arguments. This shows the DSF's potential to guide the systematic development of future more efficient and scalable foundation models.

State Space Models as Foundation Models: A Control Theoretic Overview

Mar 25, 2024

Abstract: In recent years, there has been a growing interest in integrating linear state-space models (SSMs) in deep neural network architectures of foundation models. This is exemplified by the recent success of Mamba, showing better performance than the state-of-the-art Transformer architectures in language tasks. Foundation models, such as GPT-4, aim to encode sequential data into a latent space in order to learn a compressed representation of the data. The same goal has been pursued by control theorists using SSMs to efficiently model dynamical systems. Therefore, SSMs can be naturally connected to deep sequence modeling, offering the opportunity to create synergies between the corresponding research areas. This paper is intended as a gentle introduction to SSM-based architectures for control theorists and summarizes the latest research developments. It provides a systematic review of the most successful SSM proposals and highlights their main features from a control theoretic perspective. Additionally, we present a comparative analysis of these models, evaluating their performance on a standardized benchmark designed for assessing a model's efficiency at learning long sequences.

Chronos and CRS: Design of a miniature car-like robot and a software framework for single and multi-agent robotics and control

Sep 24, 2022

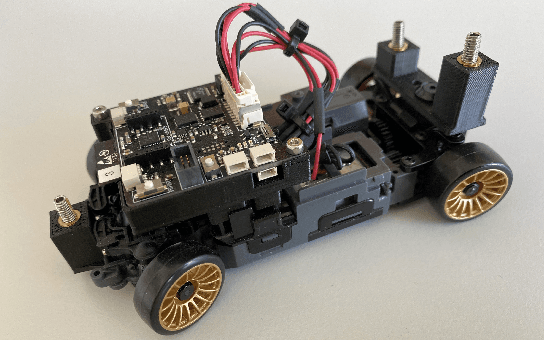

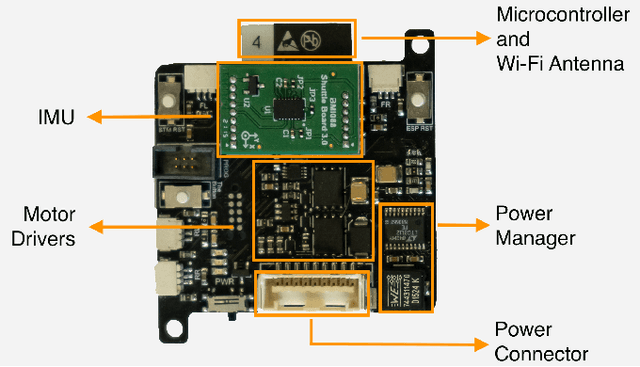

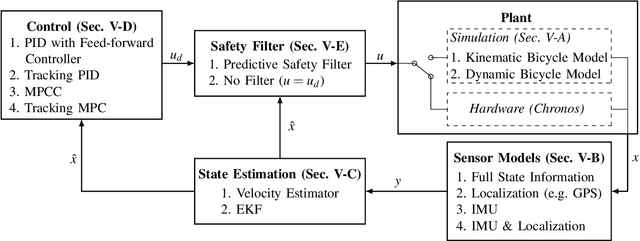

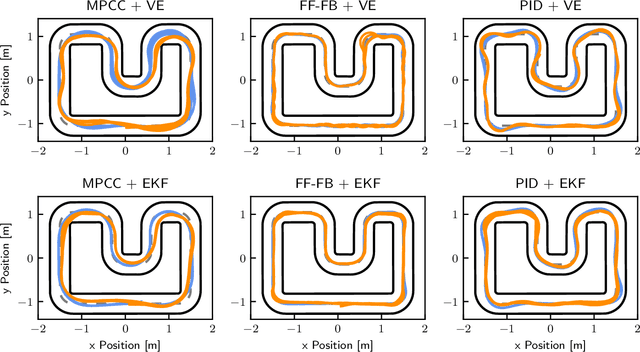

Abstract: From both an educational and research point of view, experiments on hardware are a key aspect of robotics and control. In the last decade, many open-source hardware and software frameworks for wheeled robots have been presented, mainly in the form of unicycles and car-like robots, with the goal of making robotics accessible to a wider audience and to support control systems development. Unicycles are usually small and inexpensive, and therefore facilitate experiments in a larger fleet, but they are not suited for high-speed motion. Car-like robots are more agile, but they are usually larger and more expensive, thus requiring more resources in terms of space and money. In order to bridge this gap, we present Chronos, a new car-like 1/28th scale robot with customized open-source electronics, and CRS, an open-source software framework for control and robotics. The CRS software framework includes the implementation of various state-of-the-art algorithms for control, estimation, and multi-agent coordination. With this work, we aim to provide easier access to hardware and reduce the engineering time needed to start new educational and research projects.

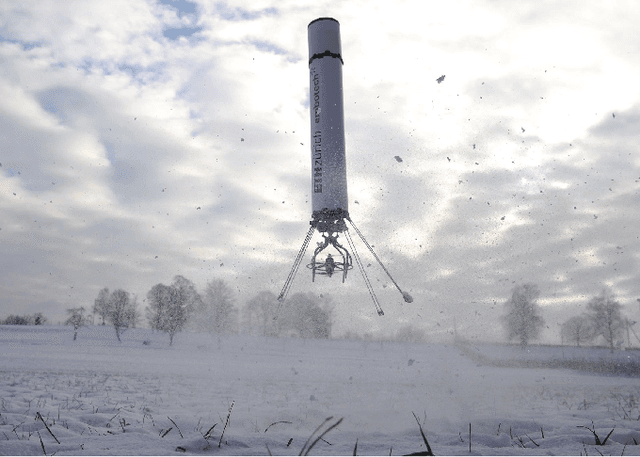

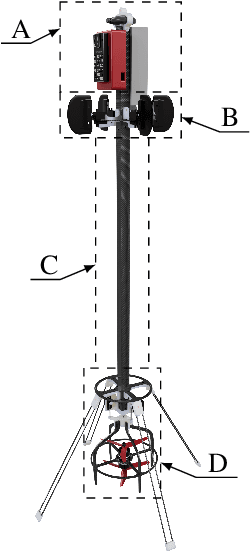

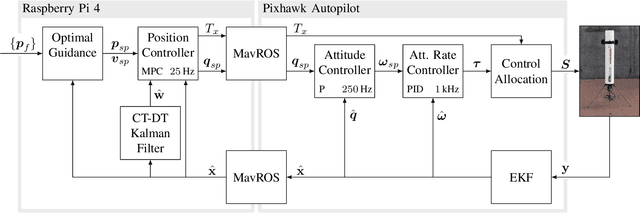

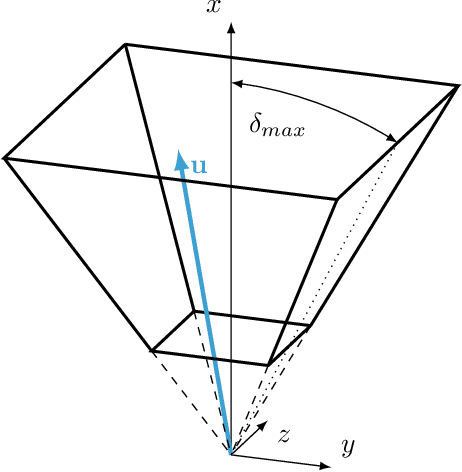

Design, Optimal Guidance and Control of a Low-cost Re-usable Electric Model Rocket

Mar 08, 2021

Abstract: In the last decade, autonomous vertical take-off and landing (VTOL) vehicles have become increasingly important as they lower mission costs thanks to their re-usability. However, their development is complex, rendering even the basic experimental validation of the required advanced guidance and control (G&C) algorithms prohibitively time-consuming and costly. In this paper, we present the design of an inexpensive small-scale VTOL platform that can be built from off-the-shelf components for less than 1000 USD. The vehicle design mimics the first stage of a reusable launcher, making it a perfect test-bed for G&C algorithms. To control the vehicle during ascent and descent, we propose a real-time optimization-based G&C algorithm. The key features are a real-time minimum fuel and free-final-time optimal guidance combined with an offset-free tracking model predictive position controller. The vehicle hardware design and the G&C algorithm are experimentally validated both indoors and outdoors, showing reliable operation in a fully autonomous fashion with all computations done on-board and in real-time.
