Jeffrey Wishart

Science Foundation AZ/AZ Commerce Authority, SAE On-Road Automated Driving

Enhanced Cooperative Perception Through Asynchronous Vehicle to Infrastructure Framework with Delay Mitigation for Connected and Automated Vehicles

Apr 10, 2025

Abstract: Perception is a key component of automated vehicles (AVs). However, sensors mounted on AVs often have blind spots caused by obstructions from other vehicles, infrastructure, or objects in the surrounding area. While recent advances in planning and control algorithms help AVs react to objects that suddenly appear from blind spots at low speeds and in less complex scenarios, challenges remain at high speeds and at complex intersections. Vehicle to Infrastructure (V2I) technology promises to enhance scene representation for AVs at complex intersections, providing sufficient time and distance to react to adversary vehicles violating traffic rules. Most existing methods for infrastructure-based vehicle detection and tracking rely on LIDAR, RADAR, or sensor fusion methods such as LIDAR-Camera and RADAR-Camera. Although LIDAR and RADAR provide accurate spatial information, the sparsity of point cloud data limits their ability to capture detailed contours of distant objects, resulting in inaccurate 3D object detection. Furthermore, because LIDAR and RADAR are not installed at every intersection, relying on them increases the cost of implementing V2I technology. To address these challenges, this paper proposes a V2I framework that uses monocular traffic cameras at road intersections to detect 3D objects. The results from the roadside unit (RSU) are then combined with those of the on-board system using an asynchronous late fusion method to enhance scene representation. Additionally, the proposed framework includes a time delay compensation module that compensates for the processing and transmission delay from the RSU. Lastly, the V2I framework is tested by simulating and validating a scenario similar to the one described in an industry report by Waymo. The results show that the proposed method improves the scene representation and the AV's perception range, giving it enough time and space to react to adversary vehicles.
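The delay compensation and late fusion steps mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the constant-velocity motion model, the `Track` fields, the function names, and the 2 m duplicate gate are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical object state in a shared map frame (metres, m/s, seconds)."""
    x: float
    y: float
    vx: float
    vy: float
    stamp: float  # time at which the measurement was captured


def compensate_delay(track: Track, now: float) -> Track:
    """Extrapolate a delayed RSU track to `now` with a constant-velocity model."""
    dt = max(0.0, now - track.stamp)  # never extrapolate backwards in time
    return Track(track.x + track.vx * dt, track.y + track.vy * dt,
                 track.vx, track.vy, now)


def late_fuse(onboard: list[Track], roadside: list[Track],
              now: float, gate: float = 2.0) -> list[Track]:
    """Object-level (late) fusion: keep all on-board tracks and add any
    delay-compensated roadside track that lies farther than `gate` metres
    from every on-board track (closer ones are treated as duplicates)."""
    fused = list(onboard)
    for r in roadside:
        r_now = compensate_delay(r, now)
        if all(math.hypot(r_now.x - o.x, r_now.y - o.y) > gate for o in onboard):
            fused.append(r_now)
    return fused
```

In this sketch the roadside tracks may carry older timestamps than the on-board tracks, which is why each one is projected forward before association; a real system would also need to handle differing coordinate frames and clock synchronization.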

Developing a Safety Management System for the Autonomous Vehicle Industry

Nov 21, 2024

Abstract: Safety Management Systems (SMSs) have been used in many safety-critical industries and are now being developed and deployed in the automated driving system (ADS)-equipped vehicle (AV) sector. Industries with decades of SMS deployment have established frameworks tailored to their specific context. Several frameworks for an AV industry SMS have been proposed or are currently under development. These frameworks borrow heavily from the aviation industry, although the AV and aviation industries differ in many significant ways. In this context, there is a need to review the approach to developing an SMS that is tailored to the AV industry, building on generalized lessons learned from other safety-sensitive industries. A harmonized AV-industry SMS framework would establish a single set of SMS practices to address management of broad safety risks in an integrated manner and advance the establishment of a more mature regulatory framework. This paper outlines a proposed SMS framework for the AV industry based on robust taxonomy development and validation criteria and provides the rationale for such an approach.

Keywords: Safety Management System (SMS), Automated Driving System (ADS), ADS-Equipped Vehicle, Autonomous Vehicles (AV)

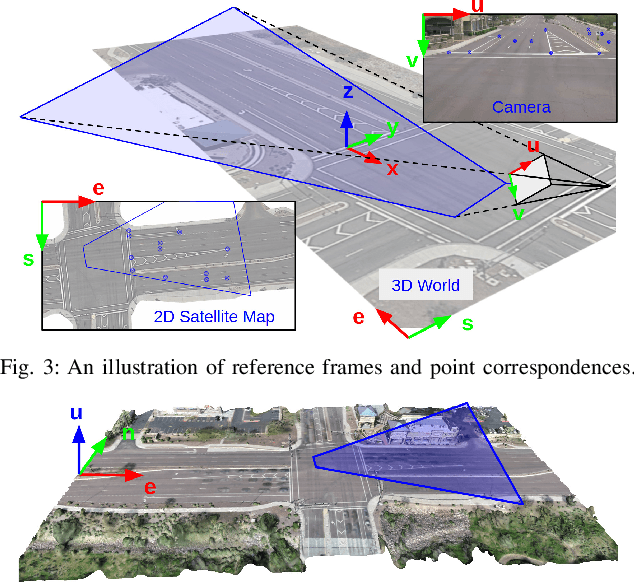

CAROM Air -- Vehicle Localization and Traffic Scene Reconstruction from Aerial Videos

May 31, 2023

Abstract: Road traffic scene reconstruction from videos has long been desired by road safety regulators, city planners, researchers, and autonomous driving technology developers. However, it is expensive and unnecessary to cover every mile of the road with cameras mounted on the road infrastructure. This paper presents a method that converts aerial videos into vehicle trajectory data so that a traffic scene can be automatically reconstructed and accurately re-simulated on a computer. On average, the vehicle localization error is about 0.1 m to 0.3 m using a consumer-grade drone flying at 120 meters. This project also compiles a dataset of 50 reconstructed road traffic scenes from about 100 hours of aerial videos to enable various downstream traffic analysis applications and facilitate further road traffic related research. The dataset is available at https://github.com/duolu/CAROM.

Network-level Safety Metrics for Overall Traffic Safety Assessment: A Case Study

Jan 27, 2022

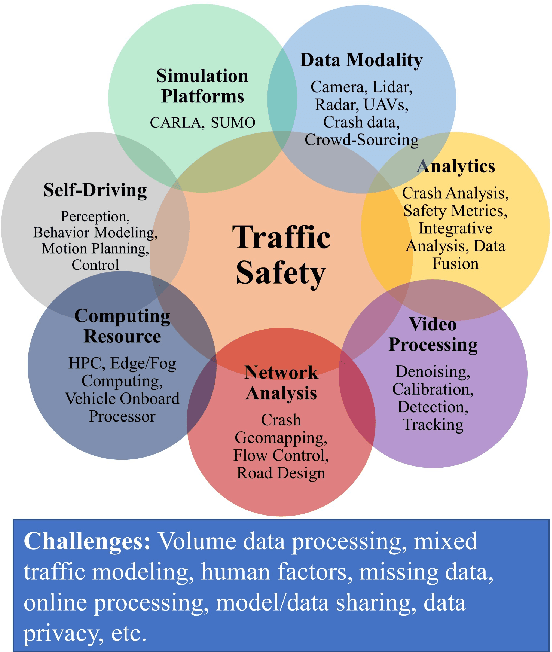

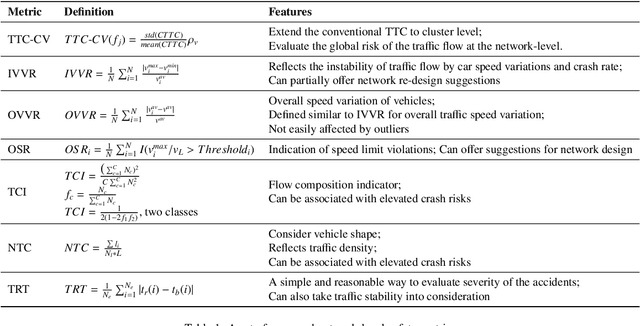

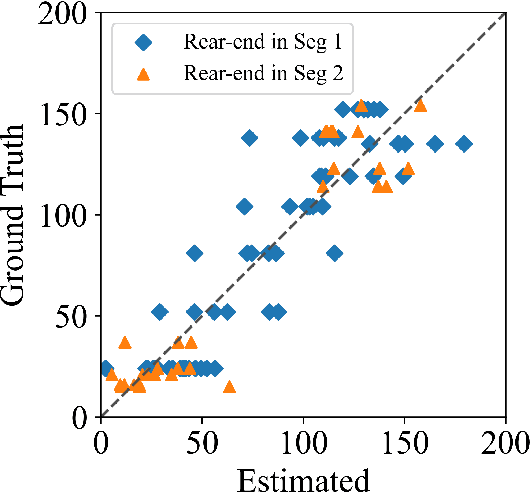

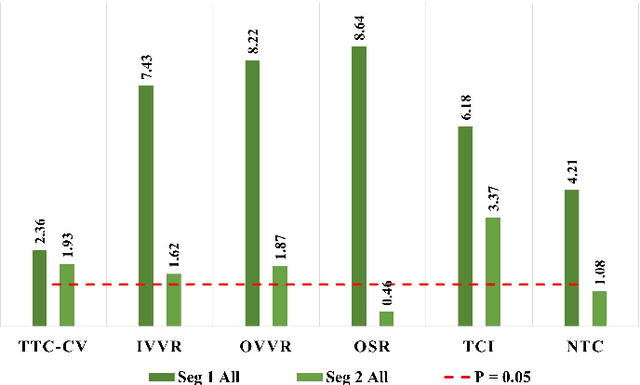

Abstract: Driving safety analysis has recently witnessed unprecedented results due to advances in computation frameworks, connected vehicle technology, new generation sensors, and artificial intelligence (AI). In particular, recent advances in the performance of deep learning (DL) methods have realized higher levels of safety for autonomous vehicles and enabled high-volume imagery processing for driving safety analysis. An important application of DL methods is extracting driving safety metrics from traffic imagery. However, the majority of current methods use safety metrics for micro-scale analysis of individual crash incidents or near-crash events, which does not provide useful guidelines for overall network-level traffic management. On the other hand, large-scale safety assessment efforts mainly emphasize spatial and temporal distributions of crashes, while not always revealing the safety violations that cause crashes. To bridge these two perspectives, we define a new set of network-level safety metrics for the overall safety assessment of traffic flow by processing imagery taken by roadside infrastructure sensors. An integrative analysis of the safety metrics and crash data reveals temporal and spatial correlation between the representative network-level safety metrics and the crash frequency. The analysis is performed using two video cameras in the state of Arizona along with a 5-year crash report obtained from the Arizona Department of Transportation. The results confirm that network-level safety metrics can be used by traffic management teams to equip traffic monitoring systems with advanced AI-based risk analysis and to make timely traffic flow control decisions.
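As a rough illustration of relating a network-level safety metric to crash frequency, the sketch below computes a Pearson correlation coefficient between two equal-length series (for example, one value per time bin). The abstract does not state which correlation measure the authors use, so the choice of Pearson correlation and the function name are assumptions.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between a safety-metric series and crash counts.

    Both series must be aligned (same time or location bins) and must
    each contain at least two values with non-zero variance.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    dev_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    dev_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if dev_x == 0 or dev_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (dev_x * dev_y)
```

A value near +1 would indicate that bins with higher metric values also tend to have more crashes; it would not by itself establish that the measured violations cause those crashes.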

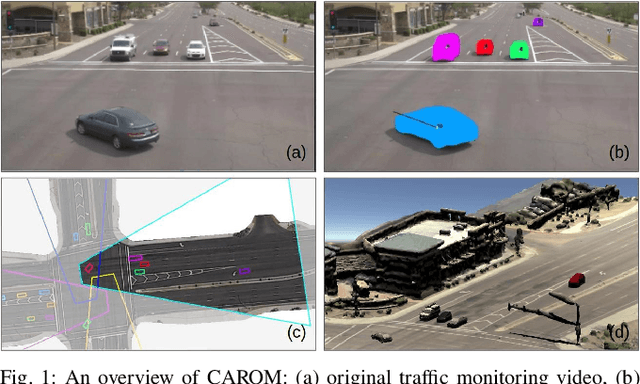

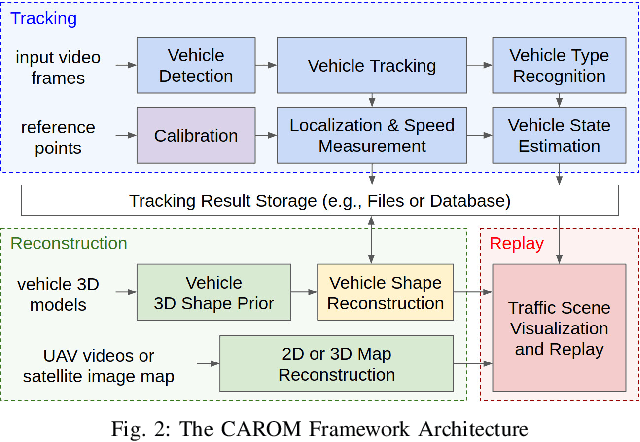

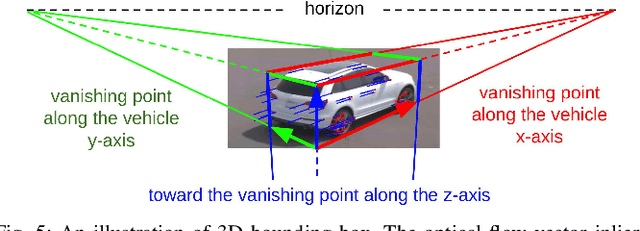

CAROM -- Vehicle Localization and Traffic Scene Reconstruction from Monocular Cameras on Road Infrastructures

Apr 02, 2021

Abstract: Traffic monitoring cameras are powerful tools for traffic management and essential components of intelligent road infrastructure systems. In this paper, we present a vehicle localization and traffic scene reconstruction framework using these cameras, dubbed CAROM, i.e., "CARs On the Map". CAROM processes traffic monitoring videos and converts them to anonymous data structures of vehicle type, 3D shape, position, and velocity for traffic scene reconstruction and replay. In collaboration with a local department of transportation in the United States, we constructed a benchmarking dataset containing GPS data, roadside camera videos, and drone videos to validate the vehicle tracking results. On average, the localization error is approximately 0.8 m within 50 m of the cameras and 1.7 m within 120 m.
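A range-binned localization error of the kind reported above could be computed along the following lines. This is a sketch under assumptions, not the authors' evaluation code: it assumes estimated and GPS reference positions are already time-aligned pairs in a common planar map frame (metres), and the function and parameter names are hypothetical.

```python
import math

Point = tuple[float, float]


def mean_localization_error(estimates: list[Point], references: list[Point],
                            camera_xy: Point, max_range: float) -> float:
    """Mean Euclidean error over samples whose reference position lies
    within `max_range` metres of the camera; NaN if no sample qualifies."""
    errors = []
    for (ex, ey), (rx, ry) in zip(estimates, references):
        distance_to_camera = math.hypot(rx - camera_xy[0], ry - camera_xy[1])
        if distance_to_camera <= max_range:
            errors.append(math.hypot(ex - rx, ey - ry))
    return sum(errors) / len(errors) if errors else float("nan")
```

Calling the function once with `max_range=50.0` and once with `max_range=120.0` would give two cumulative figures comparable in form to those quoted in the abstract.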
