Jason Rebello

Next-Best-View Selection for Robot Eye-in-Hand Calibration

Mar 12, 2023

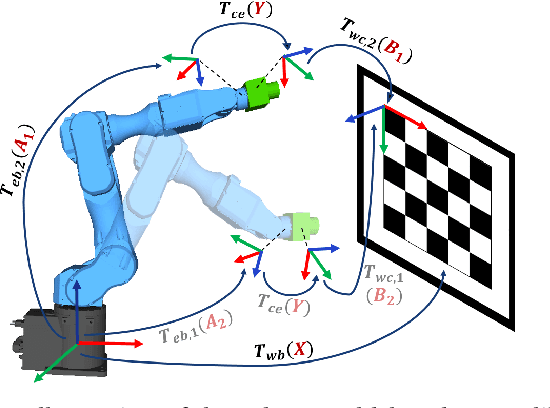

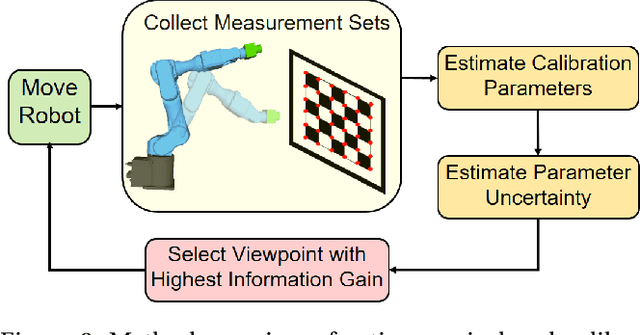

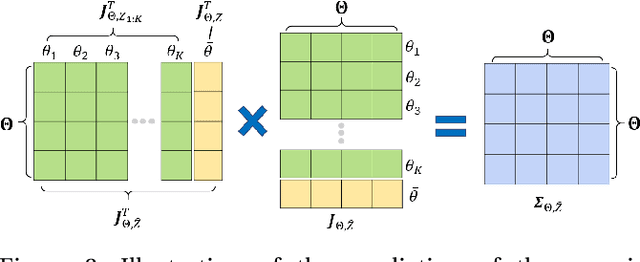

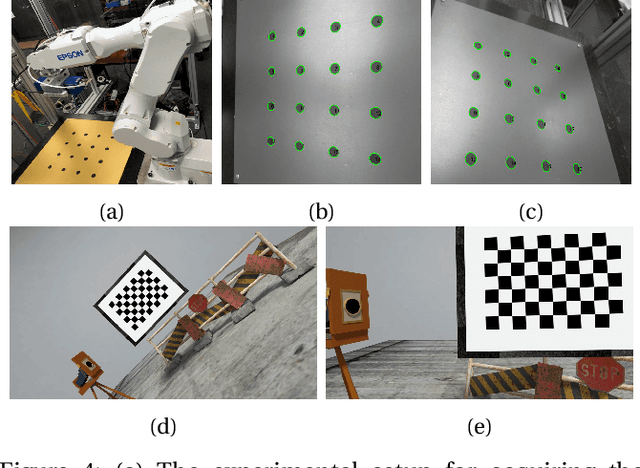

Abstract: Robotic eye-in-hand calibration is the task of determining the rigid 6-DoF pose of the camera with respect to the robot end-effector frame. In this paper, we formulate this task as a non-linear optimization problem and introduce an active vision approach to strategically select the robot pose for maximizing calibration accuracy. Specifically, given an initial collection of measurement sets, our system first computes the calibration parameters and estimates the parameter uncertainties. We then predict the next robot pose from which to collect the next measurement that brings about the maximum information gain (uncertainty reduction) in the calibration parameters. We test our approach on a simulated dataset and validate the results on a real 6-axis robot manipulator. The results demonstrate that our approach can achieve accurate calibrations using many fewer viewpoints than other commonly used baseline calibration methods.
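The selection step described in the abstract can be illustrated with a minimal sketch. It assumes a Gaussian approximation of the parameter uncertainty, so that information gain is the change in the log-determinant of the information matrix (a D-optimality criterion). The abstract does not state which metric the paper uses, so this criterion, the function names, and the inputs (`info`, `candidate_jacobians`, `noise_sigma`) are all illustrative assumptions rather than the authors' implementation.

```python
import numpy as np


def information_gain(info, jacobian, noise_sigma=1.0):
    """Entropy reduction from adding one measurement (Gaussian assumption).

    info:      (n, n) current information matrix of the calibration parameters.
    jacobian:  (m, n) predicted measurement Jacobian at a candidate robot pose.
    The gain is 0.5 * (log det(info_new) - log det(info)).
    """
    new_info = info + jacobian.T @ jacobian / noise_sigma**2
    _, logdet_old = np.linalg.slogdet(info)
    _, logdet_new = np.linalg.slogdet(new_info)
    return 0.5 * (logdet_new - logdet_old)


def select_next_best_view(info, candidate_jacobians, noise_sigma=1.0):
    """Return (index, gain) of the candidate pose with the largest gain."""
    gains = [information_gain(info, J, noise_sigma) for J in candidate_jacobians]
    best = int(np.argmax(gains))
    return best, gains[best]


if __name__ == "__main__":
    # Hypothetical example: 6 calibration parameters, two candidate poses.
    info = np.eye(6)
    uninformative = np.zeros((2, 6))      # measurement insensitive to all parameters
    informative = np.zeros((2, 6))
    informative[0, 0] = 1.0               # sensitive to parameter 0
    informative[1, 3] = 2.0               # sensitive to parameter 3
    best, gain = select_next_best_view(info, [uninformative, informative])
    print(best, gain)
```

In a full pipeline, the Jacobians would come from linearizing the camera measurement model at each reachable candidate pose, and the information matrix would be updated after each new measurement is collected.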

Canadian Adverse Driving Conditions Dataset

Feb 27, 2020

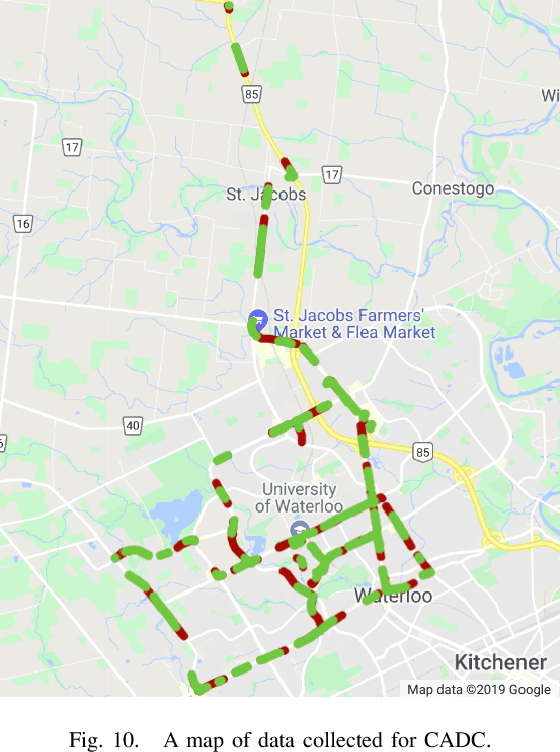

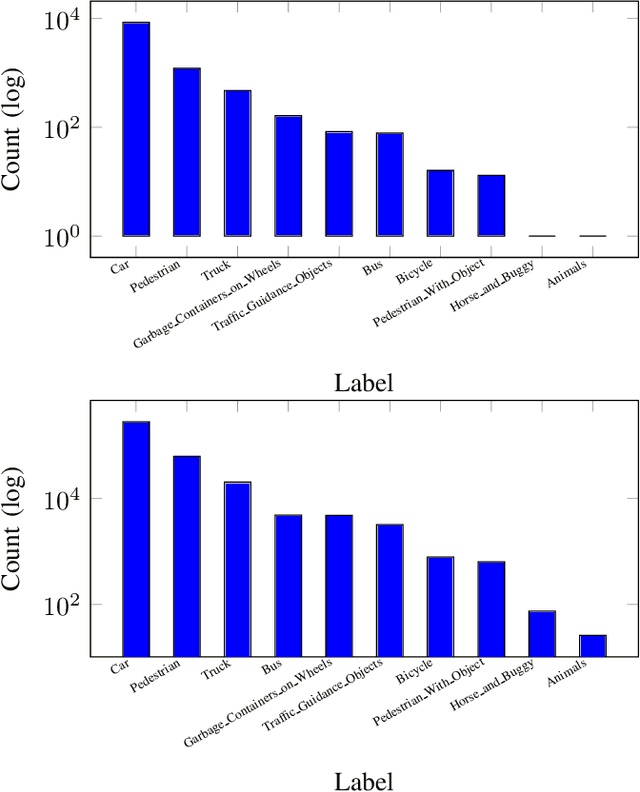

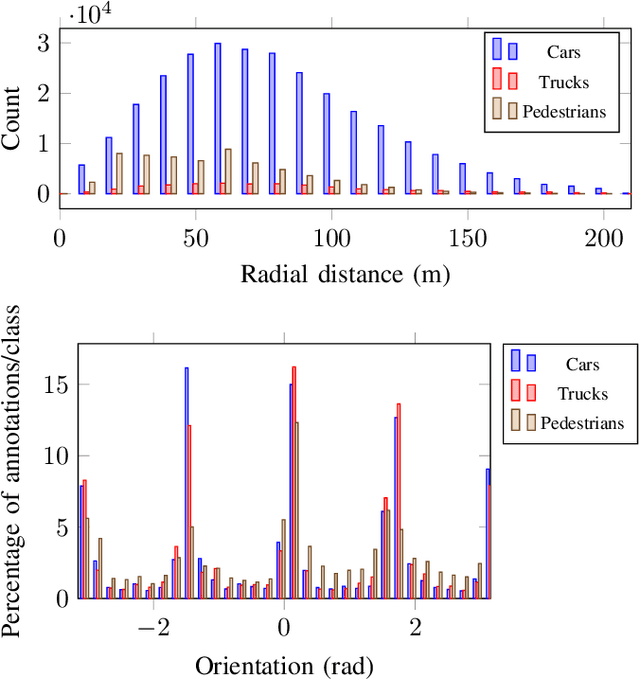

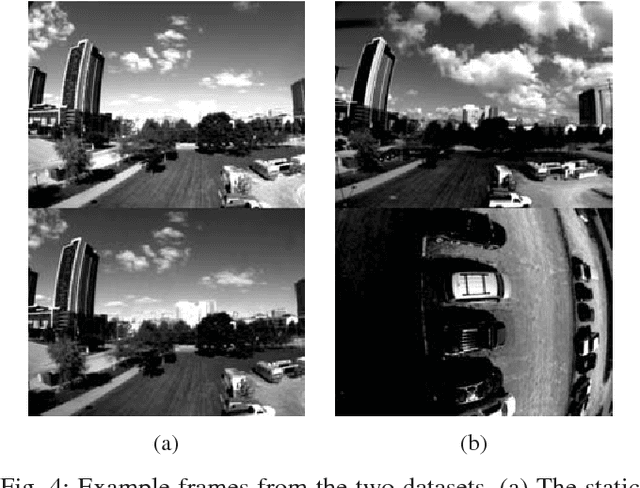

Abstract: The Canadian Adverse Driving Conditions (CADC) dataset was collected with the Autonomoose autonomous vehicle platform, based on a modified Lincoln MKZ. The dataset, collected during winter within the Region of Waterloo, Canada, is the first autonomous vehicle dataset that focuses specifically on adverse driving conditions. It contains 7,000 frames of annotated data, collected through a variety of winter weather conditions, from 8 cameras (Ximea MQ013CG-E2), Lidar (VLP-32C) and a GNSS+INS system (Novatel OEM638). The sensors are time synchronized and calibrated, with the intrinsic and extrinsic calibrations included in the dataset. Lidar frame annotations that represent ground truth for 3D object detection and tracking have been provided by Scale AI.

Encoderless Gimbal Calibration of Dynamic Multi-Camera Clusters

Jul 24, 2018

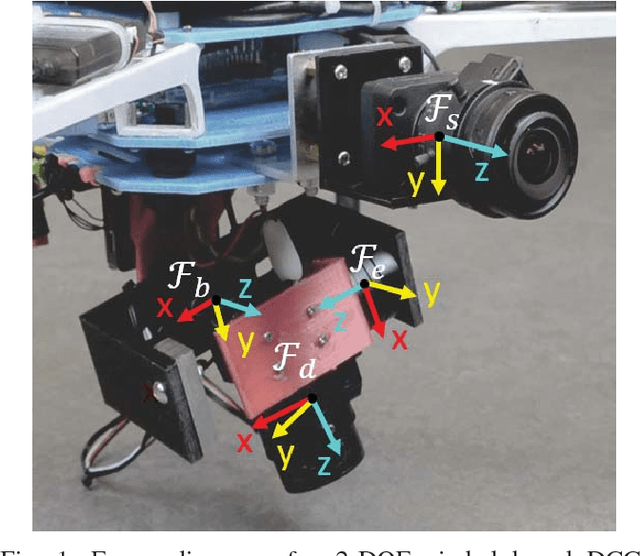

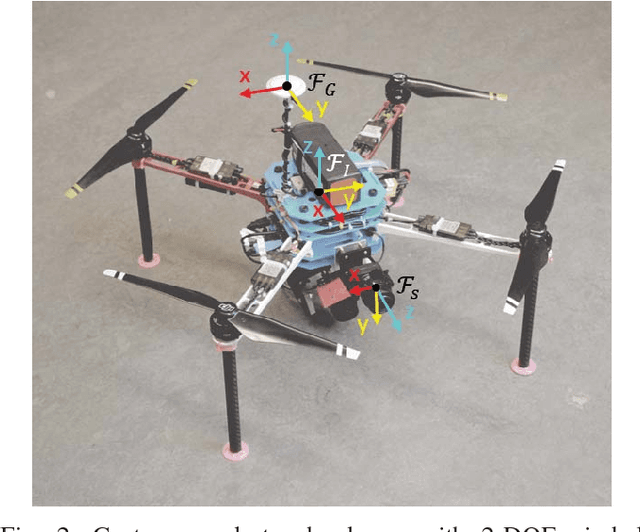

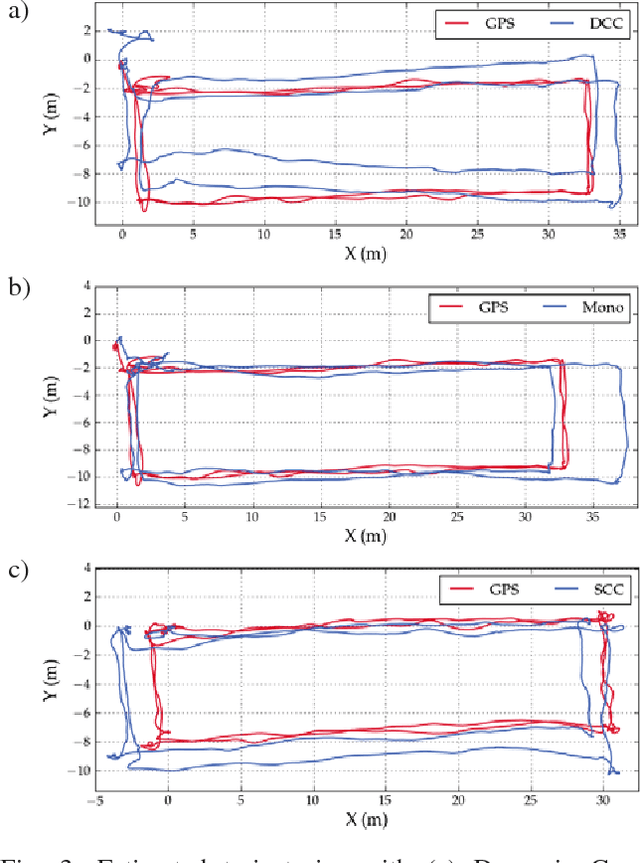

Abstract: Dynamic Camera Clusters (DCCs) are multi-camera systems where one or more cameras are mounted on actuated mechanisms such as a gimbal. Existing methods for DCC calibration rely on joint angle measurements to resolve the time-varying transformation between the dynamic and static camera. This information is usually provided by motor encoders; however, joint angle measurements are not always readily available on off-the-shelf mechanisms. In this paper, we present an encoderless approach for DCC calibration which simultaneously estimates the kinematic parameters of the transformation chain as well as the unknown joint angles. We also demonstrate the integration of an encoderless gimbal mechanism with a state-of-the-art VIO algorithm, and show the extensions required in order to perform simultaneous online estimation of the joint angles and vehicle localization state. The proposed calibration approach is validated both in simulation and on a physical DCC composed of a 2-DOF gimbal mounted on a UAV. Finally, we show the experimental results of the calibrated mechanism integrated into the OKVIS VIO package, and demonstrate successful online joint angle estimation while maintaining localization accuracy that is comparable to a standard static multi-camera configuration.
