Matthew Pitropov

LiDAR-MIMO: Efficient Uncertainty Estimation for LiDAR-based 3D Object Detection

Jun 01, 2022

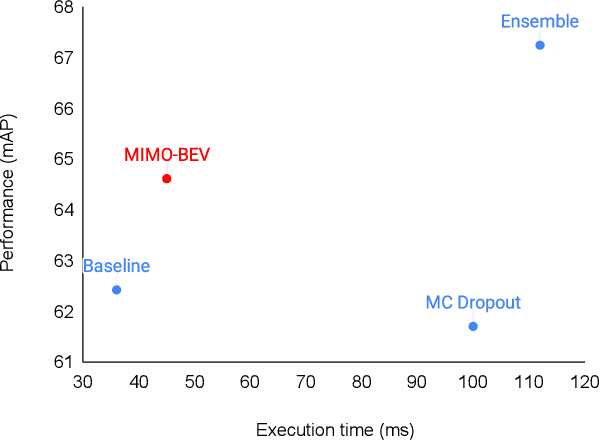

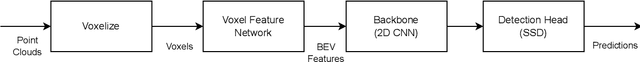

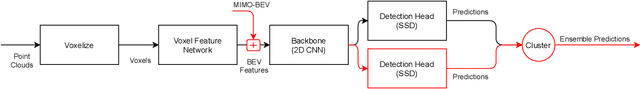

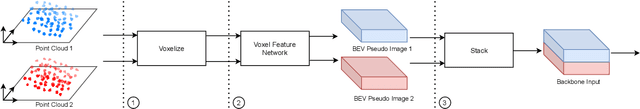

Abstract: The estimation of uncertainty in robotic vision, such as 3D object detection, is an essential component in developing safe autonomous systems aware of their own performance. However, the deployment of current uncertainty estimation methods in 3D object detection remains challenging due to timing and computational constraints. To tackle this issue, we propose LiDAR-MIMO, an adaptation of the multi-input multi-output (MIMO) uncertainty estimation method to the LiDAR-based 3D object detection task. Our method modifies the original MIMO by performing multi-input at the feature level to ensure the detection, uncertainty estimation, and runtime performance benefits are retained despite the limited capacity of the underlying detector and the large computational costs of point cloud processing. We compare LiDAR-MIMO with MC dropout and ensembles as baselines and show comparable uncertainty estimation results with only a small number of output heads. Further, LiDAR-MIMO can be configured to be twice as fast as MC dropout and ensembles, while achieving higher mAP than MC dropout and approaching that of ensembles.

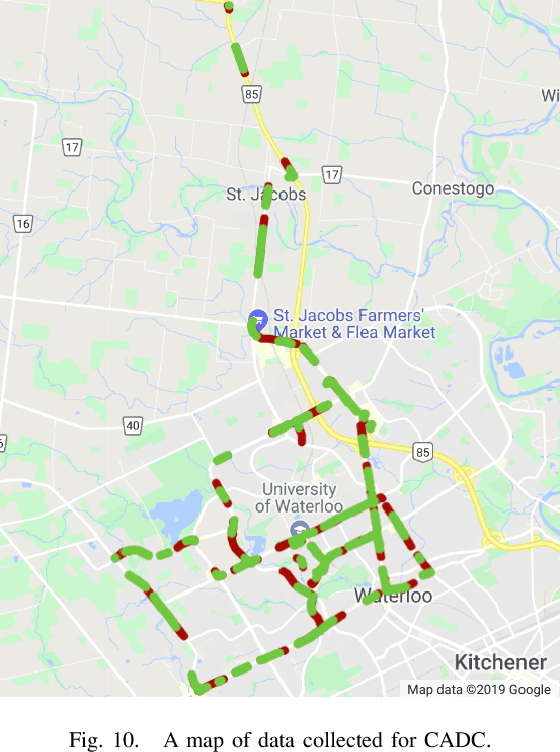

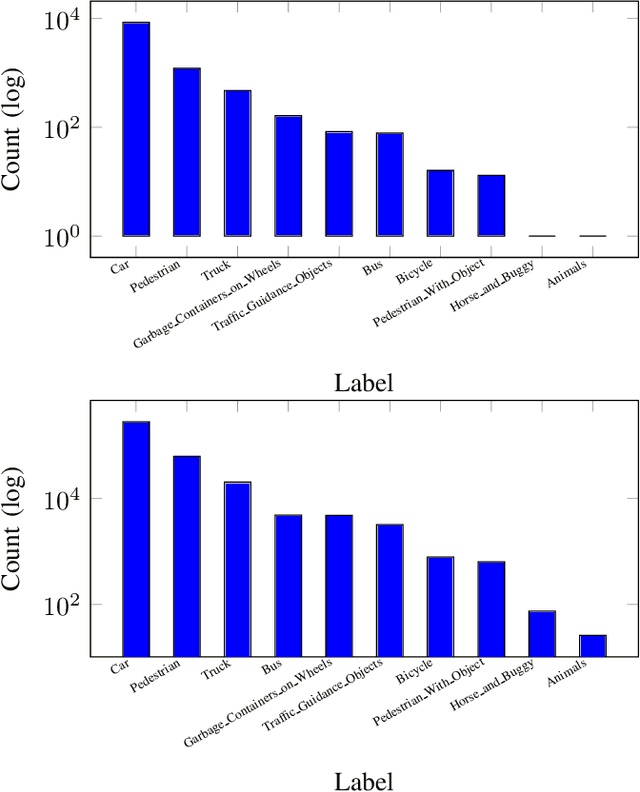

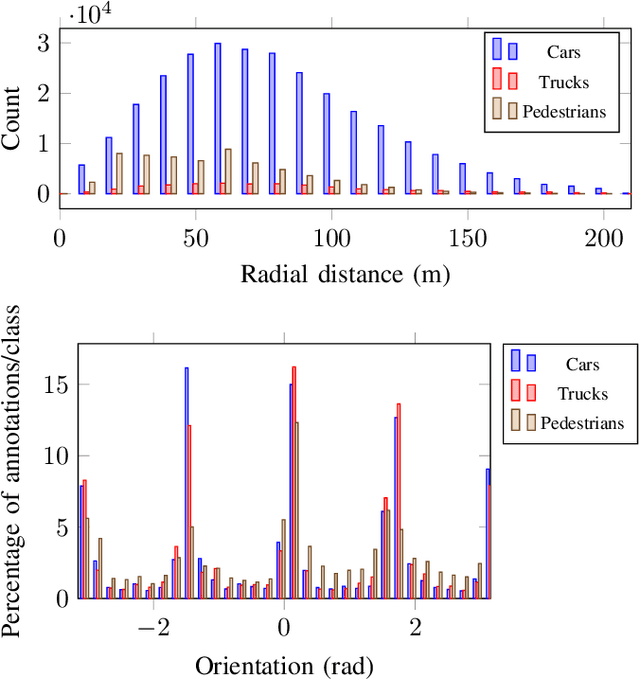

Canadian Adverse Driving Conditions Dataset

Feb 27, 2020

Abstract: The Canadian Adverse Driving Conditions (CADC) dataset was collected with the Autonomoose autonomous vehicle platform, based on a modified Lincoln MKZ. The dataset, collected during winter within the Region of Waterloo, Canada, is the first autonomous vehicle dataset that focuses specifically on adverse driving conditions. It contains 7,000 frames of annotated data, collected through a variety of winter weather conditions, from 8 cameras (Ximea MQ013CG-E2), a Lidar (VLP-32C), and a GNSS+INS system (Novatel OEM638). The sensors are time synchronized and calibrated, with the intrinsic and extrinsic calibrations included in the dataset. Lidar frame annotations that represent ground truth for 3D object detection and tracking have been provided by Scale AI.
