Jason Friedman

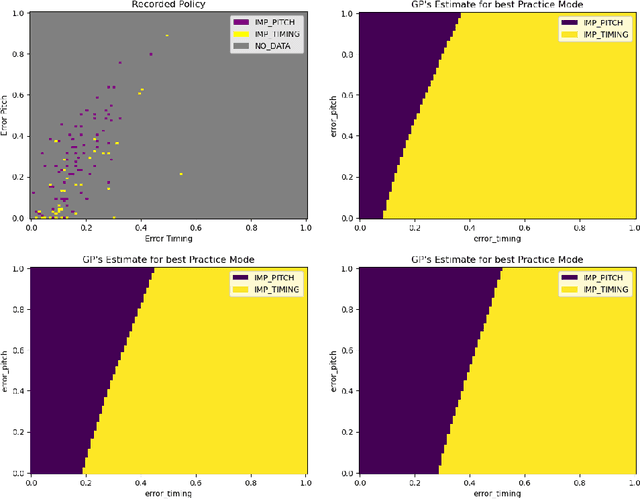

Generating Piano Practice Policy with a Gaussian Process

Jun 07, 2024

Abstract: A typical process of learning to play a piece on a piano consists of a progression through a series of practice units that focus on individual dimensions of the skill, the so-called practice modes. Practice modes in learning to play music comprise a particularly large set of possibilities, such as hand coordination, posture, articulation, ability to read a music score, correct timing or pitch, etc. Self-guided practice is known to be suboptimal, and a model that schedules optimal practice to maximize a learner's progress still does not exist. Because we each learn differently and there are many choices for possible piano practice tasks and methods, the set of practice modes should be dynamically adapted to the human learner, a process typically guided by a teacher. However, having a human teacher guide individual practice is not always feasible since it is time-consuming, expensive, and often unavailable. In this work, we present a modeling framework to guide the human learner through the learning process by choosing the practice modes generated by a policy model. To this end, we present a computational architecture building on a Gaussian process that incorporates 1) the learner state, 2) a policy that selects a suitable practice mode, 3) performance evaluation, and 4) expert knowledge. The proposed policy model is trained to approximate the expert-learner interaction during a practice session. In our future work, we will test different Bayesian optimization techniques, e.g., different acquisition functions, and evaluate their effect on the learning progress.

Left/Right Brain, human motor control and the implications for robotics

Jan 25, 2024

Abstract: Neural network movement controllers promise a variety of advantages over conventional control methods; however, they are not widely adopted due to their inability to produce reliably precise movements. This research explores a bilateral neural network architecture as a control system for motor tasks. We aimed to achieve hemispheric specialisation similar to what is observed in humans across different tasks; the dominant system (usually the right hand, left hemisphere) excels at tasks involving coordination and efficiency of movement, and the non-dominant system performs better at tasks requiring positional stability. Specialisation was achieved by training the hemispheres with different loss functions tailored toward the expected behaviour of the respective hemispheres. We compared bilateral models with and without specialised hemispheres, with and without inter-hemispheric connectivity (representing the biological Corpus Callosum), and unilateral models with and without specialisation. The models were trained and tested on two tasks common in the human motor control literature: the random reach task, suited to the dominant system, a model with better coordination, and the hold position task, suited to the non-dominant system, a model with more stable movement. Each system outperformed the non-favoured system in its preferred task. For both tasks, a bilateral model outperforms the 'non-preferred' hand, and is as good as or better than the 'preferred' hand. The Corpus Callosum tends to improve performance, but not always for the specialised models.

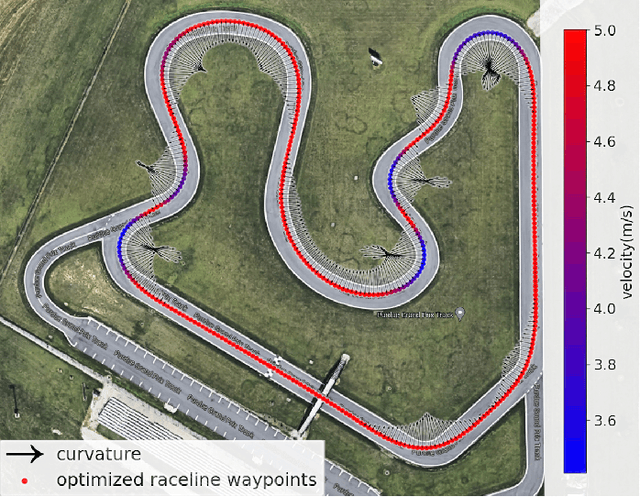

AV4EV: Open-Source Modular Autonomous Electric Vehicle Platform to Make Mobility Research Accessible

Dec 01, 2023

Abstract: When academic researchers develop and validate autonomous driving algorithms, there is a challenge in balancing high-performance capabilities with the cost and complexity of the vehicle platform. Much of today's research on autonomous vehicles (AV) is limited to experimentation on expensive commercial vehicles that require large teams with diverse skills to retrofit the vehicles and test them in dedicated testing facilities. Testing the limits of safety and performance on such vehicles is costly and hazardous. It is also outside the reach of most academic departments and research groups. On the other hand, scaled-down 1/10th-1/16th scale vehicle platforms are more affordable but have limited similitude in dynamics, control, and drivability. To address this issue, we present the design of a one-third-scale autonomous electric go-kart platform with open-source mechatronics design along with fully-functional autonomous driving software. The platform's multi-modal driving system is capable of manual, autonomous, and teleoperation driving modes. It also features a flexible sensing suite for development and deployment of algorithms across perception, localization, planning, and control. This development serves as a bridge between full-scale vehicles and reduced-scale cars while accelerating cost-effective algorithmic advancements in autonomous systems research. Our experimental results demonstrate the AV4EV platform's capabilities and ease-of-use for developing new AV algorithms. All materials are available at AV4EV.org to stimulate collaborative efforts within the AV and electric vehicle (EV) communities.

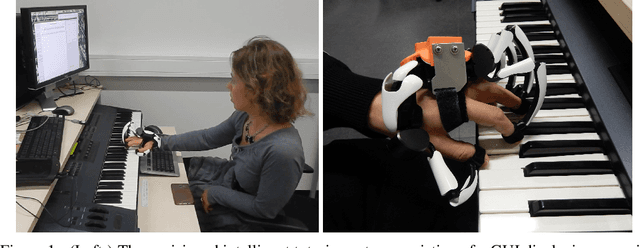

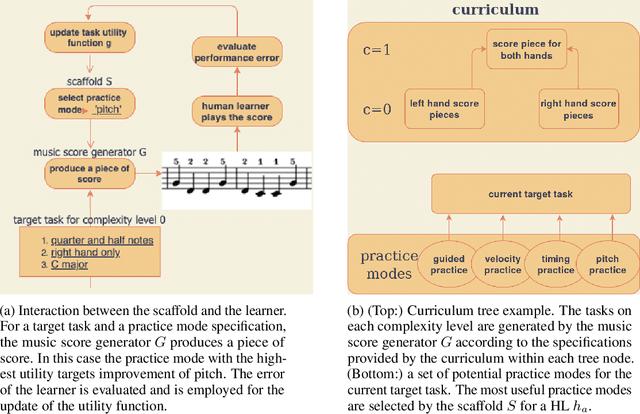

Optimizing piano practice with a utility-based scaffold

Jun 21, 2021

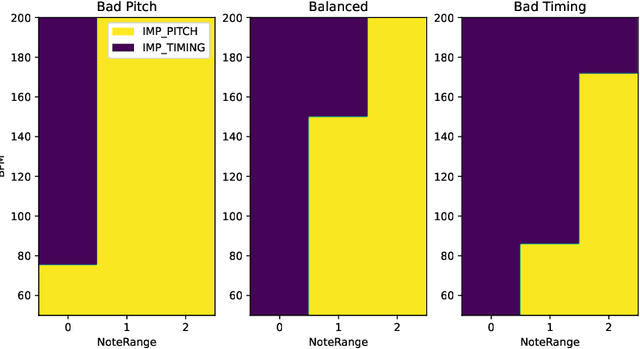

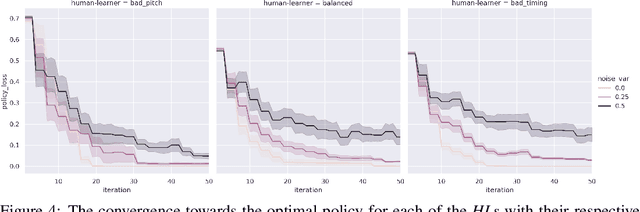

Abstract: A typical part of learning to play the piano is the progression through a series of practice units that focus on individual dimensions of the skill, such as hand coordination, correct posture, or correct timing. Ideally, the practice method to focus on should be chosen so as to maximize the learner's progress in learning to play the piano. Because we each learn differently, and because there are many choices for possible piano practice tasks and methods, the set of practice tasks should be dynamically adapted to the human learner. However, having a human teacher guide individual practice is not always feasible since it is time-consuming, expensive, and not always available. Instead, we suggest optimizing in the space of practice methods, the so-called practice modes. The proposed optimization process takes into account the skills of the individual learner and their history of learning. In this work, we present a modeling framework to guide the human learner through the learning process by choosing practice modes that have the highest expected utility (i.e., improvement in piano playing skill). To this end, we propose a human learner utility model based on a Gaussian process, and exemplify the model training and its application for practice scaffolding on an example of simulated human learners.
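As a rough illustration of choosing the practice mode with the highest expected utility, the following minimal sketch fits a Gaussian process regressor to a synthetic practice history and picks the mode whose posterior mean utility is largest. Everything specific here is an assumption made for illustration and is not taken from the paper: the two learner-state features (pitch error and timing error), the two practice modes, the one-hot mode encoding, the RBF kernel and its hyperparameters, and the synthetic utility values produced by the hypothetical helper `make_synthetic_history`.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between the rows of a and the rows of b."""
    sq_dist = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return variance * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gp_posterior_mean(x_train, y_train, x_query, noise=1e-2):
    """Posterior mean of a zero-mean GP with Gaussian observation noise."""
    k_train = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_query = rbf_kernel(x_query, x_train)
    return k_query @ np.linalg.solve(k_train, y_train)


def choose_practice_mode(x_train, y_train, learner_state, n_modes):
    """Return the mode with the highest predicted utility, plus all predictions."""
    one_hot = np.eye(n_modes)
    # Each candidate input is the learner state followed by a one-hot mode code.
    candidates = np.array(
        [np.concatenate([learner_state, one_hot[m]]) for m in range(n_modes)]
    )
    expected_utility = gp_posterior_mean(x_train, y_train, candidates)
    return int(np.argmax(expected_utility)), expected_utility


def make_synthetic_history():
    """Hypothetical data: practicing a dimension helps in proportion to its error."""
    inputs, utilities = [], []
    for pitch_err in np.linspace(0.0, 1.0, 5):
        for timing_err in np.linspace(0.0, 1.0, 5):
            # Mode 0 = pitch-focused practice, mode 1 = timing-focused practice.
            inputs.append([pitch_err, timing_err, 1.0, 0.0])
            utilities.append(pitch_err)
            inputs.append([pitch_err, timing_err, 0.0, 1.0])
            utilities.append(timing_err)
    return np.array(inputs), np.array(utilities)


if __name__ == "__main__":
    x_hist, y_hist = make_synthetic_history()
    state = np.array([0.9, 0.1])  # high pitch error, low timing error
    mode, predicted = choose_practice_mode(x_hist, y_hist, state, n_modes=2)
    print("chosen mode:", mode)
    print("predicted utilities:", predicted)
```

This sketch selects greedily on the posterior mean only. A fuller treatment would also use the posterior variance, for example through an acquisition function, to trade off exploring unfamiliar practice modes against exploiting known good ones.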
