Jaeheung Park

TOLEBI: Learning Fault-Tolerant Bipedal Locomotion via Online Status Estimation and Fallibility Rewards

Feb 05, 2026

Abstract:With the growing employment of learning algorithms in robotic applications, research on reinforcement learning for bipedal locomotion has become a central topic for humanoid robotics. While recently published contributions achieve high success rates in locomotion tasks, scarce attention has been devoted to the development of methods that can handle hardware faults that may occur during the locomotion process. However, in real-world settings, environmental disturbances or sudden occurrences of hardware faults might yield severe consequences. To address these issues, this paper presents TOLEBI (A faulT-tOlerant Learning framEwork for Bipedal locomotIon) that handles faults on the robot during operation. Specifically, joint locking, power loss and external disturbances are injected in simulation to learn fault-tolerant locomotion strategies. In addition to transferring the learned policy to the real robot via sim-to-real transfer, an online joint status module is incorporated. This module classifies joint conditions by referring to the actual observations at runtime under real-world conditions. The validation experiments conducted both in the real world and in simulation with the humanoid robot TOCABI highlight the applicability of the proposed approach. To our knowledge, this manuscript provides the first learning-based fault-tolerant framework for bipedal locomotion, thereby fostering the development of efficient learning methods in this field.

Phase-based Nonlinear Model Predictive Control for Humanoid Walking Stabilization with Single and Double Support Time Adjustments

Jun 04, 2025

Abstract:Balance control for humanoid robots has been extensively studied to enable robots to navigate in real-world environments. However, balance controllers that explicitly optimize the durations of both the single support phase, also known as step timing, and the Double Support Phase (DSP) have not been widely explored due to the inherent nonlinearity of the associated optimization problem. Consequently, many recent approaches either ignore the DSP or adjust its duration based on heuristics or on linearization techniques that rely on sequential coordination of balance strategies. This study proposes a novel phase-based nonlinear Model Predictive Control (MPC) framework that simultaneously optimizes Zero Moment Point (ZMP) modulation, step location, step timing, and DSP duration to maintain balance under external disturbances. In simulation, the proposed controller was compared with two state-of-the-art frameworks that rely on heuristics or sequential coordination of balance strategies under two scenarios: forward walking on terrain emulating compliant ground and external push recovery while walking in place. Overall, the findings suggest that the proposed method offers more flexible coordination of balance strategies than the sequential approach, and consistently outperforms the heuristic approach. The robustness and effectiveness of the proposed controller were also validated through experiments with a real humanoid robot.

Real-time Whole-body Model Predictive Control for Bipedal Locomotion with a Novel Kino-dynamic Model and Warm-start Method

May 26, 2025

Abstract:Advancements in optimization solvers and computing power have led to growing interest in applying whole-body model predictive control (WB-MPC) to bipedal robots. However, the high degrees of freedom and inherent model complexity of bipedal robots pose significant challenges in achieving fast and stable control cycles for real-time performance. This paper introduces a novel kino-dynamic model and warm-start strategy for real-time WB-MPC in bipedal robots. Our proposed kino-dynamic model combines the linear inverted pendulum plus flywheel model with a full-body kinematics model. Unlike conventional whole-body models that rely on the concept of contact wrenches, our model utilizes the zero-moment point (ZMP), reducing baseline computational costs and ensuring consistently low latency during contact state transitions. Additionally, a modularized multi-layer perceptron (MLP) based warm-start strategy is proposed, leveraging a lightweight neural network to provide a good initial guess for each control cycle. Furthermore, we present a ZMP-based whole-body controller (WBC) that extends the existing WBC for explicitly controlling impulses and ZMP, integrating it into the real-time WB-MPC framework. Through various comparative experiments, the proposed kino-dynamic model and warm-start strategy have been shown to outperform previous studies. Simulations and real robot experiments further validate that the proposed framework demonstrates robustness to perturbation and satisfies real-time control requirements during walking.

Shoulder Range of Motion Rehabilitation Robot Incorporating Scapulohumeral Rhythm for Frozen Shoulder

Apr 14, 2025

Abstract:This paper presents a novel rehabilitation robot designed to address the challenges of passive range of motion (PROM) exercises for frozen shoulder patients by integrating advanced scapulohumeral rhythm stabilization. Frozen shoulder is characterized by limited glenohumeral motion and disrupted scapulohumeral rhythm, with therapist-assisted interventions being highly effective for restoring normal shoulder function. While existing robotic solutions replicate natural shoulder biomechanics, they lack the ability to stabilize compensatory movements, such as shoulder shrugging, which are critical for effective rehabilitation. Our proposed device features a 6 degrees of freedom (DoF) mechanism, including 5 DoF for shoulder motion and an innovative 1 DoF Joint press for scapular stabilization. The robot employs a personalized two-phase operation: recording normal shoulder movement patterns from the unaffected side and applying them to guide the affected side. Experimental results demonstrated the robot's ability to replicate recorded motion patterns with high precision, with root mean square error (RMSE) values consistently below 1 degree. In simulated frozen shoulder conditions, the robot effectively suppressed scapular elevation, delaying the onset of compensatory movements and guiding the affected shoulder to move more closely in alignment with normal shoulder motion, particularly during arm elevation movements such as abduction and flexion. These findings confirm the robot's potential as a rehabilitation tool capable of automating PROM exercises while correcting compensatory movements. The system provides a foundation for advanced, personalized rehabilitation for patients with frozen shoulders.

Spectral Normalization for Lipschitz-Constrained Policies on Learning Humanoid Locomotion

Apr 11, 2025

Abstract:Reinforcement learning (RL) has shown great potential in training agile and adaptable controllers for legged robots, enabling them to learn complex locomotion behaviors directly from experience. However, policies trained in simulation often fail to transfer to real-world robots due to unrealistic assumptions such as infinite actuator bandwidth and the absence of torque limits. These conditions allow policies to rely on abrupt, high-frequency torque changes, which are infeasible for real actuators with finite bandwidth. Traditional methods address this issue by penalizing aggressive motions through regularization rewards, such as joint velocities, accelerations, and energy consumption, but they require extensive hyperparameter tuning. Alternatively, Lipschitz-Constrained Policies (LCP) enforce finite bandwidth action control by penalizing policy gradients, but their reliance on gradient calculations introduces significant GPU memory overhead. To overcome this limitation, this work proposes Spectral Normalization (SN) as an efficient replacement for enforcing Lipschitz continuity. By constraining the spectral norm of network weights, SN effectively limits high-frequency policy fluctuations while significantly reducing GPU memory usage. Experimental evaluations both in simulation and on a real-world humanoid robot show that SN achieves performance comparable to gradient penalty methods while enabling more efficient parallel training.
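To make the idea of constraining the spectral norm of a weight matrix concrete, the following is a minimal sketch of the textbook form of spectral normalization: estimate the largest singular value by power iteration, then divide the weights by it. It is not taken from the paper; the function names, the iteration count, and the plain-list matrix representation are assumptions chosen for illustration.

```python
import math
import random


def _matvec(W, v):
    """Compute W @ v for a matrix stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def _matvec_t(W, u):
    """Compute W.T @ u for a matrix stored as a list of rows."""
    return [sum(W[i][j] * u[i] for i in range(len(W))) for j in range(len(W[0]))]


def _normalize(v, eps=1e-12):
    """Scale v to unit L2 norm (eps guards against division by zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + eps) for x in v]


def spectral_norm(W, n_iters=50, seed=0):
    """Estimate the largest singular value of W by power iteration."""
    rng = random.Random(seed)
    v = _normalize([rng.gauss(0.0, 1.0) for _ in W[0]])
    for _ in range(n_iters):
        u = _normalize(_matvec(W, v))    # left singular vector estimate
        v = _normalize(_matvec_t(W, u))  # right singular vector estimate
    Wv = _matvec(W, v)
    return math.sqrt(sum(x * x for x in Wv))


def spectral_normalize(W, n_iters=50, eps=1e-12):
    """Return W divided by its estimated spectral norm.

    The rescaled layer x -> W x is then (approximately) 1-Lipschitz
    with respect to the L2 norm.
    """
    sigma = spectral_norm(W, n_iters=n_iters)
    return [[w / (sigma + eps) for w in row] for row in W]


if __name__ == "__main__":
    W = [[3.0, 0.0], [0.0, 1.0]]
    print(spectral_norm(W))
    print(spectral_normalize(W))
```

A network built from such layers and 1-Lipschitz activations has a bounded overall Lipschitz constant, which is the property the abstract associates with limiting high-frequency policy fluctuations. Because no gradient of the policy with respect to its input is required, this kind of constraint avoids the extra backward pass that a gradient penalty needs.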

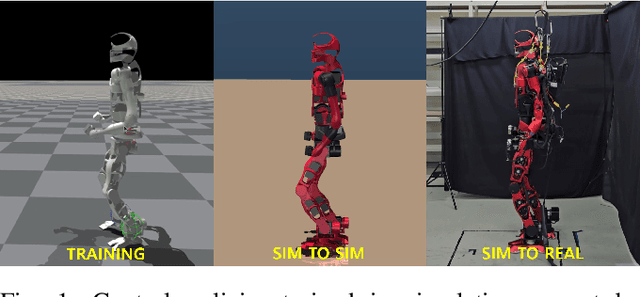

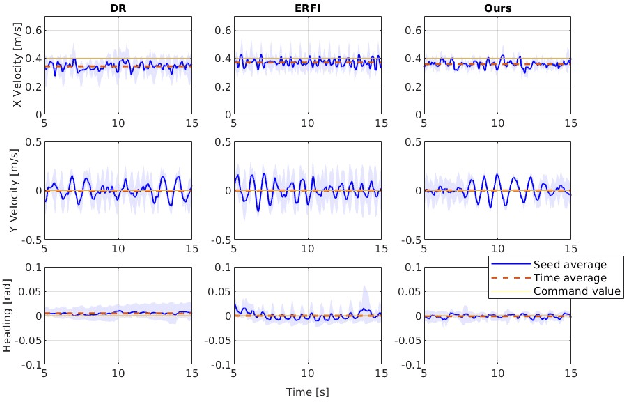

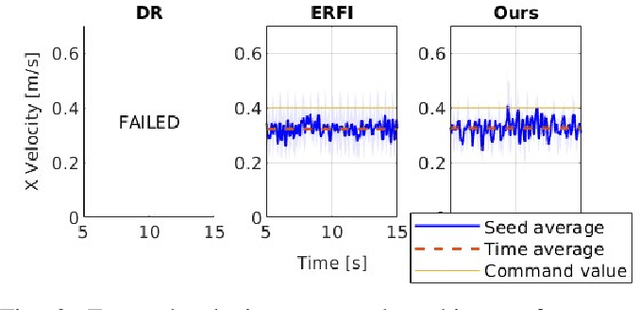

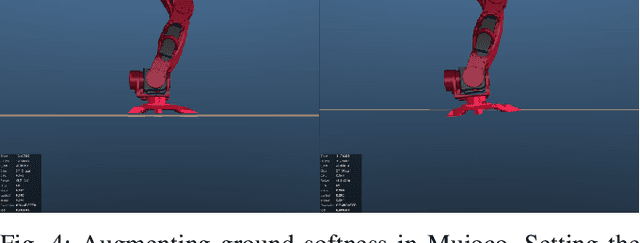

Sim-to-Real of Humanoid Locomotion Policies via Joint Torque Space Perturbation Injection

Apr 09, 2025

Abstract:This paper proposes a novel alternative to existing sim-to-real methods for training control policies with simulated experiences. Prior sim-to-real methods for legged robots mostly rely on the domain randomization approach, where a fixed finite set of simulation parameters is randomized during training. Instead, our method adds state-dependent perturbations to the input joint torque used for forward simulation during the training phase. These state-dependent perturbations are designed to simulate a broader range of reality gaps than those captured by randomizing a fixed set of simulation parameters. Experimental results show that our method enables humanoid locomotion policies that achieve greater robustness against complex reality gaps unseen in the training domain.

Efficient Computation of Whole-Body Control Utilizing Simplified Whole-Body Dynamics via Centroidal Dynamics

Sep 17, 2024

Abstract:In this study, we present a novel method for enhancing the computational efficiency of whole-body control for humanoid robots, a challenge accentuated by their high degrees of freedom. The reduced-dimension rigid body dynamics of a floating base robot is constructed by segmenting its kinematic chain into constrained and unconstrained chains, simplifying the dynamics of the unconstrained chain through the centroidal dynamics. The proposed dynamics model can be applied to whole-body control methods, allowing the problem to be divided into two parts for more efficient computation. The efficiency of the framework is demonstrated by comparative experiments in simulations. The calculation results demonstrate a significant reduction in processing time, highlighting an improvement over the times reported in current methodologies. Additionally, the results show that the computational efficiency increases as the degrees of freedom of the robot model increase.

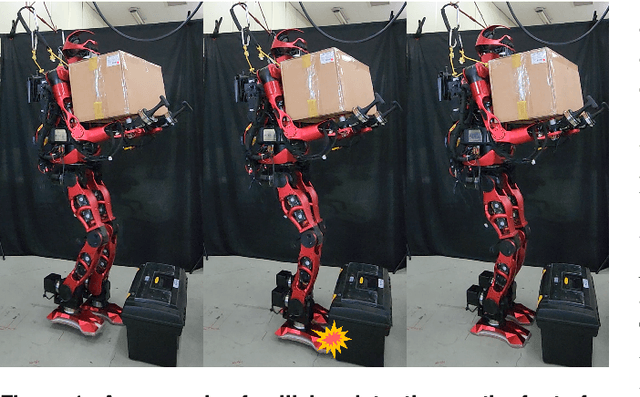

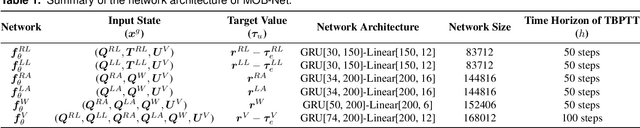

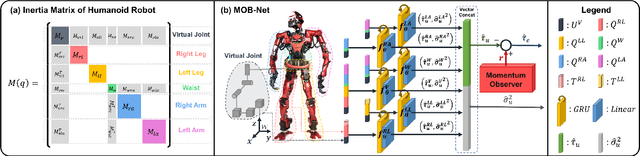

MOB-Net: Limb-modularized Uncertainty Torque Learning of Humanoids for Sensorless External Torque Estimation

Feb 17, 2024

Abstract:The momentum observer (MOB) can estimate external joint torque without requiring additional sensors, such as force/torque or joint torque sensors. However, the estimation performance of MOB deteriorates due to model uncertainty, which encompasses modeling errors and joint friction. Moreover, the estimation error is significant when MOB is applied to high-dimensional floating-base humanoids, which prevents the estimated external joint torque from being used for force control or collision detection in the real humanoid robot. In this paper, a pure external joint torque estimation method named MOB-Net is proposed for humanoids. MOB-Net learns the model uncertainty torque and calibrates the estimated signal of MOB. The external joint torque can be estimated in the generalized coordinate including whole-body and virtual joints of the floating-base robot with only internal sensors (an IMU on the pelvis and encoders in the joints). Our method substantially reduces the estimation errors of MOB, and the robust performance of MOB-Net for the unseen data is validated through extensive simulations, real robot experiments, and ablation studies. Finally, various collision handling scenarios are presented using the estimated external joint torque from MOB-Net: contact wrench feedback control for locomotion, collision detection, and collision reaction for safety.
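As a rough illustration of how a momentum observer recovers external torque from commanded torque and measured velocity alone, here is a minimal single-joint sketch. It assumes a constant inertia with no gravity, Coriolis, or friction terms, so it shows only the basic residual update and not the floating-base formulation or the learned uncertainty correction described in the abstract. The function name and all numeric values are hypothetical.

```python
def momentum_observer_1dof(mass, tau_cmd, tau_ext, gain, dt, steps):
    """Simulate a 1-DoF joint and return the final MOB residual.

    Plant:    mass * dv/dt = tau_cmd + tau_ext
    Observer: r = gain * (p - p0 - integral of (tau_cmd + r) dt), p = mass * v
    For this idealized plant the residual obeys dr/dt = gain * (tau_ext - r),
    so r converges to the external torque without a torque sensor.
    """
    v = 0.0               # joint velocity
    p0 = mass * v         # initial generalized momentum
    integral = 0.0        # running integral of (tau_cmd + r)
    r = 0.0               # residual, i.e. the external torque estimate
    for _ in range(steps):
        # Plant step (explicit Euler); tau_ext is unknown to the observer.
        v += (tau_cmd + tau_ext) / mass * dt
        # Observer step uses only the command and the measured velocity.
        integral += (tau_cmd + r) * dt
        r = gain * (mass * v - p0 - integral)
    return r


if __name__ == "__main__":
    estimate = momentum_observer_1dof(
        mass=2.0, tau_cmd=0.0, tau_ext=1.5, gain=50.0, dt=0.001, steps=1000
    )
    print(estimate)
```

In this idealized setting the residual tracks the true external torque. With an imperfect model, any unmodeled friction or inertia error appears in the residual as well, which is the kind of model uncertainty the abstract says degrades MOB estimates.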

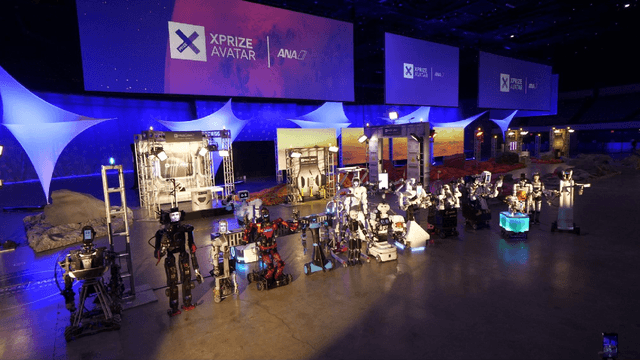

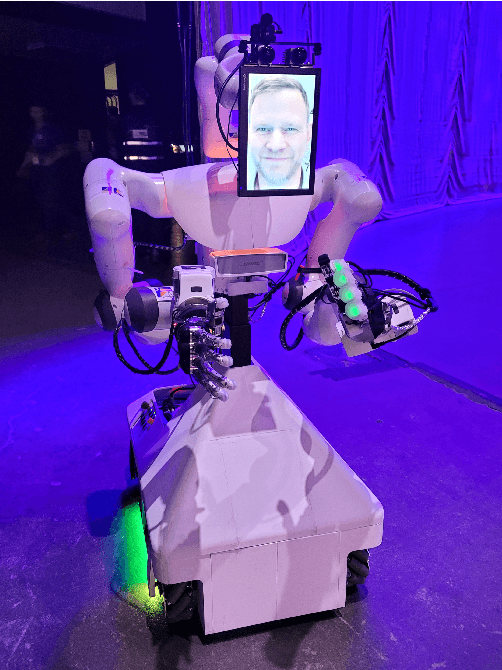

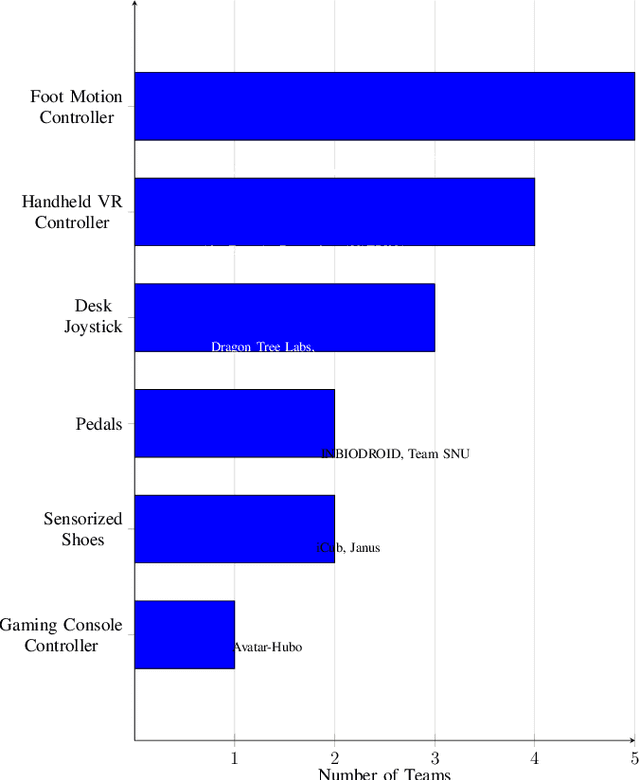

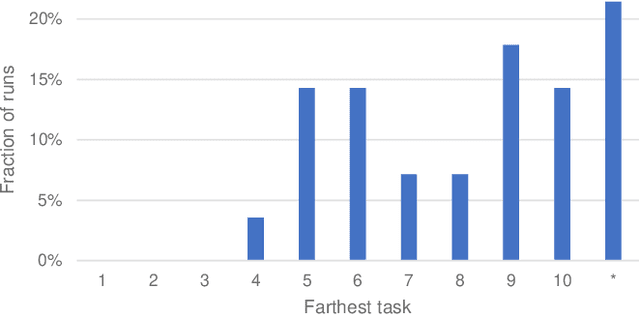

Analysis and Perspectives on the ANA Avatar XPRIZE Competition

Jan 10, 2024

Abstract:The ANA Avatar XPRIZE was a four-year competition to develop a robotic "avatar" system to allow a human operator to sense, communicate, and act in a remote environment as though physically present. The competition featured a unique requirement that judges would operate the avatars after less than one hour of training on the human-machine interfaces, and avatar systems were judged on both objective and subjective scoring metrics. This paper presents a unified summary and analysis of the competition from technical, judging, and organizational perspectives. We study the use of telerobotics technologies and innovations pursued by the competing teams in their avatar systems, and correlate the use of these technologies with judges' task performance and subjective survey ratings. It also summarizes perspectives from team leads, judges, and organizers about the competition's execution and impact to inform the future development of telerobotics and telepresence.

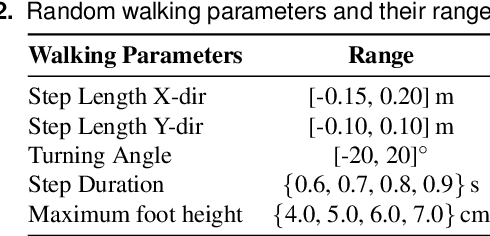

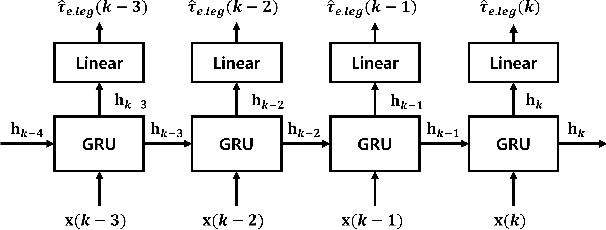

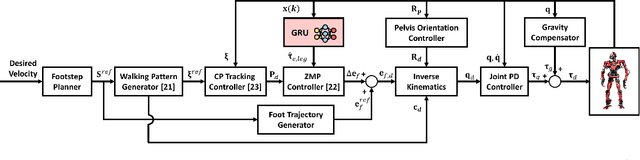

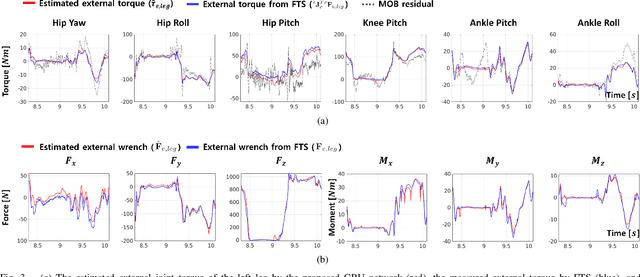

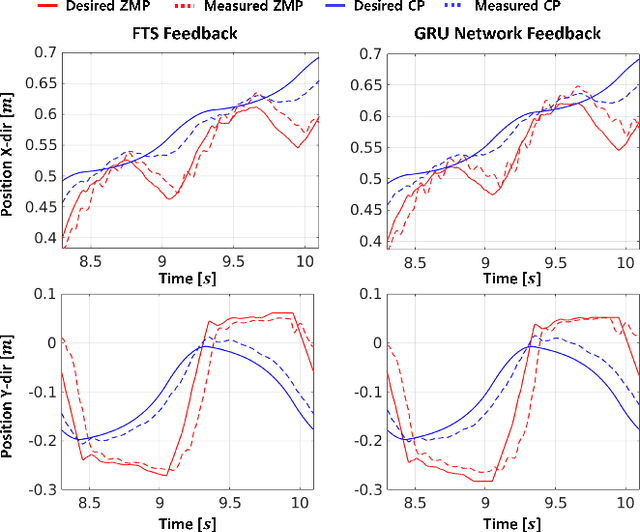

Proprioceptive External Torque Learning for Floating Base Robot and its Applications to Humanoid Locomotion

Sep 08, 2023

Abstract:The estimation of external joint torque and contact wrench is essential for achieving stable locomotion of humanoids and safety-oriented robots. Although the contact wrench on the foot of humanoids can be measured using a force-torque sensor (FTS), FTS increases the cost, inertia, complexity, and failure possibility of the system. This paper introduces a method for learning external joint torque solely using proprioceptive sensors (encoders and IMUs) for a floating base robot. For learning, the GRU network is used and random walking data is collected. Real robot experiments demonstrate that the network can estimate the external torque and contact wrench with significantly smaller errors compared to the model-based method, momentum observer (MOB) with friction modeling. The study also validates that the estimated contact wrench can be utilized for zero moment point (ZMP) feedback control, enabling stable walking. Moreover, even when the robot's feet and the inertia of the upper body are changed, the trained network shows consistent performance with a model-based calibration. This result demonstrates the possibility of removing FTS on the robot, which reduces the disadvantages of hardware sensors. The summary video is available at https://youtu.be/gT1D4tOiKpo.
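For readers unfamiliar with how a contact wrench relates to the zero moment point used in ZMP feedback control, the sketch below applies the standard rigid-body relation for a single foot on flat ground. It is an illustration under stated assumptions, not the paper's implementation: the function name, the `sensor_height` parameter (height of the wrench reference point above the ground), and the `min_fz` threshold are all hypothetical.

```python
def zmp_from_wrench(force, moment, sensor_height=0.0, min_fz=1e-6):
    """Compute the ZMP (x, y) on flat ground from a contact wrench.

    force, moment: (x, y, z) components of the contact force and moment,
    expressed at a reference point located sensor_height above the ground,
    in a frame whose z axis points up. Returns None when the vertical force
    is too small for the ZMP to be defined (foot not loaded).
    """
    fx, fy, fz = force
    mx, my, _ = moment
    if fz < min_fz:
        return None
    px = (-my - fx * sensor_height) / fz
    py = (mx - fy * sensor_height) / fz
    return px, py


if __name__ == "__main__":
    # A 100 N vertical force acting 5 cm forward and 2 cm to the left of the
    # reference point produces the moment (2, -5, 0) N*m about that point.
    print(zmp_from_wrench((0.0, 0.0, 100.0), (2.0, -5.0, 0.0)))
```

The same function works whether the wrench comes from a force-torque sensor or from an estimator, which is why an accurate wrench estimate can stand in for the sensor in a ZMP feedback loop.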
