Daegyu Lim

Proleptic Temporal Ensemble for Improving the Speed of Robot Tasks Generated by Imitation Learning

Oct 22, 2024

Abstract: Imitation learning, which enables robots to learn behaviors from demonstrations by non-experts, has emerged as a promising solution for generating robot motions in such environments. Imitation-learning-based robot motion generation, however, is limited by the demonstrator's task execution speed. This paper presents a novel temporal ensemble approach applied to imitation learning algorithms, allowing for execution of future actions. The proposed method leverages existing demonstration data and pretrained policies, offering the advantages of requiring no additional computation and being easy to implement. The algorithm's performance was validated through real-world experiments involving robotic block color sorting, demonstrating up to a 3x increase in task execution speed while maintaining a high success rate compared to the action chunking with transformer method. This study highlights the potential for significantly improving the performance of imitation-learning-based policies, which were previously limited by the demonstrator's speed. It is expected to contribute substantially to future advancements in autonomous object manipulation technologies aimed at enhancing productivity.
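
The abstract does not spell out the ensemble rule, so the following is only a minimal sketch of the general idea, assuming the exponentially weighted temporal ensemble commonly used with action chunking. The function name `ensemble_action`, the decay rate `m`, and the `lookahead` offset are hypothetical and are not taken from the paper; `lookahead > 0` simply averages the predictions that target a future time step instead of the current one.

```python
import numpy as np

def ensemble_action(chunks, t, m=0.01, lookahead=0):
    """Blend overlapping action-chunk predictions for one target time step.

    chunks: dict mapping the time step s at which a chunk was predicted to an
            array of shape (H, action_dim); row j is the action planned for s + j.
    t: current time step.
    lookahead: hypothetical offset; the ensemble targets time t + lookahead.
    """
    target = t + lookahead
    candidates = []
    for s in sorted(chunks):  # oldest prediction first
        j = target - s
        if s <= t and 0 <= j < len(chunks[s]):
            candidates.append(chunks[s][j])
    if not candidates:
        raise ValueError("no stored chunk covers the requested time step")
    preds = np.stack(candidates)
    # Exponential weights: index 0 is the oldest prediction (assumed scheme).
    weights = np.exp(-m * np.arange(len(preds)))
    weights /= weights.sum()
    return weights @ preds

# Usage: three chunks of horizon 4 whose predictions agree on every time step.
chunks = {s: np.arange(s, s + 4, dtype=float).reshape(4, 1) for s in range(3)}
current = ensemble_action(chunks, t=2)               # targets step 2
future = ensemble_action(chunks, t=2, lookahead=1)   # targets step 3
```

Because the stored chunks already contain predictions for future steps, selecting a later target reuses existing policy outputs and needs no extra inference pass, which is consistent with the abstract's claim of no additional computation.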

MOB-Net: Limb-modularized Uncertainty Torque Learning of Humanoids for Sensorless External Torque Estimation

Feb 17, 2024

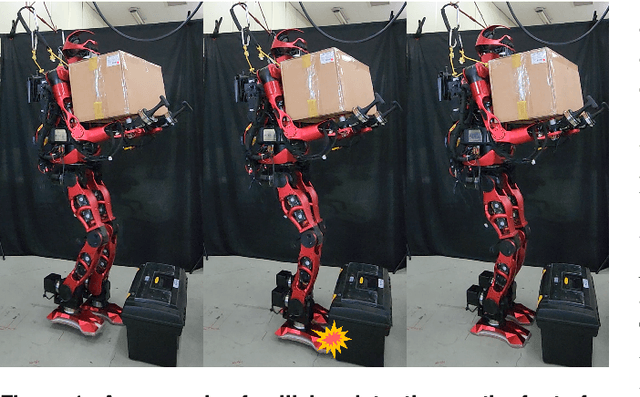

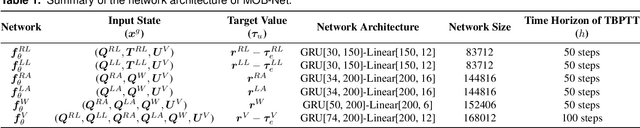

Abstract: The momentum observer (MOB) can estimate external joint torque without requiring additional sensors, such as force/torque or joint torque sensors. However, the estimation performance of MOB deteriorates due to model uncertainty, which encompasses modeling errors and joint friction. Moreover, the estimation error is significant when MOB is applied to high-dimensional floating-base humanoids, which prevents the estimated external joint torque from being used for force control or collision detection on a real humanoid robot. In this paper, a pure external joint torque estimation method for humanoids, named MOB-Net, is proposed. MOB-Net learns the model uncertainty torque and calibrates the estimated signal of MOB. The external joint torque can be estimated in the generalized coordinates, including the whole-body and virtual joints of the floating-base robot, with only internal sensors (an IMU on the pelvis and encoders in the joints). Our method substantially reduces the estimation errors of MOB, and the robust performance of MOB-Net on unseen data is validated through extensive simulations, real robot experiments, and ablation studies. Finally, various collision handling scenarios are presented using the estimated external joint torque from MOB-Net: contact wrench feedback control for locomotion, collision detection, and collision reaction for safety.
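
To illustrate why model uncertainty contaminates the MOB estimate, here is a minimal sketch of a momentum observer on a single joint with constant inertia. This is a textbook 1-DoF simplification, not the floating-base formulation or the learned correction from the paper; the function `simulate_mob` and all parameter values are assumptions chosen for illustration.

```python
def simulate_mob(tau_ext, friction=0.0, inertia=1.0, gain=50.0,
                 dt=1e-3, steps=2000):
    """Simulate a 1-DoF joint and return the final momentum-observer residual.

    True dynamics:  inertia * qdd = tau + tau_ext - friction * qd
    Observer model: knows inertia and tau, but NOT friction (unmodeled).
    Residual:       r = gain * (p - integral(tau + r) dt - p(0)),  p = inertia * qd
    """
    qd = 0.0        # joint velocity, so p(0) = 0
    integral = 0.0  # running integral of (tau + r)
    r = 0.0         # residual, i.e. the estimated external torque
    tau = 0.0       # commanded joint torque (zero for simplicity)
    for _ in range(steps):
        qdd = (tau + tau_ext - friction * qd) / inertia
        qd += qdd * dt
        integral += (tau + r) * dt
        r = gain * (inertia * qd - integral)
    return r

# With a perfect model the residual converges to the external torque.
r_ideal = simulate_mob(tau_ext=0.5)
# With unmodeled friction the residual lumps friction into the estimate.
r_biased = simulate_mob(tau_ext=0.5, friction=0.2)
```

In the second call the residual tracks the external torque minus the friction torque, which is the kind of uncertainty torque a learned calibration would need to remove.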

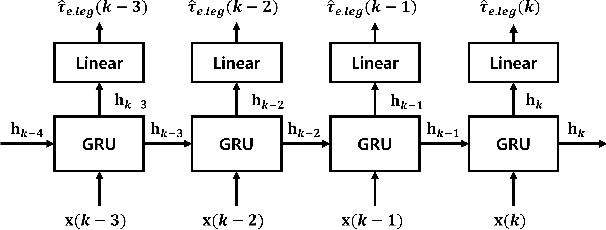

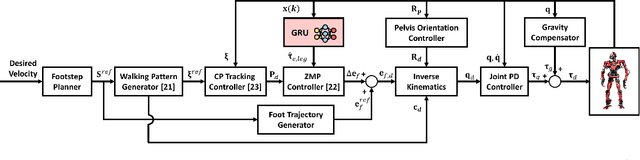

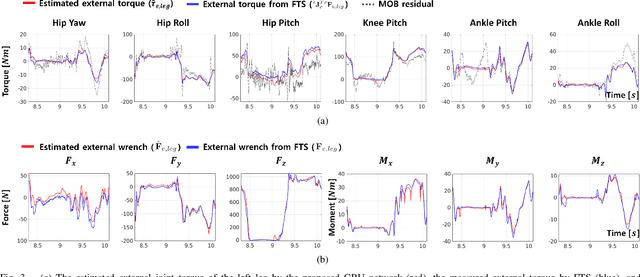

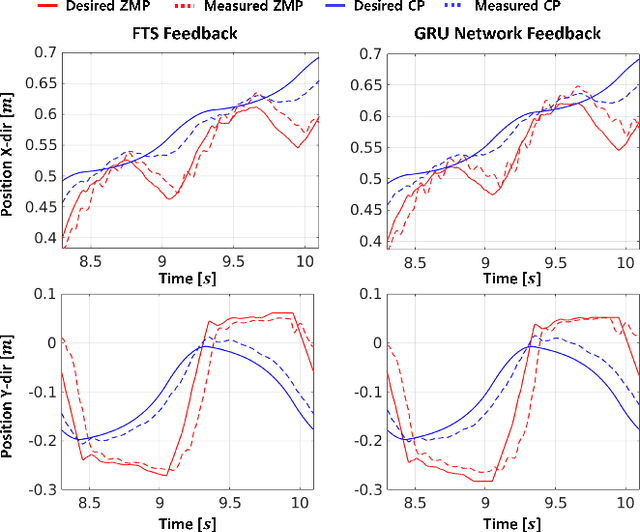

Proprioceptive External Torque Learning for Floating Base Robot and its Applications to Humanoid Locomotion

Sep 08, 2023

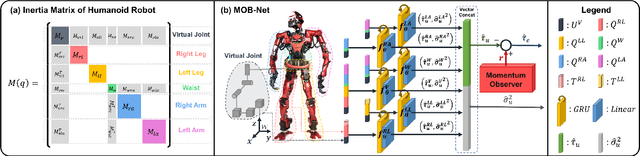

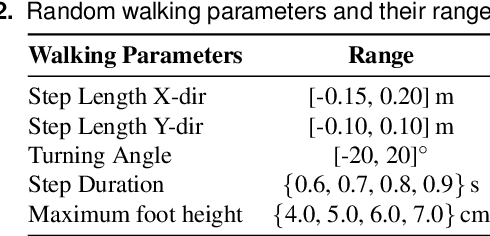

Abstract: The estimation of external joint torque and contact wrench is essential for achieving stable locomotion of humanoids and safety-oriented robots. Although the contact wrench on the foot of a humanoid can be measured using a force-torque sensor (FTS), an FTS increases the cost, inertia, complexity, and failure possibility of the system. This paper introduces a method for learning external joint torque solely using proprioceptive sensors (encoders and IMUs) for a floating-base robot. For learning, a GRU network is used and random walking data is collected. Real robot experiments demonstrate that the network can estimate the external torque and contact wrench with significantly smaller errors than the model-based method, a momentum observer (MOB) with friction modeling. The study also validates that the estimated contact wrench can be utilized for zero moment point (ZMP) feedback control, enabling stable walking. Moreover, even when the robot's feet and the inertia of the upper body are changed, the trained network shows consistent performance with a model-based calibration. This result demonstrates the possibility of removing the FTS from the robot, which eliminates the disadvantages of hardware sensors. The summary video is available at https://youtu.be/gT1D4tOiKpo.
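
As a small worked example of how a contact wrench feeds ZMP feedback control, the sketch below computes the ZMP from a wrench using the standard flat-ground relation. It assumes the wrench is expressed at a reference point lying on the ground plane; the function name `zmp_from_wrench` and the threshold `min_fz` are hypothetical, and the abstract does not state how the paper computes the ZMP.

```python
def zmp_from_wrench(force, torque, min_fz=1e-6):
    """Return the ZMP (x, y) relative to the wrench reference point.

    force:  (fx, fy, fz) contact force.
    torque: (tx, ty, tz) contact moment about the reference point,
            which is assumed to lie on the ground plane (z = 0).
    """
    fz = force[2]
    if fz < min_fz:
        raise ValueError("no meaningful ground contact (fz too small)")
    tx, ty = torque[0], torque[1]
    # From torque = p x f with p on the ground plane:
    #   tx = py * fz  and  ty = -px * fz
    return (-ty / fz, tx / fz)

# A 100 N vertical force applied at (0.05, -0.02) produces this moment.
zmp = zmp_from_wrench(force=(0.0, 0.0, 100.0), torque=(-2.0, -5.0, 0.0))
```

The same relation applies whether the wrench comes from an FTS or from a learned estimator, which is why an accurate wrench estimate can stand in for the sensor in the feedback loop.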

A Model Predictive Capture Point Control Framework for Robust Humanoid Balancing via Ankle, Hip, and Stepping Strategies

Jul 25, 2023

Abstract: The robust balancing capability of humanoid robots against disturbances is considered one of the crucial requirements for their practical mobility in real-world environments. In particular, many studies have been devoted to the efficient implementation of the three balance strategies, inspired by human balance strategies involving ankle, hip, and stepping strategies, to endow humanoid robots with human-level balancing capability. In this paper, a robust balance control framework for humanoid robots is proposed. Firstly, a novel Model Predictive Control (MPC) framework is proposed for Capture Point (CP) tracking control, enabling the integration of ankle, hip, and stepping strategies within a single framework. Additionally, a variable weighting method is introduced that adjusts the weighting parameters of the Centroidal Angular Momentum (CAM) damping control over the time horizon of MPC to improve the balancing performance. Secondly, a hierarchical structure of the MPC and a stepping controller is proposed, allowing for step time optimization. The robust balancing performance of the proposed method is validated through extensive simulations and real robot experiments. Furthermore, superior balancing performance is demonstrated, particularly in the presence of disturbances, compared to a state-of-the-art Quadratic Programming (QP)-based CP controller that employs the ankle, hip, and stepping strategies. The supplementary video is available at https://youtu.be/CrD75UbYzdc
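
For readers unfamiliar with the capture point, the sketch below evaluates it under the standard linear inverted pendulum model (LIPM) and applies a one-dimensional check of whether it leaves the support region. This is only the textbook definition and a simplified trigger, not the MPC framework from the paper; the names `capture_point` and `needs_step` and the numeric values are assumptions.

```python
import math

GRAVITY = 9.81  # m/s^2

def capture_point(com_pos, com_vel, com_height):
    """Capture point along one axis under the LIPM: xi = x + xd / omega."""
    omega = math.sqrt(GRAVITY / com_height)  # natural frequency of the LIPM
    return com_pos + com_vel / omega

def needs_step(cp, support_min, support_max):
    """Simplified 1-D check: a step is needed if the CP leaves the support."""
    return not (support_min <= cp <= support_max)

# CoM above the foot center, pushed forward at 0.5 m/s, CoM height 0.8 m.
cp = capture_point(com_pos=0.0, com_vel=0.5, com_height=0.8)
step_required = needs_step(cp, support_min=-0.08, support_max=0.12)
```

When the capture point stays inside the support region, ankle and hip strategies can in principle recover balance; once it leaves, a stepping strategy is needed, which is the motivation for handling all three within one controller.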
