Jaedeok Kim

JDEC: JPEG Decoding via Enhanced Continuous Cosine Coefficients

Apr 03, 2024

Abstract: We propose a practical approach to JPEG image decoding that uses a local implicit neural representation with a continuous cosine formulation. The JPEG algorithm heavily quantizes discrete cosine transform (DCT) spectra to achieve a high compression rate, which inevitably degrades image quality during encoding. To address this degradation, we design a continuous cosine spectrum estimator that restores the distorted spectrum. By leveraging local DCT formulations, our network can perform dequantization and upsampling simultaneously. Our proposed model decodes compressed images directly across different quality factors using a single pre-trained model, without relying on a conventional JPEG decoder. As a result, our proposed network achieves state-of-the-art performance in flexible color image JPEG artifact removal tasks. Our source code is available at https://github.com/WooKyoungHan/JDEC.
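The quantization step that the abstract refers to can be illustrated with a small, standard-library-only sketch. It is not the JDEC network or its estimator. It only shows how an 8x8 DCT block loses information when its coefficients are divided by a quality-dependent table and rounded. The table is the standard JPEG luminance table, the quality scaling follows the libjpeg-style rule, and the sample block and all function names are hypothetical.

```python
import math

N = 8

# Standard JPEG luminance quantization table (Annex K of the JPEG standard).
BASE_LUMA = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def scaled_table(quality):
    """Scale the base table for a quality factor in [1, 100] (libjpeg-style rule)."""
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    return [[min(255, max(1, (q * scale + 50) // 100)) for q in row]
            for row in BASE_LUMA]


def dct2(block):
    """Orthonormal 2D DCT-II of an 8x8 block (direct, unoptimized formula)."""
    def c(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            s = 0.0
            for x in range(N):
                for y in range(N):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = c(u) * c(v) * s
    return out


def quantize_dequantize(coeffs, table):
    """Round each coefficient to the nearest multiple of its table entry."""
    return [[round(coeffs[u][v] / table[u][v]) * table[u][v] for v in range(N)]
            for u in range(N)]


# Hypothetical level-shifted block: a smooth ramp with a little texture.
block = [[8 * x + 4 * y + (x * y) % 7 - 64 for y in range(N)] for x in range(N)]
coeffs = dct2(block)

for quality in (10, 50, 90):
    table = scaled_table(quality)
    restored = quantize_dequantize(coeffs, table)
    zeroed = sum(1 for row in restored for value in row if value == 0)
    worst = max(abs(coeffs[u][v] - restored[u][v])
                for u in range(N) for v in range(N))
    print(f"quality={quality}: zeroed coefficients={zeroed}, max spectral error={worst:.2f}")
```

Each restored coefficient differs from the original by at most half of its table entry, so a lower quality factor (larger table entries) permits a larger spectral error. That distorted spectrum is what a learned decoder would have to restore.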

Universal Effectiveness of High-Depth Circuits in Variational Eigenproblems

Oct 01, 2020

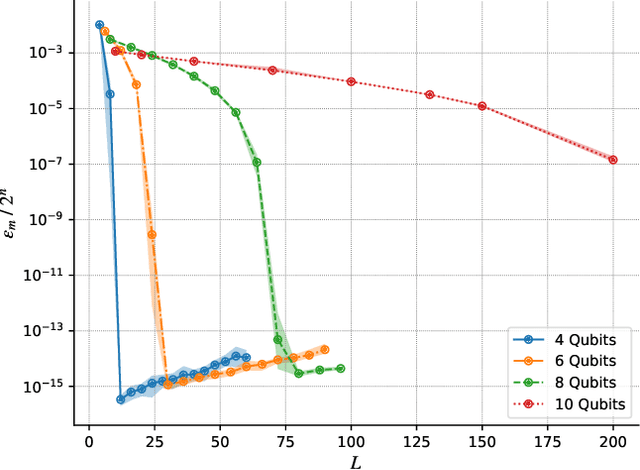

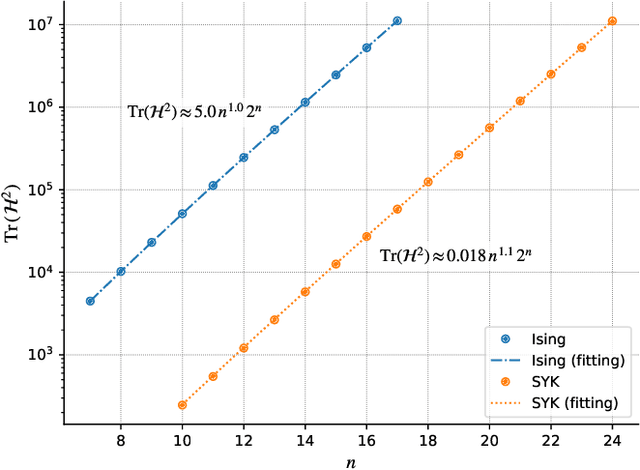

Abstract: We explore the effectiveness of high-depth, noiseless, parametric quantum circuits by challenging their capability to simulate the ground states of quantum many-body Hamiltonians. Even a generic layered circuit Ansatz can approximate the ground state with high precision, as long as the circuit depth exceeds a certain threshold level that scales exponentially with the number of qubits, despite the abundance of barren plateaus. This success is due to the fact that the energy landscape in the high-depth regime has a suitable structure for gradient-based optimization, i.e., the presence of local extrema -- near any random initial points -- reaching the ground level energy. We check whether these advantages are preserved across different Hamiltonians by working out two variational eigensolver problems, one for the transverse field Ising model and one for the Sachdev-Ye-Kitaev model. We expect that the factors contributing to the universal success of high-depth circuits may also serve as evaluation guidelines for more realistic circuit designs under hybrid quantum-classical algorithms.

Consistency of a Recurrent Language Model With Respect to Incomplete Decoding

Feb 06, 2020

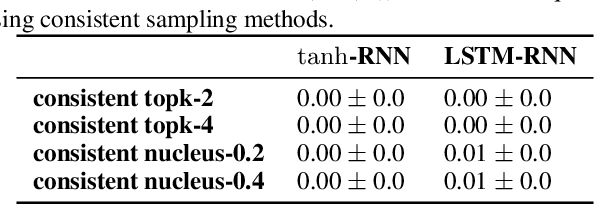

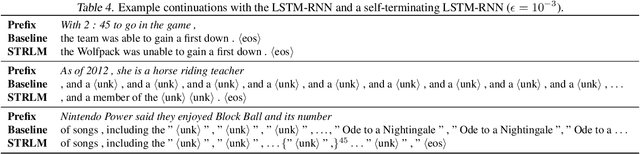

Abstract: Despite strong performance on a variety of tasks, neural sequence models trained with maximum likelihood have been shown to exhibit issues such as length bias and degenerate repetition. We study the related issue of receiving infinite-length sequences from a recurrent language model when using common decoding algorithms. To analyze this issue, we first define inconsistency of a decoding algorithm, meaning that the algorithm can yield an infinite-length sequence that has zero probability under the model. We prove that commonly used incomplete decoding algorithms - greedy search, beam search, top-k sampling, and nucleus sampling - are inconsistent, despite the fact that recurrent language models are trained to produce sequences of finite length. Based on these insights, we propose two remedies which address inconsistency: consistent variants of top-k and nucleus sampling, and a self-terminating recurrent language model. Empirical results show that inconsistency occurs in practice, and that the proposed methods prevent inconsistency.
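A toy sketch may help show why an incomplete decoding algorithm can fail to terminate. The abstract does not describe how the consistent variants work, so the version below assumes one plausible fix: always keeping the end-of-sequence token in the candidate set. The function names, the `eos_id` parameter, and the four-token distribution are hypothetical.

```python
import random


def top_k_candidates(probs, k):
    """Indices of the k most probable tokens."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]


def sample_top_k(probs, k, eos_id=None, rng=random):
    """Sample from the top-k set, weighted by the model probabilities.

    If eos_id is given, the end-of-sequence token is always kept as a
    candidate (an assumed way to restore the chance of terminating).
    """
    candidates = top_k_candidates(probs, k)
    if eos_id is not None and eos_id not in candidates:
        candidates.append(eos_id)
    weights = [probs[i] for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


# Toy next-token distribution; token 3 plays the role of end-of-sequence.
probs = [0.5, 0.3, 0.15, 0.05]
EOS = 3
rng = random.Random(0)

plain = [sample_top_k(probs, k=2, rng=rng) for _ in range(1000)]
with_eos = [sample_top_k(probs, k=2, eos_id=EOS, rng=rng) for _ in range(1000)]

print("plain top-k ever emits EOS:", EOS in plain)
print("EOS-preserving top-k ever emits EOS:", EOS in with_eos)
```

If the end-of-sequence token never ranks among the top k at any step, plain top-k sampling cannot stop, even though the model assigns that token nonzero probability. Keeping it as a candidate restores a nonzero chance of termination at every step.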

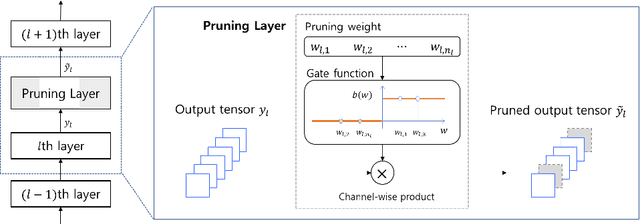

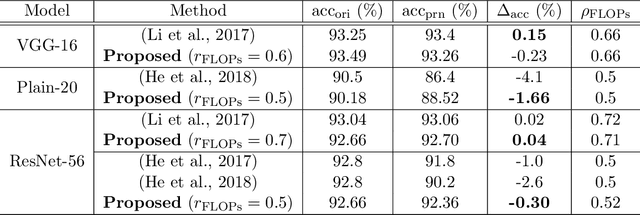

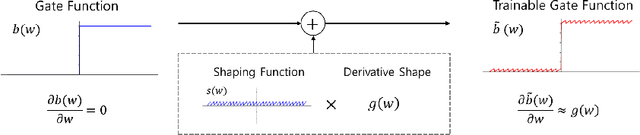

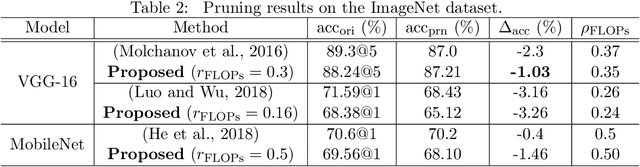

Differentiable Pruning Method for Neural Networks

Apr 24, 2019

Abstract: Architecture optimization is a promising technique for finding an efficient neural network that meets certain requirements, and it is usually a selection problem. This paper introduces the concept of a trainable gate function and proposes a channel pruning method that automatically finds the optimal combination of channels using a simple gradient descent training procedure. The trainable gate function, which confers a differentiable property on discrete-valued variables, allows us to directly optimize loss functions that include discrete values, such as the number of parameters or FLOPs, that are generally non-differentiable. Channel pruning can be applied simply by appending trainable gate functions to each intermediate output tensor and then fine-tuning the overall model, using any gradient-based training method. Our experiments show that the proposed method can achieve better compression results on various models. For instance, our proposed method compresses ResNet-56 on the CIFAR-10 dataset by half in terms of the number of FLOPs without an accuracy drop.

How Compact?: Assessing Compactness of Representations through Layer-Wise Pruning

Jan 09, 2019

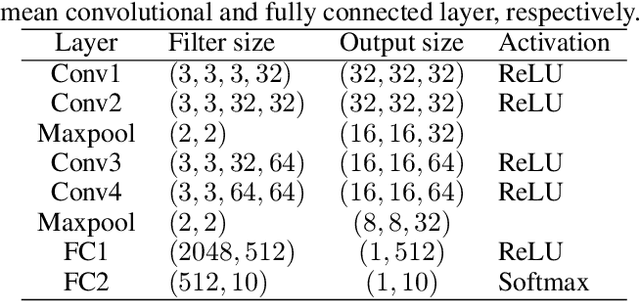

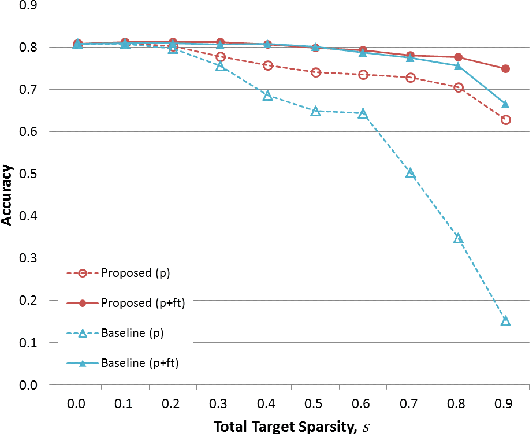

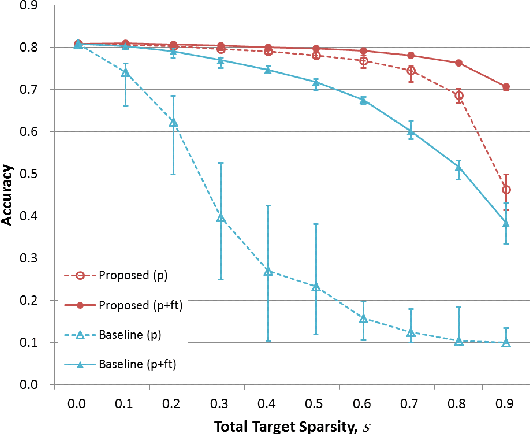

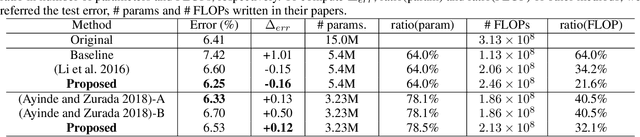

Abstract: Various forms of representations may arise in the many layers embedded in deep neural networks (DNNs). Of these, where can we find the most compact representation? We propose to use a pruning framework to answer this question: How compactly can each layer be compressed without losing performance? Most existing DNN compression methods do not consider the relative compressibility of the individual layers. They uniformly apply a single target sparsity to all layers or adapt layer sparsity using heuristics and additional training. We propose a principled method that automatically determines the sparsity of individual layers from the importance of each layer. To do this, we consider a metric that measures the importance of each layer based on the layer-wise capacity. Given the trained model and the total target sparsity, we first evaluate the importance of each layer from the model. From the evaluated importance, we compute the sparsity of each layer. The proposed method can be applied to any DNN architecture and can be combined with any pruning method that takes the total target sparsity as a parameter. To validate the proposed method, we carried out an image classification task with two types of DNN architectures on two benchmark datasets and used three pruning methods for compression. In the case of the VGG-16 model with weight pruning on the ImageNet dataset, we achieved up to 75% (17.5% on average) better top-5 accuracy than the baseline under the same total target sparsity. Furthermore, we analyzed where the maximum compression can occur in the network. This kind of analysis can help us identify the most compact representation within a deep neural network.

Comparing Sample-wise Learnability Across Deep Neural Network Models

Jan 08, 2019

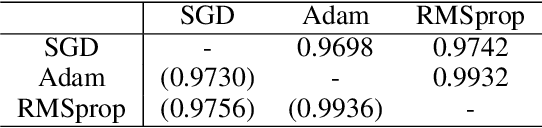

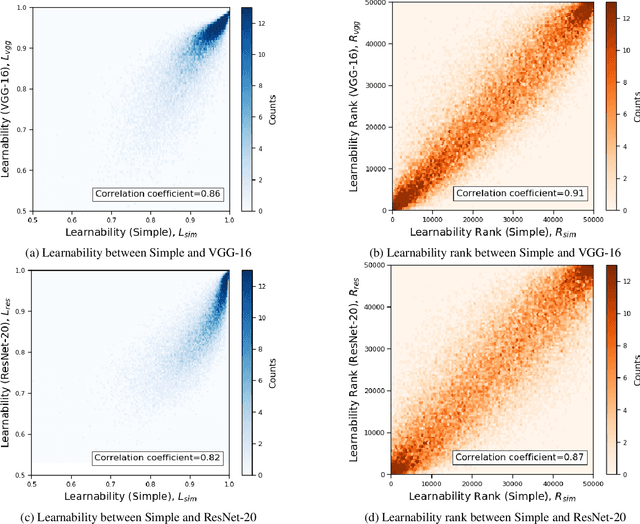

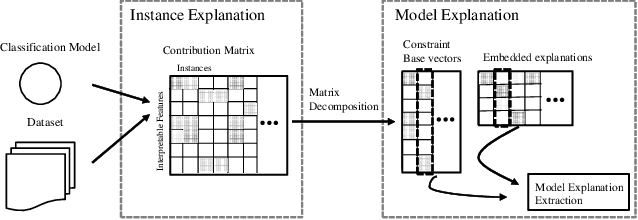

Abstract: Estimating the relative importance of each sample in a training set has important practical and theoretical value, such as in importance sampling or curriculum learning. This kind of focus on individual samples invokes the concept of sample-wise learnability: How easy is it to correctly learn each sample (cf. PAC learnability)? In this paper, we approach the sample-wise learnability problem within a deep learning context. We propose a measure of the learnability of a sample with a given deep neural network (DNN) model. The basic idea is to train the given model on the training set and, for each sample, aggregate the hits and misses over all training epochs. Our experiments show that the sample-wise learnability measure collected this way is highly linearly correlated across different DNN models (ResNet-20, VGG-16, and MobileNet), suggesting that such a measure can provide deep general insights into the data's properties. We expect our method to help develop better curricula for training and to help us better understand the data itself.
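The aggregation idea in this abstract can be sketched in a few lines. The abstract does not give the exact formula, so the version below assumes the simplest aggregate: the fraction of epochs in which a sample was classified correctly. The hit records for the two models are made-up toy data, and the function names are hypothetical.

```python
from statistics import mean


def learnability(hits_per_epoch):
    """Fraction of epochs in which each sample was classified correctly.

    hits_per_epoch[e][i] is True when sample i was a hit at epoch e.
    """
    n_epochs = len(hits_per_epoch)
    n_samples = len(hits_per_epoch[0])
    return [sum(epoch[i] for epoch in hits_per_epoch) / n_epochs
            for i in range(n_samples)]


def pearson(xs, ys):
    """Pearson linear correlation coefficient between two score lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Toy hit/miss records: 4 epochs x 3 samples for two hypothetical models.
model_a = [
    [True, False, False],
    [True, True, False],
    [True, True, False],
    [True, True, True],
]
model_b = [
    [True, False, False],
    [True, False, False],
    [True, True, False],
    [True, True, False],
]

scores_a = learnability(model_a)
scores_b = learnability(model_b)
print("model A scores:", scores_a)
print("model B scores:", scores_b)
print("correlation:", round(pearson(scores_a, scores_b), 3))
```

A high correlation between the two score lists would mean that both models find the same samples easy or hard, which is the kind of cross-model agreement the abstract reports.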

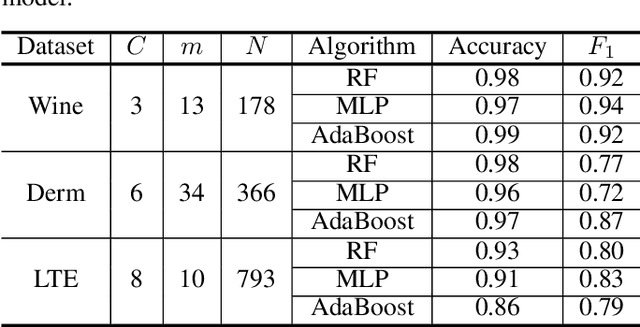

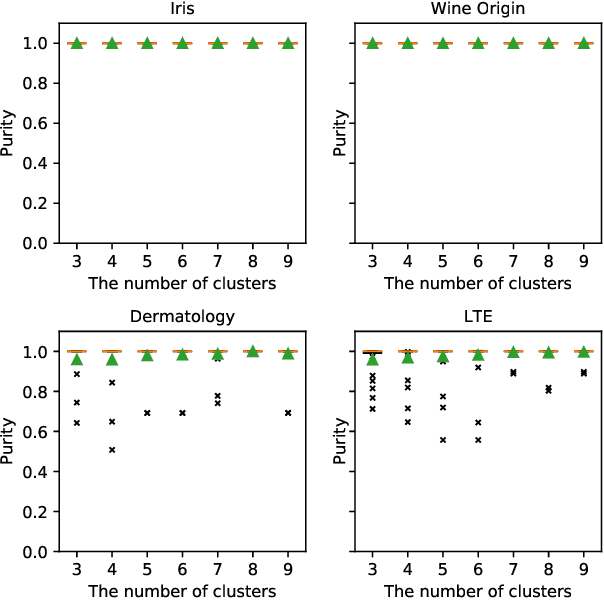

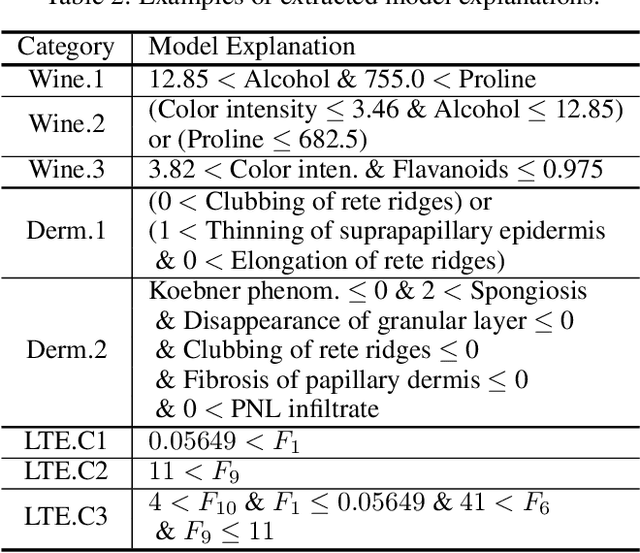

Human Understandable Explanation Extraction for Black-box Classification Models Based on Matrix Factorization

Sep 18, 2017

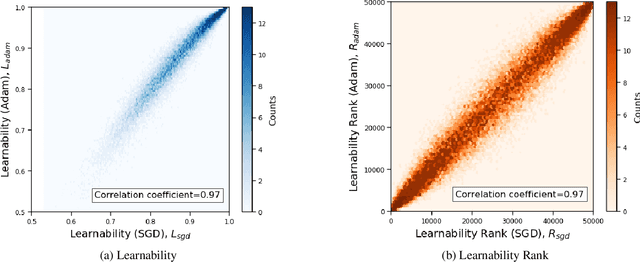

Abstract: In recent years, a number of artificial intelligence services have been developed, such as defect detection systems and diagnosis systems for customer services. Unfortunately, the core of these services is a black box whose underlying decision-making logic humans cannot understand, even though inspecting that logic is crucial before launching a commercial service. Our goal in this paper is to propose an analytic method of model explanation that is applicable to general classification models. To this end, we introduce the concepts of a contribution matrix and an explanation embedding in a constraint space by using matrix factorization. We extract a rule-like model explanation from the contribution matrix with the help of nonnegative matrix factorization. To validate our method, we report experimental results on open datasets as well as an industry dataset for LTE network diagnosis; the results show that our method extracts reasonable explanations.
