Jacopo Banfi

Safe and Efficient Path Planning under Uncertainty via Deep Collision Probability Fields

Sep 06, 2024

Abstract: Estimating collision probabilities between robots and environmental obstacles or other moving agents is crucial to ensure safety during path planning. This is an important building block of modern planning algorithms in many application scenarios such as autonomous driving, where noisy sensors perceive obstacles. While many approaches exist, they either provide overly conservative estimates of the collision probabilities or are computationally intensive due to their sampling-based nature. To deal with these issues, we introduce Deep Collision Probability Fields, a neural-based approach for computing collision probabilities of arbitrary objects with arbitrary unimodal uncertainty distributions. Our approach relegates the computationally intensive estimation of collision probabilities via sampling to the training step, allowing for fast neural network inference of the constraints during planning. In extensive experiments, we show that Deep Collision Probability Fields can produce reasonably accurate collision probabilities (up to 10^{-3}) for planning and that our approach can be easily plugged into standard path planning approaches to plan safe paths on 2-D maps containing uncertain static and dynamic obstacles. Additional material, code, and videos are available at https://sites.google.com/view/ral-dcpf.
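To make the sampling-based estimation mentioned in the abstract concrete, here is a minimal sketch of a Monte Carlo collision probability estimate. It is not the authors' code: the disc-shaped robot and obstacle, the isotropic Gaussian noise on the obstacle centre, and the name `mc_collision_probability` are all assumptions chosen for illustration.

```python
import math
import random


def mc_collision_probability(robot_xy, robot_radius, obstacle_mean,
                             obstacle_std, obstacle_radius,
                             n_samples=20000, seed=0):
    """Monte Carlo estimate of P(robot disc overlaps obstacle disc).

    Assumption: the obstacle centre is Gaussian with independent,
    equal-variance noise in x and y; the robot pose is known exactly.
    """
    rng = random.Random(seed)
    # Two discs overlap when their centres are closer than the sum of radii.
    limit = robot_radius + obstacle_radius
    hits = 0
    for _ in range(n_samples):
        ox = rng.gauss(obstacle_mean[0], obstacle_std)
        oy = rng.gauss(obstacle_mean[1], obstacle_std)
        if math.hypot(ox - robot_xy[0], oy - robot_xy[1]) <= limit:
            hits += 1
    return hits / n_samples
```

Each query costs `n_samples` distance checks, which illustrates why estimating these probabilities by sampling inside a planning loop is expensive and why moving that cost to training time, as the abstract describes, is attractive.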

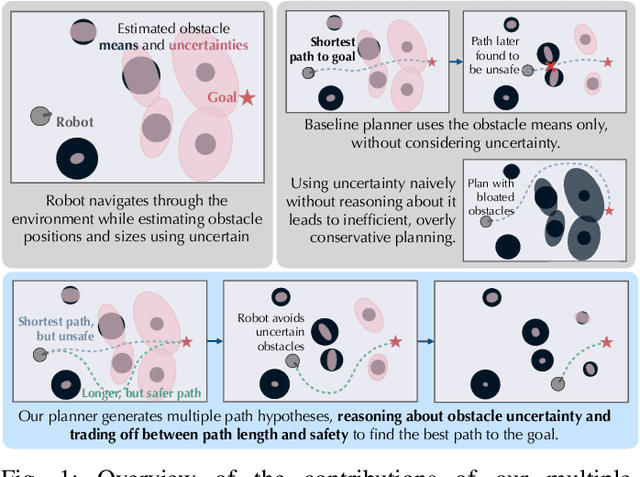

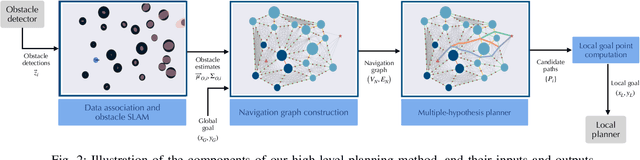

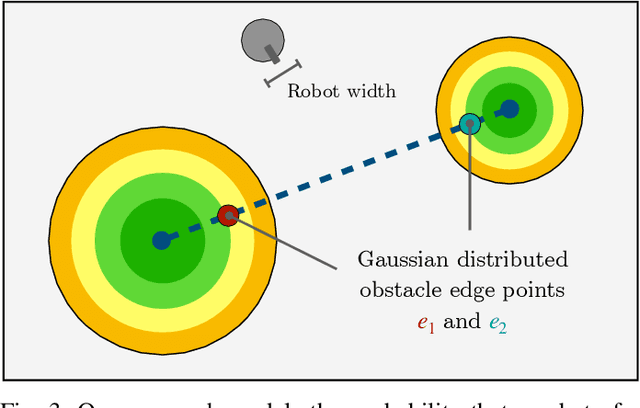

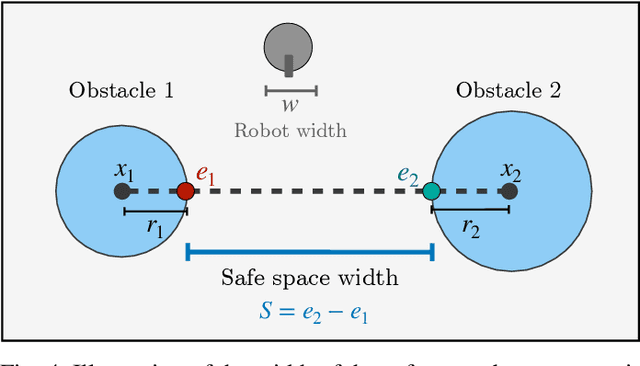

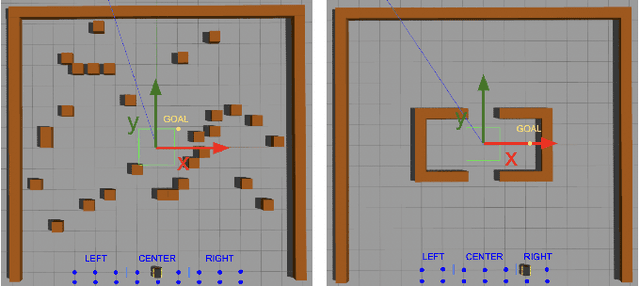

Multiple-Hypothesis Path Planning with Uncertain Object Detections

Aug 14, 2023

Abstract: Path planning in obstacle-dense environments is a key challenge in robotics, and depends on inferring scene attributes and associated uncertainties. We present a multiple-hypothesis path planner designed to navigate complex environments using obstacle detections. Path hypotheses are generated by reasoning about uncertainty and range, as initial detections are typically at far ranges with high uncertainty, before subsequent detections reduce this uncertainty. Given estimated obstacles, we build a graph of pairwise connections between objects based on the probability that the robot can safely pass between the pair. The graph is updated in real time and pruned of unsafe paths, providing probabilistic safety guarantees. The planner generates path hypotheses over this graph, then trades off safety against path length to select the best route. We evaluate our planner on randomly generated simulated forests, and find that in the most challenging environments, it increases the navigation success rate over an A* baseline from 20% to 75%. Results indicate that the use of evolving, range-based uncertainty and multiple hypotheses is critical for navigating dense environments.

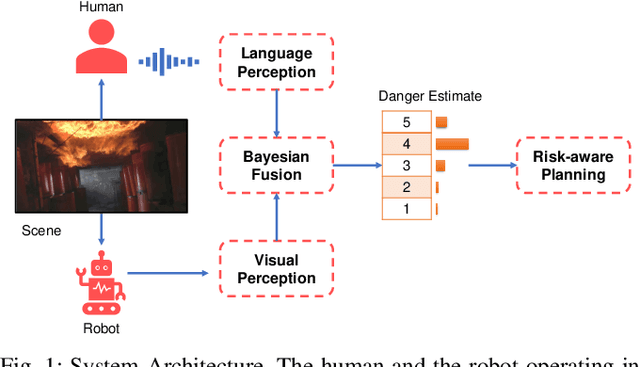

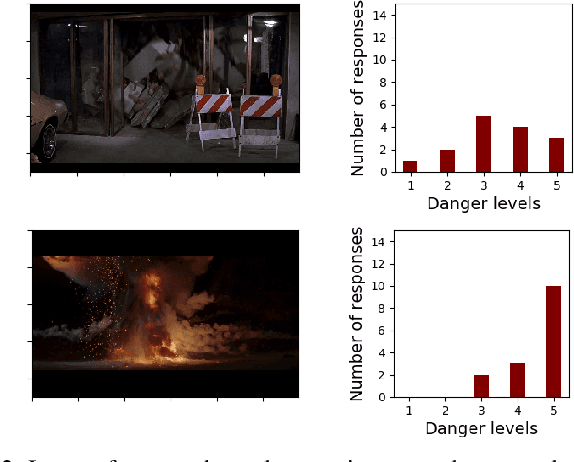

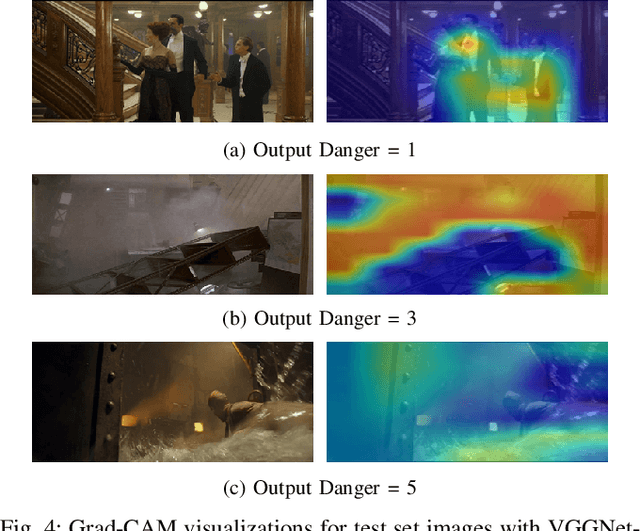

Learning to Assess Danger from Movies for Cooperative Escape Planning in Hazardous Environments

Jul 27, 2022

Abstract: There has been a plethora of work towards improving robot perception and navigation, yet their application in hazardous environments, like during a fire or an earthquake, is still at a nascent stage. We hypothesize two key challenges here: first, it is difficult to replicate such scenarios in the real world, which is necessary for training and testing purposes. Second, current systems are not fully able to take advantage of the rich multi-modal data available in such hazardous environments. To address the first challenge, we propose to harness the enormous amount of visual content available in the form of movies and TV shows, and develop a dataset that can represent hazardous environments encountered in the real world. The data is annotated with high-level danger ratings for realistic disaster images, and corresponding keywords are provided that summarize the content of the scene. In response to the second challenge, we propose a multi-modal danger estimation pipeline for collaborative human-robot escape scenarios. Our Bayesian framework improves danger estimation by fusing information from the robot's camera sensor and language inputs from the human. Furthermore, we augment the estimation module with a risk-aware planner that helps in identifying safer paths out of the dangerous environment. Through extensive simulations, we exhibit the advantages of our multi-modal perception framework, which translate into tangible benefits such as a higher success rate in a collaborative human-robot mission.

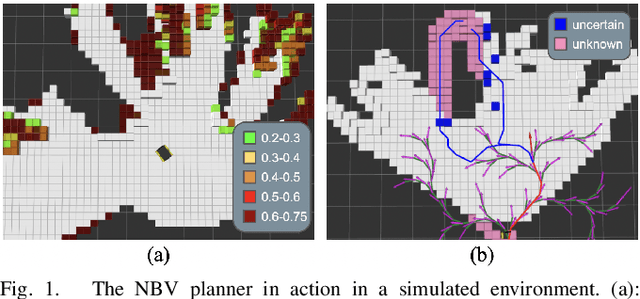

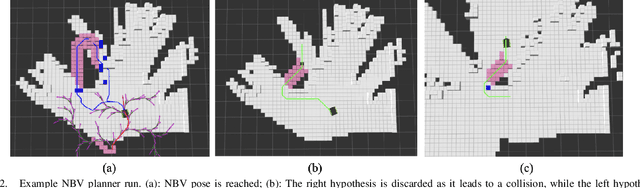

Is it Worth to Reason about Uncertainty in Occupancy Grid Maps during Path Planning?

May 27, 2022

Abstract: This paper investigates the usefulness of reasoning about the uncertain presence of obstacles during path planning, which typically stems from the usage of probabilistic occupancy grid maps for representing the environment when mapping via a noisy sensor like a stereo camera. The traditional planning paradigm prescribes using a hard threshold on the occupancy probability to declare that a cell is an obstacle, and to plan a single path accordingly while treating unknown space as free. We compare this approach against a new uncertainty-aware planner, which plans two different path hypotheses and then merges their initial trajectory segments into a single one ending in a "next-best view" pose. After this informative view is taken, the planner commits to one of the hypotheses, or to a completely new one if a collision is imminent. Simulations were conducted comparing the proposed and traditional planners. Results show the existence of planning scenarios -- like when the environment contains a dead-end, or when the goal is placed close to an obstacle -- in which reasoning about uncertainty can significantly decrease the robot's traveled distance and increase the chances of reaching the goal. The new planner was also validated on a real Clearpath Jackal robot equipped with a ZED 2 stereo camera.
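The traditional paradigm described in the abstract can be sketched in a few lines. This is an illustrative sketch only: the 0.5 threshold, the use of `None` to mark unknown cells, and the name `threshold_grid` are assumptions, not details taken from the paper.

```python
def threshold_grid(occupancy, threshold=0.5):
    """Turn a probabilistic occupancy grid into a boolean obstacle map.

    A cell is an obstacle when its occupancy probability reaches the
    threshold. Unknown cells (None) are treated as free, which is the
    optimistic assumption the abstract attributes to traditional planners.
    """
    return [
        [p is not None and p >= threshold for p in row]
        for row in occupancy
    ]


# Example: a 2x3 grid with one unknown cell.
grid = [[0.1, 0.9, None],
        [0.5, 0.49, 1.0]]
obstacles = threshold_grid(grid)
```

After thresholding, all information about how uncertain each cell was is discarded, which is the loss that an uncertainty-aware planner tries to avoid.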

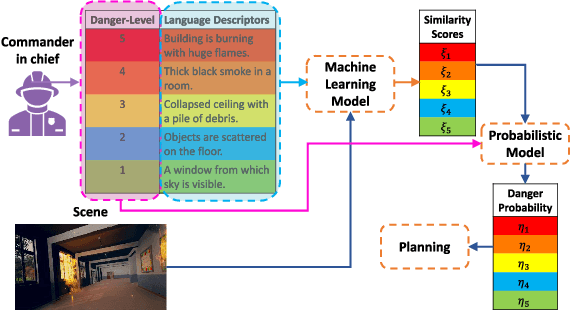

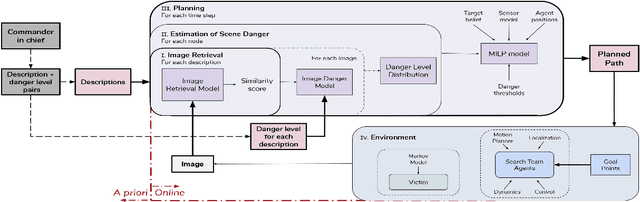

Exploiting Natural Language for Efficient Risk-Aware Multi-robot SaR Planning

Apr 08, 2021

Abstract: The ability to develop a high-level understanding of a scene, such as perceiving danger levels, can prove valuable in planning multi-robot search and rescue (SaR) missions. In this work, we propose to uniquely leverage natural language descriptions from the mission commander in chief and image data captured by robots to estimate scene danger. Given a description and an image, a state-of-the-art deep neural network is used to assess a corresponding similarity score, which is then converted into a probabilistic distribution of danger levels. Because commonly used visio-linguistic datasets do not represent SaR missions well, we collect a large-scale image-description dataset from synthetic images taken from realistic disaster scenes and use it to train our machine learning model. A risk-aware variant of the Multi-robot Efficient Search Path Planning (MESPP) problem is then formulated to use the danger estimates in order to account for high-risk locations in the environment when planning the searchers' paths. The problem is solved via a distributed approach based on Mixed-Integer Linear Programming. Our experiments demonstrate that our framework allows planning safer yet highly successful search missions, abiding by the two most important aspects of SaR missions: ensuring both the searchers' and the victim's safety.

* 8 pages, 5 figures. To be presented at the IEEE International Conference on Robotics and Automation, 2021. Dataset available at: https://github.com/vikshree/DISC-L.git

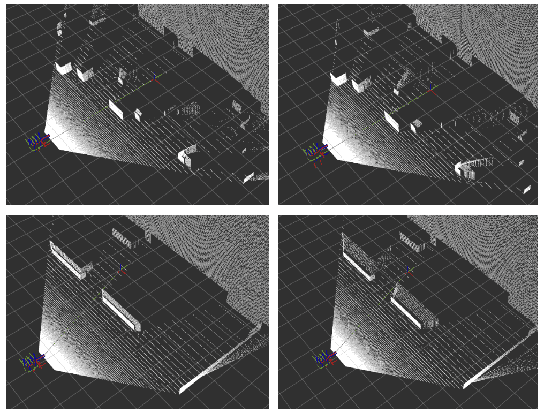

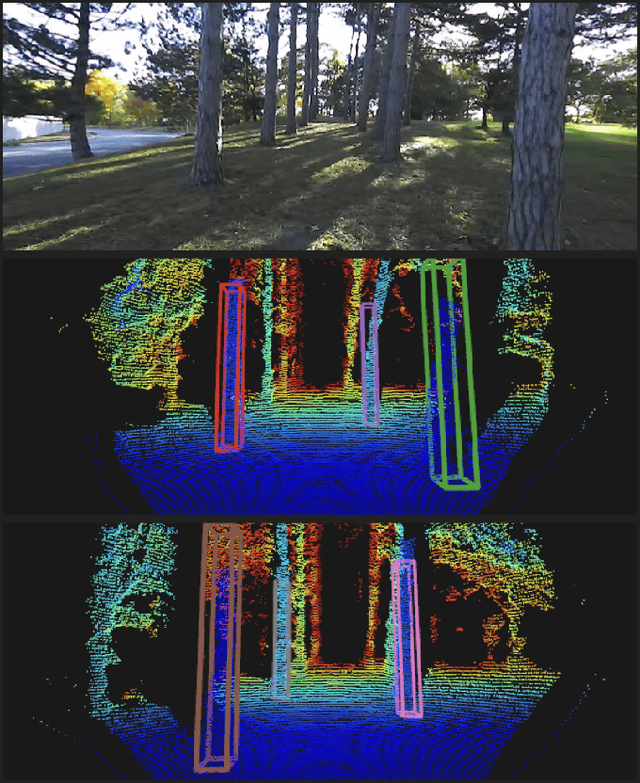

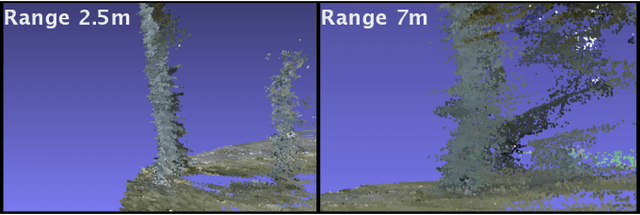

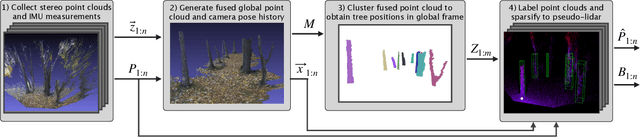

Detecting and Mapping Trees in Unstructured Environments with a Stereo Camera and Pseudo-Lidar

Mar 29, 2021

Abstract: We present a method for detecting and mapping trees in noisy stereo camera point clouds, using a learned 3-D object detector. Inspired by recent advancements in 3-D object detection using a pseudo-lidar representation for stereo data, we train a PointRCNN detector to recognize trees in forest-like environments. We generate detector training data with a novel automatic labeling process that clusters a fused global point cloud. This process annotates large stereo point cloud training data sets with minimal user supervision, and unlike previous pseudo-lidar detection pipelines, requires no 3-D ground truth from other sensors such as lidar. Our mapping system additionally uses a Kalman filter to associate detections and consistently estimate the positions and sizes of trees. We collect a data set for tree detection consisting of 8680 stereo point clouds, and validate our method on an outdoor test sequence. Our results demonstrate robust tree recognition in noisy stereo data at ranges of up to 7 meters, on 720p resolution images from a Stereolabs ZED 2 camera. Code and data are available at https://github.com/brian-h-wang/pseudolidar-tree-detection.

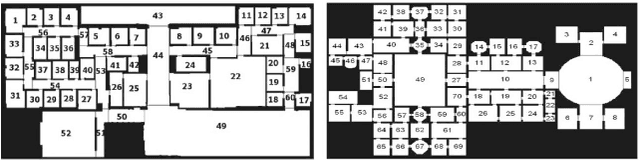

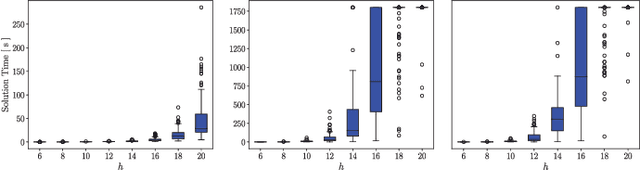

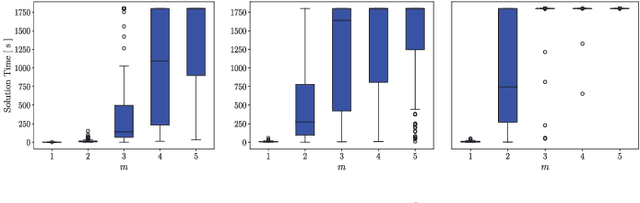

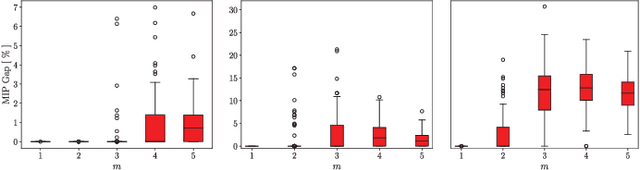

Mixed-Integer Linear Programming Models for Multi-Robot Non-Adversarial Search

Nov 25, 2020

Abstract: In this letter, we consider the Multi-Robot Efficient Search Path Planning (MESPP) problem, where a team of robots is deployed in a graph-represented environment to capture a moving target within a given deadline. We prove this problem to be NP-hard, and present the first set of Mixed-Integer Linear Programming (MILP) models to tackle the MESPP problem. Our models are the first to encompass multiple searchers, arbitrary capture ranges, and false negatives simultaneously. While state-of-the-art algorithms for MESPP are based on simple path enumeration, the adoption of MILP as a planning paradigm allows leveraging the powerful techniques of modern solvers, yielding better computational performance and, as a consequence, longer planning horizons. The models are designed for computing optimal solutions offline, but can be easily adapted for a distributed online approach. Our simulations show that it is possible to achieve a 98% decrease in computational time relative to the previous state-of-the-art. We also show that the distributed approach performs nearly as well as the centralized one, within 6% in the settings studied in this letter, with the advantage of requiring significantly less time, an important consideration in practical search missions.

* Published at IEEE Robotics and Automation Letters, presented at IROS 2020. Presentation available at https://youtu.be/BhUczcDq3Dc. Code is open source and available at https://github.com/basfora/milp_mespp.git

Planning Paths Through Unknown Space by Imagining What Lies Therein

Nov 14, 2020

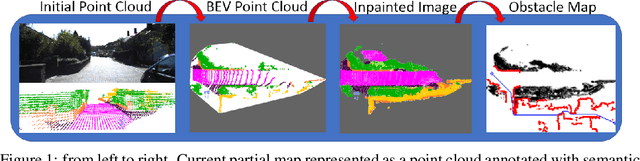

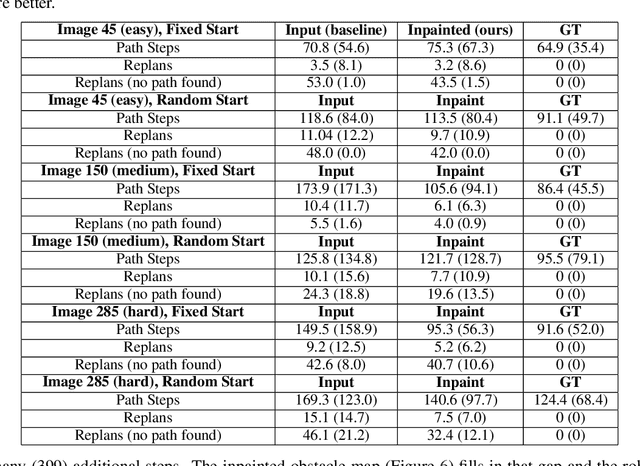

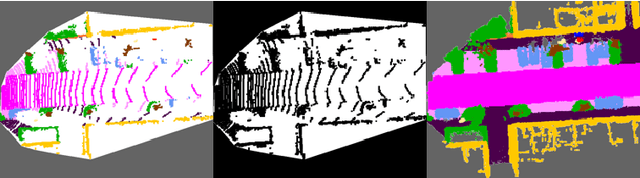

Abstract: This paper presents a novel framework for planning paths in maps containing unknown spaces, such as those caused by occlusions. Our approach takes as input a semantically-annotated point cloud, and leverages an image inpainting neural network to generate a reasonable model of unknown space as free or occupied. Our validation campaign shows that it is possible to greatly increase the performance of standard pathfinding algorithms which adopt the general optimistic assumption of treating unknown space as free.

DeepSemanticHPPC: Hypothesis-based Planning over Uncertain Semantic Point Clouds

Mar 06, 2020

Abstract: Planning in unstructured environments is challenging -- it relies on sensing, perception, scene reconstruction, and reasoning about various uncertainties. We propose DeepSemanticHPPC, a novel uncertainty-aware hypothesis-based planner for unstructured environments. Our algorithmic pipeline consists of: a deep Bayesian neural network which segments surfaces with uncertainty estimates; a flexible point cloud scene representation; a next-best-view planner which minimizes the uncertainty of scene semantics using sparse visual measurements; and a hypothesis-based path planner that proposes multiple kinematically feasible paths with evolving safety confidences given next-best-view measurements. Our pipeline iteratively decreases semantic uncertainty along planned paths, filtering out unsafe paths with high confidence. We show that our framework plans safe paths in real-world environments where existing path planners typically fail.
