Carlos Diaz-Ruiz

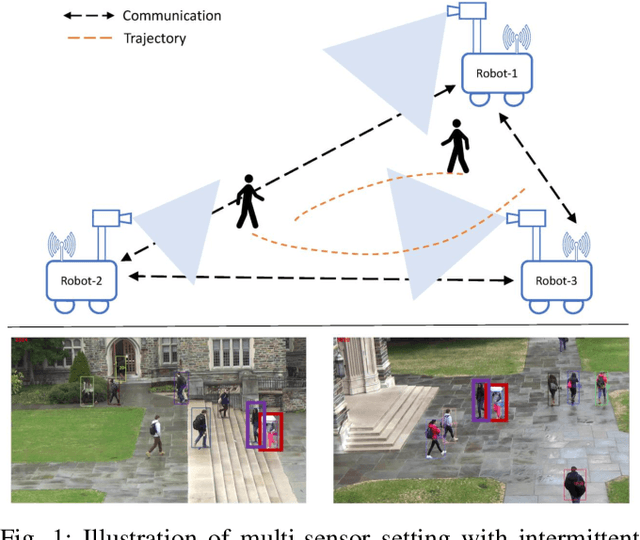

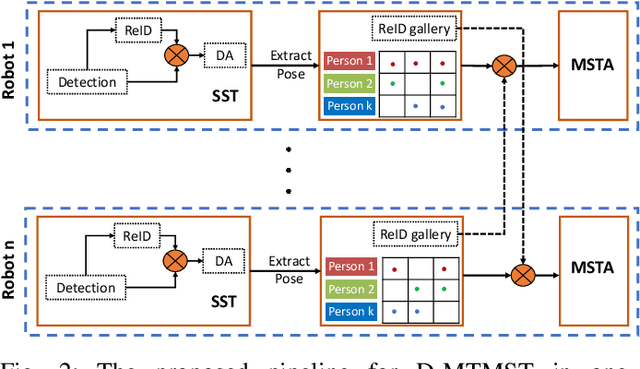

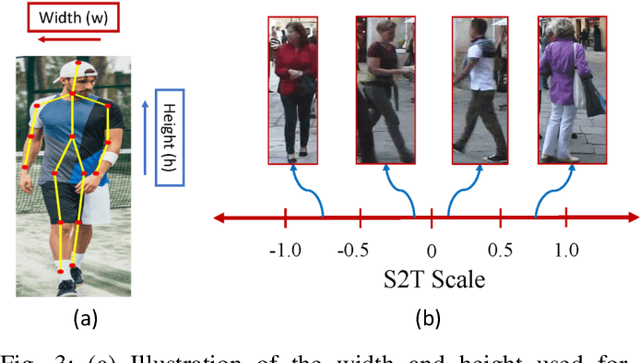

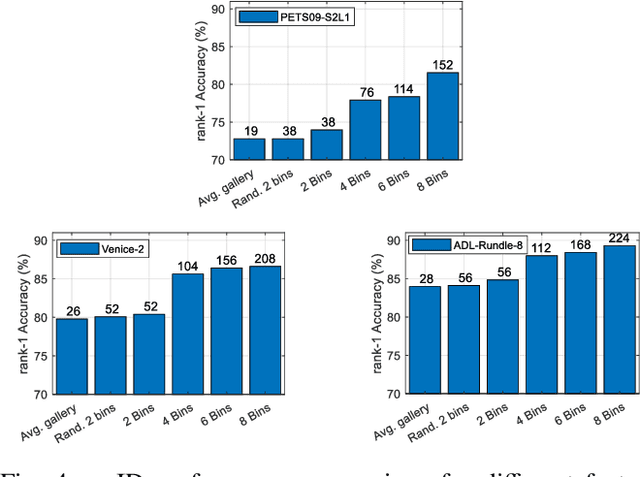

Orientation-Discriminative Feature Representation for Decentralized Pedestrian Tracking

Feb 26, 2022

Abstract: This paper focuses on the problem of decentralized pedestrian tracking using a sensor network. Traditional works on pedestrian tracking usually use a centralized framework, which becomes less practical for robotic applications due to limited communication bandwidth. Our paper proposes a communication-efficient, orientation-discriminative feature representation that characterizes pedestrian appearance information and can be shared among sensors. Building upon that representation, our work develops a cross-sensor track association approach to achieve decentralized tracking. Extensive evaluations are conducted on publicly available datasets, and the results show that our proposed approach leads to improved performance in multi-sensor tracking.

Detecting and Mapping Trees in Unstructured Environments with a Stereo Camera and Pseudo-Lidar

Mar 29, 2021

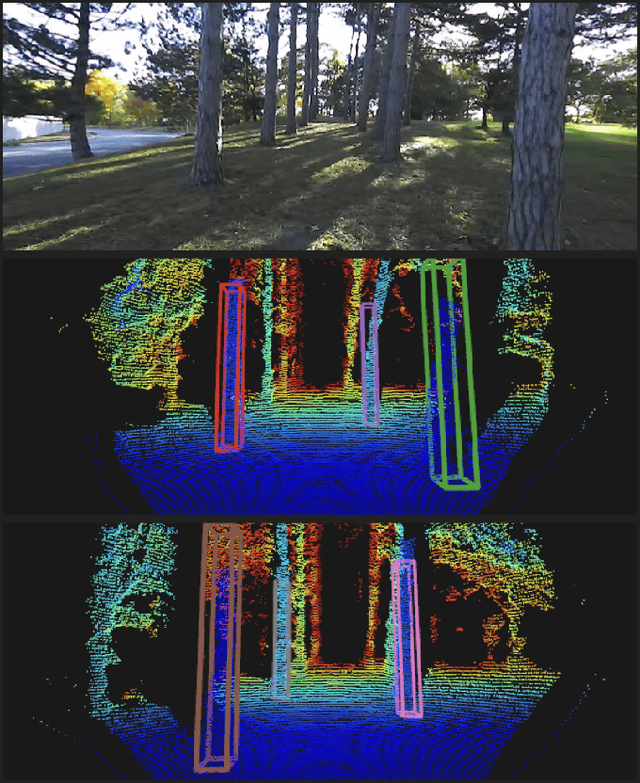

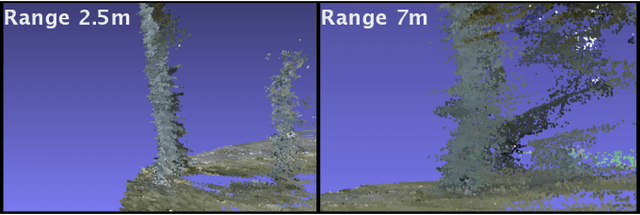

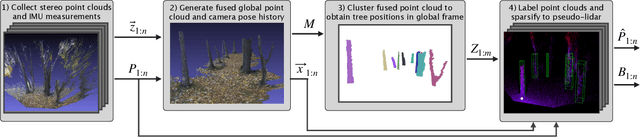

Abstract: We present a method for detecting and mapping trees in noisy stereo camera point clouds, using a learned 3-D object detector. Inspired by recent advancements in 3-D object detection using a pseudo-lidar representation for stereo data, we train a PointRCNN detector to recognize trees in forest-like environments. We generate detector training data with a novel automatic labeling process that clusters a fused global point cloud. This process annotates large stereo point cloud training data sets with minimal user supervision and, unlike previous pseudo-lidar detection pipelines, requires no 3-D ground truth from other sensors such as lidar. Our mapping system additionally uses a Kalman filter to associate detections and consistently estimate the positions and sizes of trees. We collect a data set for tree detection consisting of 8680 stereo point clouds, and validate our method on an outdoor test sequence. Our results demonstrate robust tree recognition in noisy stereo data at ranges of up to 7 meters, on 720p resolution images from a Stereolabs ZED 2 camera. Code and data are available at https://github.com/brian-h-wang/pseudolidar-tree-detection.
