Ian M. Mitchell

ROS-X-Habitat: Bridging the ROS Ecosystem with Embodied AI

Sep 17, 2021

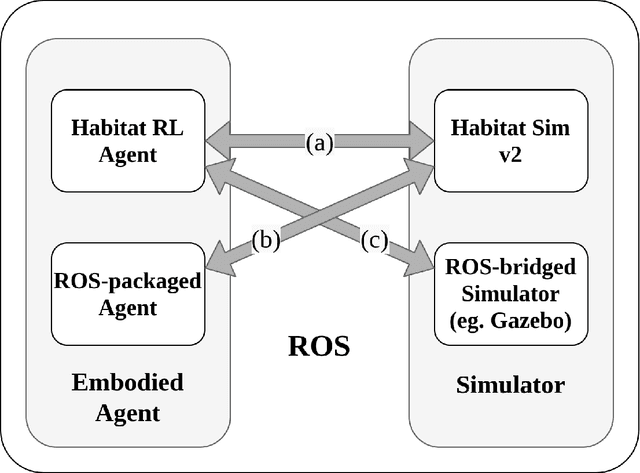

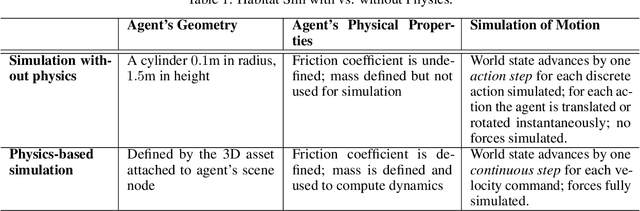

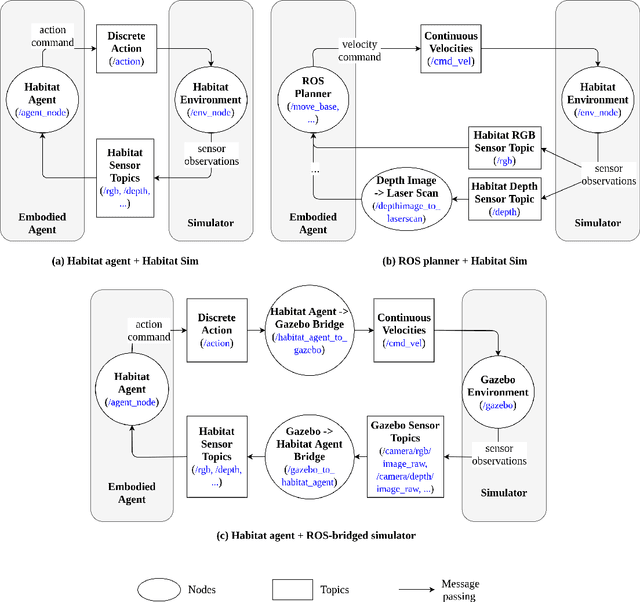

Abstract: We introduce ROS-X-Habitat, a software interface that bridges the AI Habitat platform for embodied reinforcement learning agents with other robotics resources via ROS. This interface not only offers standardized communication protocols between embodied agents and simulators, but also enables physics-based simulation. With this interface, roboticists are able to train their own Habitat RL agents in another simulation environment or to develop their own robotic algorithms inside Habitat Sim. Through in silico experiments, we demonstrate that ROS-X-Habitat has minimal impact on the navigation performance and simulation speed of Habitat agents; that a standard set of ROS mapping, planning and navigation tools can run in the Habitat simulator; and that a Habitat agent can run in the standard ROS simulator Gazebo.

Safe Motion Planning against Multimodal Distributions based on a Scenario Approach

Aug 05, 2021

Abstract: We present the design of a motion planning algorithm that ensures safety for an autonomous vehicle. In particular, we consider a multimodal distribution over uncertainties; for example, the uncertain predictions of future trajectories of surrounding vehicles reflect discrete decisions, such as turning or going straight at intersections. We develop a computationally efficient, scenario-based approach that solves the motion planning problem with high confidence given a quantifiable number of samples from the multimodal distribution. Our approach is based on two preprocessing steps, which 1) separate the samples into distinct clusters and 2) compute a bounding polytope for each cluster. We then approximately reformulate the motion planning problem as a mixed-integer problem using the polytopes. We demonstrate via simulation on the nuScenes dataset that our approach ensures safety with high probability in the presence of multimodal uncertainties, and is computationally more efficient and less conservative than a conventional scenario approach.

* Published in IEEE Control Systems Letters
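The two preprocessing steps described in the abstract can be illustrated with a minimal sketch. The abstract does not say which clustering method or polytope shape the authors use, so everything here is an assumption made for illustration: the function names (`cluster_samples`, `bounding_box`, `is_outside_all`), the distance-threshold clustering, and the choice of an axis-aligned box as the simplest bounding polytope.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def cluster_samples(samples: List[Point], max_gap: float) -> List[List[Point]]:
    """Group samples so that points within `max_gap` of each other share a cluster.

    Assumption: a simple distance-threshold (single-linkage) rule stands in
    for whatever clustering method the paper actually uses.
    """
    clusters: List[List[Point]] = []
    for p in samples:
        near, far = [], []
        for c in clusters:
            close = any(math.dist(p, q) <= max_gap for q in c)
            (near if close else far).append(c)
        # Merge the new point with every cluster it touches.
        merged = [p] + [q for c in near for q in c]
        clusters = far + [merged]
    return clusters


def bounding_box(cluster: List[Point]) -> Box:
    """Axis-aligned box around a cluster (assumed polytope shape)."""
    xs = [p[0] for p in cluster]
    ys = [p[1] for p in cluster]
    return (min(xs), min(ys), max(xs), max(ys))


def is_outside_all(point: Point, boxes: List[Box]) -> bool:
    """True if `point` lies strictly outside every box.

    Being outside a box is an OR of four linear inequalities; disjunctions
    like this are what binary variables encode in a mixed-integer program.
    """
    x, y = point
    return all(x < x0 or x > x1 or y < y0 or y > y1 for x0, y0, x1, y1 in boxes)


# Hypothetical samples of another vehicle's future position from two modes.
samples = [(0.0, 0.0), (0.5, 0.2), (0.2, 0.6), (5.0, 5.0), (5.4, 5.1)]
boxes = sorted(bounding_box(c) for c in cluster_samples(samples, max_gap=1.0))
```

With these hypothetical samples, the two modes end up in separate boxes, so a candidate ego position between them is not ruled out the way it would be by one box around all samples. This is only meant to show why per-cluster bounds can be less conservative; it makes no claim about the paper's confidence guarantees.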

Learning Motion Predictors for Smart Wheelchair using Autoregressive Sparse Gaussian Process

Jun 07, 2018

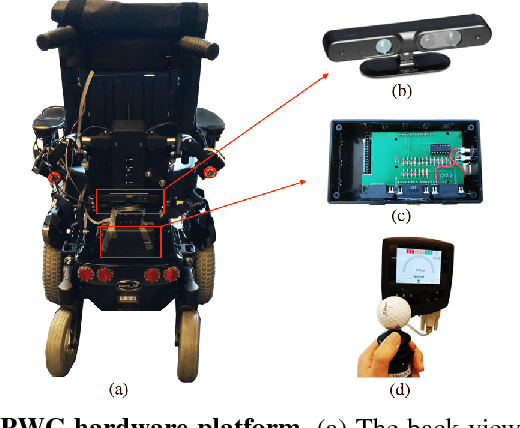

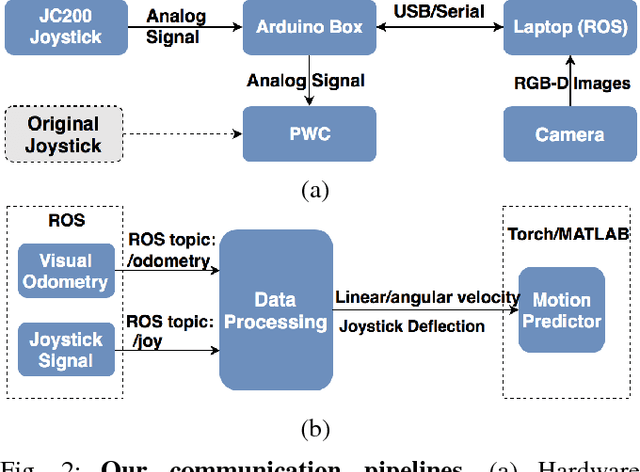

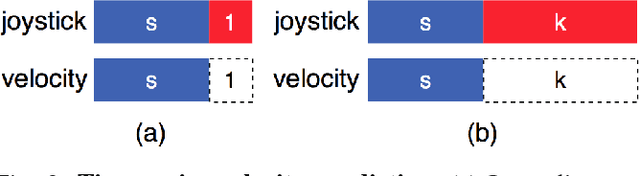

Abstract: Constructing a smart wheelchair on a commercially available powered wheelchair (PWC) platform avoids a host of seating, mechanical design and reliability issues but requires methods of predicting and controlling the motion of a device never intended for robotics. Analog joystick inputs are subject to black-box transformations which may produce intuitive and adaptable motion control for human operators, but complicate robotic control approaches; furthermore, installation of standard axle-mounted odometers on a commercial PWC is difficult. In this work, we present an integrated hardware and software system for predicting the motion of a commercial PWC platform that does not require any physical or electronic modification of the chair beyond plugging into an industry-standard auxiliary input port. This system uses an RGB-D camera and an Arduino interface board to capture motion data, including visual odometry and joystick signals, via ROS communication. Future motion is predicted using an autoregressive sparse Gaussian process model. We evaluate the proposed system on real-world short-term path prediction experiments. Experimental results demonstrate the system's efficacy when compared to a baseline neural network model.
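To make "autoregressive" concrete: such a model predicts the next motion value from a window of past values, so the training data are lagged windows paired with the value that follows. The sketch below builds only that dataset; the sparse Gaussian process itself is not shown. The function name `make_ar_dataset`, the choice of which signals are lagged, and the sample numbers are all hypothetical, since the abstract does not give these details.

```python
from typing import List, Sequence, Tuple


def make_ar_dataset(
    velocities: Sequence[float],
    joystick: Sequence[float],
    order: int,
) -> Tuple[List[List[float]], List[float]]:
    """Build (inputs, targets) pairs for an autoregressive regressor.

    Each input row holds the `order` most recent velocities followed by the
    most recent joystick reading; the target is the next velocity.
    Assumption: this particular feature layout is illustrative only.
    """
    if len(velocities) != len(joystick):
        raise ValueError("velocities and joystick must have equal length")
    inputs: List[List[float]] = []
    targets: List[float] = []
    for t in range(order, len(velocities)):
        past = list(velocities[t - order:t])    # v[t-order] .. v[t-1]
        inputs.append(past + [joystick[t - 1]])  # latest command
        targets.append(velocities[t])            # value to predict
    return inputs, targets


# Hypothetical logged signals (forward speed and joystick deflection).
v = [0.0, 0.1, 0.3, 0.6, 1.0]
u = [1.0, 1.0, 1.0, 1.0, 1.0]
X, y = make_ar_dataset(v, u, order=2)
```

Any regressor, including a sparse Gaussian process, could then be fit on `X` and `y`. Predicting several steps ahead would feed each prediction back in as the newest lagged value.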

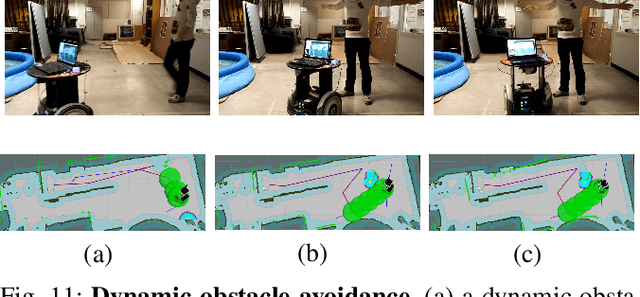

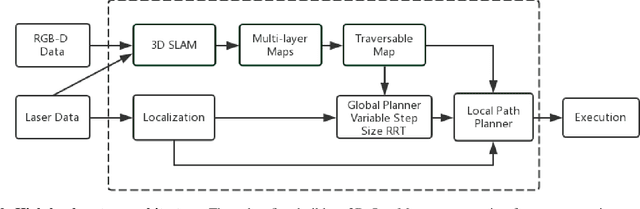

Autonomous Mobile Robot Navigation in Uneven and Unstructured Indoor Environments

Oct 28, 2017

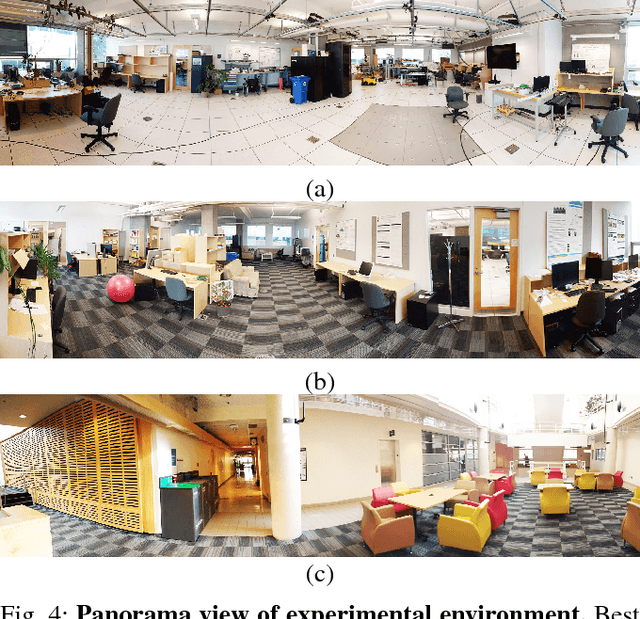

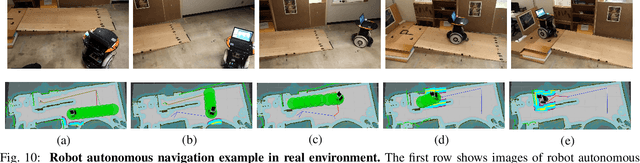

Abstract: Robots are increasingly operating in indoor environments designed for and shared with people. However, robots working safely and autonomously in uneven and unstructured environments still face great challenges. Many modern indoor environments are designed with wheelchair accessibility in mind. This presents an opportunity for wheeled robots to navigate through sloped areas while avoiding staircases. In this paper, we present an integrated software and hardware system for autonomous mobile robot navigation in uneven and unstructured indoor environments. This modular and reusable software framework incorporates capabilities of perception and navigation. Our robot first builds a 3D OctoMap representation of the uneven environment, using wheel odometry, 2D laser and RGB-D data for the 3D mapping. Then we project multilayer 2D occupancy maps from the OctoMap to generate the traversable map based on layer differences. The safe traversable map serves as the input for efficient autonomous navigation. Furthermore, we employ a variable-step-size Rapidly-exploring Random Tree (RRT) that adjusts the step size automatically, eliminating the need to tune step sizes for each environment. We conduct extensive experiments in simulation and in the real world, demonstrating the efficacy and efficiency of our system.
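The projection step can be pictured as slicing a 3D occupancy map into height bands and comparing the resulting 2D layers. The sketch below does not use the OctoMap library; it stands in a plain set of integer voxel coordinates for the map, and the names `project_layer` and `layer_difference` are hypothetical. How the paper turns layer differences into a traversability decision is not stated in the abstract, so the sketch stops at computing the difference.

```python
from typing import Set, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z) index of an occupied voxel
Cell = Tuple[int, int]        # (x, y) index of a 2D grid cell


def project_layer(voxels: Set[Voxel], z_min: int, z_max: int) -> Set[Cell]:
    """Project occupied voxels with z_min <= z <= z_max onto the 2D grid."""
    return {(x, y) for x, y, z in voxels if z_min <= z <= z_max}


def layer_difference(lower: Set[Cell], upper: Set[Cell]) -> Set[Cell]:
    """Cells occupied in the lower layer but free in the upper layer."""
    return lower - upper


# Hypothetical scene: a wall at x=0 spanning three height levels, and a
# ramp-like surface that is at height 0 at x=2 and height 1 at x=3.
voxels = {(0, 0, 0), (0, 0, 1), (0, 0, 2), (2, 0, 0), (3, 0, 1)}

low = project_layer(voxels, 0, 0)
high = project_layer(voxels, 1, 1)

changed = layer_difference(low, high)  # occupied low, free above
persistent = low & high                # occupied in both layers
```

In this toy scene the wall cell persists across layers while the ramp cell appears only in the lower one, which gives an intuition for why differences between layers carry information about surface shape.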
