Hyunseok Oh

Beyond designer's knowledge: Generating materials design hypotheses via large language models

Sep 10, 2024

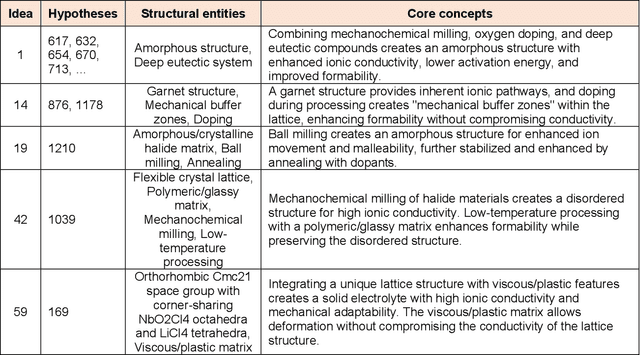

Abstract: Materials design often relies on human-generated hypotheses, a process inherently limited by cognitive constraints such as knowledge gaps and limited ability to integrate and extract knowledge implications, particularly when multidisciplinary expertise is required. This work demonstrates that large language models (LLMs), coupled with prompt engineering, can effectively generate non-trivial materials hypotheses by integrating scientific principles from diverse sources without explicit design guidance by human experts. These include design ideas for high-entropy alloys with superior cryogenic properties and halide solid electrolytes with enhanced ionic conductivity and formability. These design ideas have been experimentally validated in high-impact publications in 2023 not available in the LLM training data, demonstrating the LLM's ability to generate highly valuable and realizable innovative ideas not established in the literature. Our approach primarily leverages materials system charts encoding processing-structure-property relationships, enabling more effective data integration by condensing key information from numerous papers, and evaluation and categorization of numerous hypotheses for human cognition, both through the LLM. This LLM-driven approach opens the door to new avenues of artificial intelligence-driven materials discovery by accelerating design, democratizing innovation, and expanding capabilities beyond the designer's direct knowledge.

Sign Gradient Descent-based Neuronal Dynamics: ANN-to-SNN Conversion Beyond ReLU Network

Jul 01, 2024

Abstract: Spiking neural networks (SNNs) are studied in multidisciplinary domains to (i) enable orders-of-magnitude more energy-efficient AI inference and (ii) computationally simulate neuro-scientific mechanisms. The lack of discrete theory obstructs the practical application of SNNs by limiting their performance and nonlinearity support. We present a new optimization-theoretic perspective of the discrete dynamics of spiking neurons. We prove that a discrete dynamical system of simple integrate-and-fire models approximates the sub-gradient method over unconstrained optimization problems. We practically extend our theory to introduce a novel sign gradient descent (signGD)-based neuronal dynamics that can (i) approximate diverse nonlinearities beyond ReLU and (ii) advance ANN-to-SNN conversion performance in low time steps. Experiments on large-scale datasets show that our technique (i) achieves state-of-the-art performance in ANN-to-SNN conversion and (ii) is the first to convert new DNN architectures, e.g., ConvNext, MLP-Mixer, and ResMLP. We publicly share our source code at https://github.com/snuhcs/snn_signgd.
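For readers unfamiliar with the optimizer named in this abstract, the following is a minimal sketch of generic sign gradient descent on a one-dimensional quadratic. It is not the paper's neuronal dynamics or its released code; the function names (`sign`, `sign_gd`), the objective, and the step-size schedule are all illustrative assumptions.

```python
from typing import Callable


def sign(v: float) -> int:
    """Return -1, 0, or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def sign_gd(
    grad: Callable[[float], float],
    x0: float,
    lr: float = 0.5,
    steps: int = 50,
    decay: float = 0.9,
) -> float:
    """Generic sign gradient descent (hypothetical helper, not the paper's method).

    Each update uses only the sign of the gradient, so the step length is set
    entirely by the learning rate, which shrinks geometrically every iteration.
    """
    x = x0
    for _ in range(steps):
        x -= lr * sign(grad(x))  # move by a fixed-size step against the gradient sign
        lr *= decay              # decay the step so the iterate can settle
    return x


# Illustrative objective (an assumption): f(x) = (x - 3)^2, with gradient 2 * (x - 3).
x_star = sign_gd(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(x_star)
```

Because the update ignores the gradient magnitude, the iterate overshoots and oscillates around the minimizer; the decaying step size is what lets it settle near `x = 3` in this toy case.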

Papez: Resource-Efficient Speech Separation with Auditory Working Memory

Jul 01, 2024

Abstract: Transformer-based models recently reached state-of-the-art single-channel speech separation accuracy; however, their extreme computational load makes it difficult to deploy them in resource-constrained mobile or IoT devices. We thus present Papez, a lightweight and computation-efficient single-channel speech separation model. Papez is based on three key techniques. We first replace the inter-chunk Transformer with small-sized auditory working memory. Second, we adaptively prune the input tokens that do not need further processing. Finally, we reduce the number of parameters through the recurrent transformer. Our extensive evaluation shows that Papez achieves the best resource and accuracy tradeoffs by a large margin. We publicly share our source code at https://github.com/snuhcs/Papez.

On the Importance of Critical Period in Multi-stage Reinforcement Learning

Aug 09, 2022

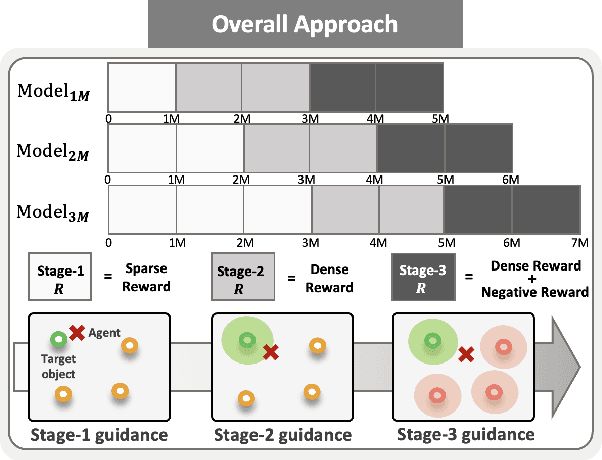

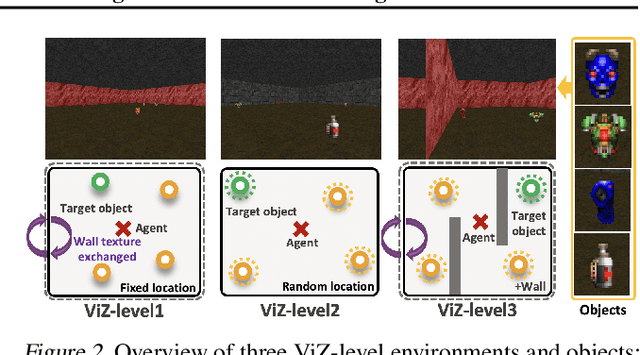

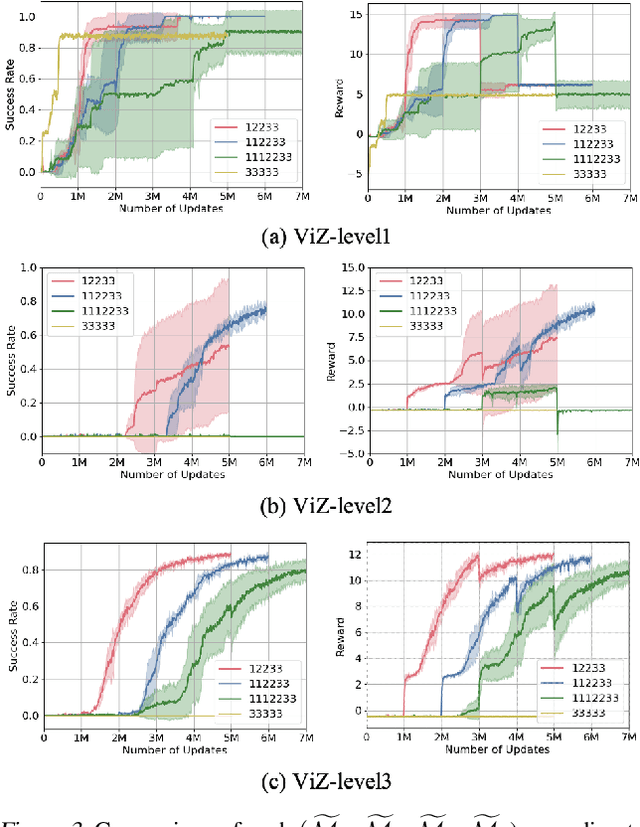

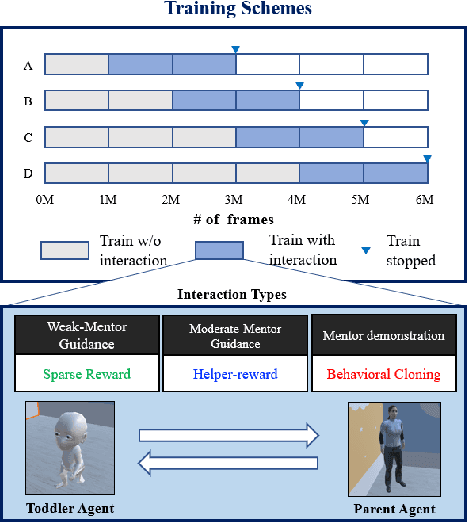

Abstract: The initial years of an infant's life are known as the critical period, during which the overall development of learning performance is significantly impacted due to neural plasticity. In recent studies, an AI agent, with a deep neural network mimicking mechanisms of actual neurons, exhibited a learning period similar to the human critical period. Especially during this initial period, the appropriate stimuli play a vital role in developing learning ability. However, transforming human cognitive bias into an appropriate shaping reward is quite challenging, and prior works on the critical period do not focus on finding the appropriate stimulus. To take a step further, we propose multi-stage reinforcement learning to emphasize finding the "appropriate stimulus" around the critical period. Inspired by humans' early cognitive-developmental stage, we use multi-stage guidance near the critical period, and demonstrate the appropriate shaping reward (stage-2 guidance) in terms of the AI agent's performance, efficiency, and stability.

Toddler-Guidance Learning: Impacts of Critical Period on Multimodal AI Agents

Jan 12, 2022

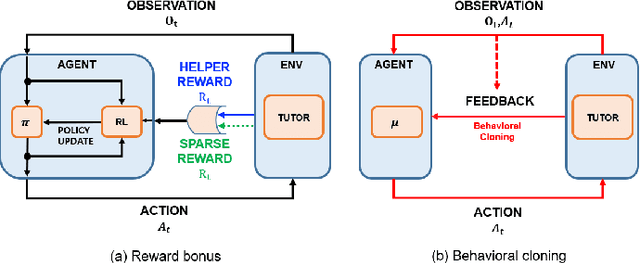

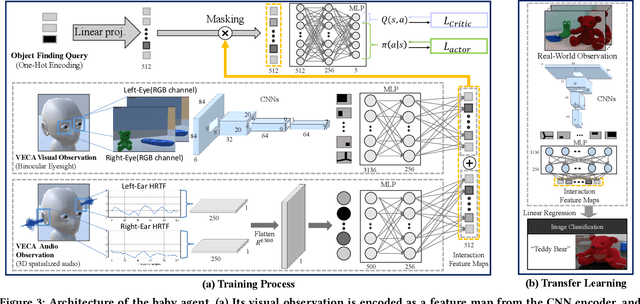

Abstract: Critical periods are phases during which a toddler's brain develops in spurts. To promote children's cognitive development, proper guidance is critical in this stage. However, it is not clear whether such a critical period also exists for the training of AI agents. Similar to human toddlers, well-timed guidance and multimodal interactions might significantly enhance the training efficiency of AI agents as well. To validate this hypothesis, we adapt this notion of critical periods to learning in AI agents and investigate the critical period in the virtual environment for AI agents. We formalize the critical period and Toddler-guidance learning in the reinforcement learning (RL) framework. Then, we build a toddler-like environment with the VECA toolkit to mimic human toddlers' learning characteristics. We study three discrete levels of mutual interaction: weak-mentor guidance (sparse reward), moderate mentor guidance (helper-reward), and mentor demonstration (behavioral cloning). We also introduce the EAVE dataset consisting of 30,000 real-world images to fully reflect the toddler's viewpoint. We evaluate the impact of critical periods on AI agents from two perspectives: how and when they are guided best in both uni- and multimodal learning. Our experimental results show that both uni- and multimodal agents with moderate mentor guidance and a critical period at 1 million and 2 million training steps show a noticeable improvement. We validate these results with transfer learning on the EAVE dataset and find the same performance improvement for the same critical period and guidance.

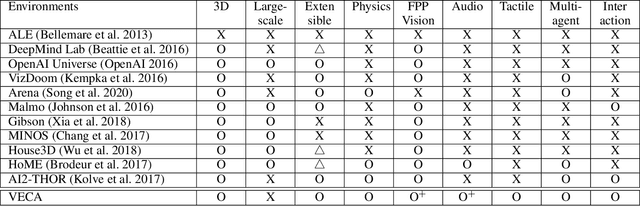

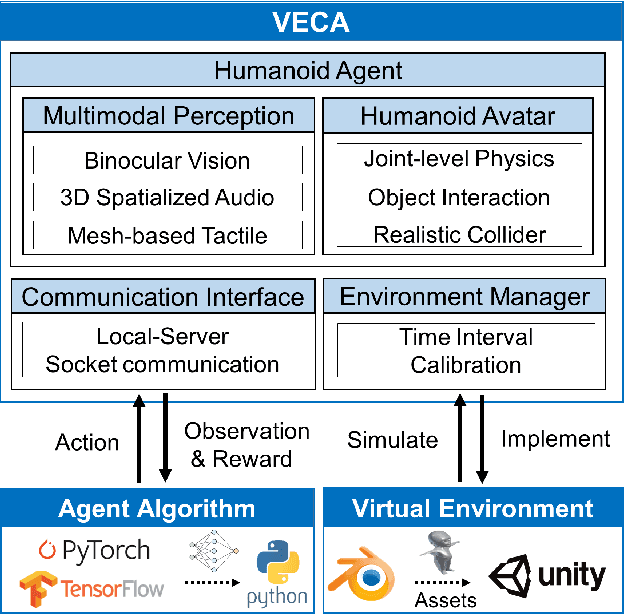

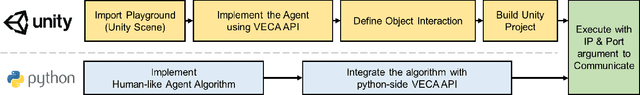

VECA : A Toolkit for Building Virtual Environments to Train and Test Human-like Agents

May 03, 2021

Abstract: Building human-like agents, which aim to learn and think like human intelligence, has long been an important research topic in AI. To train and test human-like agents, we need an environment that exposes the agent to rich multimodal perception and allows comprehensive interactions for the agent, while also being easily extensible to develop custom tasks. However, existing approaches do not support comprehensive interaction with the environment or lack variety in modalities. Also, most of the approaches make it difficult or even impossible to implement custom tasks. In this paper, we propose a novel VR-based toolkit, VECA, which enables building fruitful virtual environments to train and test human-like agents. In particular, VECA provides a humanoid agent and an environment manager, enabling the agent to receive rich human-like perception and perform comprehensive interactions. To motivate VECA, we also provide 24 interactive tasks, which represent (but are not limited to) four essential aspects in early human development: joint-level locomotion and control, understanding contexts of objects, multimodal learning, and multi-agent learning. To show the usefulness of VECA for training and testing human-like learning agents, we conduct experiments on VECA and show that users can build challenging tasks for engaging human-like algorithms, and that the features supported by VECA are critical for training human-like agents.

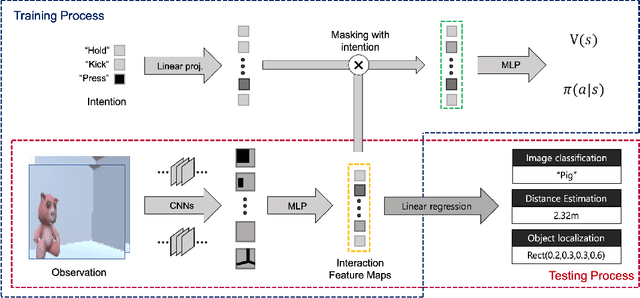

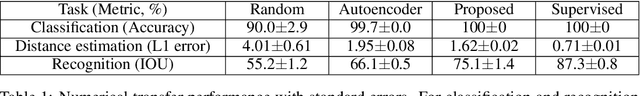

Learning task-agnostic representation via toddler-inspired learning

Jan 27, 2021

Abstract: One of the inherent limitations of current AI systems, stemming from passive learning mechanisms (e.g., supervised learning), is that they perform well on labeled datasets but cannot deduce knowledge on their own. To tackle this problem, we derive inspiration from a highly intentional learning system via action: the toddler. Inspired by the toddler's learning procedure, we design an interactive agent that can learn and store task-agnostic visual representation while exploring and interacting with objects in the virtual environment. Experimental results show that the representation obtained in this way extends to various vision tasks such as image classification, object localization, and distance estimation. Specifically, the proposed model achieved 100% and 75.1% accuracy and 1.62% relative error, respectively, which is noticeably better than an autoencoder-based model (99.7%, 66.1%, 1.95%) and comparable with supervised models (100%, 87.3%, 0.71%).
