Huixuan Chi

Modeling Spatiotemporal Periodicity and Collaborative Signal for Local-Life Service Recommendation

Sep 22, 2023

Abstract: Online local-life service platforms provide services such as nearby daily essentials and food delivery to hundreds of millions of users. Unlike other types of recommender systems, local-life service recommendation has two distinguishing characteristics: (1) spatiotemporal periodicity, meaning that a user's preferences for items vary across locations and times; and (2) spatiotemporal collaborative signal, meaning that similar users have similar preferences at specific locations and times. However, most existing methods either focus only on the spatiotemporal contexts in sequences or model user-item interactions in graphs without spatiotemporal contexts. To address this issue, we design a new method named SPCS. Specifically, we propose a novel spatiotemporal graph transformer (SGT) layer, which explicitly encodes relative spatiotemporal contexts and aggregates information from multi-hop neighbors to unify spatiotemporal periodicity and collaborative signal. Extensive experiments on both public and industrial datasets validate the state-of-the-art performance of SPCS.

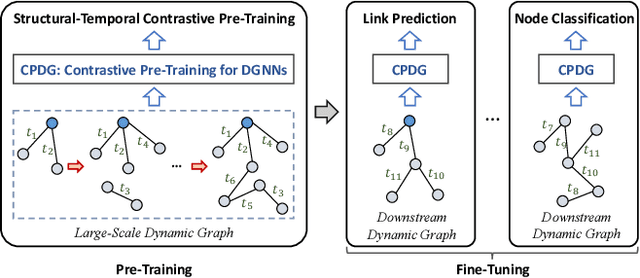

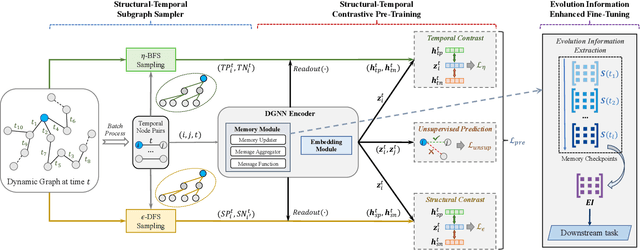

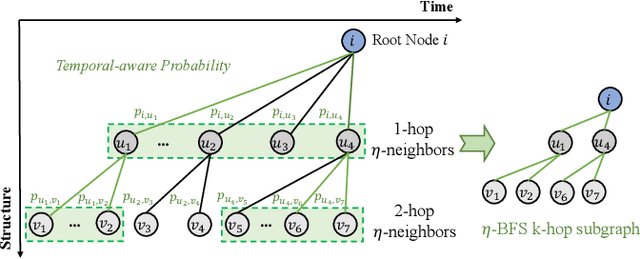

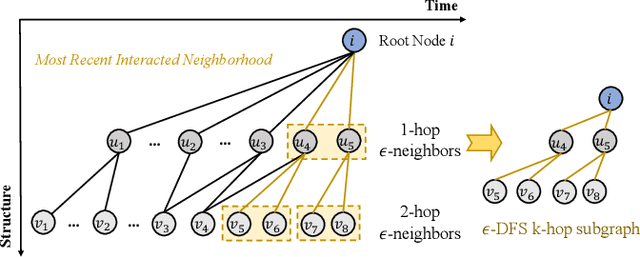

CPDG: A Contrastive Pre-Training Method for Dynamic Graph Neural Networks

Jul 24, 2023

Abstract: Dynamic graph data mining has gained popularity in recent years due to the rich information contained in dynamic graphs and their widespread use in the real world. Despite the advances in dynamic graph neural networks (DGNNs), this rich information and the diversity of downstream tasks make it difficult to apply DGNNs in industrial scenarios. To this end, we propose to address these difficulties through pre-training and present the Contrastive Pre-Training Method for Dynamic Graph Neural Networks (CPDG). CPDG tackles the challenges of pre-training for DGNNs, including generalization capability and long-short term modeling capability, through a flexible structural-temporal subgraph sampler along with structural-temporal contrastive pre-training schemes. Extensive experiments on both large-scale research and industrial dynamic graph datasets show that CPDG outperforms existing methods in dynamic graph pre-training for various downstream tasks under three transfer settings.
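
As a rough illustration of what a contrastive objective looks like in general, the sketch below computes a generic InfoNCE-style loss for one anchor embedding. This is an assumption for illustration only: the abstract does not state which contrastive loss CPDG uses, and the function name `info_nce`, the `temperature` parameter, and the toy embeddings are all hypothetical.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def info_nce(anchor, positive, negatives, temperature=0.5):
    """Generic InfoNCE loss for one anchor (illustrative, not CPDG's actual loss).

    The loss is low when the anchor is more similar to the positive sample
    than to any of the negative samples.
    """
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over all candidates.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    # Negative log-probability of picking the positive sample.
    return log_sum - logits[0]


# Toy embeddings (hypothetical): an aligned pair versus a mismatched pair.
aligned = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
mismatched = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

In a pre-training setting, the positive would typically come from a subgraph sampled around the same node and the negatives from other nodes, so `aligned` being smaller than `mismatched` is the behavior the objective rewards.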

Long Short-Term Preference Modeling for Continuous-Time Sequential Recommendation

Aug 01, 2022

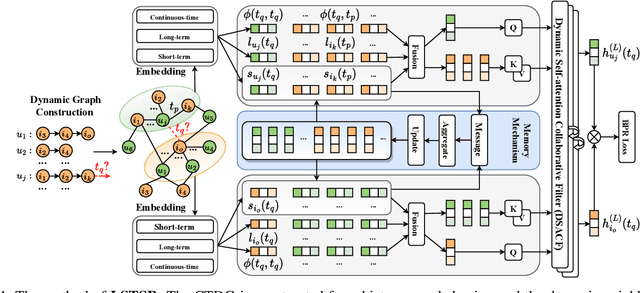

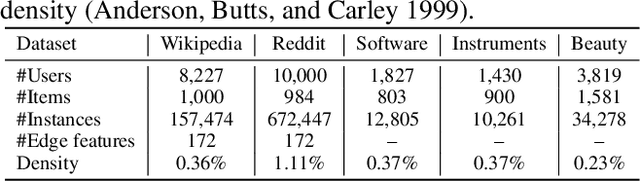

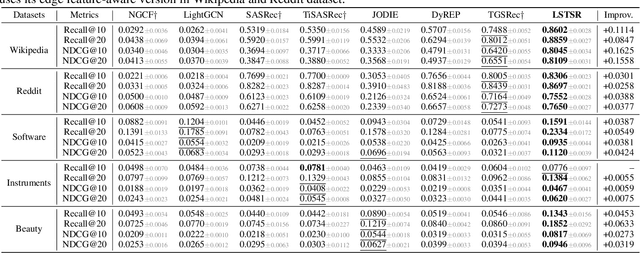

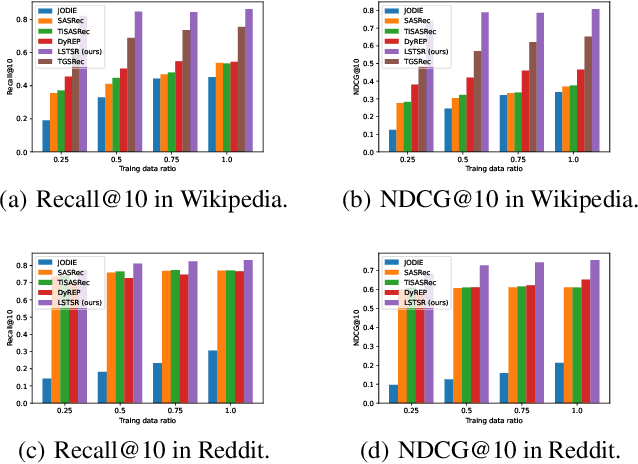

Abstract: Modeling the evolution of user preference is essential in recommender systems. Recently, dynamic graph-based methods have been studied and have achieved state-of-the-art results for recommendation, most of which focus on users' stable long-term preferences. However, in real-world scenarios, a user's short-term preference evolves dynamically over time. Although there exist sequential methods that attempt to capture it, how to model the evolution of short-term preference with dynamic graph-based methods has not yet been well addressed. In particular: 1) existing methods do not explicitly encode and capture the evolution of short-term preference as sequential methods do; 2) simply using the last few interactions is not enough to model the changing trend. In this paper, we propose Long Short-Term Preference Modeling for Continuous-Time Sequential Recommendation (LSTSR) to capture the evolution of short-term preference on dynamic graphs. Specifically, we explicitly encode short-term preference and optimize it via a memory mechanism, which has three key operations: Message, Aggregate and Update. Our memory mechanism can not only store one-hop information, but can also be triggered by new interactions online. Extensive experiments on five public datasets show that LSTSR consistently outperforms many state-of-the-art recommendation methods across various lines.
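
The three memory operations named in the abstract can be sketched with a toy per-node memory. This is a minimal sketch under stated assumptions, not the LSTSR implementation: the time-decayed message, the mean aggregator, the fixed blend weight `ALPHA` (standing in for a learned updater), and all function names are hypothetical choices made for illustration.

```python
from collections import defaultdict

DIM = 2      # hypothetical memory size
ALPHA = 0.5  # hypothetical blend weight used by Update


def message(other_memory, edge_feature, delta_t):
    """Message: the counterpart's memory, decayed by elapsed time, plus the edge feature."""
    decay = 1.0 / (1.0 + delta_t)
    return [decay * m + f for m, f in zip(other_memory, edge_feature)]


def aggregate(messages):
    """Aggregate: element-wise mean of all messages a node received in the batch."""
    return [sum(col) / len(messages) for col in zip(*messages)]


def update(old_memory, aggregated):
    """Update: blend the old memory with the aggregated message."""
    return [(1 - ALPHA) * o + ALPHA * a for o, a in zip(old_memory, aggregated)]


def process_batch(memory, last_seen, interactions):
    """Apply Message, Aggregate and Update for a batch of (user, item, time, feature)."""
    inbox = defaultdict(list)
    for user, item, t, feat in interactions:
        # Each interaction sends one message to both of its endpoints.
        for node, other in ((user, item), (item, user)):
            delta_t = t - last_seen.get(node, t)  # 0 for a node never seen before
            inbox[node].append(message(memory[other], feat, delta_t))
    # Memories are written only after all messages are computed from the old state.
    for node, msgs in inbox.items():
        memory[node] = update(memory[node], aggregate(msgs))
    for user, item, t, _ in interactions:
        for node in (user, item):
            last_seen[node] = max(last_seen.get(node, t), t)


# Usage: memories start at zero and change whenever a new interaction arrives.
memory = defaultdict(lambda: [0.0] * DIM)
last_seen = {}
process_batch(memory, last_seen, [("u1", "i1", 1.0, [1.0, 0.0])])
process_batch(memory, last_seen, [("u1", "i2", 3.0, [0.0, 1.0])])
```

Because each call to `process_batch` only touches the nodes involved in the new interactions, the memory can be refreshed as interactions stream in, which is the sense in which such a mechanism can be triggered online.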

Residual Network and Embedding Usage: New Tricks of Node Classification with Graph Convolutional Networks

May 21, 2021

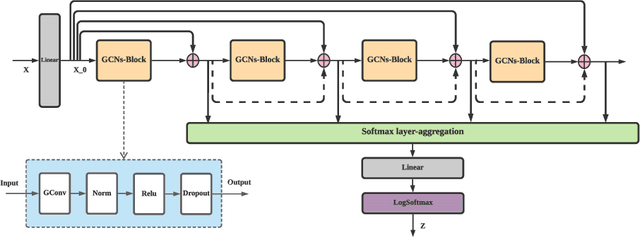

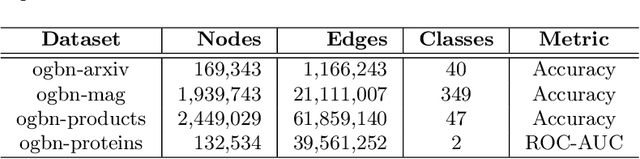

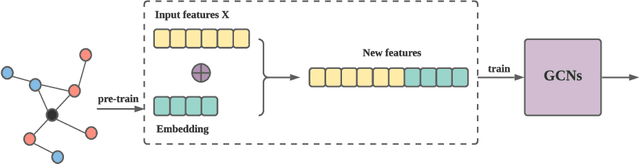

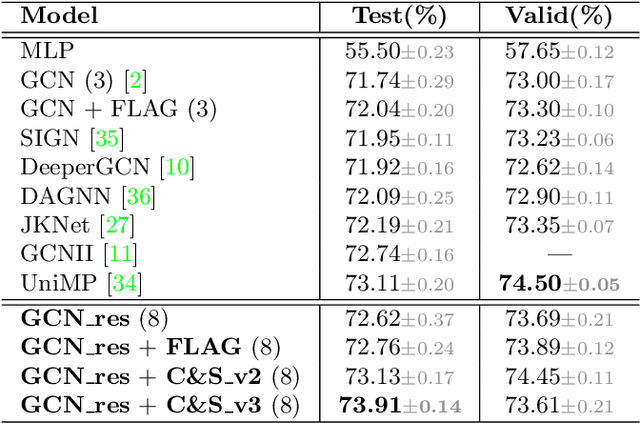

Abstract: Graph Convolutional Networks (GCNs) and subsequent variants have been proposed to solve tasks on graphs, especially node classification tasks. In the literature, however, most tricks or techniques are either briefly mentioned as implementation details or only visible in source code. In this paper, we first summarize some existing effective tricks used in mini-batch training of GCNs. Based on this, we propose two novel tricks, named GCN_res Framework and Embedding Usage, which leverage residual networks and pre-trained embeddings to improve the baselines' test accuracy on different datasets. Experiments on Open Graph Benchmark (OGB) show that, by combining these techniques, the test accuracy of various GCNs increases by 1.21% to 2.84%. We open source our implementation at https://github.com/ytchx1999/PyG-OGB-Tricks.
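
To make the idea of a residual connection in a graph convolution concrete, here is a minimal pure-Python sketch. It is an assumption-laden illustration rather than the GCN_res Framework itself, whose details are not given in the abstract: it uses mean aggregation with self-loops instead of the symmetric normalization of a standard GCN, and the names `gcn_layer` and `residual_gcn_layer` are hypothetical.

```python
def gcn_layer(adj, h, weight):
    """Simplified graph convolution: ReLU(mean over self and neighbors of h, times weight).

    adj[i] lists the neighbors of node i; h[i] is the feature vector of node i.
    """
    out = []
    for i in range(len(h)):
        nodes = [i] + adj[i]  # include a self-loop
        agg = [sum(h[j][k] for j in nodes) / len(nodes) for k in range(len(h[0]))]
        z = [
            sum(agg[k] * weight[k][m] for k in range(len(agg)))
            for m in range(len(weight[0]))
        ]
        out.append([max(0.0, v) for v in z])  # ReLU
    return out


def residual_gcn_layer(adj, h, weight):
    """Add the layer input back to the convolution output (skip connection).

    Requires a square weight matrix so input and output dimensions match.
    """
    conv = gcn_layer(adj, h, weight)
    return [[c + x for c, x in zip(c_row, h_row)] for c_row, h_row in zip(conv, h)]


# Usage on a two-node graph with identity weights (toy values).
adj = [[1], [0]]
features = [[1.0, 0.0], [0.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
plain = gcn_layer(adj, features, identity)
with_skip = residual_gcn_layer(adj, features, identity)
```

In this toy graph the plain layer averages the two nodes into identical vectors, while the residual variant keeps each node's own features distinguishable, which is the usual motivation for skip connections in deeper GCNs.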
