Hui Guo

Diffusion Epistemic Uncertainty with Asymmetric Learning for Diffusion-Generated Image Detection

Jan 21, 2026

Abstract: The rapid progress of diffusion models highlights the growing need for detecting generated images. Previous research demonstrates that incorporating diffusion-based measurements, such as reconstruction error, can enhance the generalizability of detectors. However, ignoring the differing impacts of aleatoric and epistemic uncertainty on reconstruction error can undermine detection performance. Aleatoric uncertainty, arising from inherent data noise, creates ambiguity that impedes accurate detection of generated images. As it reflects random variations within the data (e.g., noise in natural textures), it does not help distinguish generated images. In contrast, epistemic uncertainty, which represents the model's lack of knowledge about unfamiliar patterns, supports detection. In this paper, we propose a novel framework, Diffusion Epistemic Uncertainty with Asymmetric Learning (DEUA), for detecting diffusion-generated images. We introduce Diffusion Epistemic Uncertainty (DEU) estimation via the Laplace approximation to assess the proximity of data to the manifold of diffusion-generated samples. Additionally, an asymmetric loss function is introduced to train a balanced classifier with larger margins, further enhancing generalizability. Extensive experiments on large-scale benchmarks validate the state-of-the-art performance of our method.

nncase: An End-to-End Compiler for Efficient LLM Deployment on Heterogeneous Storage Architectures

Dec 25, 2025

Abstract: The efficient deployment of large language models (LLMs) is hindered by memory architecture heterogeneity, where traditional compilers suffer from fragmented workflows and high adaptation costs. We present nncase, an open-source, end-to-end compilation framework designed to unify optimization across diverse targets. Central to nncase is an e-graph-based term rewriting engine that mitigates the phase ordering problem, enabling global exploration of computation and data movement strategies. The framework integrates three key modules: Auto Vectorize for adapting to heterogeneous computing units, Auto Distribution for searching parallel strategies with cost-aware communication optimization, and Auto Schedule for maximizing on-chip cache locality. Furthermore, a buffer-aware Codegen phase ensures efficient kernel instantiation. Evaluations show that nncase outperforms mainstream frameworks like MLC LLM and Intel IPEX on Qwen3 series models and achieves performance comparable to the hand-optimized llama.cpp on CPUs, demonstrating the viability of automated compilation for high-performance LLM deployment. The source code is available at https://github.com/kendryte/nncase.

DCD: A Semantic Segmentation Model for Fetal Ultrasound Four-Chamber View

Jun 10, 2025

Abstract: Accurate segmentation of anatomical structures in the apical four-chamber (A4C) view of fetal echocardiography is essential for early diagnosis and prenatal evaluation of congenital heart disease (CHD). However, precise segmentation remains challenging due to ultrasound artifacts, speckle noise, anatomical variability, and boundary ambiguity across different gestational stages. To reduce the workload of sonographers and enhance segmentation accuracy, we propose DCD, an advanced deep learning-based model for automatic segmentation of key anatomical structures in the fetal A4C view. Our model incorporates a Dense Atrous Spatial Pyramid Pooling (Dense ASPP) module, enabling superior multi-scale feature extraction, and a Convolutional Block Attention Module (CBAM) to enhance adaptive feature representation. By effectively capturing both local and global contextual information, DCD achieves precise and robust segmentation, contributing to improved prenatal cardiac assessment.

A Self-Learning Multimodal Approach for Fake News Detection

Dec 08, 2024

Abstract: The rapid growth of social media has resulted in an explosion of online news content, leading to a significant increase in the spread of misleading or false information. While machine learning techniques have been widely applied to detect fake news, the scarcity of labeled datasets remains a critical challenge. Misinformation frequently appears as paired text and images, where a news article or headline is accompanied by related visuals. In this paper, we introduce a self-learning multimodal model for fake news classification. The model leverages contrastive learning, a robust method for feature extraction that operates without requiring labeled data, and integrates the strengths of Large Language Models (LLMs) to jointly analyze both text and image features. LLMs excel at this task due to their ability to process diverse linguistic data drawn from extensive training corpora. Our experimental results on a public dataset demonstrate that the proposed model outperforms several state-of-the-art classification approaches, achieving over 85% accuracy, precision, recall, and F1-score. These findings highlight the model's effectiveness in tackling the challenges of multimodal fake news detection.
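As a rough illustration of how contrastive learning can use paired text and images without labels, the sketch below computes an InfoNCE-style loss in one direction (text to image). The abstract does not state which contrastive objective the paper uses, so the function name `info_nce_loss`, the `temperature` value, and the toy embeddings are all assumptions made for illustration.

```python
import math


def _normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def info_nce_loss(text_embs, image_embs, temperature=0.1):
    """Text-to-image InfoNCE loss (hypothetical helper, not the paper's code).

    text_embs[i] and image_embs[i] are assumed to come from the same news item;
    every other image in the batch acts as a negative for text i.
    """
    texts = [_normalize(v) for v in text_embs]
    images = [_normalize(v) for v in image_embs]
    total = 0.0
    for i, text in enumerate(texts):
        # Temperature-scaled cosine similarity between text i and every image.
        logits = [sum(a * b for a, b in zip(text, img)) / temperature
                  for img in images]
        # Numerically stable log-sum-exp for the softmax denominator.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        # Negative log-probability of picking the matching image.
        total += log_denominator - logits[i]
    return total / len(texts)


# Toy batch: two text embeddings and their image embeddings.
texts = [[1.0, 0.0], [0.0, 1.0]]
matched = info_nce_loss(texts, [[1.0, 0.0], [0.0, 1.0]])
mismatched = info_nce_loss(texts, [[0.0, 1.0], [1.0, 0.0]])
```

Here `matched` comes out much smaller than `mismatched`, which is the signal that pulls embeddings of the same item together and pushes other pairs apart; no class labels are involved.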

Learning from Noisy Labels via Conditional Distributionally Robust Optimization

Nov 26, 2024

Abstract: While crowdsourcing has emerged as a practical solution for labeling large datasets, it presents a significant challenge in learning accurate models due to noisy labels from annotators with varying levels of expertise. Existing methods typically estimate the true label posterior, conditioned on the instance and noisy annotations, to infer true labels or adjust loss functions. These estimates, however, often overlook potential misspecification in the true label posterior, which can degrade model performance, especially in high-noise scenarios. To address this issue, we investigate learning from noisy annotations with an estimated true label posterior through the framework of conditional distributionally robust optimization (CDRO). We propose formulating the problem as minimizing the worst-case risk within a distance-based ambiguity set centered around a reference distribution. By examining the strong duality of the formulation, we derive upper bounds for the worst-case risk and develop an analytical solution for the dual robust risk for each data point. This leads to a novel robust pseudo-labeling algorithm that leverages the likelihood ratio test to construct a pseudo-empirical distribution, providing a robust reference probability distribution in CDRO. Moreover, to devise an efficient algorithm for CDRO, we derive a closed-form expression for the empirical robust risk and the optimal Lagrange multiplier of the dual problem, facilitating a principled balance between robustness and model fitting. Our experimental results on both synthetic and real-world datasets demonstrate the superiority of our method.
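For orientation, a worst-case risk over a distance-based ambiguity set is commonly written in the generic form below. This is a sketch of the standard distributionally robust objective, not the paper's exact formulation: the symbols $\theta$ (model parameters), $\ell$ (loss), $\hat{P}$ (reference distribution over the true label given the instance $x$ and noisy annotations $\tilde{y}$), $d$ (the distance), and $\epsilon$ (the radius of the ambiguity set) are all notation assumed here.

```latex
\min_{\theta} \;
\sup_{Q \,:\, d\left(Q,\, \hat{P}(\cdot \mid x, \tilde{y})\right) \le \epsilon} \;
\mathbb{E}_{Y \sim Q}\!\left[ \ell\big(f_{\theta}(x),\, Y\big) \right]
```

Setting $\epsilon = 0$ recovers ordinary risk minimization under the reference distribution, while a larger $\epsilon$ guards against a more badly misspecified posterior at the cost of a more conservative fit.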

Uncertainty-Aware Explainable Recommendation with Large Language Models

Jan 31, 2024

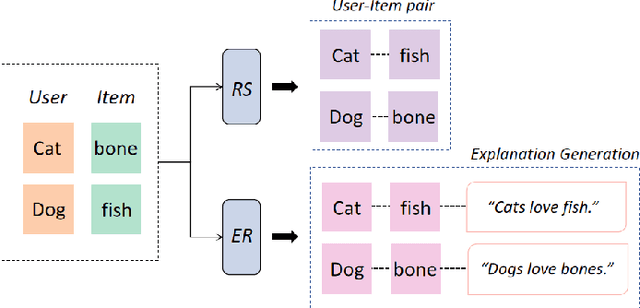

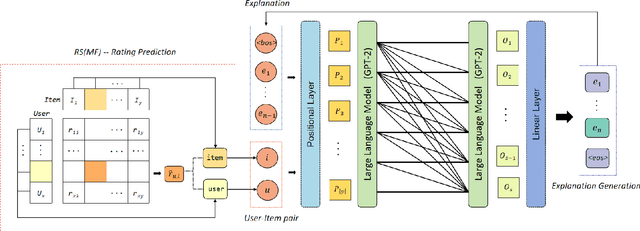

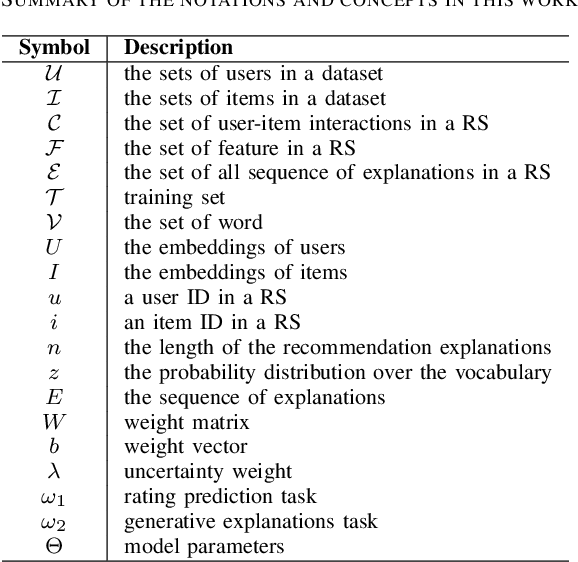

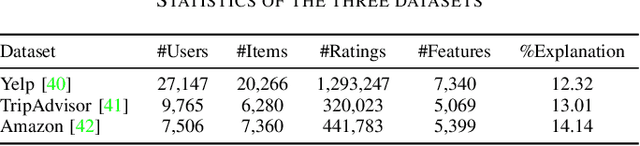

Abstract: Providing explanations within a recommendation system can boost user satisfaction and foster trust, especially by elaborating on the reasons for selecting recommended items tailored to the user. The predominant approach in this domain revolves around generating text-based explanations, with a notable emphasis on applying large language models (LLMs). However, fine-tuning LLMs for explainable recommendations proves impractical due to time constraints and computing resource limitations. As an alternative, the current approach involves training the prompt rather than the LLM. In this study, we developed a model that utilizes the ID vectors of user and item inputs as prompts for GPT-2. We employed a joint training mechanism within a multi-task learning framework to optimize both the recommendation task and the explanation task. This strategy enables a more effective exploration of users' interests, improving recommendation effectiveness and user satisfaction. In our experiments, the method achieves 1.59 DIV, 0.57 USR, and 0.41 FCR on the Yelp, TripAdvisor, and Amazon datasets, respectively, demonstrating superior performance over four SOTA methods on explainability evaluation metrics. In addition, we found that the proposed model maintains stable textual quality on the three public datasets.

Object-Driven One-Shot Fine-tuning of Text-to-Image Diffusion with Prototypical Embedding

Jan 28, 2024

Abstract: As large-scale text-to-image generation models have made remarkable progress, many fine-tuning methods have been proposed. However, these models often struggle with novel objects, especially in one-shot scenarios. Our proposed method aims to address the challenges of generalizability and fidelity in an object-driven way, using only a single input image and the object-specific regions of interest. To improve generalizability and mitigate overfitting, in our paradigm, a prototypical embedding is initialized based on the object's appearance and its class before fine-tuning the diffusion model. During fine-tuning, we propose a class-characterizing regularization to preserve prior knowledge of object classes. To further improve fidelity, we introduce an object-specific loss, which can also be used to implant multiple objects. Overall, our proposed object-driven method for implanting new objects integrates seamlessly with existing concepts while achieving high fidelity and generalization. Our method outperforms several existing works. The code will be released.

FGSI: Distant Supervision for Relation Extraction method based on Fine-Grained Semantic Information

Feb 04, 2023

Abstract: The main purpose of relation extraction is to extract the semantic relationships between tagged pairs of entities in a sentence, which plays an important role in the semantic understanding of sentences and the construction of knowledge graphs. In this paper, we hypothesize that the key semantic information within a sentence plays a key role in entity relation extraction. Based on this hypothesis, we split the sentence into three segments according to the locations of the entities within the sentence, and find the fine-grained semantic features inside the sentence through an intra-sentence attention mechanism to reduce the interference of irrelevant noise information. The proposed relation extraction model can make full use of the available positive semantic information. The experimental results show that the proposed relation extraction model improves the accuracy-recall curves and P@N values compared with existing methods, which demonstrates the effectiveness of this model.
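The three-way split by entity position can be sketched as follows. The abstract does not say exactly where the segment boundaries fall, so the choice here (everything through the first entity, the tokens between the entities, and everything from the second entity onward) is an assumption, as are the function name `split_by_entities` and the half-open `(start, end)` span convention.

```python
def split_by_entities(tokens, head_span, tail_span):
    """Split a token list into three segments around two entity mentions.

    Spans are half-open (start, end) token indices. The boundary convention
    is an illustrative assumption, not taken from the paper.
    """
    first, second = sorted([head_span, tail_span])
    if first[1] > second[0]:
        raise ValueError("entity spans must not overlap")
    left = tokens[:first[1]]              # up to and including the first entity
    middle = tokens[first[1]:second[0]]   # tokens strictly between the entities
    right = tokens[second[0]:]            # second entity and everything after it
    return left, middle, right


tokens = "Steve Jobs founded Apple in California".split()
left, middle, right = split_by_entities(tokens, (0, 2), (3, 4))
```

With this convention the three segments partition the sentence, so concatenating them recovers the original tokens, and an attention mechanism could then be applied within each segment separately.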

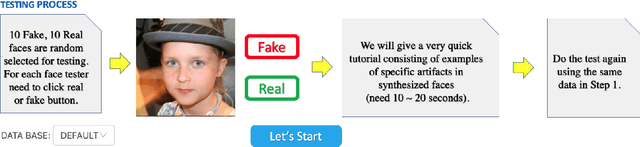

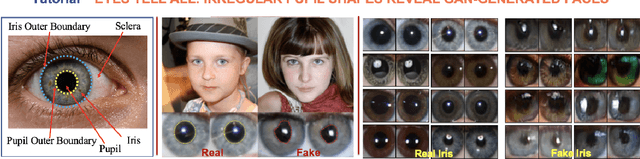

Open-Eye: An Open Platform to Study Human Performance on Identifying AI-Synthesized Faces

May 13, 2022

Abstract: AI-synthesized faces are visually difficult to distinguish from real ones. They have been used as profile images for fake social media accounts, which has a highly negative social impact. Although progress has been made in developing automatic methods to detect AI-synthesized faces, there is no open platform for studying human performance in detecting AI-synthesized faces. In this work, we develop an online platform called Open-eye to study human performance in AI-synthesized face detection. We describe the design and workflow of Open-eye in this paper.

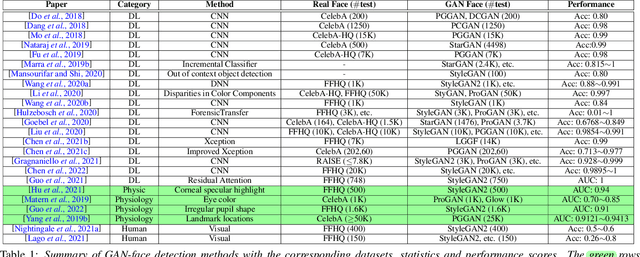

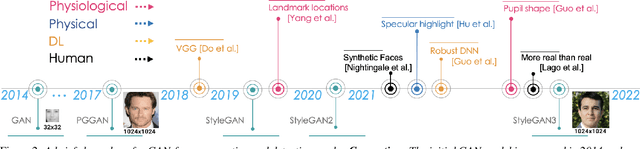

GAN-generated Faces Detection: A Survey and New Perspectives

Feb 15, 2022

Abstract: Generative Adversarial Networks (GANs) have led to the generation of very realistic face images, which have been used in fake social media accounts and other disinformation efforts that can have profound impacts. Therefore, GAN-face detection techniques that can examine and expose such fake faces are under active development. In this work, we aim to provide a comprehensive review of recent progress in GAN-face detection. We focus on methods that can detect face images that are generated or synthesized from GAN models. We classify the existing detection works into four categories: (1) deep learning-based, (2) physical-based, (3) physiological-based methods, and (4) evaluation and comparison against human visual performance. For each category, we summarize the key ideas and connect them with method implementations. We also discuss open problems and suggest future research directions.
