Huang Xiao

HTP: Exploiting Holistic Temporal Patterns for Sequential Recommendation

Jul 22, 2023

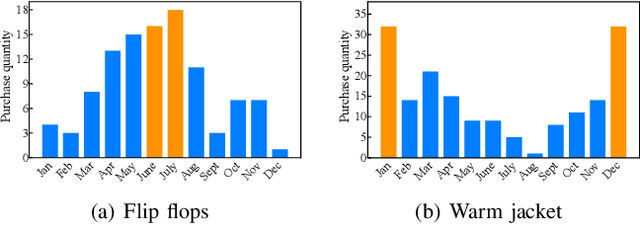

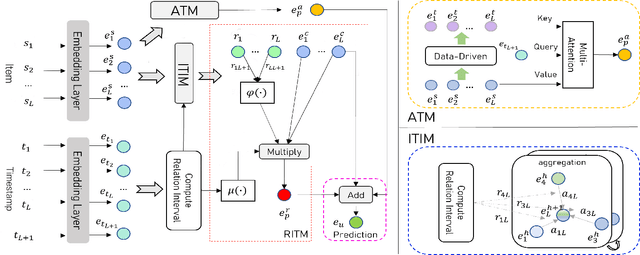

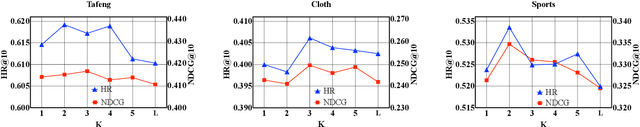

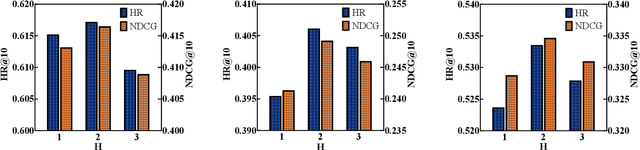

Abstract:Sequential recommender systems have demonstrated a huge success for next-item recommendation by explicitly exploiting the temporal order of users' historical interactions. In practice, user interactions contain more useful temporal information beyond order, as shown by some pioneering studies. In this paper, we systematically investigate various temporal information for sequential recommendation and identify three types of advantageous temporal patterns beyond order, including absolute time information, relative item time intervals and relative recommendation time intervals. We are the first to explore item-oriented absolute time patterns. While existing models consider only one or two of these three patterns, we propose a novel holistic temporal pattern based neural network, named HTP, to fully leverage all these three patterns. In particular, we introduce novel components to address the subtle correlations between relative item time intervals and relative recommendation time intervals, which render a major technical challenge. Extensive experiments on three real-world benchmark datasets show that our HTP model consistently and substantially outperforms many state-of-the-art models. Our code is publically available at https://github.com/623851394/HTP/tree/main/HTP-main

Support Vector Machines under Adversarial Label Contamination

Jun 01, 2022

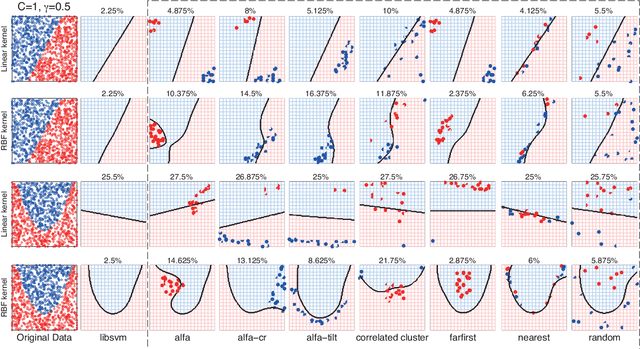

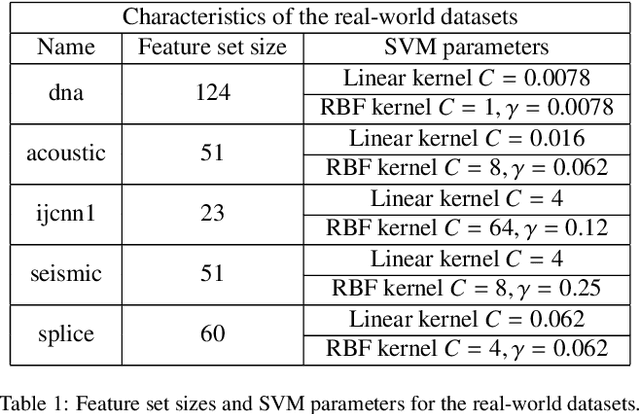

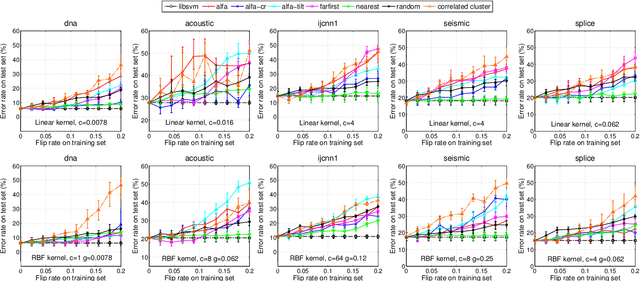

Abstract:Machine learning algorithms are increasingly being applied in security-related tasks such as spam and malware detection, although their security properties against deliberate attacks have not yet been widely understood. Intelligent and adaptive attackers may indeed exploit specific vulnerabilities exposed by machine learning techniques to violate system security. Being robust to adversarial data manipulation is thus an important, additional requirement for machine learning algorithms to successfully operate in adversarial settings. In this work, we evaluate the security of Support Vector Machines (SVMs) to well-crafted, adversarial label noise attacks. In particular, we consider an attacker that aims to maximize the SVM's classification error by flipping a number of labels in the training data. We formalize a corresponding optimal attack strategy, and solve it by means of heuristic approaches to keep the computational complexity tractable. We report an extensive experimental analysis on the effectiveness of the considered attacks against linear and non-linear SVMs, both on synthetic and real-world datasets. We finally argue that our approach can also provide useful insights for developing more secure SVM learning algorithms, and also novel techniques in a number of related research areas, such as semi-supervised and active learning.

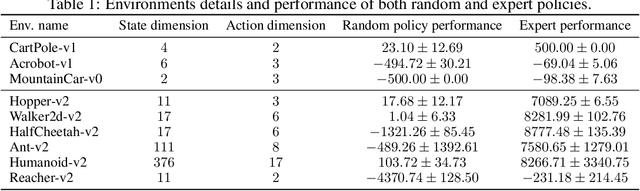

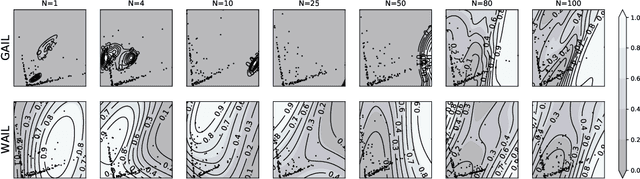

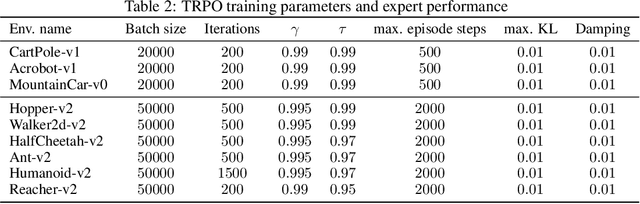

Wasserstein Adversarial Imitation Learning

Jun 19, 2019

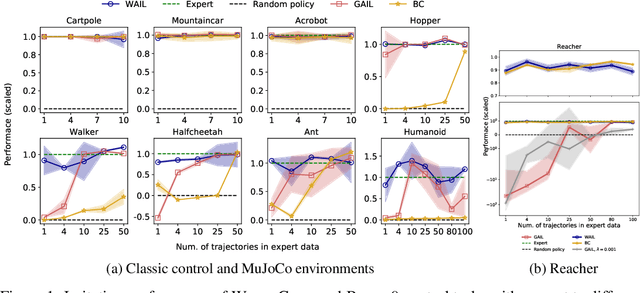

Abstract:Imitation Learning describes the problem of recovering an expert policy from demonstrations. While inverse reinforcement learning approaches are known to be very sample-efficient in terms of expert demonstrations, they usually require problem-dependent reward functions or a (task-)specific reward-function regularization. In this paper, we show a natural connection between inverse reinforcement learning approaches and Optimal Transport, that enables more general reward functions with desirable properties (e.g., smoothness). Based on our observation, we propose a novel approach called Wasserstein Adversarial Imitation Learning. Our approach considers the Kantorovich potentials as a reward function and further leverages regularized optimal transport to enable large-scale applications. In several robotic experiments, our approach outperforms the baselines in terms of average cumulative rewards and shows a significant improvement in sample-efficiency, by requiring just one expert demonstration.

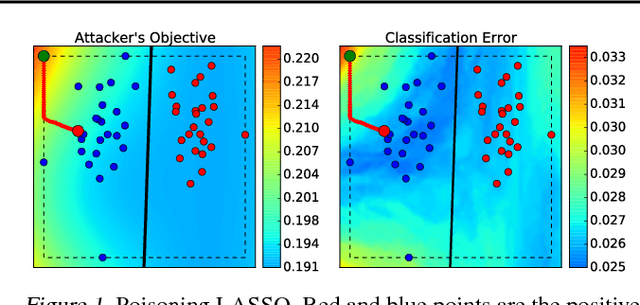

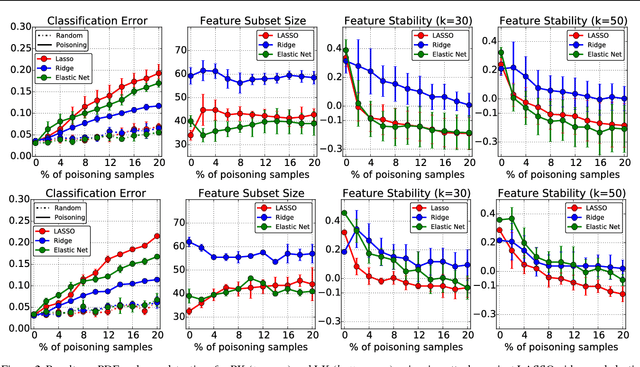

Is feature selection secure against training data poisoning?

Apr 21, 2018

Abstract:Learning in adversarial settings is becoming an important task for application domains where attackers may inject malicious data into the training set to subvert normal operation of data-driven technologies. Feature selection has been widely used in machine learning for security applications to improve generalization and computational efficiency, although it is not clear whether its use may be beneficial or even counterproductive when training data are poisoned by intelligent attackers. In this work, we shed light on this issue by providing a framework to investigate the robustness of popular feature selection methods, including LASSO, ridge regression and the elastic net. Our results on malware detection show that feature selection methods can be significantly compromised under attack (we can reduce LASSO to almost random choices of feature sets by careful insertion of less than 5% poisoned training samples), highlighting the need for specific countermeasures.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge