Hongyu Shen

Boosting Test Performance with Importance Sampling--a Subpopulation Perspective

Dec 17, 2024

Abstract:Although empirical risk minimization (ERM) is widely applied in the machine learning community, its performance is limited on data with spurious correlations or subpopulations introduced by hidden attributes. Existing literature proposes techniques to maximize group-balanced or worst-group accuracy when such correlations are present, but at the cost of lower average accuracy. In addition, many existing works survey different subpopulation methods without revealing the inherent connections between them, which could hinder technological advancement in this area. In this paper, we identify importance sampling as a simple yet powerful tool for solving the subpopulation problem. On the theory side, we provide a new systematic formulation of the subpopulation problem and explicitly identify the assumptions that are not clearly stated in existing works. This helps to uncover the cause of the drop in average accuracy. We provide the first theoretical discussion of the connections among existing methods, revealing the core components that make them different. On the application side, we demonstrate that a single estimator is enough to solve the subpopulation problem. In particular, we introduce the estimator in both attribute-known and attribute-unknown scenarios in the subpopulation setup, offering flexibility in practical use cases. Empirically, we achieve state-of-the-art performance on commonly used benchmark datasets.

* 16 pages, 1 figure, 2 tables
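As a rough illustration of the importance-sampling idea named in the abstract above, the sketch below reweights per-sample losses by the ratio of a target group distribution to the empirical training group distribution. It is a generic, self-normalized importance-weighted risk estimate, not the estimator proposed in the paper. The function names, the uniform target distribution, and the toy data are all assumptions made for this example.

```python
from collections import Counter


def importance_weights(groups, target=None):
    """Per-sample weights w_i = q(g_i) / p(g_i).

    p is the empirical group frequency in the training sample.
    q is the target group distribution; when omitted it is assumed
    to be uniform over the observed groups (an illustrative choice).
    """
    counts = Counter(groups)
    n = len(groups)
    if target is None:
        target = {g: 1.0 / len(counts) for g in counts}
    return [target[g] / (counts[g] / n) for g in groups]


def weighted_risk(losses, weights):
    """Self-normalized importance-sampling estimate of the target risk."""
    return sum(l * w for l, w in zip(losses, weights)) / sum(weights)


# Toy data: group "a" is over-represented and easy, group "b" is rare and hard.
groups = ["a", "a", "a", "b"]
losses = [0.1, 0.1, 0.1, 0.9]
w = importance_weights(groups)

erm_risk = sum(losses) / len(losses)       # plain average over samples
balanced_risk = weighted_risk(losses, w)   # average under the uniform group target
```

With a uniform target, the weighted estimate coincides with the group-balanced mean of the per-group average losses, whereas the plain average is dominated by the majority group.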

DeepDRK: Deep Dependency Regularized Knockoff for Feature Selection

Feb 27, 2024

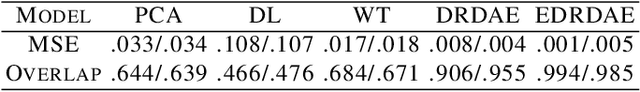

Abstract:Model-X knockoff, among various feature selection methods, has received much attention recently due to its guarantee on false discovery rate (FDR) control. Following its introduction in the parametric setting, the knockoff framework has been extended to handle arbitrary data distributions using deep learning-based generative modeling. However, we observed that current implementations of the deep Model-X knockoff framework exhibit limitations. Notably, the "swap property" that knockoffs require is frequently hard to satisfy at the sample level, leading to diminished selection power. To overcome this, we develop "Deep Dependency Regularized Knockoff (DeepDRK)", a distribution-free deep learning method that strikes a balance between FDR and power. In DeepDRK, a generative model grounded in a transformer architecture is introduced to better achieve the "swap property". Novel efficient regularization techniques are also proposed to reach higher power. Our model outperforms other benchmarks on synthetic, semi-synthetic, and real-world data, especially when the sample size is small and the data distribution is complex.

Learning Personalized Representations using Graph Convolutional Network

Jul 28, 2022

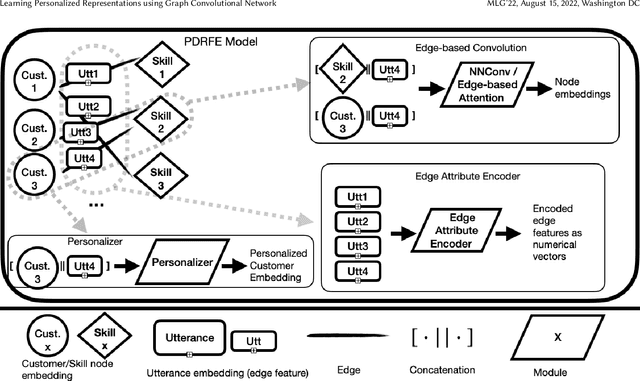

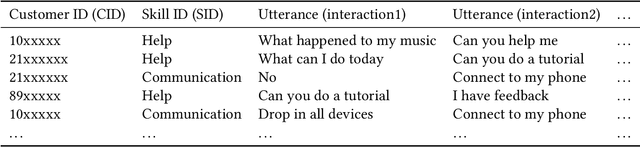

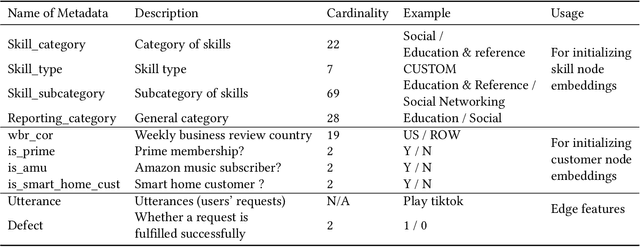

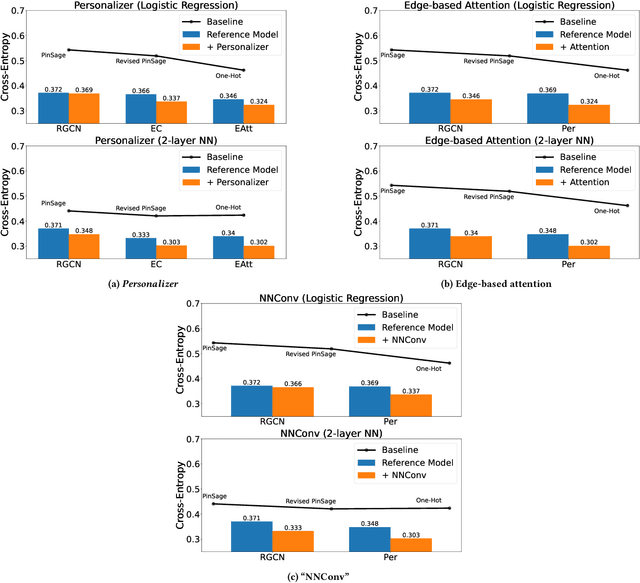

Abstract:Generating representations that precisely reflect customers' behavior is an important task for providing a personalized skill routing experience in Alexa. Currently, the Dynamic Routing (DR) team, which is responsible for routing Alexa traffic to providers or skills, relies on two features to serve as personal signals: the absolute traffic count and the normalized traffic count of every skill usage per customer. Neither of them considers the network-based structure of interactions between customers and skills, which contains richer information about customer preferences. In this work, we first build a heterogeneous edge-attributed graph based on customers' past interactions with the invoked skills, in which the user requests (utterances) are modeled as edges. Then we propose a graph convolutional network (GCN) based model, namely Personalized Dynamic Routing Feature Encoder (PDRFE), that generates personalized customer representations learned from the built graph. Compared with existing models, PDRFE is able to further capture contextual information in the graph convolutional function. The performance of our proposed model is evaluated by a downstream task, defect prediction, that predicts the defect label from the learned embeddings of customers and their triggered skills. We observe up to 41% improvements on the cross entropy metric for our proposed models compared to the baselines.

Do You Live a Healthy Life? Analyzing Lifestyle by Visual Life Logging

Nov 24, 2020

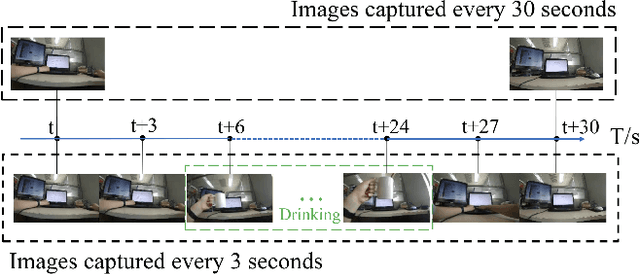

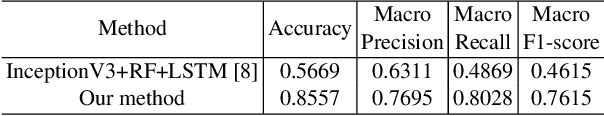

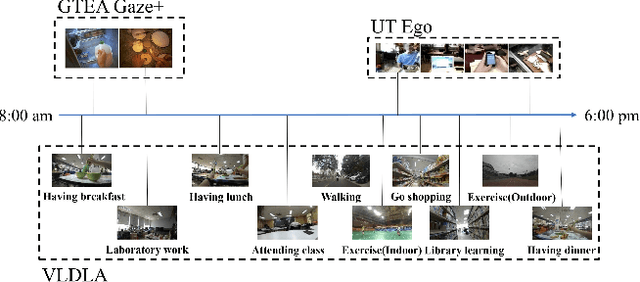

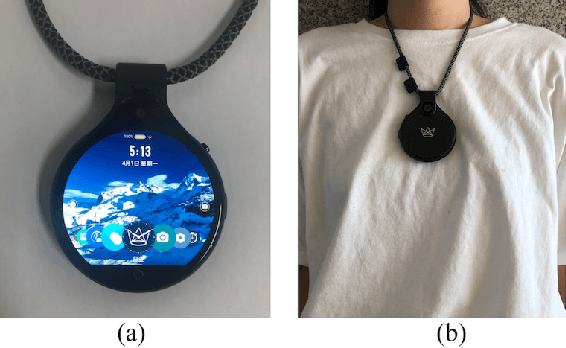

Abstract:A healthy lifestyle is the key to better health and happiness and has a considerable effect on quality of life and disease prevention. Current lifelogging/egocentric datasets are not suitable for lifestyle analysis; consequently, there is no research on lifestyle analysis in the field of computer vision. In this work, we investigate the problem of lifestyle analysis and build a visual lifelogging dataset for lifestyle analysis (VLDLA). The VLDLA contains images captured by a wearable camera every 3 seconds from 8:00 am to 6:00 pm for seven days. In contrast to current lifelogging/egocentric datasets, our dataset is suitable for lifestyle analysis as images are taken with short intervals to capture activities of short duration; moreover, images are taken continuously from morning to evening to record all the activities performed by a user. Based on our dataset, we classify the user activities in each frame and use three latent fluents of the user, which change over time and are associated with activities, to measure the healthy degree of the user's lifestyle. The scores for the three latent fluents are computed based on recognized activities, and the healthy degree of the lifestyle for the day is determined based on the scores for the latent fluents. Experimental results show that our method can be used to analyze the healthiness of users' lifestyles.

Enabling real-time multi-messenger astrophysics discoveries with deep learning

Nov 26, 2019

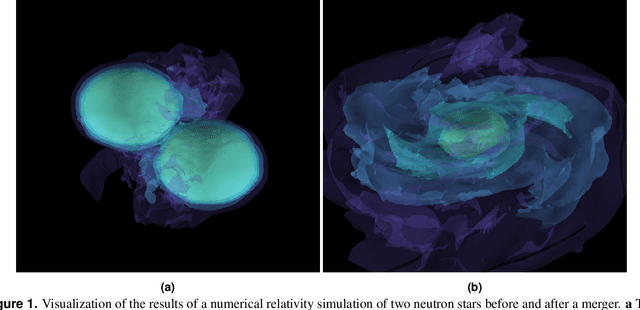

Abstract:Multi-messenger astrophysics is a fast-growing, interdisciplinary field that combines data, which vary in volume and speed of data processing, from many different instruments that probe the Universe using different cosmic messengers: electromagnetic waves, cosmic rays, gravitational waves and neutrinos. In this Expert Recommendation, we review the key challenges of real-time observations of gravitational wave sources and their electromagnetic and astroparticle counterparts, and make a number of recommendations to maximize their potential for scientific discovery. These recommendations refer to the design of scalable and computationally efficient machine learning algorithms; the cyber-infrastructure to numerically simulate astrophysical sources, and to process and interpret multi-messenger astrophysics data; the management of gravitational wave detections to trigger real-time alerts for electromagnetic and astroparticle follow-ups; a vision to harness future developments of machine learning and cyber-infrastructure resources to cope with the big-data requirements; and the need to build a community of experts to realize the goals of multi-messenger astrophysics.

* Invited Expert Recommendation for Nature Reviews Physics. The art work produced by E. A. Huerta and Shawn Rosofsky for this article was used by Carl Conway to design the cover of the October 2019 issue of Nature Reviews Physics

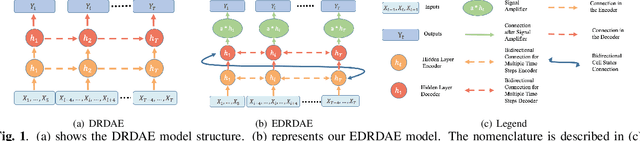

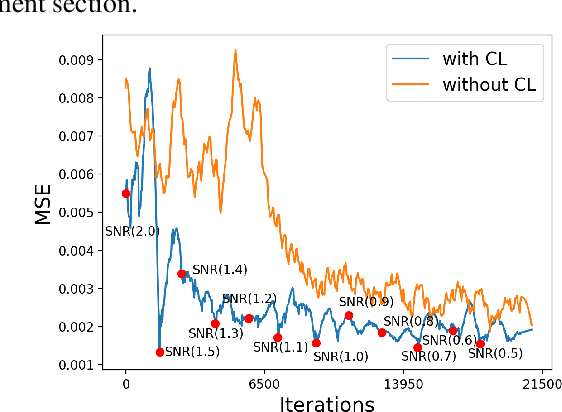

Denoising Gravitational Waves with Enhanced Deep Recurrent Denoising Auto-Encoders

Mar 06, 2019

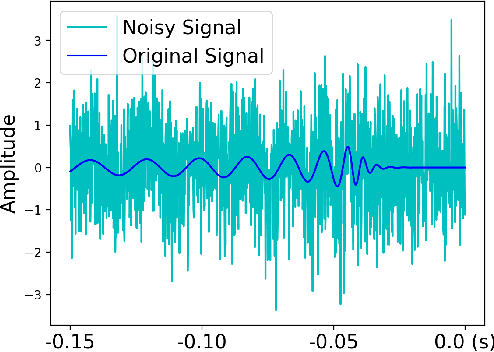

Abstract:Denoising of time domain data is a crucial task for many applications such as communication, translation, virtual assistants, etc. For this task, a combination of Recurrent Neural Nets (RNNs) with Denoising Auto-Encoders (DAEs) has shown promising results. However, this combined model is challenged when operating with low signal-to-noise ratio (SNR) data embedded in non-Gaussian and non-stationary noise. To address this issue, we design a novel model, referred to as 'Enhanced Deep Recurrent Denoising Auto-Encoder' (EDRDAE), that incorporates a signal amplifier layer, and applies curriculum learning by first denoising high SNR signals, before gradually decreasing the SNR until the signals become noise dominated. We showcase the performance of EDRDAE using time-series data that describes gravitational waves embedded in very noisy backgrounds. In addition, we show that EDRDAE can accurately denoise signals whose topology is significantly more complex than those used for training, demonstrating that our model generalizes to new classes of gravitational waves that are beyond the scope of established denoising algorithms.
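The curriculum described in the abstract above (start with high-SNR examples, then lower the SNR step by step) can be sketched as a simple schedule plus a noise-mixing helper. This is only an illustration of the general idea, not the EDRDAE training code. The linear schedule, the default SNR endpoints, the amplitude-ratio definition of SNR (RMS of signal over RMS of scaled noise), and all function names are assumptions.

```python
import math


def snr_at_epoch(epoch, n_epochs, snr_high=10.0, snr_low=0.5):
    """Linearly decrease the target SNR from snr_high to snr_low (assumed schedule)."""
    frac = epoch / max(n_epochs - 1, 1)
    return snr_high + frac * (snr_low - snr_high)


def rms(x):
    """Root-mean-square amplitude of a sequence."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def mix_at_snr(signal, noise, snr):
    """Scale the noise so that rms(signal) / rms(scaled noise) equals snr, then add it."""
    noise_rms = rms(noise)
    if noise_rms == 0.0:
        raise ValueError("noise must have non-zero RMS")
    scale = rms(signal) / (snr * noise_rms)
    return [s + scale * n for s, n in zip(signal, noise)]


# Hypothetical 5-epoch curriculum: easy (clean) examples first, noisy ones last.
schedule = [snr_at_epoch(e, 5) for e in range(5)]
```

In a training loop, each epoch would draw clean signals, call `mix_at_snr` with that epoch's value from `schedule`, and train the denoiser to recover the clean signal from the mixture.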

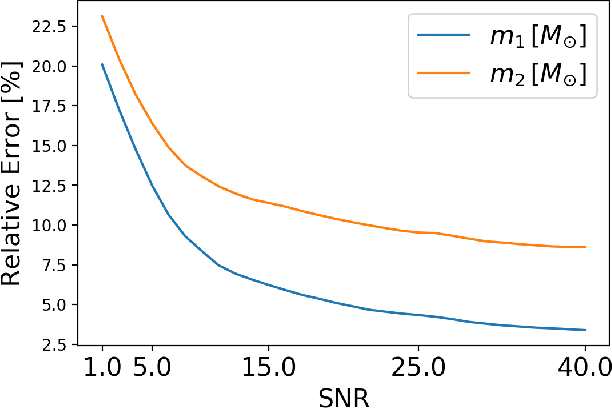

Deep Learning at Scale for Gravitational Wave Parameter Estimation of Binary Black Hole Mergers

Mar 05, 2019

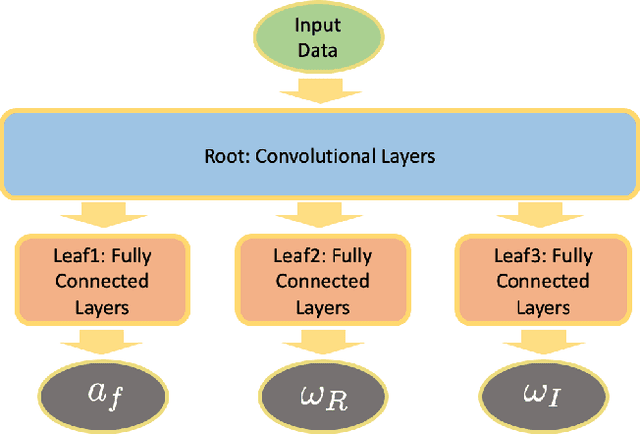

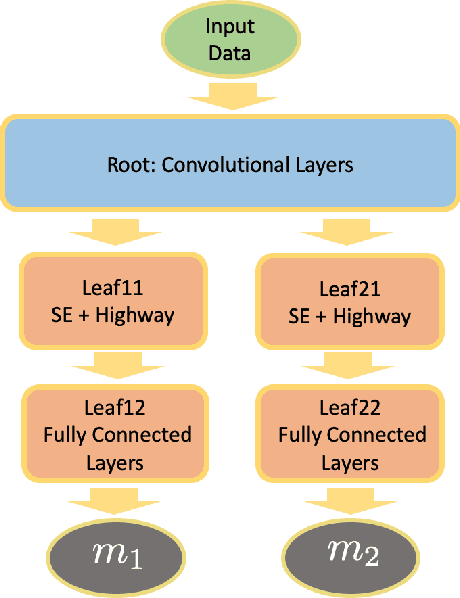

Abstract:We present the first application of deep learning at scale to do gravitational wave parameter estimation of binary black hole mergers that describe a 4-D signal manifold, i.e., black holes whose spins are aligned or anti-aligned, and which evolve on quasi-circular orbits. We densely sample this 4-D signal manifold using over three hundred thousand simulated waveforms. In order to cover a broad range of astrophysically motivated scenarios, we synthetically enhance this waveform dataset to ensure that our deep learning algorithms can process waveforms located at any point in the data stream of gravitational wave detectors (time invariance) for a broad range of signal-to-noise ratios (scale invariance), which in turn means that our neural network models are trained with over $10^{7}$ waveform signals. We then apply these neural network models to estimate the astrophysical parameters of black hole mergers, and their corresponding black hole remnants, including the final spin and the gravitational wave quasi-normal frequencies. These neural network models represent the first time deep learning is used to provide point-parameter estimation calculations endowed with statistical errors. For each binary black hole merger that ground-based gravitational wave detectors have observed, our deep learning algorithms can reconstruct its parameters within 2 milliseconds using a single Tesla V100 GPU. We show that this new approach produces parameter estimation results that are consistent with Bayesian analyses that have been used to reconstruct the parameters of the catalog of binary black hole mergers observed by the advanced LIGO and Virgo detectors.
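The "time invariance" augmentation mentioned in the abstract above, where a waveform may sit at any point in the detector data stream, can be illustrated by placing one template at random offsets inside a longer window. This is a minimal sketch under assumed conventions (zero padding, uniform random offsets, hypothetical function names); it is not the authors' data pipeline, and it omits the noise and SNR rescaling that the abstract also describes.

```python
import random


def embed_at_offset(waveform, window_len, offset):
    """Place the waveform in a zero-padded window starting at the given offset."""
    if offset < 0 or offset + len(waveform) > window_len:
        raise ValueError("waveform does not fit in the window at this offset")
    out = [0.0] * window_len
    out[offset:offset + len(waveform)] = waveform
    return out


def random_shifts(waveform, window_len, n, seed=0):
    """Generate n copies of the waveform at uniformly random offsets."""
    rng = random.Random(seed)
    max_offset = window_len - len(waveform)
    return [
        embed_at_offset(waveform, window_len, rng.randint(0, max_offset))
        for _ in range(n)
    ]


# Toy template placed in a window of 8 samples, four times.
template = [1.0, 2.0, 3.0]
augmented = random_shifts(template, 8, 4)
```

Each augmented example contains the same template, only shifted, so a model trained on such data sees the signal at many positions in the input window.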

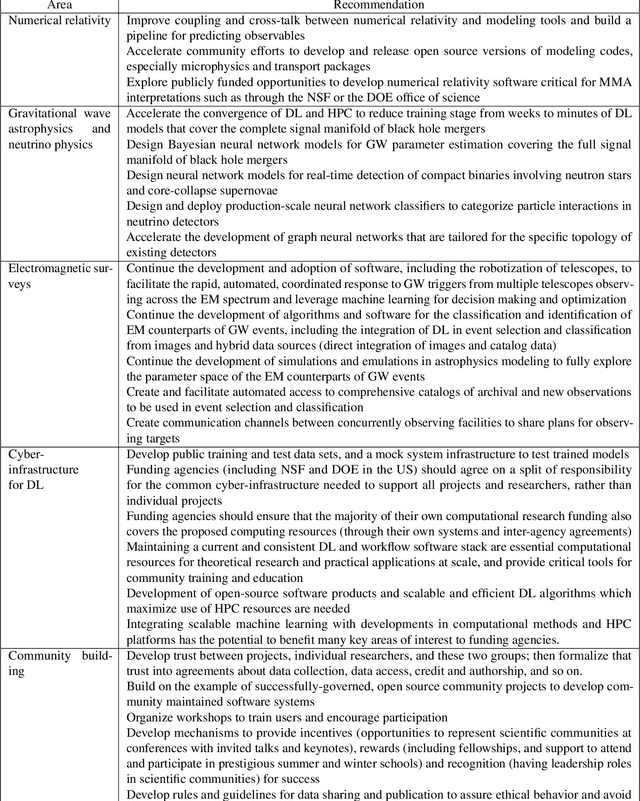

Deep Learning for Multi-Messenger Astrophysics: A Gateway for Discovery in the Big Data Era

Feb 01, 2019

Abstract:This report provides an overview of recent work that harnesses the Big Data Revolution and Large Scale Computing to address grand computational challenges in Multi-Messenger Astrophysics, with a particular emphasis on real-time discovery campaigns. Acknowledging the transdisciplinary nature of Multi-Messenger Astrophysics, this document has been prepared by members of the physics, astronomy, computer science, data science, software and cyberinfrastructure communities who attended the NSF-, DOE- and NVIDIA-funded "Deep Learning for Multi-Messenger Astrophysics: Real-time Discovery at Scale" workshop, hosted at the National Center for Supercomputing Applications, October 17-19, 2018. Highlights of this report include unanimous agreement that it is critical to accelerate the development and deployment of novel, signal-processing algorithms that use the synergy between artificial intelligence (AI) and high performance computing to maximize the potential for scientific discovery with Multi-Messenger Astrophysics. We discuss key aspects to realize this endeavor, namely (i) the design and exploitation of scalable and computationally efficient AI algorithms for Multi-Messenger Astrophysics; (ii) cyberinfrastructure requirements to numerically simulate astrophysical sources, and to process and interpret Multi-Messenger Astrophysics data; (iii) management of gravitational wave detections and triggers to enable electromagnetic and astro-particle follow-ups; (iv) a vision to harness future developments of machine and deep learning and cyberinfrastructure resources to cope with the scale of discovery in the Big Data Era; (v) and the need to build a community that brings domain experts together with data scientists on equal footing to maximize and accelerate discovery in the nascent field of Multi-Messenger Astrophysics.

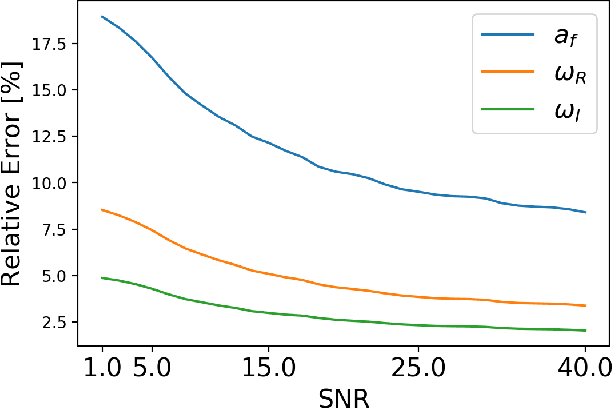

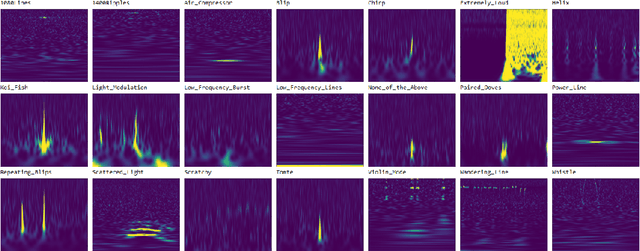

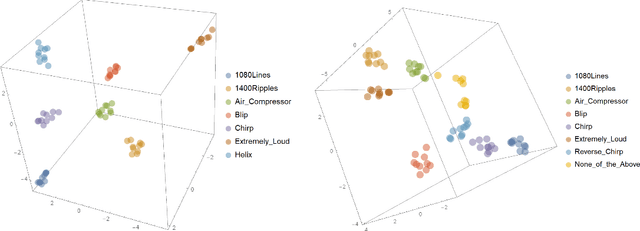

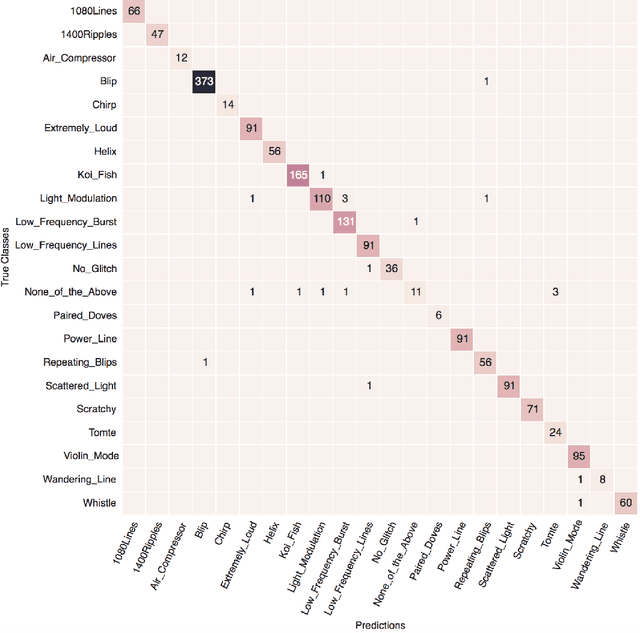

Glitch Classification and Clustering for LIGO with Deep Transfer Learning

Dec 11, 2017

Abstract:The detection of gravitational waves with LIGO and Virgo requires a detailed understanding of the response of these instruments in the presence of environmental and instrumental noise. Of particular interest is the study of anomalous non-Gaussian noise transients known as glitches, since their high occurrence rate in LIGO/Virgo data can obscure or even mimic true gravitational wave signals. Therefore, successfully identifying and excising glitches is of utmost importance to detect and characterize gravitational waves. In this article, we present the first application of Deep Learning combined with Transfer Learning for glitch classification, using real data from LIGO's first discovery campaign labeled by Gravity Spy, showing that knowledge from pre-trained models for real-world object recognition can be transferred for classifying spectrograms of glitches. We demonstrate that this method enables the optimal use of very deep convolutional neural networks for glitch classification given small unbalanced training datasets, significantly reduces the training time, and achieves state-of-the-art accuracy above 98.8%. Once trained via transfer learning, we show that the networks can be truncated and used as feature extractors for unsupervised clustering to automatically group together new classes of glitches and anomalies. This novel capability is of critical importance to identify and remove new types of glitches which will occur as the LIGO/Virgo detectors gradually attain design sensitivity.

* Camera-ready (final) paper accepted to NIPS 2017 conference workshop on Deep Learning for Physical Sciences. Extended article: arXiv:1706.07446

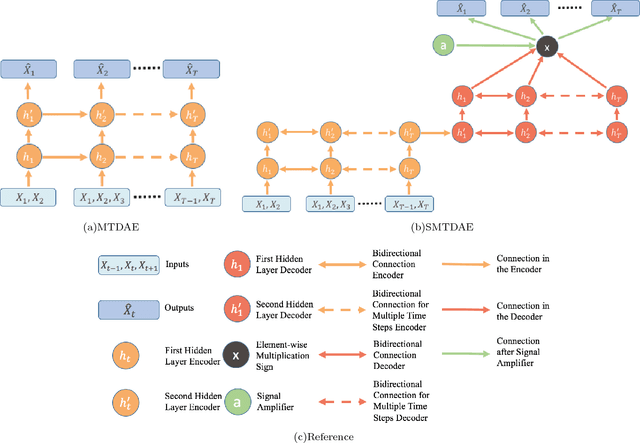

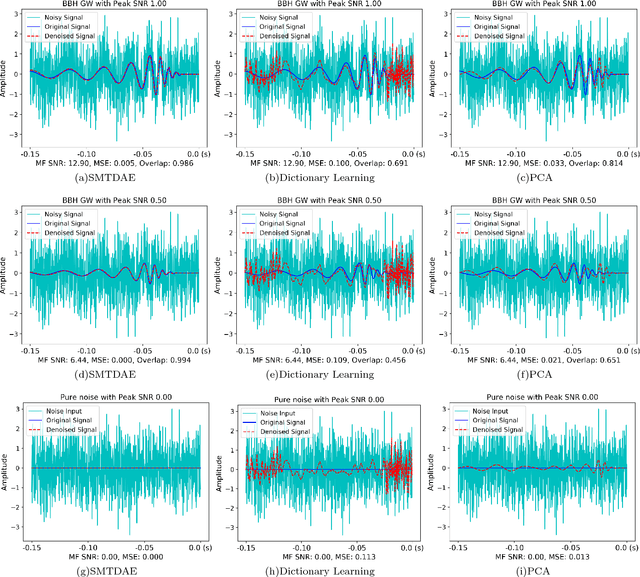

Denoising Gravitational Waves using Deep Learning with Recurrent Denoising Autoencoders

Nov 27, 2017

Abstract:Gravitational wave astronomy is a rapidly growing field of modern astrophysics, with observations being made frequently by the LIGO detectors. Gravitational wave signals are often extremely weak and the data from the detectors, such as LIGO, is contaminated with non-Gaussian and non-stationary noise, often containing transient disturbances which can obscure real signals. Traditional denoising methods, such as principal component analysis and dictionary learning, are not optimal for dealing with this non-Gaussian noise, especially for low signal-to-noise ratio gravitational wave signals. Furthermore, these methods are computationally expensive on large datasets. To overcome these issues, we apply state-of-the-art signal processing techniques, based on recent groundbreaking advancements in deep learning, to denoise gravitational wave signals embedded either in Gaussian noise or in real LIGO noise. We introduce SMTDAE, a Staired Multi-Timestep Denoising Autoencoder, based on sequence-to-sequence bi-directional Long-Short-Term-Memory recurrent neural networks. We demonstrate the advantages of using our unsupervised deep learning approach and show that, after training only using simulated Gaussian noise, SMTDAE achieves superior recovery performance for gravitational wave signals embedded in real non-Gaussian LIGO noise.
