Heshaam Faili

DEEPQUESTION: Systematic Generation of Real-World Challenges for Evaluating LLMs Performance

May 30, 2025

Abstract:LLMs often excel on standard benchmarks but falter on real-world tasks. We introduce DeepQuestion, a scalable automated framework that augments existing datasets based on Bloom's taxonomy and creates novel questions that trace original solution paths to probe evaluative and creative skills. Extensive experiments across ten open-source and proprietary models, covering both general-purpose and reasoning LLMs, reveal substantial performance drops (even up to 70% accuracy loss) on higher-order tasks, underscoring persistent gaps in deep reasoning. Our work highlights the need for cognitively diverse benchmarks to advance LLM progress. DeepQuestion and related datasets will be released upon acceptance of the paper.

SchemaGraphSQL: Efficient Schema Linking with Pathfinding Graph Algorithms for Text-to-SQL on Large-Scale Databases

May 23, 2025

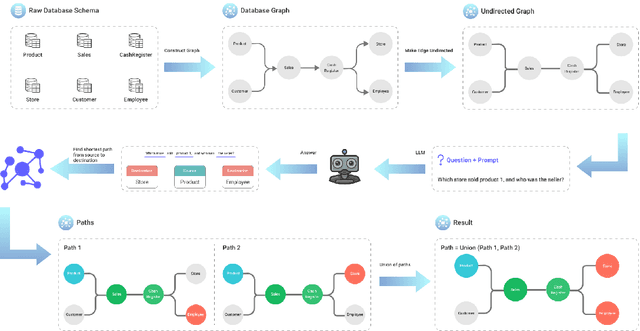

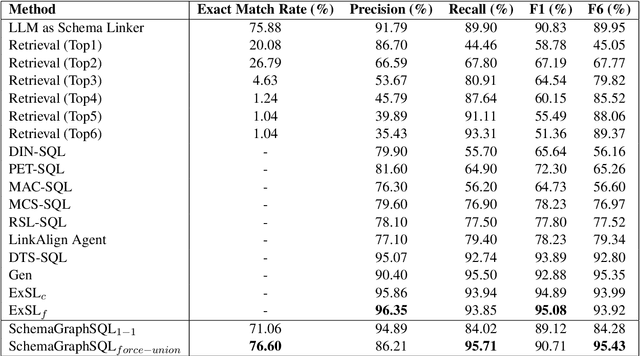

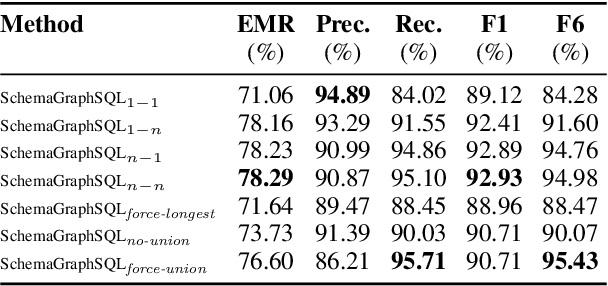

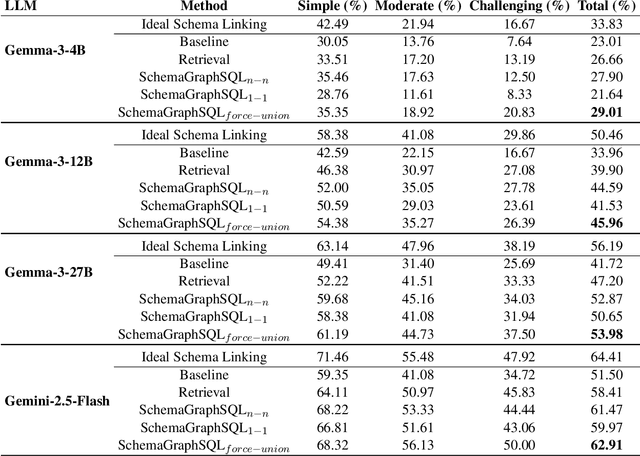

Abstract:Text-to-SQL systems translate natural language questions into executable SQL queries, and recent progress with large language models (LLMs) has driven substantial improvements in this task. Schema linking remains a critical component in Text-to-SQL systems, reducing prompt size for models with narrow context windows and sharpening model focus even when the entire schema fits. We present a zero-shot, training-free schema linking approach that first constructs a schema graph based on foreign key relations, then uses a single prompt to Gemini 2.5 Flash to extract source and destination tables from the user query, followed by applying classical path-finding algorithms and post-processing to identify the optimal sequence of tables and columns that should be joined, enabling the LLM to generate more accurate SQL queries. Despite being simple, cost-effective, and highly scalable, our method achieves state-of-the-art results on the BIRD benchmark, outperforming previous specialized, fine-tuned, and complex multi-step LLM-based approaches. We conduct detailed ablation studies to examine the precision-recall trade-off in our framework. Additionally, we evaluate the execution accuracy of our schema filtering method compared to other approaches across various model sizes.
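
The schema-graph step described in this abstract can be illustrated with a minimal Python sketch. It assumes an undirected graph built from foreign-key pairs and uses breadth-first search as a stand-in for the "classical path-finding algorithms"; the abstract does not say which algorithm is used. The table names, the function names, and the hard-coded source and destination tables (which the paper obtains from an LLM prompt) are all hypothetical.

```python
from collections import deque


def build_schema_graph(foreign_keys):
    """Build an undirected adjacency map: tables are nodes, foreign keys are edges."""
    graph = {}
    for child, parent in foreign_keys:
        graph.setdefault(child, set()).add(parent)
        graph.setdefault(parent, set()).add(child)
    return graph


def shortest_join_path(graph, source, destination):
    """Breadth-first search; returns the shortest table sequence, or None."""
    if source not in graph or destination not in graph:
        return None
    previous = {source: None}  # also serves as the visited set
    queue = deque([source])
    while queue:
        table = queue.popleft()
        if table == destination:
            # Walk the predecessor links back to the source.
            path = []
            while table is not None:
                path.append(table)
                table = previous[table]
            return path[::-1]
        for neighbor in sorted(graph[table]):  # sorted for deterministic output
            if neighbor not in previous:
                previous[neighbor] = table
                queue.append(neighbor)
    return None


# Hypothetical schema: (referencing table, referenced table) pairs.
FOREIGN_KEYS = [
    ("orders", "customers"),
    ("order_items", "orders"),
    ("order_items", "products"),
    ("products", "suppliers"),
]

graph = build_schema_graph(FOREIGN_KEYS)
# In the paper these two tables come from the LLM; here they are fixed by hand.
path = shortest_join_path(graph, "customers", "products")
print(" -> ".join(path))
```

Only the tables on the returned path would then be passed to the SQL-generating model, which is how this kind of filtering shrinks the prompt.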

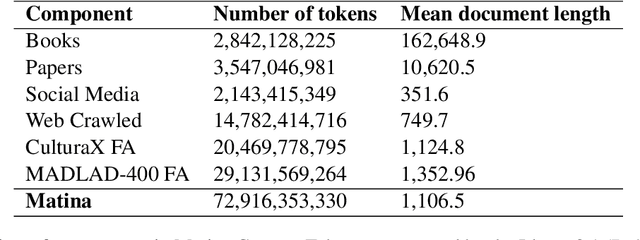

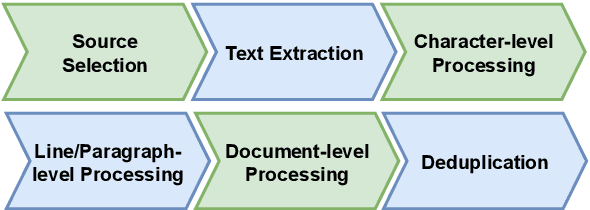

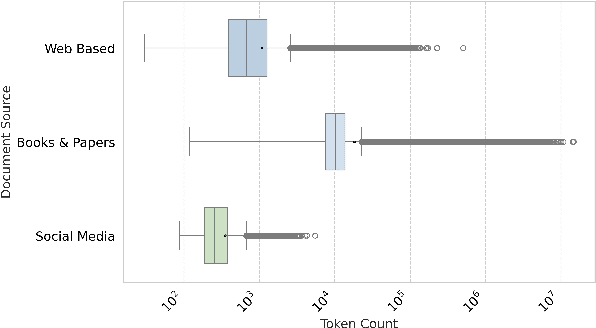

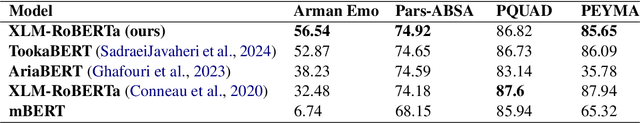

Matina: A Large-Scale 73B Token Persian Text Corpus

Feb 13, 2025

Abstract:Text corpora are essential for training models used in tasks such as summarization and translation, and for training large language models (LLMs). While various efforts have been made to collect monolingual and multilingual datasets in many languages, Persian has often been underrepresented due to limited resources for data collection and preprocessing. Existing Persian datasets are typically small and lack content diversity, consisting mainly of weblogs and news articles. This shortage of high-quality, varied data has slowed the development of NLP models and open-source LLMs for Persian. Since model performance depends heavily on the quality of training data, we address this gap by introducing the Matina corpus, a new Persian dataset of 72.9B tokens, carefully preprocessed and deduplicated to ensure high data quality. We further assess its effectiveness by training and evaluating transformer-based models on key NLP tasks. Both the dataset and the preprocessing code are publicly available, enabling researchers to build on and improve this resource for future Persian NLP advancements.

PerSHOP -- A Persian dataset for shopping dialogue systems modeling

Jan 01, 2024

Abstract:Dialogue systems are now used in many fields of industry and research, with successful examples such as Apple Siri, Google Assistant, and IBM Watson. Task-oriented dialogue systems are a category of these systems designed for specific tasks, such as booking plane tickets or making restaurant reservations. Shopping is one of the most popular application areas for these systems: the bot replaces the human salesperson and interacts with customers by speaking. Training the models behind these systems requires annotated data. In this paper, we develop a dataset of Persian-language dialogues through crowd-sourcing and annotate these dialogues for model training. The dataset contains nearly 22k utterances in 15 different domains and 1061 dialogues. It is the largest Persian dataset in this field and is provided freely so that future researchers can use it. We also propose baseline models for two natural language understanding (NLU) tasks: intent classification and entity extraction. The F1 scores obtained are around 91% for intent classification and around 93% for entity extraction, which can serve as a baseline for future research.

Persian Typographical Error Type Detection using Many-to-Many Deep Neural Networks on Algorithmically-Generated Misspellings

May 19, 2023

Abstract:Digital technologies have led to an influx of text created daily in a variety of languages, styles, and formats. A great deal of the popularity of spell-checking systems can be attributed to this phenomenon since they are crucial to polishing the digitally conceived text. In this study, we tackle Typographical Error Type Detection in Persian, which has been relatively understudied. In this paper, we present a public dataset named FarsTypo, containing 3.4 million chronologically ordered and part-of-speech tagged words of diverse topics and linguistic styles. An algorithm for applying Persian-specific errors is developed and applied to a scalable size of these words, forming a parallel dataset of correct and incorrect words. Using FarsTypo, we establish a firm baseline and compare different methodologies using various architectures. In addition, we present a novel Many-to-Many Deep Sequential Neural Network to perform token classification using both word and character embeddings in combination with bidirectional LSTM layers to detect typographical errors across 51 classes. We compare our approach with highly-advanced industrial systems that, unlike this study, have been developed utilizing a variety of resources. The results of our final method were competitive in that we achieved an accuracy of 97.62%, a precision of 98.83%, a recall of 98.61%, and outperformed the rest in terms of speed.

Mismatching-Aware Unsupervised Translation Quality Estimation For Low-Resource Languages

Jul 31, 2022

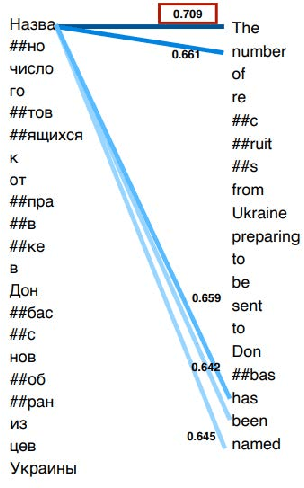

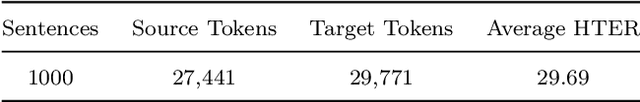

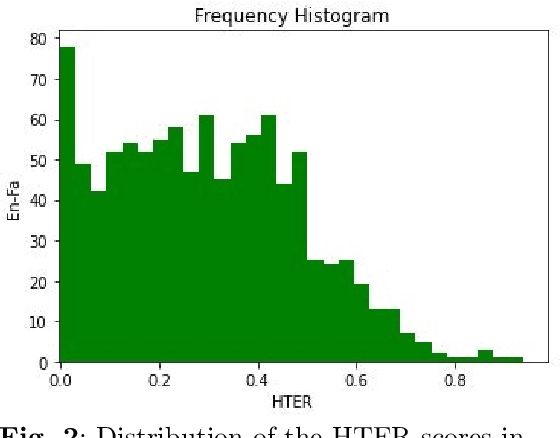

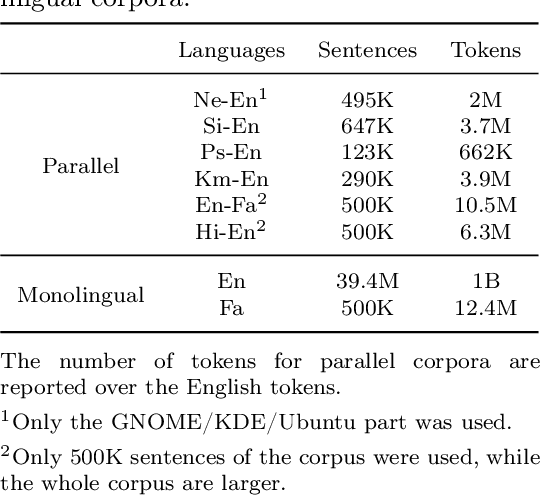

Abstract:Translation Quality Estimation (QE) is the task of predicting the quality of machine translation (MT) output without any reference. This task has gained increasing attention as an important component in practical applications of MT. In this paper, we first propose XLMRScore, a simple unsupervised QE method based on BERTScore computed using the XLM-RoBERTa (XLMR) model, and discuss the issues that arise with this method. Next, we suggest two approaches to mitigate these issues: replacing untranslated words with the unknown token, and cross-lingual alignment of the pre-trained model so that aligned words are represented closer to each other. We evaluate the proposed method on four low-resource language pairs of the WMT21 QE shared task, as well as on a new English-Farsi test dataset introduced in this paper. Experiments show that our method achieves results comparable to the supervised baseline in two zero-shot scenarios, i.e., with less than 0.01 difference in Pearson correlation, while outperforming the unsupervised rivals in all the low-resource language pairs by more than 8% on average.
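
The BERTScore-style matching that XLMRScore builds on can be sketched as follows. This is a minimal illustration, not the authors' implementation: it uses hand-written toy vectors in place of XLM-RoBERTa token embeddings, omits BERTScore's optional idf weighting, and the function names are hypothetical. Each token is greedily matched to its most similar token on the other side, and the resulting precision and recall are combined into an F1 score.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_match_f1(source_vecs, translation_vecs):
    """BERTScore-style F1 between source tokens and translation tokens."""
    # Recall: how well each source token is covered by the translation.
    recall = sum(
        max(cosine(s, t) for t in translation_vecs) for s in source_vecs
    ) / len(source_vecs)
    # Precision: how well each translation token is supported by the source.
    precision = sum(
        max(cosine(t, s) for s in source_vecs) for t in translation_vecs
    ) / len(translation_vecs)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy "embeddings": two source tokens, and two candidate translations.
source = [[1.0, 0.0], [0.0, 1.0]]
full_translation = [[1.0, 0.0], [0.0, 1.0]]   # covers both source tokens
partial_translation = [[1.0, 0.0]]            # drops the second source token

score_full = greedy_match_f1(source, full_translation)
score_partial = greedy_match_f1(source, partial_translation)
print(score_full, score_partial)
```

In the partial case precision stays at 1 while recall falls to 0.5, so the dropped token lowers the overall score.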

Pruned Graph Neural Network for Short Story Ordering

Mar 13, 2022

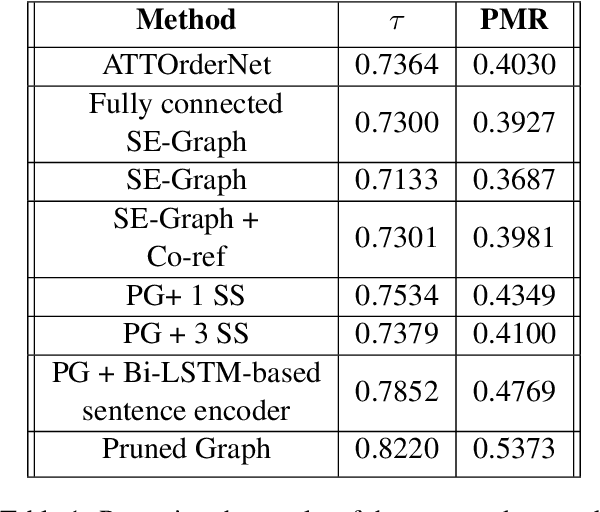

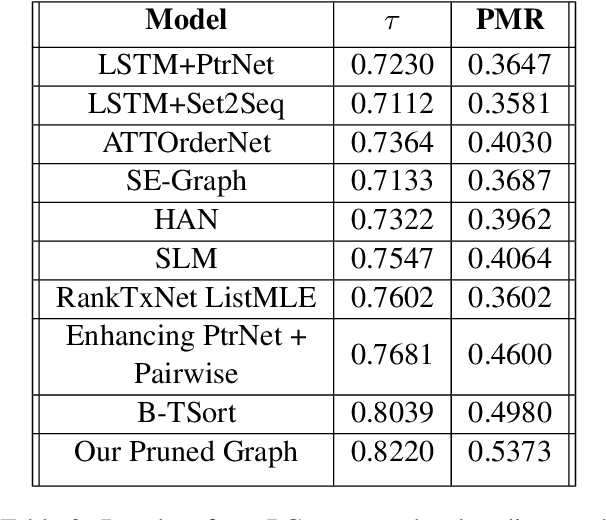

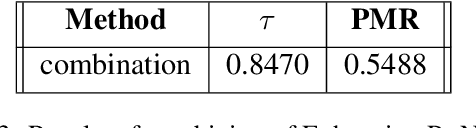

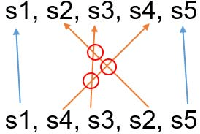

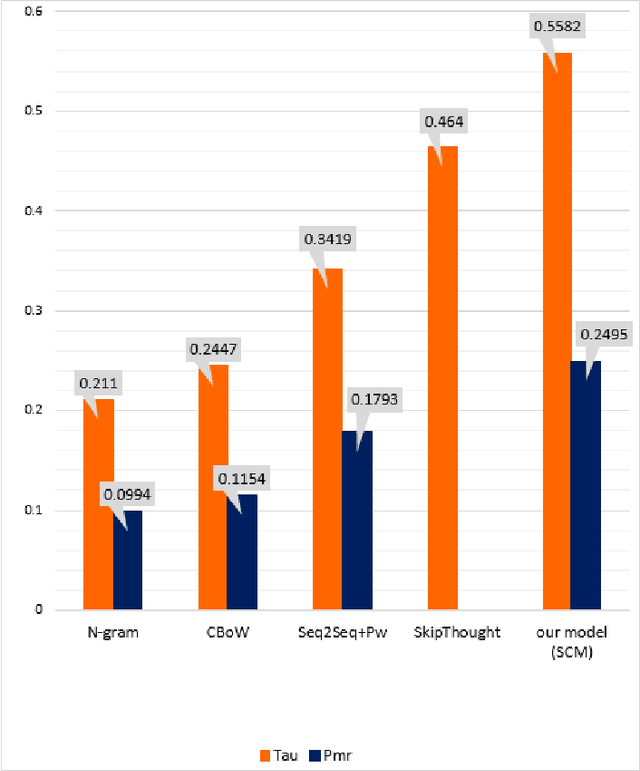

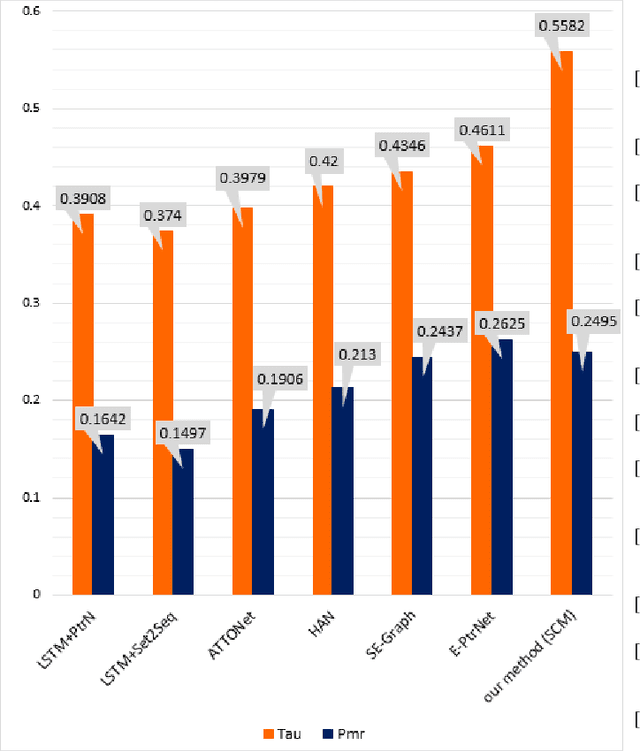

Abstract:Text coherence is a fundamental problem in natural language generation and understanding. Organizing sentences into an order that maximizes coherence is known as sentence ordering. This paper proposes a new approach based on graph neural networks to encode a set of sentences and learn orderings of short stories. We propose a new method for constructing sentence-entity graphs of short stories to create the edges between sentences, and we reduce noise in the graph by replacing pronouns with their referring entities. We improve the sentence ordering by introducing an aggregation method based on majority voting of state-of-the-art methods and our proposed one. Our approach employs a BERT-based model to learn semantic representations of the sentences. The results demonstrate that the proposed method significantly outperforms existing baselines on a corpus of short stories, achieving new state-of-the-art performance in terms of the Perfect Match Ratio (PMR) and Kendall's Tau (Tau) metrics. More precisely, our method increases PMR and Tau by more than 5% and 4.3%, respectively. These outcomes highlight the benefit of forming the edges between sentences based on their cosine similarity. We also observe that replacing pronouns with their referring entities effectively encodes sentences in sentence-entity graphs.
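
For readers unfamiliar with the two metrics named in this abstract, here is a small sketch using the definitions commonly applied in sentence-ordering work: Kendall's Tau as one minus twice the fraction of inverted sentence pairs, and PMR as the share of stories whose predicted order matches the gold order exactly. The abstract does not spell out these formulas, so treat them as an assumption; the function names and toy orders are hypothetical.

```python
from itertools import combinations


def kendall_tau(predicted, gold):
    """1 - 2 * (inverted pairs) / (total pairs); 1.0 is perfect, -1.0 is reversed."""
    position = {sentence: i for i, sentence in enumerate(predicted)}
    n = len(gold)
    total_pairs = n * (n - 1) // 2
    if total_pairs == 0:
        return 1.0
    # A pair is inverted when the prediction swaps its gold relative order.
    inversions = sum(
        1 for a, b in combinations(gold, 2) if position[a] > position[b]
    )
    return 1 - 2 * inversions / total_pairs


def perfect_match_ratio(predictions, golds):
    """Fraction of stories whose predicted order equals the gold order exactly."""
    return sum(p == g for p, g in zip(predictions, golds)) / len(golds)


gold_order = [0, 1, 2, 3]
tau_one_swap = kendall_tau([1, 0, 2, 3], gold_order)   # one inverted pair of six
tau_reversed = kendall_tau([3, 2, 1, 0], gold_order)   # every pair inverted
pmr = perfect_match_ratio([[0, 1, 2], [1, 0, 2]], [[0, 1, 2], [0, 1, 2]])
print(tau_one_swap, tau_reversed, pmr)
```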

A New Sentence Ordering Method Using BERT Pretrained Model

Aug 26, 2021

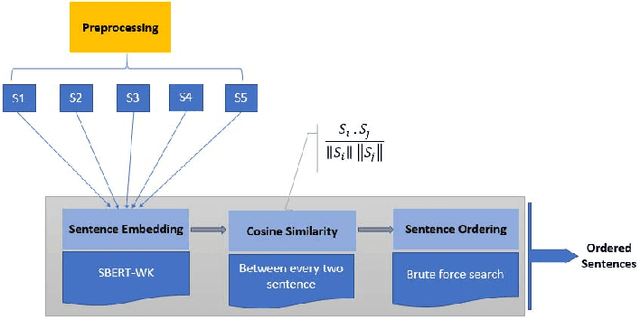

Abstract:Building systems capable of natural language understanding (NLU) has been one of the oldest areas of AI. An essential component of NLU is detecting the logical succession of events contained in a text. The task of sentence ordering has been proposed to learn the succession of events, with applications in AI tasks. Previous work employing statistical methods performs poorly, while neural network-based approaches require large corpora for model learning. In this paper, we propose a method for sentence ordering that does not need a training phase and, consequently, does not need a large corpus for learning. To this end, we generate sentence embeddings using the BERT pre-trained model and measure sentence similarity using the cosine similarity score. We suggest this score as an indicator of the level of coherence between sequential events. We finally sort the sentences through brute-force search to maximize the overall similarity of the sequenced sentences. Our proposed method outperformed other baselines on ROCStories, a corpus of 5-sentence human-made stories. The method is notably more efficient than neural network-based methods when no large corpus is available. Among its other advantages are its interpretability and the fact that it requires no linguistic knowledge.
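
The brute-force search described in this abstract can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors' code: toy 2-D vectors stand in for BERT sentence embeddings, the objective is taken to be the sum of cosine similarities between adjacent sentences, and the function names are hypothetical. Because cosine similarity is symmetric, this simplified objective cannot tell an order from its reverse; how the paper resolves that is not stated in the abstract.

```python
import math
from itertools import permutations


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def best_order(embeddings):
    """Try every permutation and keep the one with the highest adjacent similarity."""
    n = len(embeddings)
    # Precompute pairwise similarities once instead of inside the search loop.
    sim = [[cosine(embeddings[i], embeddings[j]) for j in range(n)] for i in range(n)]

    def score(order):
        return sum(sim[a][b] for a, b in zip(order, order[1:]))

    return max(permutations(range(n)), key=score)


def unit(angle_degrees):
    """Toy embedding: a unit vector at the given angle."""
    radians = math.radians(angle_degrees)
    return [math.cos(radians), math.sin(radians)]


# Four shuffled "sentences" whose embeddings drift steadily in one direction.
shuffled = [unit(40), unit(0), unit(60), unit(20)]
order = best_order(shuffled)
print(order)
```

The search visits n! permutations, which is practical for the 5-sentence stories mentioned above (120 candidates) but grows quickly for longer texts.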

NLP-IIS@UT at SemEval-2021 Task 4: Machine Reading Comprehension using the Long Document Transformer

May 08, 2021

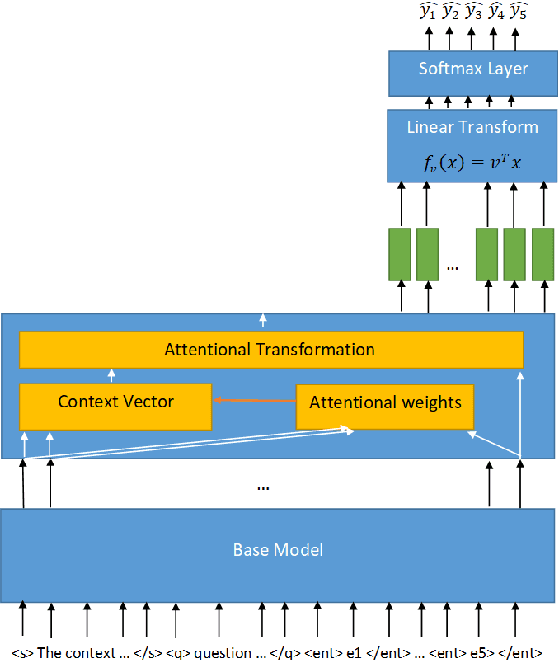

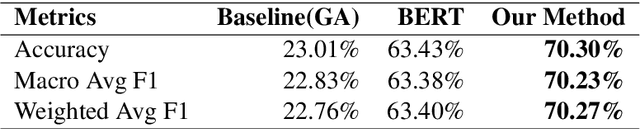

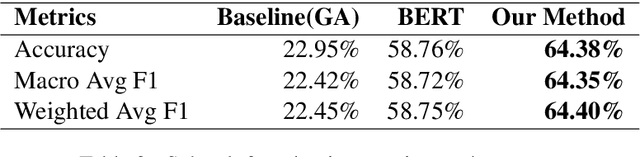

Abstract:This paper presents a technical report of our submission to Task 4 of SemEval-2021, titled Reading Comprehension of Abstract Meaning. In this task, the goal is to predict the correct answer to a question given a context. Contexts are usually very lengthy and require a large receptive field from the model. Thus, common contextualized language models like BERT lose fine-grained representation and performance due to their limited input token capacity. To tackle this problem, we used the Longformer model to better process the sequences. Furthermore, we utilized the method proposed in the Longformer benchmark on the Wikihop dataset, which improved the accuracy on our task data from 23.01% and 22.95% achieved by the baselines for subtasks 1 and 2, respectively, to 70.30% and 64.38%.

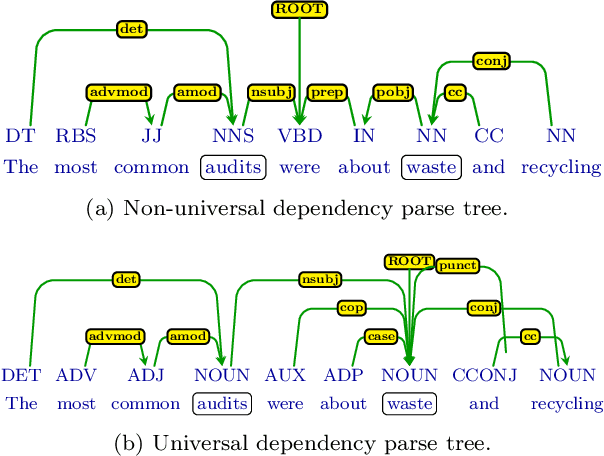

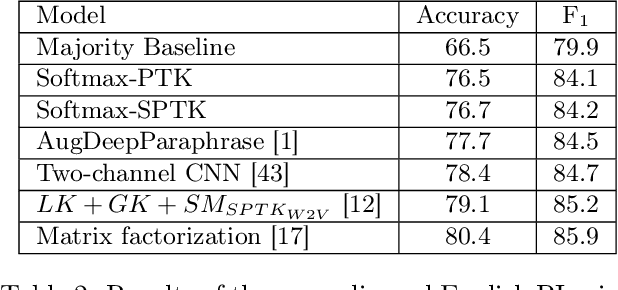

Cross-Lingual Adaptation Using Universal Dependencies

Mar 28, 2020

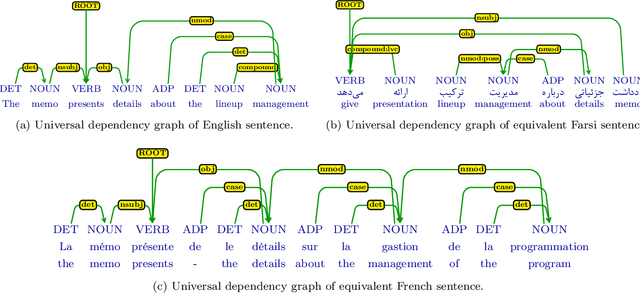

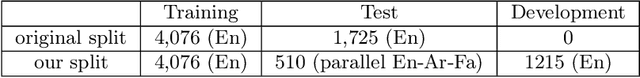

Abstract:We describe a cross-lingual adaptation method based on syntactic parse trees obtained from the Universal Dependencies (UD), which are consistent across languages, to develop classifiers in low-resource languages. The idea of UD parsing is to capture similarities as well as idiosyncrasies among typologically different languages. In this paper, we show that models trained using UD parse trees for complex NLP tasks can characterize very different languages. We study two tasks, paraphrase identification and semantic relation extraction, as case studies. Based on UD parse trees, we develop several models using tree kernels and show that these models, trained on the English dataset, can correctly classify data of other languages, e.g., French, Farsi, and Arabic. The proposed approach opens up avenues for exploiting UD parsing in solving similar cross-lingual tasks, which is very useful for languages for which no labeled data is available.
