A New Sentence Ordering Method Using BERT Pretrained Model

Aug 26, 2021

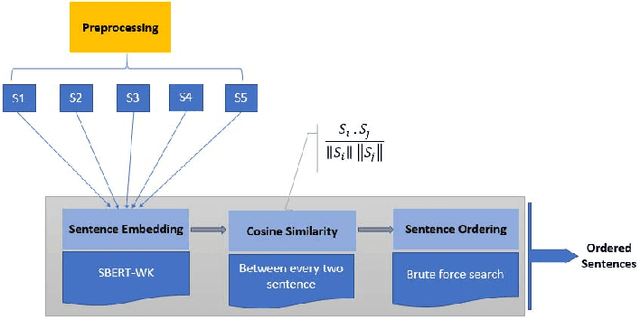

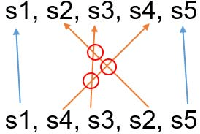

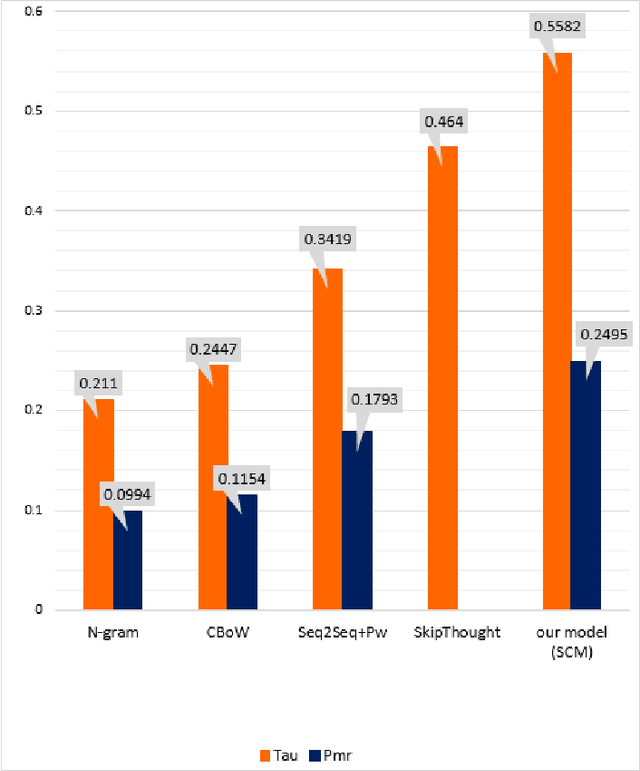

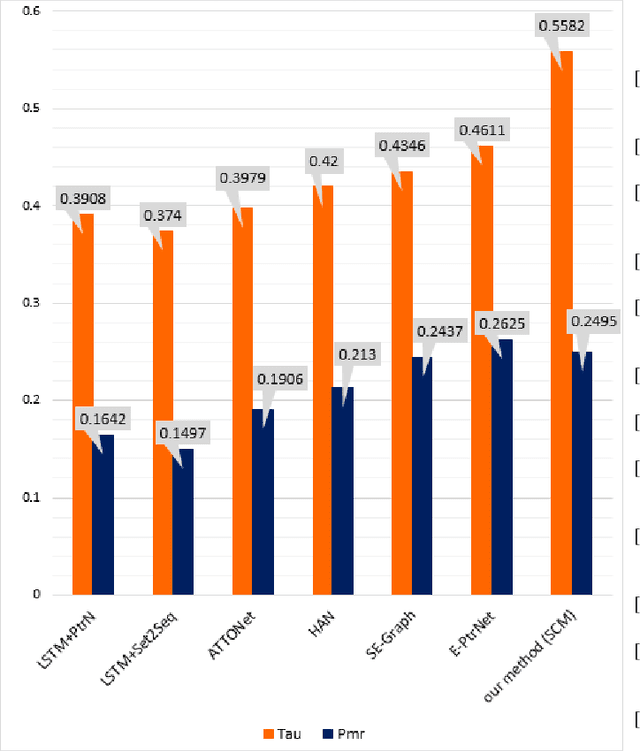

Building systems capable of natural language understanding (NLU) has been one of the oldest goals of AI. An essential component of NLU is detecting the logical succession of events contained in a text. The sentence ordering task was proposed to learn this succession of events, with applications across AI tasks. Previous works based on statistical methods perform poorly, while neural network-based approaches depend heavily on large corpora for model learning. In this paper, we propose a sentence ordering method that needs no training phase and, consequently, no large corpus for learning. To this end, we generate sentence embeddings using the pre-trained BERT model and measure sentence similarity using the cosine similarity score. We suggest this score as an indicator of the level of coherence between sequential events. Finally, we sort the sentences through brute-force search to maximize the overall similarity of the sequenced sentences. Our proposed method outperformed other baselines on ROCStories, a corpus of 5-sentence human-made stories. The method is notably more efficient than neural network-based methods when no large corpus is available. Its other advantages include interpretability and independence from linguistic knowledge.
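The search step described in the abstract can be illustrated with a minimal sketch. It assumes the sentence embeddings have already been computed (for example with a pre-trained BERT model, which is not shown here) and that the "overall similarity" of an ordering is the sum of cosine similarities between adjacent sentences. That scoring choice and the names `cosine` and `best_order` are assumptions made for illustration, not details confirmed by the paper.

```python
from itertools import permutations
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def best_order(embeddings):
    """Return the index permutation with the highest adjacent-similarity score.

    Assumption: an ordering is scored by summing the cosine similarity of
    each pair of neighbouring sentences. The search is brute force over all
    n! permutations, which is only practical for short texts such as
    5-sentence stories (120 candidates).
    """
    n = len(embeddings)
    # Precompute the pairwise similarity matrix once.
    sim = [[cosine(embeddings[i], embeddings[j]) for j in range(n)]
           for i in range(n)]

    def score(order):
        return sum(sim[a][b] for a, b in zip(order, order[1:]))

    return max(permutations(range(n)), key=score)


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real sentence embeddings.
    toy_embeddings = [[0.5, 0.9], [1.0, 0.0], [0.0, 1.0], [0.9, 0.5]]
    print(best_order(toy_embeddings))
```

Because cosine similarity is symmetric, this score cannot distinguish an ordering from its exact reverse, so a real system would need an additional signal to pick the direction. How the paper resolves that is not stated in the abstract.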
