Helin Gong

DINO-Detect: A Simple yet Effective Framework for Blur-Robust AI-Generated Image Detection

Nov 18, 2025Abstract:With growing concerns over image authenticity and digital safety, the field of AI-generated image (AIGI) detection has progressed rapidly. Yet, most AIGI detectors still struggle under real-world degradations, particularly motion blur, which frequently occurs in handheld photography, fast motion, and compressed video. Such blur distorts fine textures and suppresses high-frequency artifacts, causing severe performance drops in real-world settings. We address this limitation with a blur-robust AIGI detection framework based on teacher-student knowledge distillation. A high-capacity teacher (DINOv3), trained on clean (i.e., sharp) images, provides stable and semantically rich representations that serve as a reference for learning. By freezing the teacher to maintain its generalization ability, we distill its feature and logit responses from sharp images to a student trained on blurred counterparts, enabling the student to produce consistent representations under motion degradation. Extensive experiments benchmarks show that our method achieves state-of-the-art performance under both motion-blurred and clean conditions, demonstrating improved generalization and real-world applicability. Source codes will be released at: https://github.com/JiaLiangShen/Dino-Detect-for-blur-robust-AIGC-Detection.

Artificial Intelligence in Reactor Physics: Current Status and Future Prospects

Mar 04, 2025Abstract:Reactor physics is the study of neutron properties, focusing on using models to examine the interactions between neutrons and materials in nuclear reactors. Artificial intelligence (AI) has made significant contributions to reactor physics, e.g., in operational simulations, safety design, real-time monitoring, core management and maintenance. This paper presents a comprehensive review of AI approaches in reactor physics, especially considering the category of Machine Learning (ML), with the aim of describing the application scenarios, frontier topics, unsolved challenges and future research directions. From equation solving and state parameter prediction to nuclear industry applications, this paper provides a step-by-step overview of ML methods applied to steady-state, transient and combustion problems. Most literature works achieve industry-demanded models by enhancing the efficiency of deterministic methods or correcting uncertainty methods, which leads to successful applications. However, research on ML methods in reactor physics is somewhat fragmented, and the ability to generalize models needs to be strengthened. Progress is still possible, especially in addressing theoretical challenges and enhancing industrial applications such as building surrogate models and digital twins.

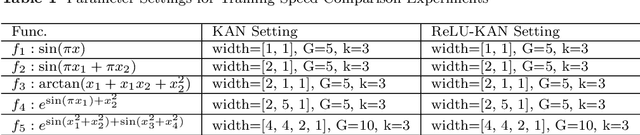

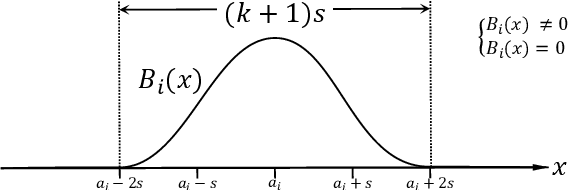

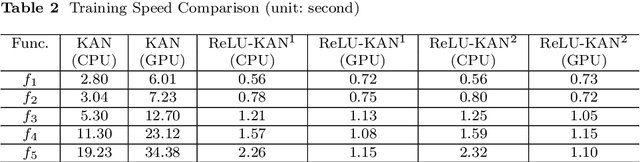

ReLU-KAN: New Kolmogorov-Arnold Networks that Only Need Matrix Addition, Dot Multiplication, and ReLU

Jun 04, 2024

Abstract:Limited by the complexity of basis function (B-spline) calculations, Kolmogorov-Arnold Networks (KAN) suffer from restricted parallel computing capability on GPUs. This paper proposes a novel ReLU-KAN implementation that inherits the core idea of KAN. By adopting ReLU (Rectified Linear Unit) and point-wise multiplication, we simplify the design of KAN's basis function and optimize the computation process for efficient CUDA computing. The proposed ReLU-KAN architecture can be readily implemented on existing deep learning frameworks (e.g., PyTorch) for both inference and training. Experimental results demonstrate that ReLU-KAN achieves a 20x speedup compared to traditional KAN with 4-layer networks. Furthermore, ReLU-KAN exhibits a more stable training process with superior fitting ability while preserving the "catastrophic forgetting avoidance" property of KAN. You can get the code in https://github.com/quiqi/relu_kan

On the uncertainty analysis of the data-enabled physics-informed neural network for solving neutron diffusion eigenvalue problem

Mar 17, 2023Abstract:In practical engineering experiments, the data obtained through detectors are inevitably noisy. For the already proposed data-enabled physics-informed neural network (DEPINN) \citep{DEPINN}, we investigate the performance of DEPINN in calculating the neutron diffusion eigenvalue problem from several perspectives when the prior data contain different scales of noise. Further, in order to reduce the effect of noise and improve the utilization of the noisy prior data, we propose innovative interval loss functions and give some rigorous mathematical proofs. The robustness of DEPINN is examined on two typical benchmark problems through a large number of numerical results, and the effectiveness of the proposed interval loss function is demonstrated by comparison. This paper confirms the feasibility of the improved DEPINN for practical engineering applications in nuclear reactor physics.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge