Hayden Gunraj

Cancer-Net PCa-Gen: Synthesis of Realistic Prostate Diffusion Weighted Imaging Data via Anatomic-Conditional Controlled Latent Diffusion

Nov 30, 2023

Abstract: In Canada, prostate cancer is the most common form of cancer in men and accounted for 20% of new cancer cases for this demographic in 2022. Due to recent successes in leveraging machine learning for clinical decision support, there has been significant interest in the development of deep neural networks for prostate cancer diagnosis, prognosis, and treatment planning using diffusion weighted imaging (DWI) data. A major challenge hindering widespread adoption in clinical use is poor generalization of such networks due to scarcity of large-scale, diverse, balanced prostate imaging datasets for training such networks. In this study, we explore the efficacy of latent diffusion for generating realistic prostate DWI data through the introduction of an anatomic-conditional controlled latent diffusion strategy. To the best of the authors' knowledge, this is the first study to leverage conditioning for synthesis of prostate cancer imaging. Experimental results show that the proposed strategy, which we call Cancer-Net PCa-Gen, enhances synthesis of diverse prostate images through controllable tumour locations and better anatomical and textural fidelity. These crucial features make it well-suited for augmenting real patient data, enabling neural networks to be trained on a more diverse and comprehensive data distribution. The Cancer-Net PCa-Gen framework and sample images have been made publicly available at https://www.kaggle.com/datasets/deetsadi/cancer-net-pca-gen-dataset as a part of a global open-source initiative dedicated to accelerating advancement in machine learning to aid clinicians in the fight against cancer.

COVIDx CXR-4: An Expanded Multi-Institutional Open-Source Benchmark Dataset for Chest X-ray Image-Based Computer-Aided COVID-19 Diagnostics

Nov 29, 2023

Abstract: The global ramifications of the COVID-19 pandemic remain significant, exerting persistent pressure on nations even three years after its initial outbreak. Deep learning models have shown promise in improving COVID-19 diagnostics but require diverse and larger-scale datasets to improve performance. In this paper, we introduce COVIDx CXR-4, an expanded multi-institutional open-source benchmark dataset for chest X-ray image-based computer-aided COVID-19 diagnostics. COVIDx CXR-4 expands significantly on the previous COVIDx CXR-3 dataset by increasing the total patient cohort size by greater than 2.66 times, resulting in 84,818 images from 45,342 patients across multiple institutions. We provide extensive analysis on the diversity of the patient demographic, imaging metadata, and disease distributions to highlight potential dataset biases. To the best of the authors' knowledge, COVIDx CXR-4 is the largest and most diverse open-source COVID-19 CXR dataset and is made publicly available as part of an open initiative to advance research to aid clinicians against the COVID-19 disease.

Cancer-Net PCa-Data: An Open-Source Benchmark Dataset for Prostate Cancer Clinical Decision Support using Synthetic Correlated Diffusion Imaging Data

Nov 20, 2023

Abstract: The recent introduction of synthetic correlated diffusion (CDI$^s$) imaging has demonstrated significant potential in the realm of clinical decision support for prostate cancer (PCa). CDI$^s$ is a new form of magnetic resonance imaging (MRI) designed to capture tissue characteristics through the joint correlation of diffusion signal attenuation across different Brownian motion sensitivities. Despite the performance improvement, CDI$^s$ data for PCa have not previously been made publicly available. In our commitment to advance research efforts for PCa, we introduce Cancer-Net PCa-Data, an open-source benchmark dataset of volumetric CDI$^s$ imaging data of PCa patients. Cancer-Net PCa-Data consists of CDI$^s$ volumetric images from a cohort of 200 patient cases, along with full annotations (gland masks, tumor masks, and PCa diagnosis for each tumor). We also analyze the demographic and label region diversity of Cancer-Net PCa-Data for potential biases. Cancer-Net PCa-Data is the first-ever public dataset of CDI$^s$ imaging data for PCa, and is a part of the global open-source initiative dedicated to advancement in machine learning and imaging research to aid clinicians in the global fight against cancer.

Explaining Explainability: Towards Deeper Actionable Insights into Deep Learning through Second-order Explainability

Jun 14, 2023

Abstract: Explainability plays a crucial role in providing a more comprehensive understanding of deep learning models' behaviour. This allows for thorough validation of the model's performance, ensuring that its decisions are based on relevant visual indicators and not biased toward irrelevant patterns existing in training data. However, existing methods provide only instance-level explainability, which requires manual analysis of each sample. Such manual review is time-consuming and prone to human biases. To address this issue, the concept of second-order explainable AI (SOXAI) was recently proposed to extend explainable AI (XAI) from the instance level to the dataset level. SOXAI automates the analysis of the connections between quantitative explanations and dataset biases by identifying prevalent concepts. In this work, we explore the use of this higher-level interpretation of a deep neural network's behaviour to allow us to "explain the explainability" for actionable insights. Specifically, we demonstrate for the first time, via example classification and segmentation cases, that eliminating irrelevant concepts from the training set based on actionable insights from SOXAI can enhance a model's performance.

Cancer-Net BCa-S: Breast Cancer Grade Prediction using Volumetric Deep Radiomic Features from Synthetic Correlated Diffusion Imaging

Apr 12, 2023

Abstract: The prevalence of breast cancer continues to grow, affecting about 300,000 females in the United States in 2023. However, there are different levels of severity of breast cancer requiring different treatment strategies, and hence, grading breast cancer has become a vital component of breast cancer diagnosis and treatment planning. Specifically, the gold-standard Scarff-Bloom-Richardson (SBR) grade has been shown to consistently indicate a patient's response to chemotherapy. Unfortunately, the current method to determine the SBR grade requires removal of some cancer cells from the patient, which can lead to stress and discomfort along with costly expenses. In this paper, we study the efficacy of deep learning for breast cancer grading based on synthetic correlated diffusion (CDI$^s$) imaging, a new magnetic resonance imaging (MRI) modality, and find that it achieves better performance on SBR grade prediction compared to those learnt using gold-standard imaging modalities. Hence, we introduce Cancer-Net BCa-S, a volumetric deep radiomics approach for predicting SBR grade based on volumetric CDI$^s$ data. Given the promising results, this proposed method to identify the severity of the cancer would allow for better treatment decisions without the need for a biopsy. Cancer-Net BCa-S has been made publicly available as part of a global open-source initiative for advancing machine learning for cancer care.

A Multi-Institutional Open-Source Benchmark Dataset for Breast Cancer Clinical Decision Support using Synthetic Correlated Diffusion Imaging Data

Apr 12, 2023

Abstract: Recently, a new form of magnetic resonance imaging (MRI) called synthetic correlated diffusion (CDI$^s$) imaging was introduced and showed considerable promise for clinical decision support for cancers such as prostate cancer when compared to current gold-standard MRI techniques. However, the efficacy of CDI$^s$ for other forms of cancer such as breast cancer has not been as well explored, nor have CDI$^s$ data been previously made publicly available. Motivated to advance efforts in the development of computer-aided clinical decision support for breast cancer using CDI$^s$, we introduce Cancer-Net BCa, a multi-institutional open-source benchmark dataset of volumetric CDI$^s$ imaging data of breast cancer patients. Cancer-Net BCa contains CDI$^s$ volumetric images from a pre-treatment cohort of 253 patients across ten institutions, along with detailed annotation metadata (the lesion type, genetic subtype, longest diameter on the MRI (MRLD), the Scarff-Bloom-Richardson (SBR) grade, and the post-treatment breast cancer pathologic complete response (pCR) to neoadjuvant chemotherapy). We further examine the demographic and tumour diversity of the Cancer-Net BCa dataset to gain deeper insights into potential biases. Cancer-Net BCa is publicly available as a part of a global open-source initiative dedicated to accelerating advancement in machine learning to aid clinicians in the fight against cancer.

SolderNet: Towards Trustworthy Visual Inspection of Solder Joints in Electronics Manufacturing Using Explainable Artificial Intelligence

Nov 18, 2022

Abstract: In electronics manufacturing, solder joint defects are a common problem affecting a variety of printed circuit board components. To identify and correct solder joint defects, the solder joints on a circuit board are typically inspected manually by trained human inspectors, which is a very time-consuming and error-prone process. To improve both inspection efficiency and accuracy, in this work we describe an explainable deep learning-based visual quality inspection system tailored for visual inspection of solder joints in electronics manufacturing environments. At the core of this system is an explainable solder joint defect identification system called SolderNet which we design and implement with trust and transparency in mind. While several challenges remain before the full system can be developed and deployed, this study presents important progress towards trustworthy visual inspection of solder joints in electronics manufacturing.

Cancer-Net BCa: Breast Cancer Pathologic Complete Response Prediction using Volumetric Deep Radiomic Features from Synthetic Correlated Diffusion Imaging

Nov 10, 2022

Abstract: Breast cancer is the second most common type of cancer in women in Canada and the United States, representing over 25% of all new female cancer cases. Neoadjuvant chemotherapy treatment has recently risen in usage as it may result in a patient having a pathologic complete response (pCR), and it can shrink inoperable breast cancer tumors prior to surgery so that the tumor becomes operable. However, it is difficult to predict a patient's pathologic response to neoadjuvant chemotherapy. In this paper, we investigate the efficacy of leveraging learnt volumetric deep features from a newly introduced magnetic resonance imaging (MRI) modality called synthetic correlated diffusion imaging (CDI$^s$) for the purpose of pCR prediction. More specifically, we leverage a volumetric convolutional neural network to learn volumetric deep radiomic features from a pre-treatment cohort and construct a predictor based on the learnt features using the post-treatment response. As the first study to explore the utility of CDI$^s$ from a deep learning perspective for clinical decision support, we evaluated the proposed approach using the ACRIN-6698 study against those learnt using gold-standard imaging modalities, and found that the proposed approach can provide enhanced pCR prediction performance and thus may be a useful tool to aid oncologists in improving treatment recommendations for patients. Subsequently, this approach to leverage volumetric deep radiomic features (which we name Cancer-Net BCa) can be further extended to other applications of CDI$^s$ in the cancer domain to further improve prediction performance.

MMRNet: Improving Reliability for Multimodal Computer Vision for Bin Picking via Multimodal Redundancy

Oct 19, 2022

Abstract: Recently, there has been tremendous interest in Industry 4.0 infrastructure to address labor shortages in global supply chains. Deploying artificial intelligence-enabled robotic bin picking systems in the real world has become particularly important for reducing labor demands and costs while increasing efficiency. To this end, artificial intelligence-enabled robotic bin picking systems may be used to automate bin picking, but may also cause expensive damage during an abnormal event such as a sensor failure. As such, reliability becomes a critical factor for translating artificial intelligence research to real-world applications and products. In this paper, we propose a reliable vision system with MultiModal Redundancy (MMRNet) for tackling object detection and segmentation for robotic bin picking using data from different modalities. This is the first system that introduces the concept of multimodal redundancy to combat sensor failure issues during deployment. In particular, we realize the multimodal redundancy framework with a gate fusion module and dynamic ensemble learning. Finally, we present a new label-free multimodal consistency (MC) score that utilizes the output from all modalities to measure the overall system output reliability and uncertainty. Through experiments, we demonstrate that in the event of a missing modality, our system provides much more reliable performance compared to baseline models. We also demonstrate that our MC score is a more powerful reliability indicator for outputs during inference time, where model-generated confidence scores are often over-confident.

COVIDx CXR-3: A Large-Scale, Open-Source Benchmark Dataset of Chest X-ray Images for Computer-Aided COVID-19 Diagnostics

Jun 08, 2022

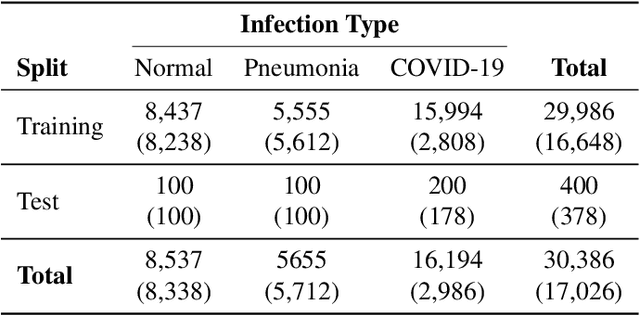

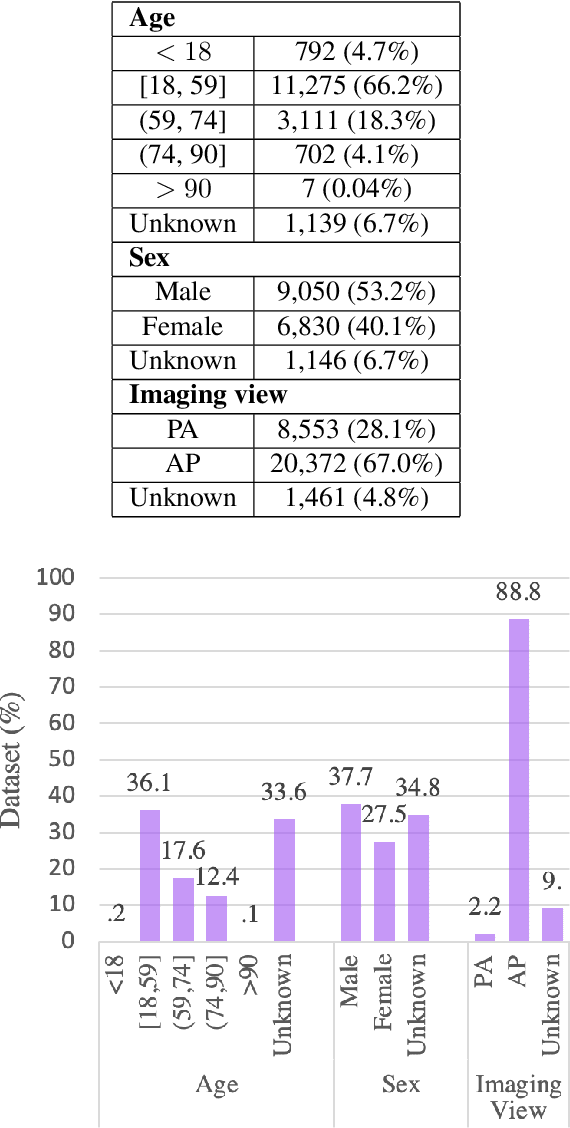

Abstract: More than two years after the beginning of the COVID-19 pandemic, the crisis continues to exert devastating pressure globally. The use of chest X-ray (CXR) imaging as a complementary screening strategy to RT-PCR testing is not only prevailing but has greatly increased due to its routine clinical use for respiratory complaints. Thus far, many visual perception models have been proposed for COVID-19 screening based on CXR imaging. Nevertheless, the accuracy and the generalization capacity of these models are very much dependent on the diversity and the size of the dataset they were trained on. Motivated by this, we introduce COVIDx CXR-3, a large-scale benchmark dataset of CXR images for supporting COVID-19 computer vision research. COVIDx CXR-3 is composed of 30,386 CXR images from a multinational cohort of 17,026 patients from at least 51 countries, making it, to the best of our knowledge, the most extensive, most diverse COVID-19 CXR dataset in open access form. Here, we provide comprehensive details on the various aspects of the proposed dataset including patient demographics, imaging views, and infection types. The hope is that COVIDx CXR-3 can assist scientists in advancing computer vision research against the COVID-19 pandemic.
