Haris Vikalo

Regularized Calibration with Successive Rounding for Post-Training Quantization

Feb 05, 2026

Abstract:Large language models (LLMs) deliver robust performance across diverse applications, yet their deployment often faces challenges due to the memory and latency costs of storing and accessing billions of parameters. Post-training quantization (PTQ) enables efficient inference by mapping pretrained weights to low-bit formats without retraining, but its effectiveness depends critically on both the quantization objective and the rounding procedure used to obtain low-bit weight representations. In this work, we show that interpolating between symmetric and asymmetric calibration acts as a form of regularization that preserves the standard quadratic structure used in PTQ while providing robustness to activation mismatch. Building on this perspective, we derive a simple successive rounding procedure that naturally incorporates asymmetric calibration, as well as a bounded-search extension that allows for an explicit trade-off between quantization quality and the compute cost. Experiments across multiple LLM families, quantization bit-widths, and benchmarks demonstrate that the proposed bounded search based on a regularized asymmetric calibration objective consistently improves perplexity and accuracy over PTQ baselines, while incurring only modest and controllable additional computational cost.
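For orientation, the simplest way to map weights to a low-bit format is round-to-nearest uniform quantization. The sketch below shows only that baseline, not the successive rounding or bounded-search procedure described in the abstract. The function name `quantize_rtn`, the symmetric per-tensor scale, and the round-half-up rule are assumptions made for illustration.

```python
import math


def quantize_rtn(weights, bits):
    """Round-to-nearest symmetric uniform quantization (illustrative baseline).

    Returns (codes, scale, dequantized), where codes are signed integers in
    [-qmax, qmax] and dequantized[i] = codes[i] * scale.
    Assumes bits >= 2 and a single scale shared by all weights.
    """
    qmax = 2 ** (bits - 1) - 1              # e.g. 7 for 4-bit signed codes
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0

    codes = []
    for w in weights:
        q = math.floor(w / scale + 0.5)     # round half up
        q = max(-qmax, min(qmax, q))        # clamp to the representable range
        codes.append(q)

    dequantized = [q * scale for q in codes]
    return codes, scale, dequantized


codes, scale, dequantized = quantize_rtn([1.0, -1.0, 0.0, 0.3], bits=4)
print(codes, scale, dequantized)
```

Each weight here is rounded independently of the others. Calibration-based PTQ methods instead choose the rounding to keep layer outputs close on calibration data, which is the setting the abstract addresses.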

Optimal Resource Allocation for ML Model Training and Deployment under Concept Drift

Dec 14, 2025

Abstract:We study how to allocate resources for training and deployment of machine learning (ML) models under concept drift and limited budgets. We consider a setting in which a model provider distributes trained models to multiple clients whose devices support local inference but lack the ability to retrain those models, placing the burden of performance maintenance on the provider. We introduce a model-agnostic framework that captures the interaction between resource allocation, concept drift dynamics, and deployment timing. We show that optimal training policies depend critically on the aging properties of concept durations. Under sudden concept changes, we derive optimal training policies subject to budget constraints when concept durations follow distributions with Decreasing Mean Residual Life (DMRL), and show that intuitive heuristics are provably suboptimal under Increasing Mean Residual Life (IMRL). We further study model deployment under communication constraints, prove that the associated optimization problem is quasi-convex under mild conditions, and propose a randomized scheduling strategy that achieves near-optimal client-side performance. These results offer theoretical and algorithmic foundations for cost-efficient ML model management under concept drift, with implications for continual learning, distributed inference, and adaptive ML systems.
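For readers unfamiliar with the aging classes named above, the standard reliability-theory definition of mean residual life is restated here. The symbols $T$ (a concept duration), $S$ (its survival function), and $m$ are notation chosen for this note, not taken from the paper.

$$
m(t) = \mathbb{E}\left[T - t \mid T > t\right] = \frac{1}{S(t)} \int_t^{\infty} S(u)\, du, \qquad S(t) = \Pr(T > t) > 0 .
$$

A distribution is DMRL if $m(t)$ is non-increasing in $t$ and IMRL if $m(t)$ is non-decreasing. The exponential distribution has constant $m(t)$ and is the boundary case that belongs to both classes.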

Robust Super-Capacity SRS Channel Inpainting via Diffusion Models

Oct 30, 2025

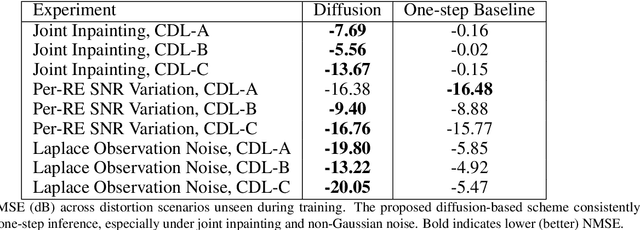

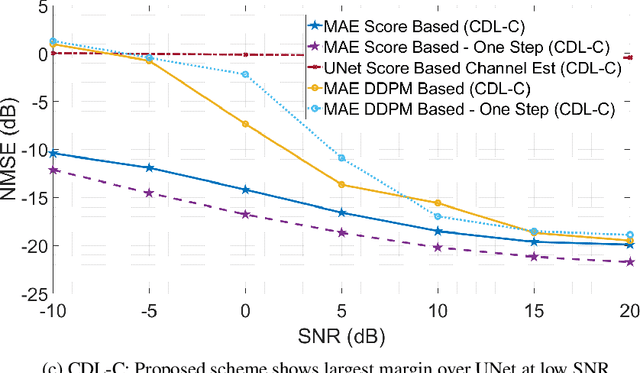

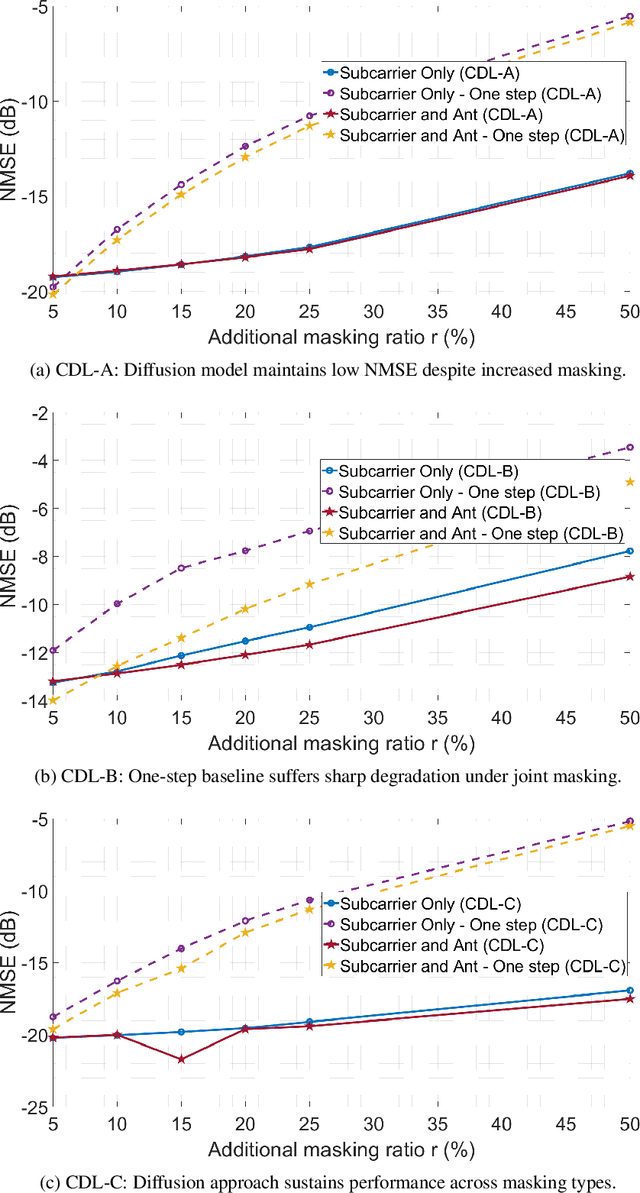

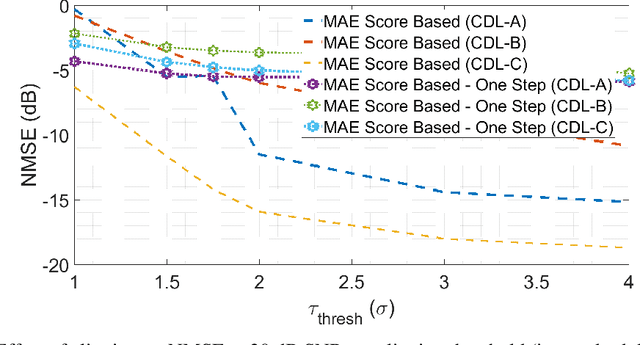

Abstract:Accurate channel state information (CSI) is essential for reliable multiuser MIMO operation. In 5G NR, reciprocity-based beamforming via uplink Sounding Reference Signals (SRS) faces resource and coverage constraints, motivating sparse non-uniform SRS allocation. Prior masked-autoencoder (MAE) approaches improve coverage but overfit to training masks and degrade under unseen distortions (e.g., additional masking, interference, clipping, non-Gaussian noise). We propose a diffusion-based channel inpainting framework that integrates system-model knowledge at inference via a likelihood-gradient term, enabling a single trained model to adapt across mismatched conditions. On standardized CDL channels, the score-based diffusion variant consistently outperforms a UNet score-model baseline and the one-step MAE under distribution shift, with improvements up to 14 dB NMSE in challenging settings (e.g., Laplace noise, user interference), while retaining competitive accuracy under matched conditions. These results demonstrate that diffusion-guided inpainting is a robust and generalizable approach for super-capacity SRS design in 5G NR systems.

Fed-REACT: Federated Representation Learning for Heterogeneous and Evolving Data

Sep 08, 2025

Abstract:Motivated by the high resource costs and privacy concerns associated with centralized machine learning, federated learning (FL) has emerged as an efficient alternative that enables clients to collaboratively train a global model while keeping their data local. However, in real-world deployments, client data distributions often evolve over time and differ significantly across clients, introducing heterogeneity that degrades the performance of standard FL algorithms. In this work, we introduce Fed-REACT, a federated learning framework designed for heterogeneous and evolving client data. Fed-REACT combines representation learning with evolutionary clustering in a two-stage process: (1) in the first stage, each client learns a local model to extract feature representations from its data; (2) in the second stage, the server dynamically groups clients into clusters based on these representations and coordinates cluster-wise training of task-specific models for downstream objectives such as classification or regression. We provide a theoretical analysis of the representation learning stage, and empirically demonstrate that Fed-REACT achieves superior accuracy and robustness on real-world datasets.

Boundary Attention Constrained Zero-Shot Layout-To-Image Generation

Nov 15, 2024

Abstract:Recent text-to-image diffusion models excel at generating high-resolution images from text but struggle with precise control over spatial composition and object counting. To address these challenges, several studies developed layout-to-image (L2I) approaches that incorporate layout instructions into text-to-image models. However, existing L2I methods typically require either fine-tuning pretrained parameters or training additional control modules for the diffusion models. In this work, we propose a novel zero-shot L2I approach, BACON (Boundary Attention Constrained generation), which eliminates the need for additional modules or fine-tuning. Specifically, we use text-visual cross-attention feature maps to quantify inconsistencies between the layout of the generated images and the provided instructions, and then compute loss functions to optimize latent features during the diffusion reverse process. To enhance spatial controllability and mitigate semantic failures in complex layout instructions, we leverage pixel-to-pixel correlations in the self-attention feature maps to align cross-attention maps and combine three loss functions constrained by boundary attention to update latent features. Comprehensive experimental results on both L2I and non-L2I pretrained diffusion models demonstrate that our method outperforms existing zero-shot L2I techniques both quantitatively and qualitatively in terms of image composition on the DrawBench and HRS benchmarks.

Can Transformers In-Context Learn Behavior of a Linear Dynamical System?

Oct 21, 2024

Abstract:We investigate whether transformers can learn to track a random process when given observations of a related process and parameters of the dynamical system that relates them as context. More specifically, we consider a finite-dimensional state-space model described by the state transition matrix $F$, measurement matrices $h_1, \dots, h_N$, and the process and measurement noise covariance matrices $Q$ and $R$, respectively; these parameters, randomly sampled, are provided to the transformer along with the observations $y_1,\dots,y_N$ generated by the corresponding linear dynamical system. We argue that in such settings transformers learn to approximate the celebrated Kalman filter, and empirically verify this both for the task of estimating hidden states $\hat{x}_{N|1,2,3,...,N}$ as well as for one-step prediction of the $(N+1)^{st}$ observation, $\hat{y}_{N+1|1,2,3,...,N}$. A further study of the transformer's robustness reveals that its performance is retained even if the model's parameters are partially withheld. In particular, we demonstrate that the transformer remains accurate at the considered task even in the absence of state transition and noise covariance matrices, effectively emulating operations of the Dual-Kalman filter.
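As a reference point for the estimator the transformer is said to approximate, here is a minimal Kalman filter sketch for the state-space model in the abstract, simplified to a scalar state so that $F$, $h_t$, $Q$, and $R$ are plain numbers. The function name, the scalar simplification, and the prior parameters `x0` and `P0` are assumptions made for illustration. The paper's setting is finite-dimensional and matrix-valued.

```python
def kalman_filter_scalar(ys, hs, F, Q, R, x0=0.0, P0=1.0):
    """Scalar Kalman filter for
        x_t = F * x_{t-1} + w_t,   w_t ~ N(0, Q)
        y_t = h_t * x_t + v_t,     v_t ~ N(0, R)
    Returns the filtered state estimates and the final error variance.
    """
    x, P = x0, P0
    estimates = []
    for y, h in zip(ys, hs):
        # Predict: propagate the previous estimate through the dynamics.
        x_pred = F * x
        P_pred = F * P * F + Q
        # Update: correct the prediction using the new observation.
        S = h * P_pred * h + R           # innovation variance
        K = P_pred * h / S               # Kalman gain
        x = x_pred + K * (y - h * x_pred)
        P = (1.0 - K * h) * P_pred
        estimates.append(x)
    return estimates, P


def predict_next_observation(x_filtered, F, h_next):
    """One-step prediction of the next observation from the last filtered state."""
    return h_next * F * x_filtered


estimates, P = kalman_filter_scalar(
    ys=[2.0, 4.0], hs=[1.0, 1.0], F=1.0, Q=0.0, R=1.0, x0=0.0, P0=1e9
)
print(estimates, P)
```

With a nearly flat prior, a static state ($F = 1$, $Q = 0$), and unit measurements, the filtered estimate reduces to the running mean of the observations, which is a convenient sanity check.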

Recovering Labels from Local Updates in Federated Learning

May 02, 2024

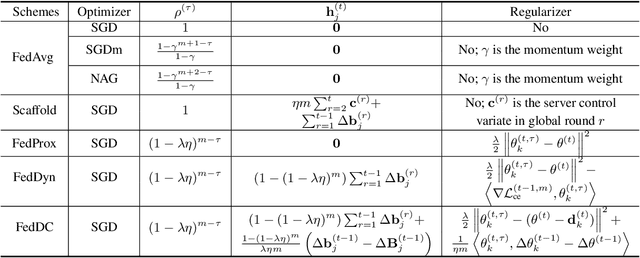

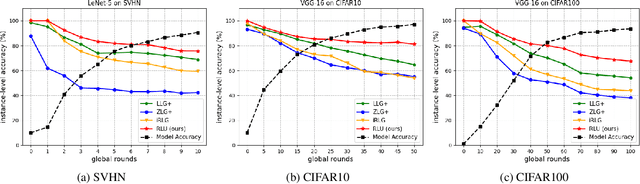

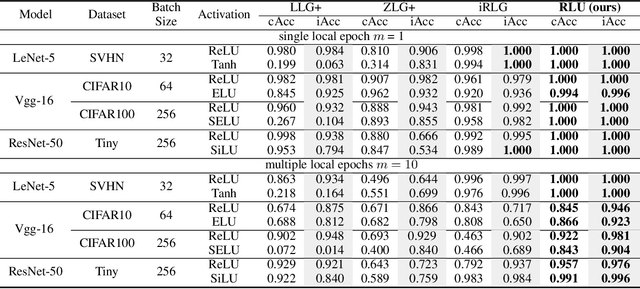

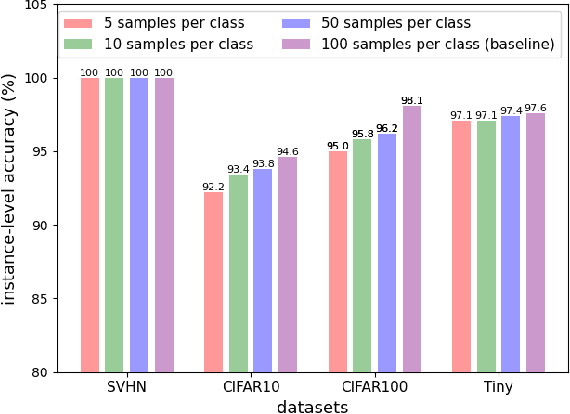

Abstract:Gradient inversion (GI) attacks present a threat to the privacy of clients in federated learning (FL) by aiming to enable reconstruction of the clients' data from communicated model updates. A number of such techniques attempt to accelerate data recovery by first reconstructing labels of the samples used in local training. However, existing label extraction methods make strong assumptions that typically do not hold in realistic FL settings. In this paper we present a novel label recovery scheme, Recovering Labels from Local Updates (RLU), which provides near-perfect accuracy when attacking untrained (most vulnerable) models. More significantly, RLU achieves high performance even in realistic settings where the clients in an FL system run multiple local epochs, train on heterogeneous data, and deploy various optimizers to minimize different objective functions. Specifically, RLU estimates labels by solving a least-squares problem that emerges from the analysis of the correlation between labels of the data points used in a training round and the resulting update of the output layer. The experimental results on several datasets, architectures, and data heterogeneity scenarios demonstrate that the proposed method consistently outperforms existing baselines, and helps improve the quality of the reconstructed images in GI attacks in terms of both PSNR and LPIPS.
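To make the link between labels and the output-layer update concrete, the sketch below works through an idealized special case. It is not RLU itself, which solves a least-squares problem and handles multiple local epochs. It assumes a single gradient step with softmax cross-entropy and an untrained model whose predictions are uniform over the classes. Under those assumptions the mean gradient with respect to the output bias for class $c$ is $1/C - n_c/B$, so the per-class label counts $n_c$ follow in closed form. Both function names are hypothetical.

```python
def bias_gradient_uniform(labels, num_classes):
    """Mean cross-entropy gradient w.r.t. the output-layer bias, assuming the
    softmax output is uniform (1 / num_classes) for every sample.

    Per sample the gradient is (p - onehot(y)); averaging over the batch
    gives 1/C - n_c/B for class c.
    """
    batch_size = len(labels)
    counts = [0] * num_classes
    for y in labels:
        counts[y] += 1
    p = 1.0 / num_classes
    return [p - counts[c] / batch_size for c in range(num_classes)]


def recover_label_counts(bias_grad, batch_size):
    """Invert g_c = 1/C - n_c/B to get the per-class counts n_c."""
    num_classes = len(bias_grad)
    return [round(batch_size * (1.0 / num_classes - g)) for g in bias_grad]


labels = [0, 0, 2, 1, 0, 2]
grad = bias_gradient_uniform(labels, num_classes=4)
print(recover_label_counts(grad, batch_size=len(labels)))
```

Once the model has been trained for a while, or the client runs several local epochs, the predictions are no longer uniform and this closed form breaks down. The abstract identifies that regime as the one where existing label extraction methods rely on assumptions that do not hold.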

Fed-QSSL: A Framework for Personalized Federated Learning under Bitwidth and Data Heterogeneity

Dec 20, 2023

Abstract:Motivated by the high resource costs of centralized machine learning schemes as well as data privacy concerns, federated learning (FL) emerged as an efficient alternative that relies on aggregating locally trained models rather than collecting clients' potentially private data. In practice, available resources and data distributions vary from one client to another, creating an inherent system heterogeneity that degrades the performance of conventional FL algorithms. In this work, we present a federated quantization-based self-supervised learning scheme (Fed-QSSL) designed to address heterogeneity in FL systems. On the client side, to tackle data heterogeneity we leverage distributed self-supervised learning while utilizing low-bit quantization to satisfy constraints imposed by local infrastructure and limited communication resources. On the server side, Fed-QSSL deploys de-quantization, weighted aggregation and re-quantization, ultimately creating models personalized to both the data distribution and the specific infrastructure of each client's device. We validated the proposed algorithm on real-world datasets, demonstrating its efficacy, and theoretically analyzed the impact of low-bit training on the convergence and robustness of the learned models.

Mixed-Precision Quantization for Federated Learning on Resource-Constrained Heterogeneous Devices

Nov 29, 2023

Abstract:While federated learning (FL) systems often utilize quantization to battle communication and computational bottlenecks, they have heretofore been limited to deploying fixed-precision quantization schemes. Meanwhile, the concept of mixed-precision quantization (MPQ), where different layers of a deep learning model are assigned varying bit-widths, remains unexplored in FL settings. We present a novel FL algorithm, FedMPQ, which introduces mixed-precision quantization to resource-heterogeneous FL systems. Specifically, local models, quantized so as to satisfy a bit-width constraint, are trained by optimizing an objective function that includes a regularization term which promotes reduction of precision in some of the layers without significant performance degradation. The server collects local model updates, de-quantizes them into full-precision models, and then aggregates them into a global model. To initialize the next round of local training, the server relies on the information learned in the previous training round to customize bit-width assignments of the models delivered to different clients. In extensive benchmarking experiments on several model architectures and different datasets in both iid and non-iid settings, FedMPQ outperformed the baseline FL schemes that utilize fixed-precision quantization while incurring only a minor computational overhead on the participating devices.

Accelerating Non-IID Federated Learning via Heterogeneity-Guided Client Sampling

Sep 30, 2023

Abstract:Statistical heterogeneity of data present at client devices in a federated learning (FL) system renders the training of a global model in such systems difficult. Particularly challenging are the settings where, due to resource constraints, only a small fraction of clients can participate in any given round of FL. Recent approaches to training a global model in FL systems with non-IID data have focused on developing client selection methods that aim to sample clients with more informative updates of the model. However, existing client selection techniques either introduce significant computation overhead or perform well only in scenarios where clients have data with similar heterogeneity profiles. In this paper, we propose HiCS-FL (Federated Learning via Hierarchical Clustered Sampling), a novel client selection method in which the server estimates statistical heterogeneity of a client's data using the client's update of the network's output layer and relies on this information to cluster and sample the clients. We analyze the ability of the proposed techniques to compare heterogeneity of different datasets, and characterize convergence of the training process that deploys the introduced client selection method. Extensive experimental results demonstrate that in non-IID settings HiCS-FL achieves faster convergence and lower training variance than state-of-the-art FL client selection schemes. Notably, HiCS-FL drastically reduces computation cost compared to existing selection schemes and is adaptable to different heterogeneity scenarios.
